This repo is a companion to the paper

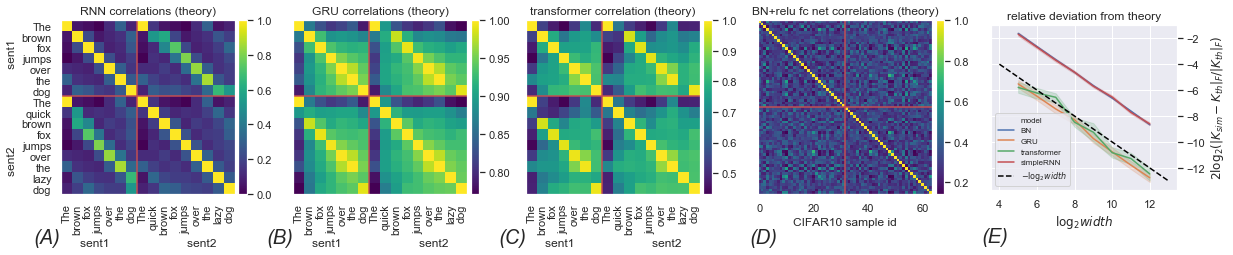

which shows that Gaussian process behavior arises in wide, randomly initialized neural networks regardless of architecture.

Despite what the title suggests, this repo does not implement the infinite-width GP kernel for every architecture, but rather demonstrates the derivation and implementation for a few select architectures.

| Architecture | Notebook | Colab |
|---|---|---|
| Simple RNN | Notebook | |
| GRU | Notebook | |
| Transformer | Notebook | |
| Batchnorm+ReLU MLP | Notebook | |

`Plots.ipynb` also reproduces Figure 3 of the paper.

We have included the GloVe embeddings `ExampleGloVeVecs.npy` of the example sentences we feed into the networks, as well as their normalized Gram matrix `ExampleGloVeCov.npy`.

`GloVe.ipynb` recreates both files; to try the kernels on custom sentences, modify `GloVe.ipynb` as appropriate.
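As a rough illustration of how the two files relate, here is a minimal sketch of computing a normalized Gram matrix from a stack of embedding vectors. The helper name `normalized_gram`, the array shapes, and the choice of normalizing by the embedding dimension are all assumptions made for this example; `GloVe.ipynb` is the authoritative source for the convention actually used.

```python
import numpy as np

def normalized_gram(vecs):
    """Gram matrix of the rows of `vecs`, divided by the embedding dimension.

    Dividing by the dimension is an assumed normalization, not necessarily
    the one used in GloVe.ipynb.
    """
    vecs = np.asarray(vecs, dtype=np.float64)
    return vecs @ vecs.T / vecs.shape[1]

# Stand-in data: 7 tokens with 100-dimensional embeddings (hypothetical shapes).
rng = np.random.default_rng(0)
example_vecs = rng.standard_normal((7, 100))
example_cov = normalized_gram(example_vecs)

# With the files from this repo, one might instead load the arrays directly
# (assuming one embedding per row):
# example_vecs = np.load("ExampleGloVeVecs.npy")
# example_cov = np.load("ExampleGloVeCov.npy")
```

The result is a symmetric, positive semidefinite matrix with one row and one column per token, which is the kind of input covariance the kernel computations in the notebooks start from.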