In this work we develop an approach that uses CityEngine to rapidly and cheaply generate large and diverse synthetic overhead imagery for training segmentation CNNs. Using this approach, we generate and release a collection of synthetic overhead imagery, termed Synthinel-1, with full pixel-wise building labels. We use several benchmark datasets to demonstrate that Synthinel-1 is consistently beneficial when used to augment real-world training imagery, especially when CNNs are tested on novel geographic locations or conditions.

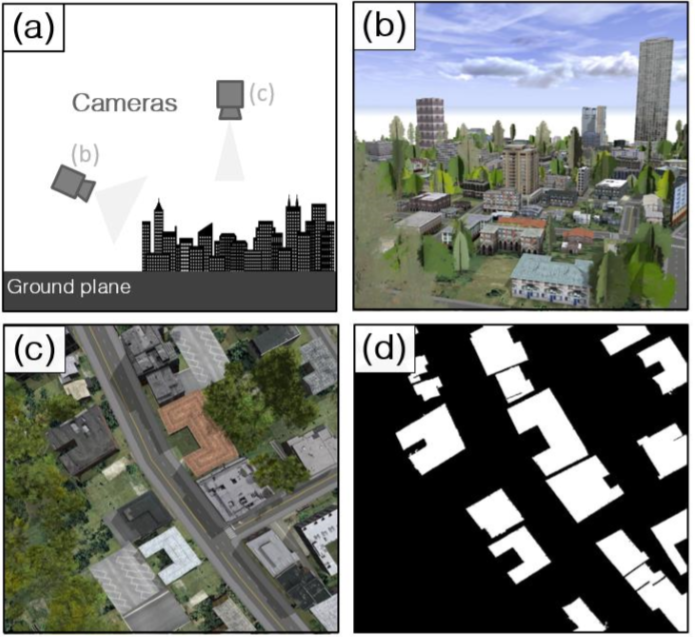

Fig 1. (a) Illustration of a virtual city and two perspectives of a virtual camera, set by the designer. The corresponding images for each camera are shown in panels (b) and (c). The camera settings in (c) are used to generate the dataset in this work. In (d) we show the corresponding ground truth labels extracted for the image in (c).

We summarize our work in the paper "The Synthinel-1 dataset: a collection of high resolution synthetic overhead imagery for building segmentation".

Synthinel-1 is now publicly released. Please download the dataset here.

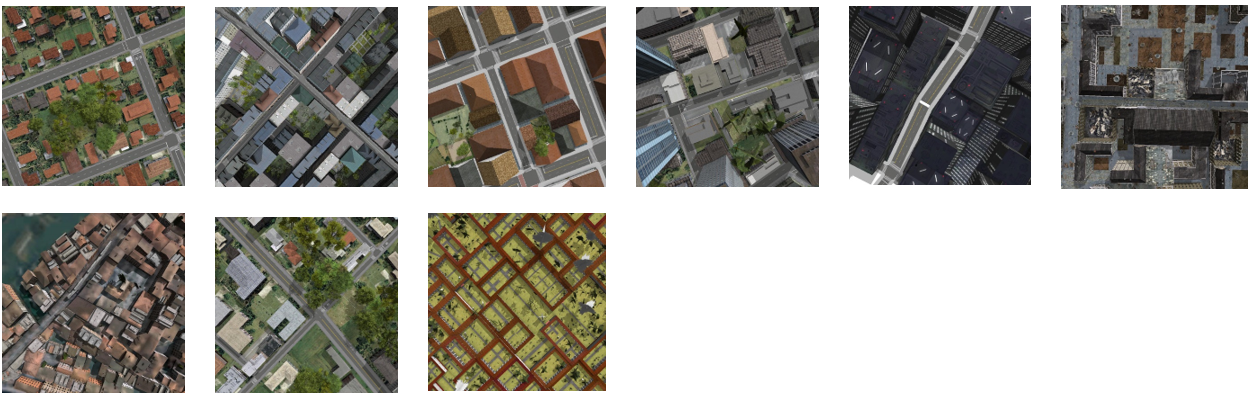

Fig 2. The automatic virtual city scene generation process.

Fig 3. Examples of captured synthetic images in Synthinel.

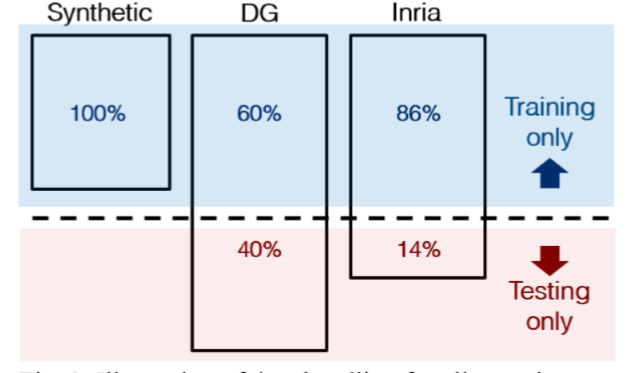

In our experiments, we used two available real-world datasets, Inria and DeepGlobe, to demonstrate the effectiveness of our synthetic dataset for the pixel-wise building extraction task. We split each of the Inria and DeepGlobe datasets into two disjoint subsets, one for training and one for testing, as illustrated in Fig 4.

Fig 4. Illustration of data handling for all experiments.

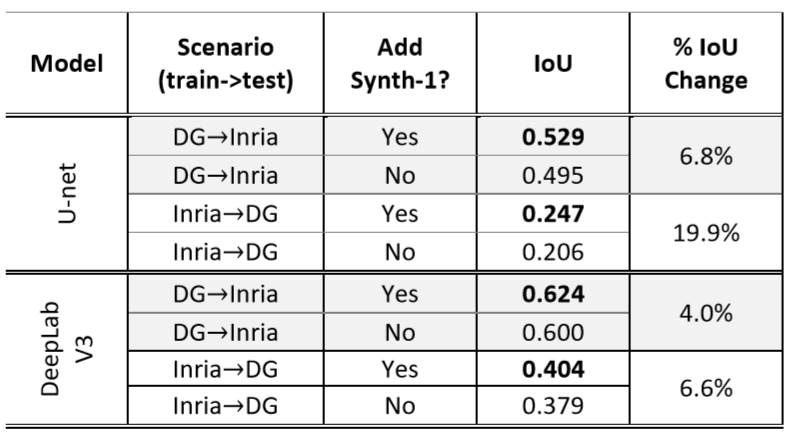
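As a rough illustration of the disjoint train/test split described above, the sketch below partitions a list of tile identifiers so that no tile appears in both subsets. The tile names, the `split_tiles` helper, and the 20% test fraction are hypothetical and chosen only for illustration; the split actually used in the experiments is the one shown in Fig 4 and described in the paper.

```python
import random


def split_tiles(tile_ids, test_fraction=0.2, seed=0):
    """Split tile IDs into disjoint training and testing lists.

    test_fraction and seed are illustrative assumptions, not the
    values used in the paper.
    """
    ids = sorted(tile_ids)              # fixed starting order for reproducibility
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_test = max(1, int(round(len(ids) * test_fraction)))
    test = sorted(ids[:n_test])
    train = sorted(ids[n_test:])
    return train, test


# Hypothetical tile names standing in for the tiles of one real-world dataset.
tiles = [f"tile_{i:02d}" for i in range(10)]
train_ids, test_ids = split_tiles(tiles)
print("train:", train_ids)
print("test:", test_ids)
```

Applying the same procedure separately to each real-world dataset would keep their training and testing subsets disjoint, which is what the cross-domain experiments rely on.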

We then used two popular CNN models for semantic image segmentation, U-net and DeepLabV3. U-net was proposed in the paper "U-Net: Convolutional Networks for Biomedical Image Segmentation" and DeepLabV3 in "Rethinking Atrous Convolution for Semantic Image Segmentation." We used two training schemes. In the first, we trained the networks only on a real-world dataset. In the second, we trained the networks jointly on a real-world dataset and the synthetic dataset. The joint training method is described in our paper.

For testing, we evaluated the models only on the real-world datasets, Inria and DeepGlobe. Specifically, we used cross-domain testing to show the benefits of Synthinel: a model trained on Inria was tested on DeepGlobe, and a model trained on DeepGlobe was tested on Inria. The experiments described above are summarized in Table 1.

Table 1: Training and testing on different geographic locations. Results (intersection-over-union) of segmentation on popular building segmentation benchmark datasets.
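For readers unfamiliar with the metric, intersection-over-union (IoU) for binary building masks can be computed as in the minimal sketch below. The `building_iou` function and the tiny example masks are illustrative only and are not taken from the released code; returning 1.0 when both masks are empty is an assumed convention.

```python
def building_iou(pred, truth):
    """Intersection-over-union between two binary masks.

    pred and truth are equally sized 2D lists of 0/1 values,
    where 1 marks a building pixel.
    """
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1   # pixel labeled building in both masks
            if p or t:
                union += 1          # pixel labeled building in at least one mask
    # Assumed convention: two empty masks agree perfectly.
    return intersection / union if union else 1.0


# Toy 2x3 masks: 2 pixels overlap, 4 pixels are building in either mask.
pred = [[1, 1, 0],
        [0, 1, 0]]
truth = [[1, 0, 0],
         [0, 1, 1]]
print(building_iou(pred, truth))  # 0.5
```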

Running the code requires:

- CityEngine 2019.0

CityEngine, which is used to build Synthinel, is a tool for rapidly generating large-scale virtual urban scenes.

If you find our work helpful for your research, we would greatly appreciate it if you cite our paper.

@inproceedings{kong2020synthinel,

title={The Synthinel-1 dataset: a collection of high resolution synthetic overhead imagery for building segmentation},

author={Kong, Fanjie and Huang, Bohao and Bradbury, Kyle and Malof, Jordan},

booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},

pages={1814--1823},

year={2020}

}

- Some ground truth labels are not well aligned with the objects. This is caused by lighting variations in the images used to extract the ground truth. It can be fixed by setting the light angle to 90 degrees and the light intensity to 1 when capturing the images used for ground truth extraction.