This repository contains a PyTorch reimplementation of the feature regularization technique from the paper

Implicit Under-Parameterization Inhibits Data-Efficient Deep Reinforcement Learning.

The authors observe that performance plateaus and declines in value-based deep RL algorithms are linked to a collapse in effective feature rank (defined below). This collapse is in turn induced by an explosion of the largest singular values of the feature matrix $\Phi$.

The paper defines the effective feature rank as the smallest number of singular values whose cumulative sum, normalized by the sum of all singular values, reaches the threshold $1 - \delta$:

$$\text{srank}_\delta (\Phi) = \min \bigg\{ k: \frac{\sum_{i=1}^k \sigma_i (\Phi)}{\sum_{i=1}^d \sigma_i (\Phi)} \geq 1 - \delta \bigg\}~,$$

where:

- $\sigma_i (\Phi)$ represents the $i$-th largest singular value of the feature matrix $\Phi$.
- $\delta$ is a threshold parameter set to $0.01$ in the paper.
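The definition above can be sketched in a few lines of PyTorch. This is a minimal illustration, not necessarily how this repository computes the metric: the function name `effective_rank` is made up for this example, and the feature matrix is assumed to be a 2-D tensor of shape `(batch_size, feature_dim)`.

```python
import torch


def effective_rank(features: torch.Tensor, delta: float = 0.01) -> int:
    """Smallest k whose top-k singular values hold a (1 - delta) share of the total.

    `effective_rank` is a hypothetical helper name used only in this sketch.
    """
    # torch.linalg.svdvals returns the singular values in descending order.
    singular_values = torch.linalg.svdvals(features.detach().double())
    total = singular_values.sum()
    if total == 0:
        return 0  # all-zero feature matrix: no direction carries any mass

    # Share of the total mass covered by the k largest singular values, for every k.
    cumulative_share = torch.cumsum(singular_values, dim=0) / total

    # Count the prefixes that fall short of the threshold, then add one.
    k = int((cumulative_share < 1.0 - delta).sum().item()) + 1
    # Guard against floating-point round-off in the last cumulative share.
    return min(k, singular_values.numel())
```

With the default $\delta = 0.01$, a $4 \times 4$ identity matrix has effective rank 4, while a diagonal matrix with entries `100, 0.001, 0.001, 0.001` has effective rank 1: a single exploding singular value is enough to collapse the metric.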

- Install the conda environment.
- Start a single run with `python feature_regularized_dqn.py` or a batch of runs with `feature_regularized_dqn.sh`.

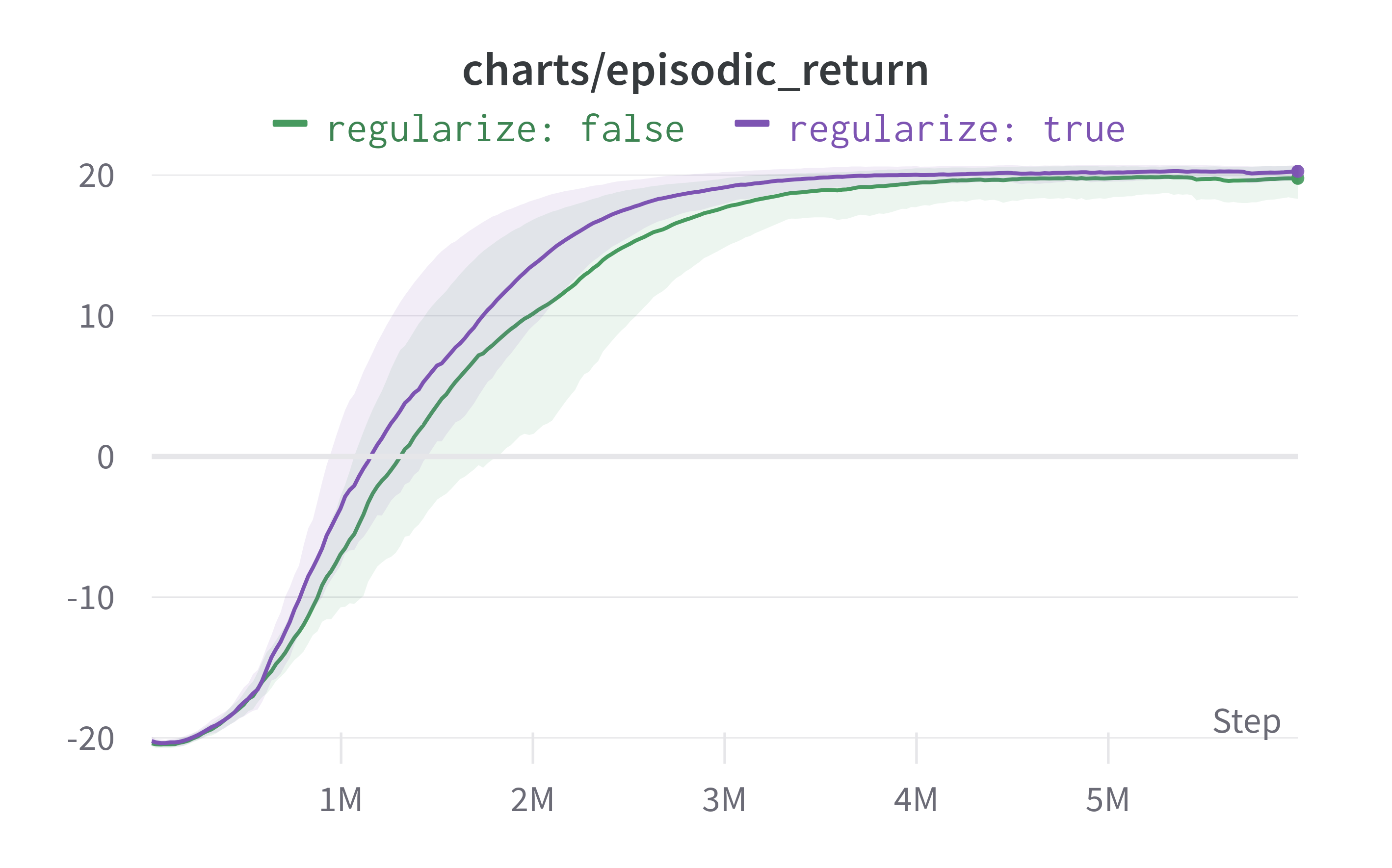

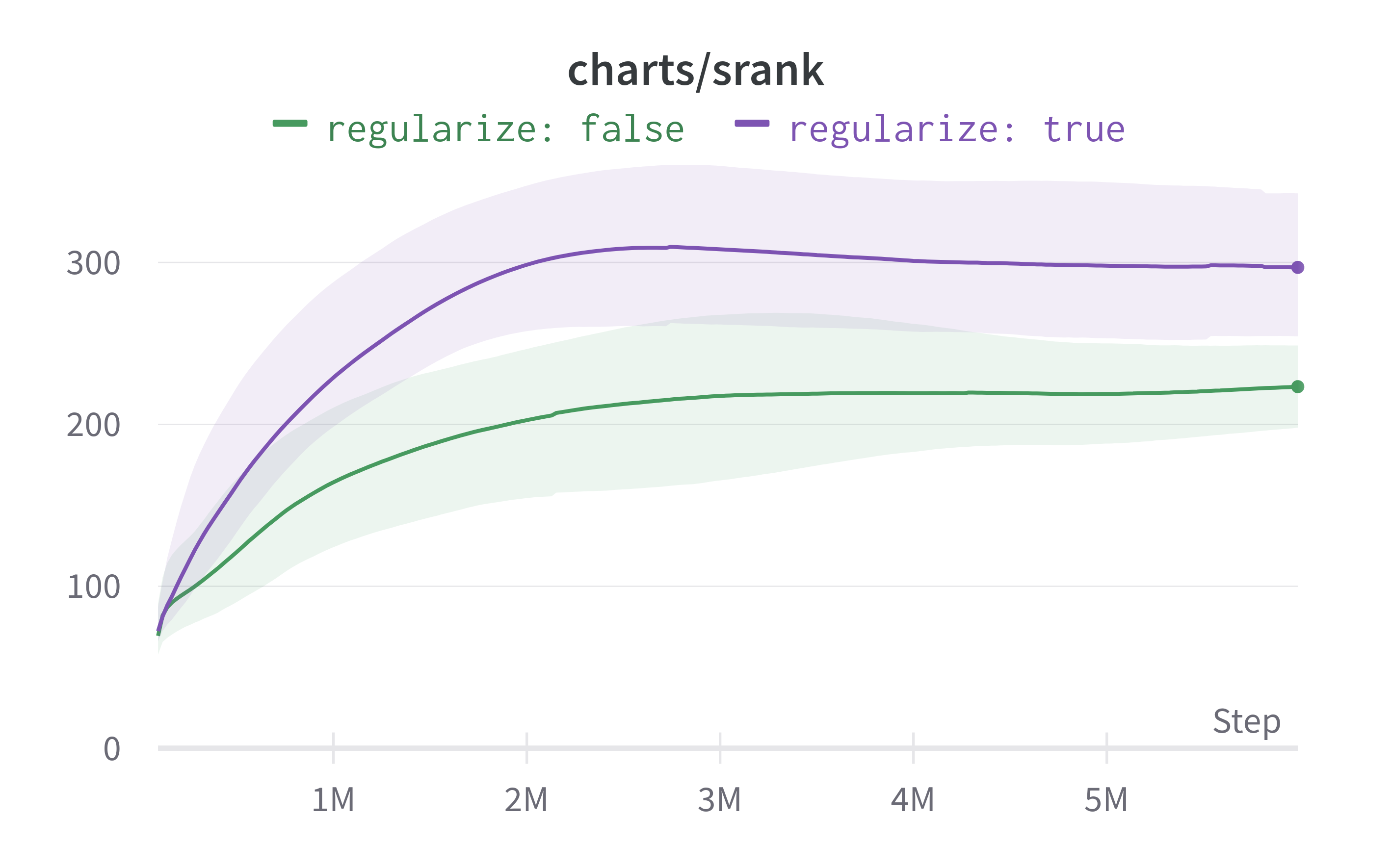

Below are the results from evaluation runs on Pong. Using the proposed regularizer leads to faster learning and a higher effective feature rank.

| Episodic Return | Effective Rank |

|---|---|

|

|

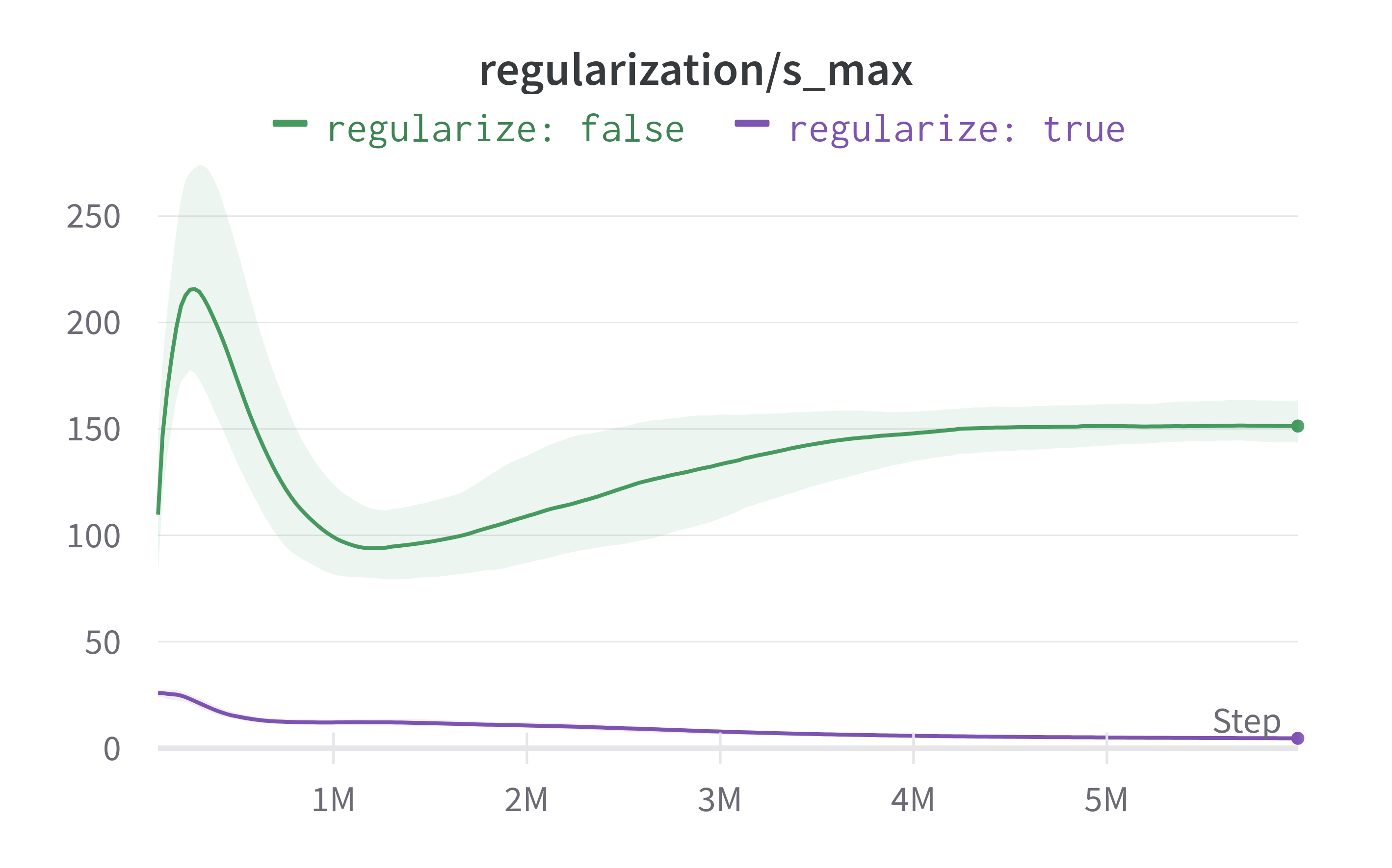

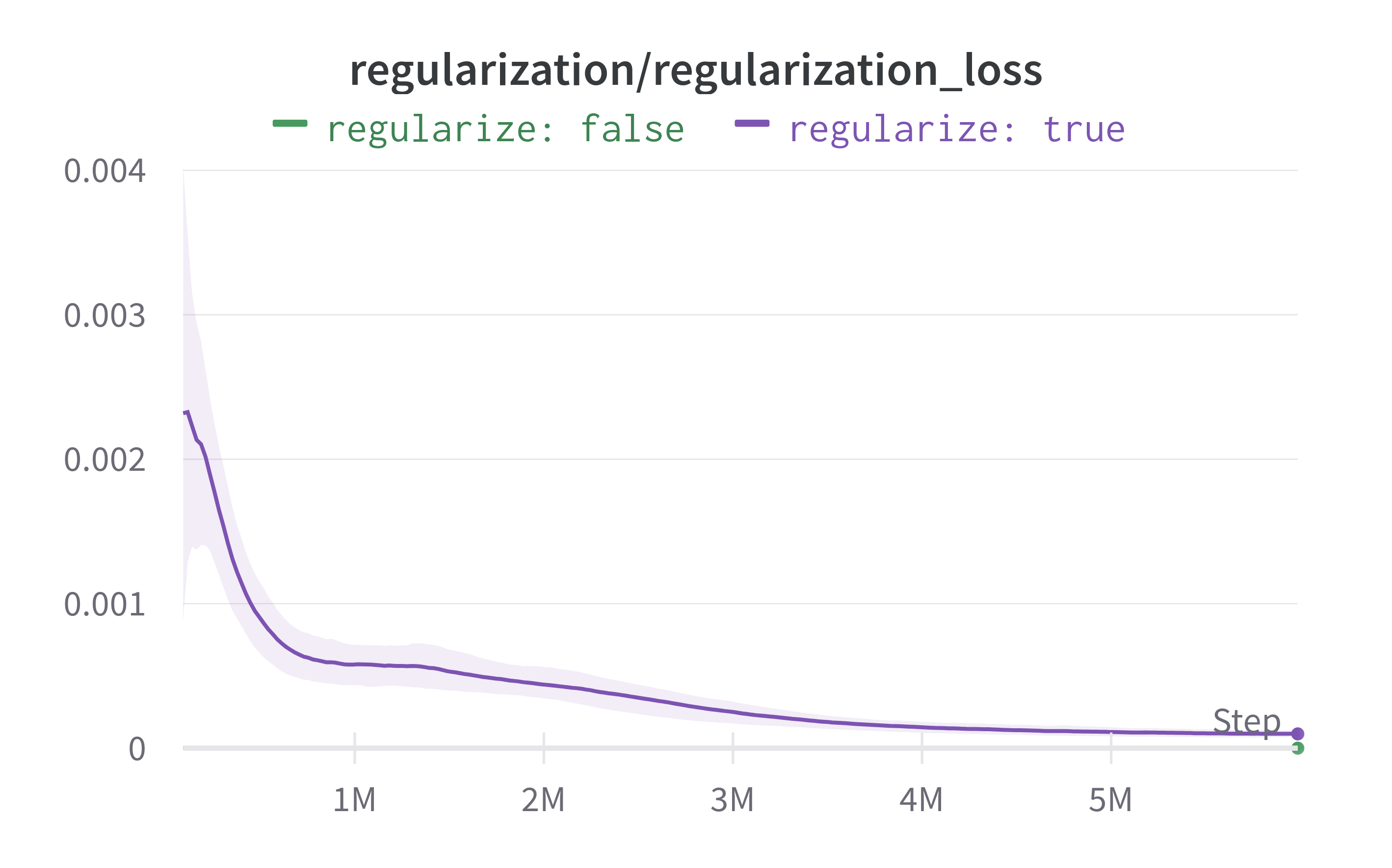

The authors claim that their method prevents exploding singular values of the feature matrix

| Evolution of largest singular value | Evolution of smallest singular value |

|---|---|

|

|
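The largest and smallest singular values tracked above are also the quantities a singular-value penalty would act on. The sketch below assumes the penalty takes the form $\sigma_{\max}^2(\Phi) - \sigma_{\min}^2(\Phi)$, which is my reading of the paper and is not spelled out in this README. The names `singular_value_penalty`, `td_loss`, and `reg_coefficient` are placeholders, and the coefficient value is purely illustrative; the code in this repository may differ.

```python
import torch


def singular_value_penalty(features: torch.Tensor) -> torch.Tensor:
    """sigma_max^2 - sigma_min^2 of a (batch_size, feature_dim) matrix.

    The form of the penalty is an assumption made for this sketch.
    """
    singular_values = torch.linalg.svdvals(features)  # descending order
    return singular_values[0] ** 2 - singular_values[-1] ** 2


# Hypothetical usage inside a DQN update step. All names and values below are
# stand-ins for illustration and are not taken from this repository.
torch.manual_seed(0)
features = torch.randn(32, 8, requires_grad=True)  # stand-in for penultimate-layer outputs
td_loss = torch.tensor(0.0)                        # stand-in for the usual TD loss
reg_coefficient = 1e-3                             # illustrative value only

loss = td_loss + reg_coefficient * singular_value_penalty(features)
loss.backward()  # gradients flow through the singular values into the features
```

Because the penalty is added to the loss with a scalar weight, the balance between the TD objective and the regularizer is controlled entirely by that coefficient.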

DQN is very sensitive to the regularization coefficient. The value of [...] I therefore used [...]

Paper:

@article{kumar2020implicit,

title={Implicit under-parameterization inhibits data-efficient deep reinforcement learning},

author={Kumar, Aviral and Agarwal, Rishabh and Ghosh, Dibya and Levine, Sergey},

journal={arXiv preprint arXiv:2010.14498},

year={2020}

}

CleanRL, on which the training and agent code is based:

@article{huang2022cleanrl,

author = {Shengyi Huang and Rousslan Fernand Julien Dossa and Chang Ye and Jeff Braga and Dipam Chakraborty and Kinal Mehta and João G.M. Araújo},

title = {CleanRL: High-quality Single-file Implementations of Deep Reinforcement Learning Algorithms},

journal = {Journal of Machine Learning Research},

year = {2022},

volume = {23},

number = {274},

pages = {1--18},

url = {http://jmlr.org/papers/v23/21-1342.html}

}

Stable Baselines 3, on which the replay buffer and wrapper code is based:

@misc{raffin2019stable,

title={Stable baselines3},

author={Raffin, Antonin and Hill, Ashley and Ernestus, Maximilian and Gleave, Adam and Kanervisto, Anssi and Dormann, Noah},

year={2019}

}