This repo is no longer maintained!

DICTOL - A Discriminative dictionary Learning Toolbox for Classification (MATLAB version).

This toolbox is part of our LRSDL project.

Related publications:

-

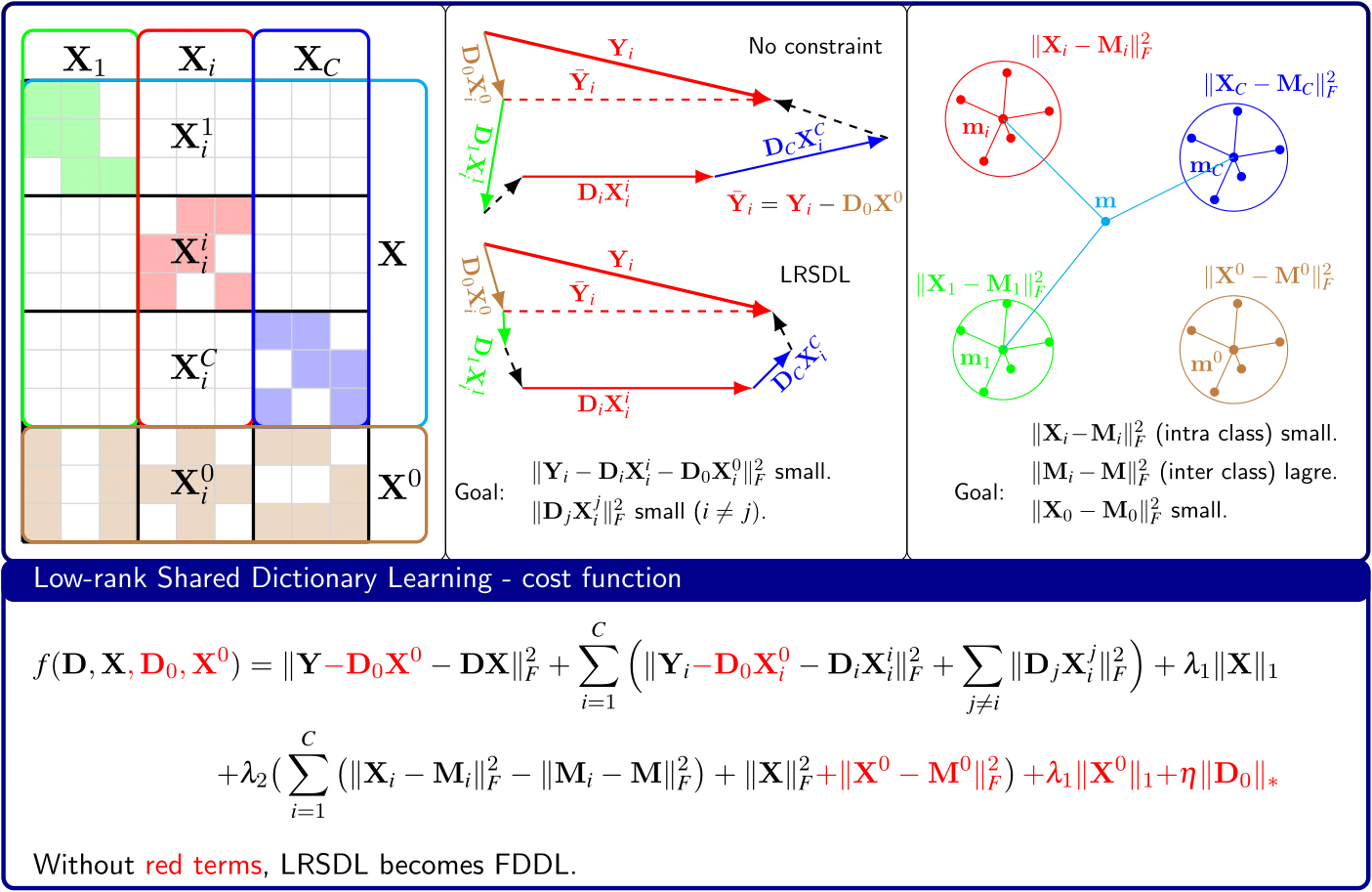

Tiep H. Vu, Vishal Monga. "Fast Low-rank Shared Dictionary Learning for Image Classification." to appear in IEEE Transactions on Image Processing. [paper].

-

Tiep H. Vu, Vishal Monga. "Learning a low-rank shared dictionary for object classification." International Conference on Image Processing (ICIP) 2016. [paper].

Author: Tiep Vu

Run `DICTOL_demo.m` to see an example.

If you experience any bugs, please let me know via the Issues tab. I'd really appreciate it and will fix the error ASAP. Thank you.

On this page:

- Notation

- Sparse Representation-based classification (SRC)

- Online Dictionary Learning (ODL)

- LCKSVD

- Dictionary learning with structured incoherence and shared features (DLSI)

- Dictionary learning for separating the particularity and the commonality (COPAR)

- LRSDL

- Fisher discrimination dictionary learning (FDDL)

- Discriminative Feature-Oriented dictionary learning (DFDL)

- D2L2R2

- Fast iterative shrinkage-thresholding algorithm (FISTA)

- References

- `Y`: signals. Each column is one observation.
- `D`: dictionary.
- `X`: sparse coefficient.
- `d`: signal dimension. `d = size(Y, 1)`.
- `C`: number of classes.
- `c`: class index.
- `n_c`: number of training samples in class `c`. Typically, all `n_c` are the same and equal to `n`.
- `N`: total number of training samples.
- `Y_range`: an array storing the range of each class, assuming that labels are sorted in ascending order. Example: if `Y_range = [0, 10, 25]`, then:
  - There are two classes: samples from class 1 range from 1 to 10, and samples from class 2 range from 11 to 25.
  - In general, samples from class `c` range from `Y_range(c) + 1` to `Y_range(c+1)`.
  - We can observe that the number of classes is `C = numel(Y_range) - 1`.
- `k_c`: number of bases in class-specific dictionary `c`. Typically, all `k_c` are the same and equal to `k`.
- `k_0`: number of bases in the shared dictionary.
- `K`: total number of dictionary bases.
- `D_range`: similar to `Y_range` but used for the dictionary without the shared dictionary.
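Several functions take a range array (`Y_range`, `D_range`) instead of one label per sample. The pure-Python sketch below is for illustration only (the toolbox itself is MATLAB, and these helper names are hypothetical); it converts between the two forms using the convention above, assuming labels are the integers 1 to `C`, sorted in ascending order, with every class present.

```python
def class_ranges(y_range):
    """Return 1-based inclusive (first, last) sample indices for each class.

    Follows the convention above: class c covers Y_range(c) + 1 .. Y_range(c + 1).
    """
    return [(y_range[c] + 1, y_range[c + 1]) for c in range(len(y_range) - 1)]


def labels_from_range(y_range):
    """Expand a range array into one label per sample (classes numbered from 1)."""
    labels = []
    for c in range(len(y_range) - 1):
        labels.extend([c + 1] * (y_range[c + 1] - y_range[c]))
    return labels


def range_from_labels(labels):
    """Build a range array from labels 1..C sorted in ascending order."""
    counts = [0] * max(labels)
    for lab in labels:
        counts[lab - 1] += 1
    y_range = [0]
    for n_c in counts:
        y_range.append(y_range[-1] + n_c)  # cumulative sample count
    return y_range


print(class_ranges([0, 10, 25]))  # [(1, 10), (11, 25)]
print(len([0, 10, 25]) - 1)       # number of classes C: 2
```

The same helpers apply to `D_range`, with dictionary columns in place of samples.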

- Sparse Representation-based Classification (SRC) implementation [1].

- Classification based on SRC.

- Syntax: `[pred, X] = SRC_pred(Y, D, D_range, opts)`

  - INPUT:
    - `Y`: test samples.
    - `D`: the total dictionary. `D = [D_1, D_2, ..., D_C]` with `D_c` being the c-th class-specific dictionary.
    - `D_range`: range of class-specific dictionaries in `D`. See also Notation.
    - `opts`: options.
      - `opts.lambda`: `lambda` for the Lasso problem. Default: `0.01`.
      - `opts.max_iter`: maximum iterations of the FISTA algorithm. Default: `100`. Check this implementation of FISTA.

  - OUTPUT:
    - `pred`: predicted labels of test samples.
    - `X`: solution of the Lasso problem.
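SRC assigns each test sample to the class whose sub-dictionary reconstructs it best from the Lasso solution. The pure-Python sketch below shows only that residual rule for a single sample; it assumes the coefficients `x` have already been computed (for example with FISTA), and the function name `src_predict` is hypothetical rather than part of `SRC_pred`.

```python
def src_predict(y, D, x, d_range):
    """Residual-based SRC decision for a single test sample.

    y: list of d floats; D: d rows x K columns (list of rows, atoms are columns);
    x: K coefficients of y over the whole D (e.g. a Lasso solution);
    d_range: same values as D_range; class c (1-based) owns the 0-based
    columns d_range[c - 1] .. d_range[c] - 1.
    Returns the 1-based label with the smallest reconstruction residual.
    """
    best_label, best_res = None, float("inf")
    for c in range(len(d_range) - 1):
        lo, hi = d_range[c], d_range[c + 1]
        # squared residual ||y - D_c x_c||^2 using only class c's atoms
        res = 0.0
        for i, row in enumerate(D):
            recon = sum(row[j] * x[j] for j in range(lo, hi))
            res += (y[i] - recon) ** 2
        if res < best_res:
            best_label, best_res = c + 1, res
    return best_label


D = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]          # class 1: first two atoms, class 2: last atom
d_range = [0, 2, 3]
print(src_predict([0.1, 0.0, 2.0], D, [0.1, 0.0, 2.0], d_range))  # 2
```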


- An implementation of the well-known Online Dictionary Learning method [2].

- Syntax: `[D, X] = ODL(Y, k, lambda, opts, sc_method)`

  - INPUT:

  - OUTPUT:
    - `D`, `X`: as in the problem.
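ODL [2] alternates sparse coding of incoming samples with a dictionary update driven by the accumulated statistics `A = sum(alpha alpha^T)` and `B = sum(y alpha^T)`, where `alpha` is the sparse code of sample `y`. The pure-Python sketch below covers only that dictionary-update step (block-coordinate descent over atoms, each projected onto the unit ball) as described in the ODL paper. It is not the toolbox's `ODL.m`, it omits the sparse-coding step selected by `sc_method`, and the function names are hypothetical.

```python
import math


def accumulate(A, B, y, alpha):
    """Add one sample's statistics in place: A += alpha alpha^T, B += y alpha^T."""
    k = len(alpha)
    for i in range(k):
        for j in range(k):
            A[i][j] += alpha[i] * alpha[j]
    for r in range(len(y)):
        for j in range(k):
            B[r][j] += y[r] * alpha[j]


def update_dictionary(D, A, B):
    """One pass of block-coordinate descent over the atoms (columns) of D.

    D, B: d x k, A: k x k, all lists of rows. D is modified in place:
        u_j = (b_j - D a_j) / A[j][j] + d_j,   d_j = u_j / max(||u_j||_2, 1)
    """
    d, k = len(D), len(D[0])
    for j in range(k):
        if A[j][j] == 0.0:
            continue  # atom j has not been used yet; leave it unchanged
        Da = [sum(D[r][i] * A[i][j] for i in range(k)) for r in range(d)]
        u = [(B[r][j] - Da[r]) / A[j][j] + D[r][j] for r in range(d)]
        scale = max(math.sqrt(sum(v * v for v in u)), 1.0)  # project onto unit ball
        for r in range(d):
            D[r][j] = u[r] / scale
    return D


D = [[0.6, 0.0], [0.8, 1.0]]
A = [[0.0, 0.0], [0.0, 0.0]]
B = [[0.0, 0.0], [0.0, 0.0]]
accumulate(A, B, y=[1.0, 0.0], alpha=[1.0, 0.0])  # alpha: sparse code of y
update_dictionary(D, A, B)  # first atom becomes (1, 0) up to rounding
```

Keeping `A` and `B` as running sums is what lets the update run online without storing past samples.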

- LCKSVD [3]: check its project page.

- An implementation of the well-known DLSI method [5].

- function `[D, X, rt] = DLSI(Y, Y_range, opts)`: the main DLSI algorithm.

  - INPUT:
    - `Y`, `Y_range`: training samples and their labels.
    - `opts`:
      - `opts.lambda`, `opts.eta`: `lambda` and `eta` in the cost function.
      - `opts.max_iter`: maximum iterations.

  - OUTPUT:
    - `D`: the trained dictionary.
    - `X`: the trained sparse coefficient.
    - `rt`: total running time of the training process.

- function `pred = DLSI_pred(Y, D, opts)`: predict the labels of new input `Y` given the trained dictionary `D` and the parameters stored in `opts`.

Run `DLSI_top` in the MATLAB command window.
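DLSI [5] encourages the class-specific sub-dictionaries to be mutually incoherent through a penalty on `D_i^T D_j` for `i != j`, weighted by `eta`. Because the cost function is not reproduced in this text, the pure-Python sketch below only evaluates the raw incoherence sum over ordered class pairs (so each unordered pair is counted twice); how `DLSI.m` scales it relative to `opts.eta` is left open, and the function name is hypothetical.

```python
def incoherence_penalty(D, d_range):
    """Sum of ||D_i^T D_j||_F^2 over ordered class pairs i != j.

    D: d rows x K columns (list of rows); d_range: same values as D_range,
    so class c (0-based here) owns columns d_range[c] .. d_range[c + 1] - 1.
    """
    d = len(D)
    C = len(d_range) - 1
    total = 0.0
    for ci in range(C):
        for cj in range(C):
            if ci == cj:
                continue
            for a in range(d_range[ci], d_range[ci + 1]):
                for b in range(d_range[cj], d_range[cj + 1]):
                    # entry (a, b) of D_i^T D_j is the inner product of atoms a and b
                    inner = sum(D[r][a] * D[r][b] for r in range(d))
                    total += inner * inner
    return total


print(incoherence_penalty([[1.0, 0.0], [0.0, 1.0]], [0, 1, 2]))  # 0.0 (orthogonal atoms)
print(incoherence_penalty([[1.0, 1.0], [0.0, 0.0]], [0, 1, 2]))  # 2.0 (same atom twice)
```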

- An implementation of COPAR [7].

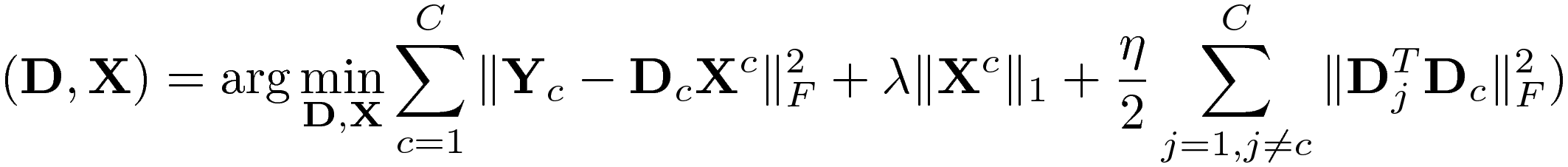

where:

- function `[D, X, rt] = COPAR(Y, Y_range, opts)`

  - INPUT:
    - `Y`, `Y_range`: training samples and their labels.
    - `opts`: a struct:
      - `opts.lambda`, `opts.eta`: `lambda` and `eta` in the cost function.
      - `opts.max_iter`: maximum iterations.

  - OUTPUT:
    - `D`: the trained dictionary.
    - `X`: the trained sparse coefficient.
    - `rt`: total running time of the training process.

- function `pred = COPAR_pred(Y, D, D_range_ext, opts)`: predict the labels of the input `Y`.

  - INPUT:
    - `Y`: test samples.
    - `D`: the trained dictionary.
    - `D_range_ext`: range of class-specific and shared dictionaries in `D`. The shared dictionary is located at the end of `D`.
    - `opts`: a struct of options:
      - `opts.classify_mode`: a string specifying the classification mode, either `'GC'` (global coding) or `'LC'` (local coding).
      - `opts.lambda`, `opts.eta`, `opts.max_iter`: as in `COPAR.m`.

  - OUTPUT:
    - `pred`: predicted labels of `Y`.

Run `COPAR_top` in the MATLAB command window.
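In `'GC'` mode a test sample is coded once over the whole dictionary, class-specific and shared atoms together. The pure-Python sketch below shows one plausible global-coding decision rule. It assumes that the shared block's reconstruction is added back for every class before residuals are compared, and that `D_range_ext` has the layout `[0, k, 2k, ..., C*k, C*k + k0]`. Both are assumptions rather than a description of `COPAR_pred`, and the helper names are hypothetical.

```python
def make_d_range_ext(k, C, k0):
    """Hypothetical helper: boundaries for C class blocks of k atoms each,
    followed by a shared block of k0 atoms at the end of D."""
    return [c * k for c in range(C + 1)] + [C * k + k0]


def gc_predict(y, D, x, d_range_ext):
    """Global-coding style decision for one sample (a sketch, not COPAR_pred).

    x codes y over the whole D. Class c is scored by
    ||y - D_c x_c - D_shared x_shared||^2, i.e. the shared (commonality)
    reconstruction is added back for every class before comparing residuals.
    """
    d = len(D)
    C = len(d_range_ext) - 2          # the last block is the shared dictionary

    def partial(lo, hi):
        # reconstruction from columns lo .. hi - 1 of D
        return [sum(D[r][j] * x[j] for j in range(lo, hi)) for r in range(d)]

    shared = partial(d_range_ext[C], d_range_ext[C + 1])
    best_label, best_res = None, float("inf")
    for c in range(C):
        own = partial(d_range_ext[c], d_range_ext[c + 1])
        res = sum((y[r] - own[r] - shared[r]) ** 2 for r in range(d))
        if res < best_res:
            best_label, best_res = c + 1, res
    return best_label


D = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]
rng = make_d_range_ext(k=1, C=2, k0=1)   # [0, 1, 2, 3]
print(gc_predict([0.0, 1.0, 5.0], D, [0.0, 1.0, 5.0], rng))  # 2
```

In this toy example the large third component is explained by the shared atom, so it does not sway the decision between the two classes.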

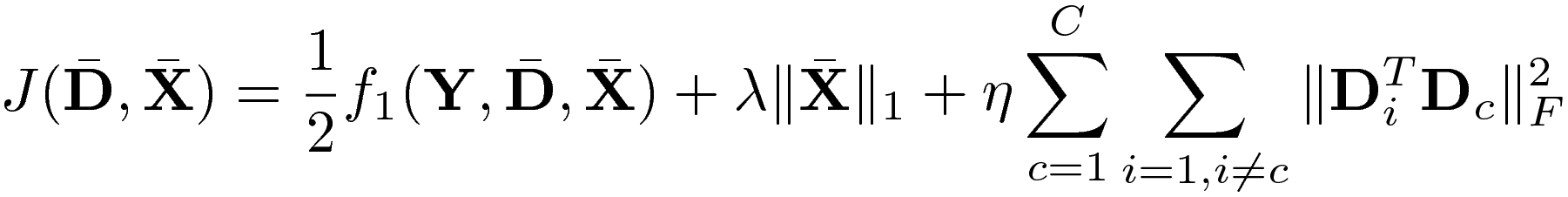

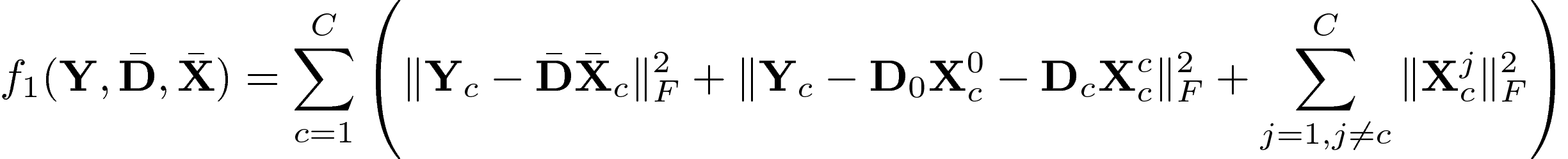

- An implementation of LRSDL [8].

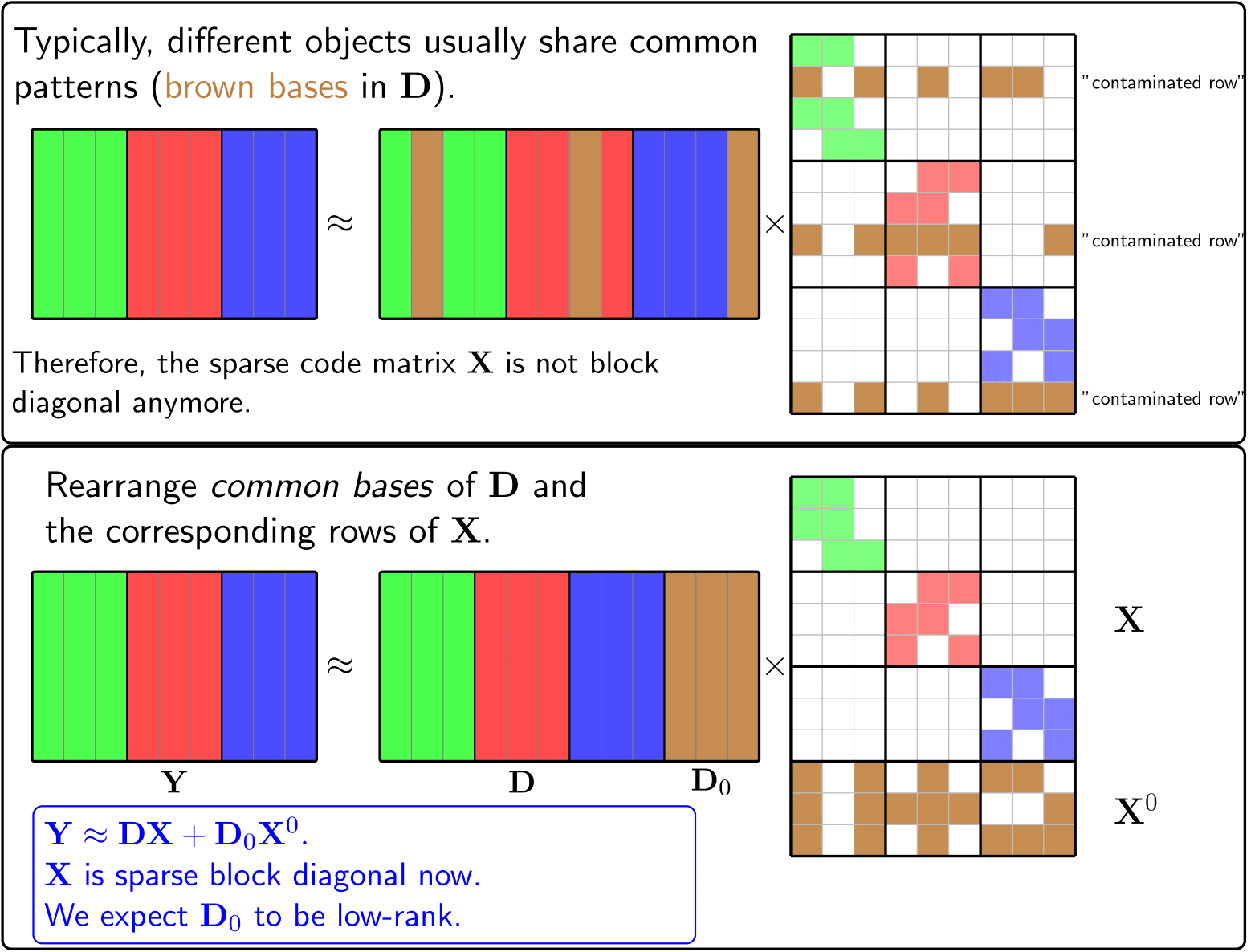

Note that unlike COPAR, in LRSDL, we separate the class-specific dictionaries (D) and the shared dictionary (D_0). The sparse coefficients (X, X^0) are also separated.

- function `[D, D0, X, X0, CoefM, coefM0, opts, rt] = LRSDL(Y, train_label, opts)`

  - INPUT:
    - `Y`, `Y_range`: training samples and their labels.
    - `opts`: a struct:
      - `opts.lambda1`, `opts.lambda`: `lambda1` and `lambda2` in the cost function.
      - `opts.lambda3`: `eta` in the cost function (fix later).
      - `opts.max_iter`: maximum iterations.
      - `opts.D_range`: range of the trained dictionary.
      - `opts.k0`: size of the shared dictionary.

  - OUTPUT:
    - `D`, `D0`, `X`, `X0`: trained matrices as in the cost function.
    - `CoefM`: the mean matrix. `CoefM(:, c)` is the mean vector of `X_c` (mean of columns).
    - `CoefM0`: the mean vector of `X0`.
    - `rt`: total running time (in seconds).

For classification, see the `LRSDL_pred_GC.m` function.

Run `LRSDL_top` in the MATLAB command window.
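`CoefM` and `CoefM0` are plain column means, so they are easy to reproduce outside MATLAB. The pure-Python sketch below computes both from coefficient matrices stored as lists of rows. It assumes the columns are sorted by class and delimited by a `Y_range`-style array as in Notation, and the function names are hypothetical.

```python
def coef_means(X, y_range):
    """CoefM-style matrix: column c is the mean of the columns of X in class c.

    X: K rows x N columns (list of rows), columns sorted by class;
    y_range: class boundaries as in Notation (0-based slice y_range[c]:y_range[c+1]).
    Returns a K x C list of rows.
    """
    C = len(y_range) - 1
    M = [[0.0] * C for _ in X]
    for c in range(C):
        lo, hi = y_range[c], y_range[c + 1]
        for r, row in enumerate(X):
            M[r][c] = sum(row[lo:hi]) / (hi - lo)
    return M


def mean_vector(X0):
    """CoefM0-style vector: the mean of all columns of X0 (one value per row)."""
    return [sum(row) / len(row) for row in X0]


X = [[1.0, 3.0, 10.0],
     [0.0, 2.0, 4.0]]                 # K = 2 atoms, N = 3 samples
print(coef_means(X, [0, 2, 3]))       # [[2.0, 10.0], [1.0, 4.0]]
print(mean_vector([[1.0, 2.0, 3.0]])) # [2.0]
```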

- An implementation of FDDL [4].

Similar to the LRSDL cost function without the red terms.

Set `opts.k0 = 0` and use the `LRSDL.m` function.

- function `pred = FDDL_pred(Y, D, CoefM, opts)`
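FDDL [4] adds a Fisher-discrimination term on the coefficients, `tr(S_W(X)) - tr(S_B(X)) + eta * ||X||_F^2`, where `S_W` is the within-class scatter and `S_B` the between-class scatter. Since the cost function image is not reproduced in this text, the pure-Python sketch below evaluates that term as defined in the FDDL paper. It is not code taken from `LRSDL.m`, and the weight name `eta` and the function name are assumptions.

```python
def fisher_term(X, y_range, eta):
    """tr(S_W(X)) - tr(S_B(X)) + eta * ||X||_F^2 for coefficients X.

    X: K rows x N columns (list of rows), columns sorted by class;
    y_range: class boundaries as in Notation.
    Uses tr(S_W) = sum_c sum_{x in X_c} ||x - m_c||^2 and
         tr(S_B) = sum_c n_c * ||m_c - m||^2.
    """
    K, N = len(X), len(X[0])
    cols = [[X[r][n] for r in range(K)] for n in range(N)]
    m = [sum(col[r] for col in cols) / N for r in range(K)]   # global mean
    within = between = 0.0
    for c in range(len(y_range) - 1):
        members = cols[y_range[c]:y_range[c + 1]]
        n_c = len(members)
        m_c = [sum(col[r] for col in members) / n_c for r in range(K)]  # class mean
        within += sum((col[r] - m_c[r]) ** 2 for col in members for r in range(K))
        between += n_c * sum((m_c[r] - m[r]) ** 2 for r in range(K))
    frob = sum(v * v for row in X for v in row)
    return within - between + eta * frob


print(fisher_term([[0.0, 2.0, 10.0]], [0, 2, 3], eta=0.0))  # -52.0
```

A more negative value means tighter classes that lie farther apart; the `eta` term keeps the objective from being driven down simply by scaling `X` up.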

- DFDL [6]: see its project page.

- D2L2R2: update later.

- An implementation of FISTA [10].

- Check this repository
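FISTA [10] is the solver behind the Lasso step of `SRC_pred` (`opts.lambda`, `opts.max_iter`). The pure-Python sketch below assumes the objective `0.5 * ||y - D x||_2^2 + lam * ||x||_1` (the toolbox's exact scaling of `lambda` is not stated here). It uses the squared Frobenius norm of `D` as the step constant, which upper-bounds the true Lipschitz constant and so keeps the method convergent at the cost of smaller steps. The function name is hypothetical.

```python
import math


def fista_lasso(D, y, lam, max_iter=100):
    """Minimize 0.5 * ||y - D x||^2 + lam * ||x||_1 with FISTA.

    D: d rows x K columns (list of rows); y: list of d floats.
    """
    d, K = len(D), len(D[0])
    # Step constant: ||D||_F^2 >= largest eigenvalue of D^T D (a safe bound).
    L = sum(v * v for row in D for v in row)
    x = [0.0] * K
    if L == 0.0:
        return x                       # all-zero dictionary: x = 0 is optimal
    z, t = x[:], 1.0                   # extrapolated point and momentum scalar
    for _ in range(max_iter):
        # gradient of the smooth part at z: D^T (D z - y)
        r = [sum(D[i][j] * z[j] for j in range(K)) - y[i] for i in range(d)]
        g = [sum(D[i][j] * r[i] for i in range(d)) for j in range(K)]
        # proximal step: soft-thresholding with threshold lam / L
        x_new = []
        for j in range(K):
            v = z[j] - g[j] / L
            x_new.append(math.copysign(max(abs(v) - lam / L, 0.0), v))
        # momentum update
        t_new = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        z = [x_new[j] + ((t - 1.0) / t_new) * (x_new[j] - x[j]) for j in range(K)]
        x, t = x_new, t_new
    return x


x = fista_lasso([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.05], lam=0.1)
print([round(v, 3) for v in x])  # [2.9, 0.0]
```

With an identity dictionary the Lasso solution is just `y` soft-thresholded by `lam`, which is why the small second entry is set exactly to zero.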

[1]. (SRC) Wright, John, et al. "Robust face recognition via sparse representation." Pattern Analysis and Machine Intelligence, IEEE Transactions on 31.2 (2009): 210-227. [paper]

[2]. (ODL) Mairal, Julien, et al. "Online learning for matrix factorization and sparse coding." The Journal of Machine Learning Research 11 (2010): 19-60. [paper]

[3]. (LC-KSVD) Jiang, Zhuolin, Zhe Lin, and Larry S. Davis. "Label consistent K-SVD: Learning a discriminative dictionary for recognition." Pattern Analysis and Machine Intelligence, IEEE Transactions on 35.11 (2013): 2651-2664. [Project page]

[4]. (FDDL) Yang, Meng, et al. "Fisher discrimination dictionary learning for sparse representation." Computer Vision (ICCV), 2011 IEEE International Conference on. IEEE, 2011. [paper], [code]

[5]. (DLSI) Ramirez, Ignacio, Pablo Sprechmann, and Guillermo Sapiro. "Classification and clustering via dictionary learning with structured incoherence and shared features." Computer Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on. IEEE, 2010. [paper]

[6]. (DFDL) Discriminative Feature-Oriented dictionary Learning. Tiep H. Vu, H. S. Mousavi, V. Monga, A. U. Rao and G. Rao, "Histopathological Image Classification using Discriminative Feature-Oriented dictionary Learning", IEEE Transactions on Medical Imaging, volume 35, issue 3, pages 738-751, March 2016. [paper] [Project page]

[7]. (COPAR) Kong, Shu, and Donghui Wang. "A dictionary learning approach for classification: separating the particularity and the commonality." Computer Vision ECCV 2012. Springer Berlin Heidelberg, 2012. 186-199. [paper]

[8]. (LRSDL) Tiep H. Vu, Vishal Monga. "Learning a low-rank shared dictionary for object classification." International Conference on Image Processing (ICIP) 2016. [paper]

[9]. "A singular value thresholding algorithm for matrix completion." SIAM Journal on Optimization 20.4 (2010): 1956-1982. [paper]

[10]. (FISTA) Beck, Amir, and Marc Teboulle. "A fast iterative shrinkage-thresholding algorithm for linear inverse problems." SIAM Journal on Imaging Sciences 2.1 (2009): 183-202. [paper]

[11]. (SPAMS) The Sparse Modeling Software