This repository contains the source code for our paper:

Egocentric Scene Understanding via Multimodal Spatial Rectifier

Tien Do, Khiem Vuong, and Hyun Soo Park

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022

Project webpage

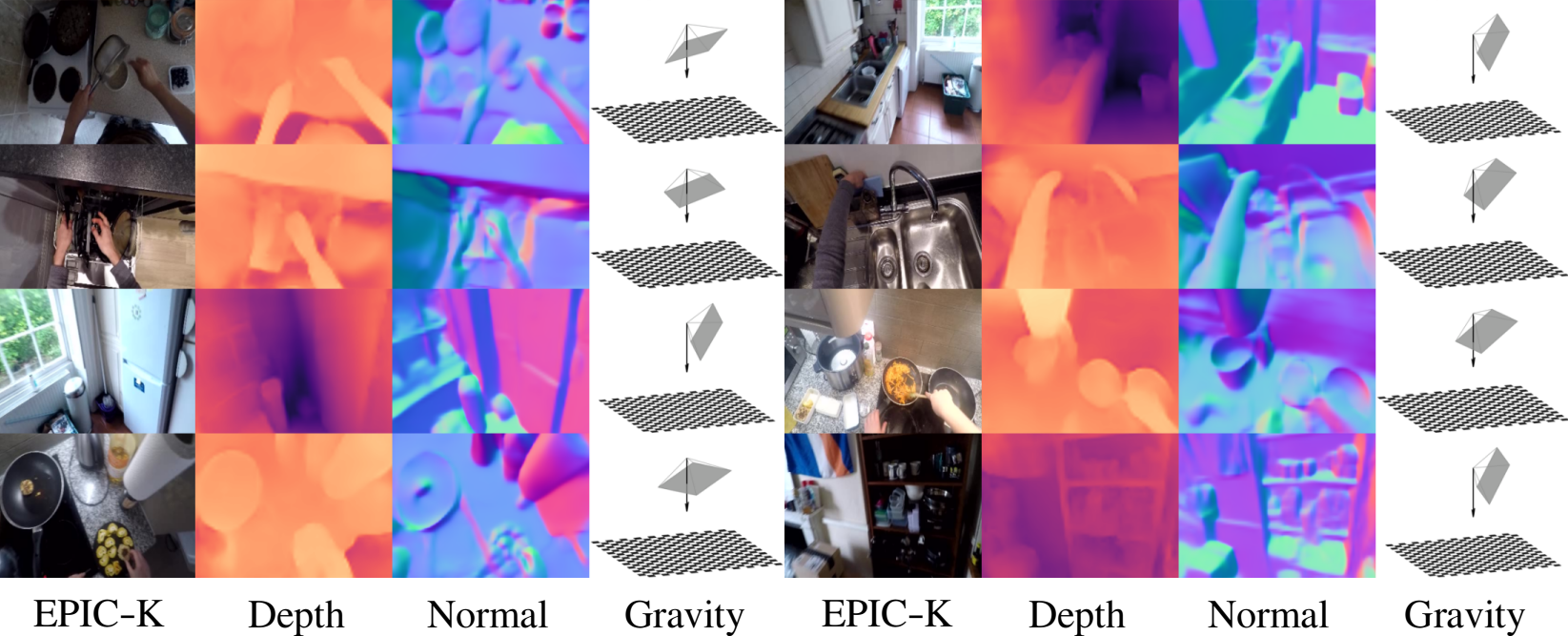

Qualitative results for depth, surface normal, and gravity prediction on the EPIC-KITCHENS dataset.

To activate the Docker environment, run the following command:

```bash
nvidia-docker run -it --rm --ipc=host -v /:/home vuong067/egodepthnormal:latest
```

where `/` is the directory on the local machine to mount (in this case, the root folder), and `/home` is where that directory appears inside the container.
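With a bind mount such as `-v /:/home`, a host path is visible inside the container under the container-side prefix. The following is a minimal sketch of that path translation. The helper `host_to_container` is hypothetical and not part of this repository, and it only handles a plain `HOST:CONTAINER` spec (no `:ro`-style options).

```python
import posixpath


def host_to_container(host_path: str, volume_spec: str = "/:/home") -> str:
    """Map an absolute host path to its location inside the container.

    Hypothetical helper: assumes volume_spec has the form "HOST:CONTAINER".
    """
    host_root, container_root = volume_spec.split(":")[:2]
    host_path = posixpath.normpath(host_path)
    host_root = posixpath.normpath(host_root)
    rel = posixpath.relpath(host_path, host_root)
    # Paths outside the mounted directory are not visible in the container.
    if rel == ".." or rel.startswith("../"):
        raise ValueError(f"{host_path} is not under the mounted {host_root}")
    if rel == ".":
        return container_root
    return posixpath.join(container_root, rel)


if __name__ == "__main__":
    # e.g. a repository cloned at /data/EgoDepthNormal on the host
    print(host_to_container("/data/EgoDepthNormal"))
```

This is why the `cd` command below prefixes the repository path with `/home`.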

This Docker image is built on top of NVIDIA's PyTorch container (release 21.12), with a few additional common packages (e.g., `timm`).

Inside the container, change the working directory to this repository:

```bash
cd /home/PATH/TO/THIS/REPO/EgoDepthNormal
```

Follow the steps below to extract depth and surface normals from RGB images using our pre-trained models:

- Make sure the following `.ckpt` files are inside the `./checkpoints/` folder: `edina_midas_depth_baseline.ckpt` and `edina_midas_normal_baseline.ckpt`. You can download them with:

  ```bash
  wget -O edina_midas_depth_baseline.ckpt https://edina.s3.amazonaws.com/checkpoints/edina_midas_depth_baseline.ckpt && mv edina_midas_depth_baseline.ckpt ./checkpoints/
  wget -O edina_midas_normal_baseline.ckpt https://edina.s3.amazonaws.com/checkpoints/edina_midas_normal_baseline.ckpt && mv edina_midas_normal_baseline.ckpt ./checkpoints/
  ```

- Our demo RGB images are stored in `demo_data/color`.

- Run `demo.sh` to save the results in `./demo_visualization/`:

  ```bash
  sh demo.sh
  ```
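Before running the demo, it can help to confirm that both checkpoints are in place. The following is a minimal sketch using only the file names and download URL listed above. The function `missing_checkpoints` is a hypothetical helper, not something shipped with the repository.

```python
from pathlib import Path
from typing import List

# File names and base URL taken from the download commands above.
CHECKPOINT_BASE_URL = "https://edina.s3.amazonaws.com/checkpoints/"
REQUIRED_CHECKPOINTS = (
    "edina_midas_depth_baseline.ckpt",
    "edina_midas_normal_baseline.ckpt",
)


def missing_checkpoints(checkpoint_dir: str = "./checkpoints") -> List[str]:
    """Return the required checkpoint files not present in checkpoint_dir."""
    directory = Path(checkpoint_dir)
    return [name for name in REQUIRED_CHECKPOINTS if not (directory / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    for name in missing:
        print(f"missing {name}; download it from {CHECKPOINT_BASE_URL + name}")
    if not missing:
        print("all checkpoints present")
```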

You can evaluate depth and surface normal predictions quantitatively and qualitatively on the EDINA dataset using our pre-trained models. Make sure the corresponding depth/normal checkpoints are inside the `./checkpoints/` folder and the dataset split (pickle file) is inside the `./pickles/` folder. Please refer to the dataset documentation for how to download the pickle file.
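For reference, the following sketches the metrics commonly reported for monocular depth (AbsRel, RMSE, δ < 1.25) and surface normals (mean/median angular error, fraction of pixels within 11.25°, 22.5°, 30°). It is a plain-Python sketch over flattened per-pixel values under the assumption that non-positive depths and zero-length normals are invalid. It is not the repository's evaluation code, which may mask, scale, or aggregate differently.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def depth_metrics(pred: Sequence[float], gt: Sequence[float]) -> Dict[str, float]:
    """AbsRel, RMSE and delta<1.25 over pixels where both depths are positive."""
    pairs = [(p, g) for p, g in zip(pred, gt) if p > 0 and g > 0]
    if not pairs:
        raise ValueError("no pixels with valid depth")
    n = len(pairs)
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Fraction of pixels whose ratio max(p/g, g/p) is below 1.25.
    delta1 = sum(1 for p, g in pairs if max(p / g, g / p) < 1.25) / n
    return {"abs_rel": abs_rel, "rmse": rmse, "delta1": delta1}


def angular_errors_deg(pred: Sequence[Vec3], gt: Sequence[Vec3]) -> List[float]:
    """Per-pixel angle in degrees between predicted and ground-truth normals."""
    errors = []
    for p, g in zip(pred, gt):
        norm_p = math.sqrt(sum(c * c for c in p))
        norm_g = math.sqrt(sum(c * c for c in g))
        if norm_p == 0 or norm_g == 0:
            continue  # skip invalid (zero-length) normals
        cos = sum(a * b for a, b in zip(p, g)) / (norm_p * norm_g)
        # Clamp to [-1, 1] to guard acos against rounding error.
        errors.append(math.degrees(math.acos(max(-1.0, min(1.0, cos)))))
    return errors


def normal_metrics(pred: Sequence[Vec3], gt: Sequence[Vec3]) -> Dict[str, float]:
    """Mean/median angular error and fraction of pixels under each threshold."""
    errors = angular_errors_deg(pred, gt)
    if not errors:
        raise ValueError("no pixels with valid normals")
    n = len(errors)
    metrics = {"mean": sum(errors) / n, "median": statistics.median(errors)}
    for t in (11.25, 22.5, 30.0):
        metrics[f"within_{t}"] = sum(1 for e in errors if e < t) / n
    return metrics
```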

Run:

```bash
sh eval.sh
```

The bash script passes several arguments to the evaluation code, e.g., the path to the dataset and its pickle file, the path to the pre-trained model, the batch size, the network architecture, and the test dataset. Please refer to the code for the exact set of supported arguments.

For instance, the following command evaluates depth estimation on the EDINA test set:

```bash
python main_depth.py --train 0 --model_type 'midas_v21' \
    --test_usage 'edina_test' \
    --checkpoint ./checkpoints/edina_midas_depth_baseline.ckpt \
    --dataset_pickle_file ./pickles/scannet_edina_camready_final_clean.pkl \
    --batch_size 8 --skip_every_n_image_test 40 \
    --data_root PATH/TO/EDINA/DATA \
    --save_visualization ./eval_visualization/depth_results
```
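If you want to script several evaluation runs (e.g., with different batch sizes or data roots), the command above can be assembled as an argument list. This is a sketch that reuses only the flags shown in the example. The function `depth_eval_argv` and its parameter names are hypothetical, and the defaults simply mirror the example values.

```python
import shlex
from typing import List


def depth_eval_argv(
    data_root: str,
    checkpoint: str = "./checkpoints/edina_midas_depth_baseline.ckpt",
    pickle_file: str = "./pickles/scannet_edina_camready_final_clean.pkl",
    batch_size: int = 8,
    skip_every_n_image_test: int = 40,
    save_visualization: str = "./eval_visualization/depth_results",
) -> List[str]:
    """Build the argv for the EDINA depth evaluation command shown above."""
    return [
        "python", "main_depth.py",
        "--train", "0",
        "--model_type", "midas_v21",
        "--test_usage", "edina_test",
        "--checkpoint", checkpoint,
        "--dataset_pickle_file", pickle_file,
        "--batch_size", str(batch_size),
        "--skip_every_n_image_test", str(skip_every_n_image_test),
        "--data_root", data_root,
        "--save_visualization", save_visualization,
    ]


if __name__ == "__main__":
    # Print a shell-quoted version of the command for inspection.
    print(shlex.join(depth_eval_argv("PATH/TO/EDINA/DATA")))
```

Inside the container, the resulting list can be passed to `subprocess.run(argv, check=True)` from the repository root. Building a list rather than a single string avoids shell-quoting problems with paths that contain spaces.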

🌟 The EDINA dataset (train + test) is now available to download! 🌟

EDINA is an egocentric dataset comprising more than 500K synchronized RGBD frames with gravity directions. Each instance in the dataset is a triplet: an RGB image, its depth and surface normal maps, and a 3D gravity direction.
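To make the triplet structure concrete, the following sketches one instance as a Python dataclass, together with the angle between two gravity directions (a natural way to compare a predicted gravity vector with the ground truth). The class `EdinaSample`, its field names, and the storage of each modality as a file path are all hypothetical. See the dataset documentation for the actual file organization.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class EdinaSample:
    """Hypothetical container for one EDINA instance (field names are illustrative)."""
    rgb_path: str      # RGB image
    depth_path: str    # depth map
    normal_path: str   # surface normal map
    gravity: Vec3      # 3D gravity direction


def unit(v: Vec3) -> Vec3:
    """Normalize a 3D vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0:
        raise ValueError("zero-length vector has no direction")
    return (v[0] / n, v[1] / n, v[2] / n)


def gravity_angle_deg(pred: Vec3, gt: Vec3) -> float:
    """Angle in degrees between two gravity directions (magnitudes are ignored)."""
    a, b = unit(pred), unit(gt)
    cos = sum(x * y for x, y in zip(a, b))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos))))
```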

Please refer to the dataset documentation for more details, including download instructions and dataset organization.

If you find our work useful in your research, please consider citing our paper:

```bibtex
@InProceedings{Do_2022_EgoSceneMSR,
    author    = {Do, Tien and Vuong, Khiem and Park, Hyun Soo},
    title     = {Egocentric Scene Understanding via Multimodal Spatial Rectifier},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2022}
}
```

If you have any questions or issues, please open an issue in this repository or contact us by email.