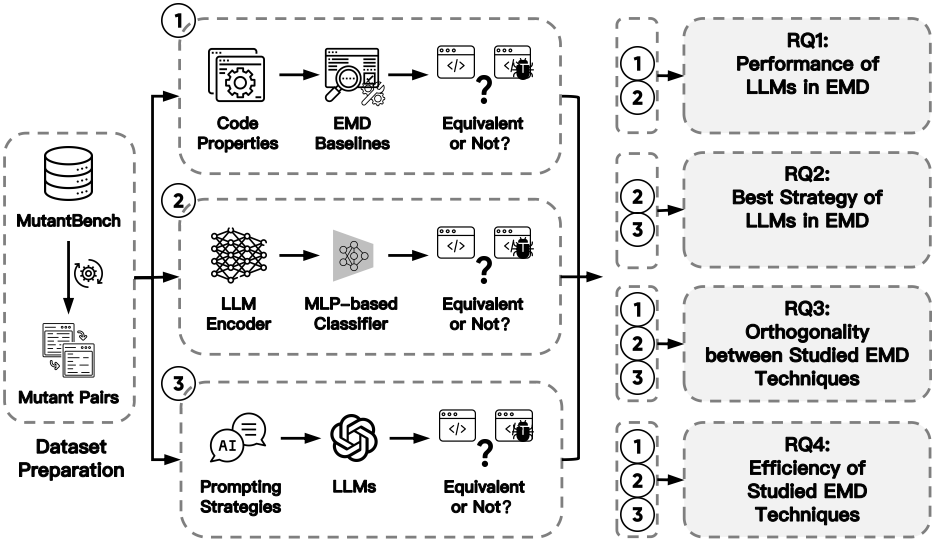

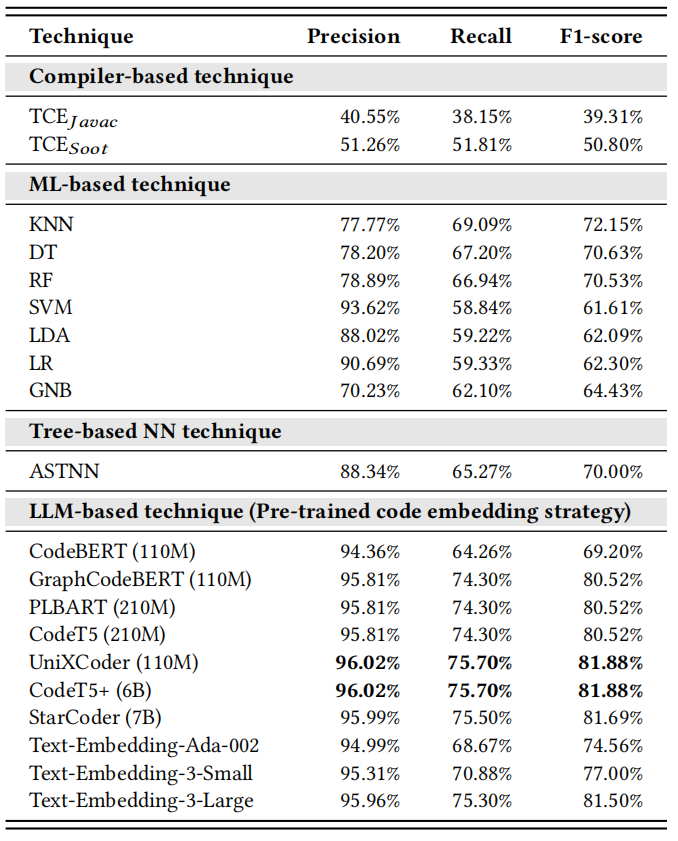

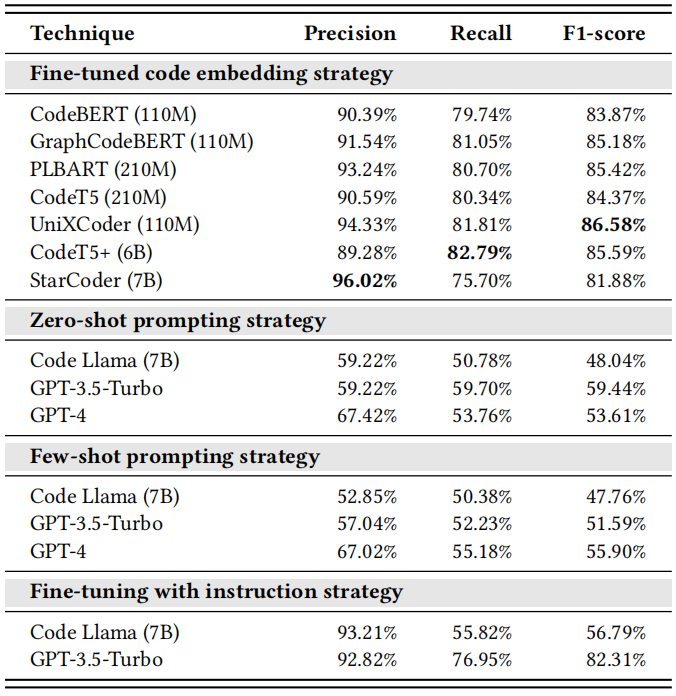

In this study, we empirically investigate various LLMs with different learning strategies for equivalent mutant detection. This is a replication package for our empirical study.

- Python 3.7.7
- PyTorch 1.13.1+cu117
- Scikit-learn 1.2.2
- Transformers 4.37.0.dev0
- TRL 0.7.11
- NumPy 1.18.1
- Pandas 1.3.0
- Matplotlib 3.4.2
- OpenAI 1.2.3

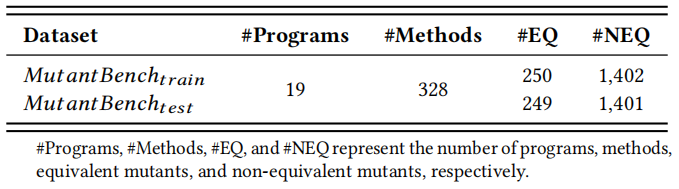

We construct a Java Equivalent Mutant Detection dataset based on MutantBench. It consists of MutantBenchtrain for fine-tuning and MutantBenchtest for testing. Specifically, the dataset is divided into two parts:

- Codebase (i.e., ./dataset/MutantBench_code_db_java.csv) contains 3 columns that we used in our experiments:
  1. id (int): The code id, used to retrieve the Java methods.
  2. code (str): The original method or mutant, written in Java.
  3. operator (str): The type of mutation operator.
- Mutant-Pair Datasets (i.e., MutantBenchtrain and MutantBenchtest) contain 4 columns that we used in our experiments:
  1. id (int): The id of the mutant pair.
  2. code_id_1 (int): The code id used to retrieve the first Java method from the Codebase.
  3. code_id_2 (int): The code id used to retrieve the second Java method from the Codebase.
  4. label (int): Whether the mutant pair is equivalent (1 indicates equivalent, 0 indicates non-equivalent).
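The relationship between the two parts can be sketched with pandas. This is a minimal illustration, not the loading code used in this package: the helper name `resolve_pairs`, the output columns `code_1` and `code_2`, the sample Java snippets, and the operator values are all made up for the example. Only the column names `id`, `code`, `operator`, `code_id_1`, `code_id_2`, and `label` come from the description above.

```python
import pandas as pd


def resolve_pairs(codebase: pd.DataFrame, pairs: pd.DataFrame) -> pd.DataFrame:
    """Attach the Java source of both methods to every mutant pair.

    Hypothetical helper: it looks up `code_id_1` and `code_id_2` in the
    codebase and stores the results in new columns `code_1` and `code_2`.
    """
    # Map each code id to its Java source (ids are assumed to be unique).
    code_by_id = codebase.set_index("id")["code"]
    resolved = pairs.copy()
    resolved["code_1"] = resolved["code_id_1"].map(code_by_id)
    resolved["code_2"] = resolved["code_id_2"].map(code_by_id)
    return resolved


# Tiny made-up rows that only mirror the column layout described above.
codebase = pd.DataFrame({
    "id": [0, 1, 2],
    "code": [
        "int add(int a, int b) { return a + b; }",
        "int add(int a, int b) { return b + a; }",
        "int add(int a, int b) { return a - b; }",
    ],
    "operator": ["original", "hypothetical_op_a", "hypothetical_op_b"],
})
pairs = pd.DataFrame({
    "id": [0, 1],
    "code_id_1": [0, 0],
    "code_id_2": [1, 2],
    "label": [1, 0],  # 1 = equivalent, 0 = non-equivalent
})

resolved = resolve_pairs(codebase, pairs)
print(resolved[["code_1", "code_2", "label"]])
```

With the real data, the codebase frame would presumably come from `pd.read_csv("./dataset/MutantBench_code_db_java.csv")`; the file names of the mutant-pair datasets are not stated here, so they are left out.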

All the pre-processed data used in our experiments is available in ./dataset.

All model checkpoints from our experiments can be downloaded from our anonymous Zenodo (link1, link2).

To run the open-source LLMs, we recommend a GPU with at least 48 GB of memory for training and testing, since StarCoder (7B), CodeT5+ (7B), and Code Llama (7B) are compute-intensive.
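Before launching a long training run, it may help to check whether the visible GPUs meet this recommendation. The sketch below is not part of this package: the function names are hypothetical, and the threshold treats "48 GB" as 48 × 10^9 bytes, which is an assumption (cards marketed as 48 GB usually report slightly less than 48 GiB).

```python
# Assumption: "48 GB" is read as decimal gigabytes.
REQUIRED_BYTES = 48 * 10 ** 9


def has_enough_memory(total_bytes: int, required_bytes: int = REQUIRED_BYTES) -> bool:
    """Return True if a device with `total_bytes` of memory meets the threshold."""
    return total_bytes >= required_bytes


def report_gpus() -> None:
    """Print the memory of each CUDA device that PyTorch can see."""
    import torch  # imported lazily so has_enough_memory works without PyTorch

    if not torch.cuda.is_available():
        print("No CUDA device is visible to PyTorch.")
        return
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        status = "ok" if has_enough_memory(props.total_memory) else "below 48 GB"
        print(f"GPU {index}: {props.name}, {props.total_memory / 10 ** 9:.1f} GB ({status})")
```

Calling `report_gpus()` on the training machine prints one line per device. A device below the threshold may still work with smaller batch sizes, but that is not something this README confirms.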

To run the closed-source LLMs (i.e., ChatGPT and the Text-Embedding Models), you need your own OpenAI account and API key.
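One common way to supply the key without hard-coding it is an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY` (the name the `openai` Python client reads by default); the helper `load_openai_api_key` is hypothetical, and the scripts in this package may expect the key in a different place.

```python
import os


def load_openai_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from the environment and fail early if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {env_var} is not set; "
            "export your OpenAI API key before running the closed-source models."
        )
    return key
```

With the `openai` 1.x client, the key can then be passed as `OpenAI(api_key=load_openai_api_key())`.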

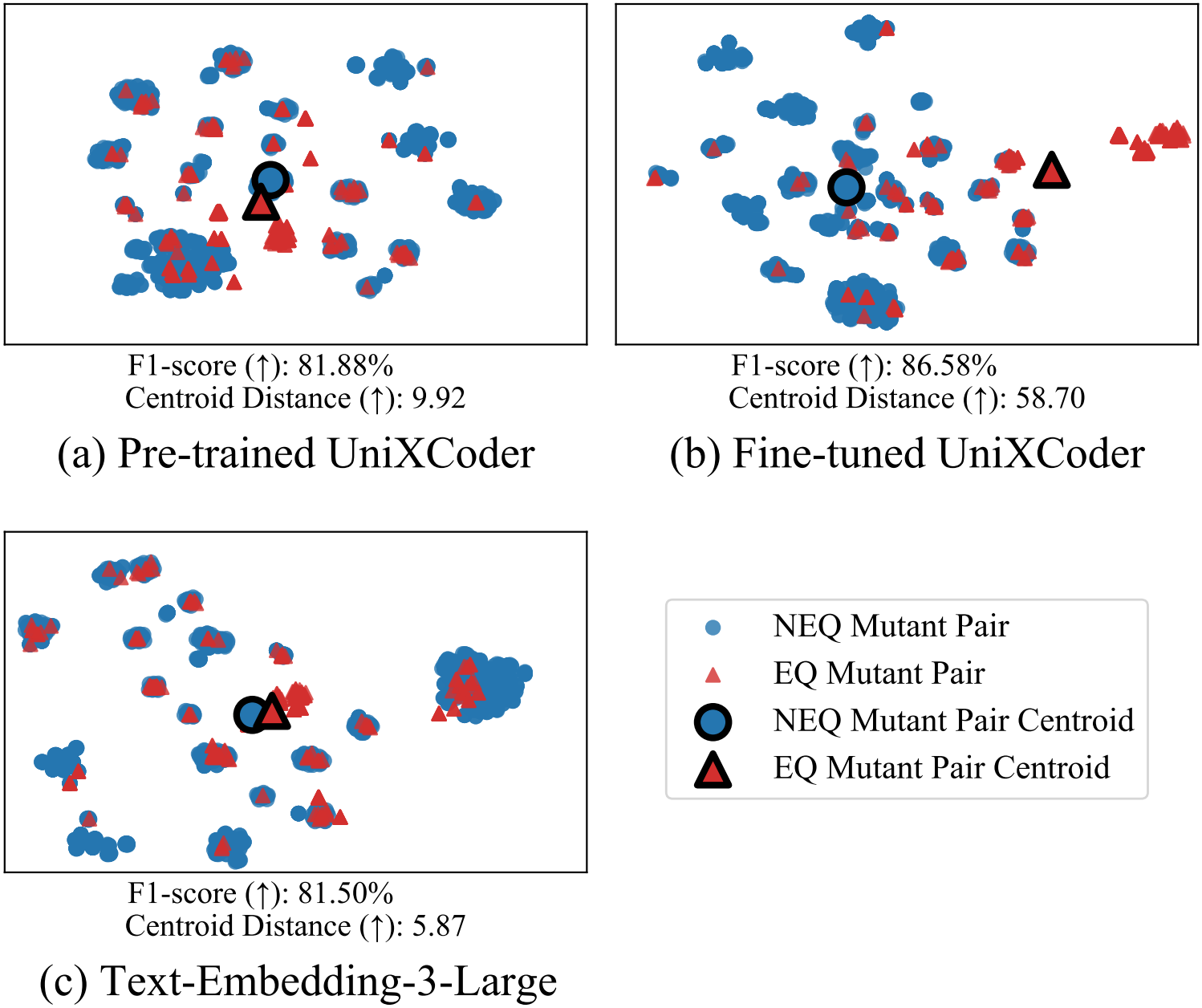

Let's take the pre-trained UniXCoder as an example.

The ./dataset folder contains the training and test data.

You can train the model through the following commands:

cd ./UniXCoder/code;

python train.py;

To run inference with the fine-tuned model on the test dataset, use the following commands:

cd ./UniXCoder/code;

python test.py;

How to run the remaining models and strategies

All the code can be accessed from the respective directories. See the README.md file in each directory for instructions on running the corresponding model.