This repo is the official implementation of the NeurIPS 2023 paper "Learning Score-based Grasping Primitive for Human-assisting Dexterous Grasping" (GraspGF).


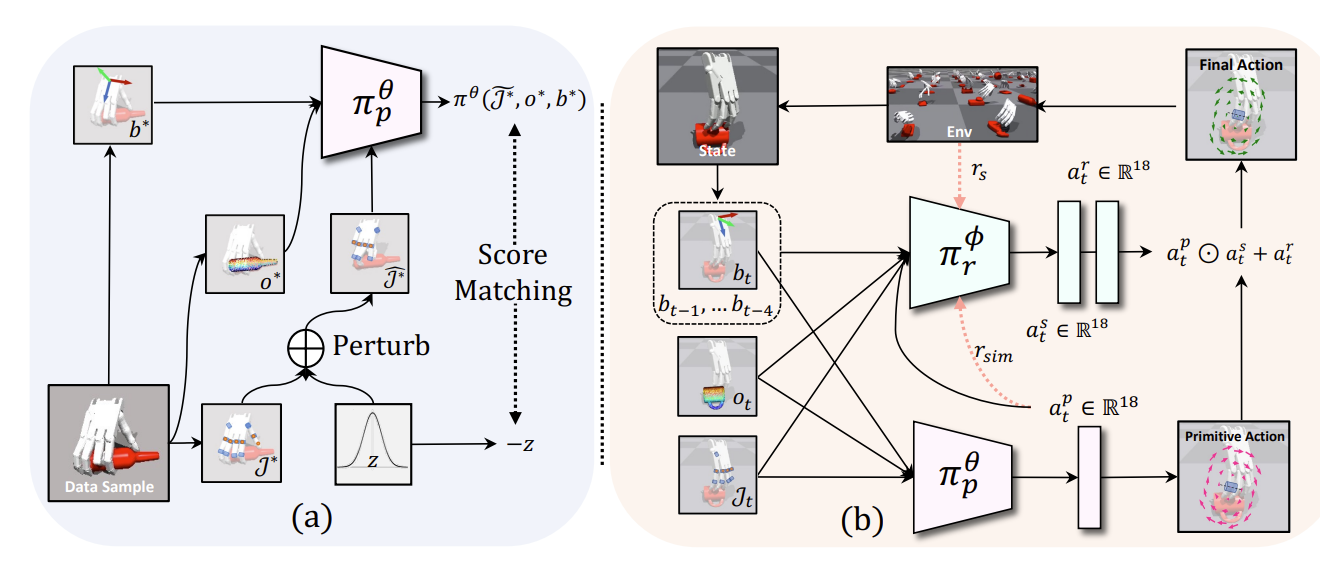

In this paper, we propose a novel task called human-assisting dexterous grasping that aims to train a policy for controlling a robotic hand's fingers to assist users in grasping diverse objects with diverse grasping poses.

We address this challenge by proposing an approach consisting of two sub-modules: a hand-object-conditional grasping primitive called Grasping Gradient Field (GraspGF), and a history-conditional residual policy.
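One way to picture the two sub-modules is as a base action from the grasping primitive plus a learned correction from the residual policy. The sketch below is a hypothetical illustration, not the repo's code: it assumes both modules output joint-space vectors of the same length and that the final command is their (optionally scaled) sum. `compose_action` and `residual_scale` are names introduced here for illustration only.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def compose_action(
    primitive: Callable[[Sequence[float]], Vector],
    residual: Callable[[Sequence[Sequence[float]]], Vector],
    observation: Sequence[float],
    history: Sequence[Sequence[float]],
    residual_scale: float = 1.0,
) -> Vector:
    """Add a history-conditioned residual to a primitive's suggested action.

    Hypothetical composition rule (assumption): action = base + scale * residual.
    """
    base = primitive(observation)      # e.g. a step along a learned gradient field
    correction = residual(history)     # correction computed from past observations
    if len(base) != len(correction):
        raise ValueError("primitive and residual outputs must have the same length")
    return [b + residual_scale * c for b, c in zip(base, correction)]
```

Keeping the primitive and the residual as separate callables means the primitive can be trained once and frozen, while only the residual is optimized afterwards; whether the repo freezes GraspGF this way is not stated here.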

This README covers installation, dataset preparation, training, evaluation, acknowledgements, citation, contact, and license.

The code has been tested on Ubuntu 20.04 with Python 3.8.

IsaacGym:

Refer to the IsaacGym installation instructions here. We currently support IsaacGym Preview Release 4.

Human-assisting Dexterous Grasping Environment:

    cd ConDexEnv
    pip install -e .

Pointnet2 (run from the repository root):

    cd Networks/pointnet2
    pip install -e .

Other dependencies (Python packages):

- ipdb
- tqdm
- opencv-python
- matplotlib
- transforms3d
- open3d
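To confirm the Python packages above are installed, a small check like the following can help. It is a convenience sketch, not part of the repo; the only assumption beyond the list above is the pip-name-to-module mapping (for example, `opencv-python` is imported as `cv2`). IsaacGym and the two editable installs are not checked.

```python
import importlib.util
from typing import Dict, List

# pip package name -> importable top-level module name
REQUIRED_MODULES: Dict[str, str] = {
    "ipdb": "ipdb",
    "tqdm": "tqdm",
    "opencv-python": "cv2",
    "matplotlib": "matplotlib",
    "transforms3d": "transforms3d",
    "open3d": "open3d",
}


def missing_packages(required: Dict[str, str]) -> List[str]:
    """Return pip names whose modules cannot be located on the current sys.path."""
    return [
        pip_name
        for pip_name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED_MODULES)
    if missing:
        print("Missing: pip install " + " ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

`find_spec` only locates a module without importing it, so the check is fast and does not trigger heavy imports such as open3d.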

You can download the filtered meshes and the original grasp pose dataset from Human-assisting Dexterous Grasping/Asset and put them in the following directory:

`ConDexEnv/assets`

Our dataset contains three sets: `train`, `seencategory`, and `unseencategory`. For a simple demonstration, you can download only one of them.

You can download the filtered grasping dataset, human trajectories, and point cloud buffers from Human-assisting Dexterous Grasping/ExpertDatasets and put them in the current directory.

There are three types of data:

- Human trajectories: sampled from Handover-Sim. This file must be downloaded:
  - `ExpertDatasets/human_traj_200_all.npy`
- Point cloud buffer: contains the point clouds of the objects we use. Download the buffer(s) matching the object set(s) you downloaded into the assets:
  - `ExpertDatasets/pcl_buffer_4096_train.pkl`
  - `ExpertDatasets/pcl_buffer_4096_seencategory.pkl`
  - `ExpertDatasets/pcl_buffer_4096_unseencategory.pkl`
- Human grasp poses: contains human grasp poses. Likewise, download the files matching the object set(s) you downloaded into the assets:
  - `ExpertDatasets/grasp_data/ground/*_oti.pth`
  - `ExpertDatasets/grasp_data/ground/*_rc_ot.pth`
  - `ExpertDatasets/grasp_data/ground/*_rc.pth`
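Before training, it can save time to verify that the files listed above are in place for the set you chose. The sketch below is a hypothetical helper, not part of the repo; it assumes it is run with the repository root as `root`, and for the grasp pose files it only checks that at least one file matches each glob pattern, since the per-set file names are not listed here.

```python
from pathlib import Path
from typing import List

SPLITS = ("train", "seencategory", "unseencategory")
# Glob patterns for the human grasp pose files listed above.
GRASP_PATTERNS = ("*_oti.pth", "*_rc_ot.pth", "*_rc.pth")


def missing_data(root: Path, split: str) -> List[str]:
    """Return expected dataset entries that are absent under the repo root."""
    if split not in SPLITS:
        raise ValueError(f"unknown split {split!r}; expected one of {SPLITS}")
    missing: List[str] = []
    expert = root / "ExpertDatasets"
    # Files with fixed names: the human trajectories and the split's point cloud buffer.
    for name in ("human_traj_200_all.npy", f"pcl_buffer_4096_{split}.pkl"):
        if not (expert / name).is_file():
            missing.append(f"ExpertDatasets/{name}")
    # Grasp pose files: require at least one match per pattern.
    ground = expert / "grasp_data" / "ground"
    for pattern in GRASP_PATTERNS:
        if not any(ground.glob(pattern)):  # glob on a missing dir yields nothing
            missing.append(f"ExpertDatasets/grasp_data/ground/{pattern}")
    if not (root / "ConDexEnv" / "assets").is_dir():
        missing.append("ConDexEnv/assets")
    return missing


if __name__ == "__main__":
    for entry in missing_data(Path("."), "train"):
        print("missing:", entry)
```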

To train GraspGF with the pointnet2 backbone, put the following command in the shell script `gf_train.sh`:

    python ./Runners/TrainSDE_update.py \
    --log_dir gf_pt2 \
    --sde_mode vp \
    --batch_size 3027 \
    --lr 2e-4 \
    --t0 0.5 \
    --train_model \
    --demo_nums 15387 \
    --num_envs=3027 \
    --demo_name=train_gf_rc \
    --eval_demo_name=train_eval \
    --device_id=0 \
    --mode train \
    --dataset_type train \
    --relative \
    --space riemann \
    --pt_version pt2

Then run:

    sh ./gf_train.sh
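Because every option sits on its own line, each line except the last must end with a backslash, and no blank lines may separate them; otherwise the shell ends the command early. The hypothetical helper below (not part of the repo) renders such a script from a list of options, using the pointnet2 options above as its example input.

```python
import shlex
from typing import List, Sequence

# Options of the pointnet2 command above, one inner list per script line.
PT2_ARGS: List[List[str]] = [
    ["--log_dir", "gf_pt2"], ["--sde_mode", "vp"], ["--batch_size", "3027"],
    ["--lr", "2e-4"], ["--t0", "0.5"], ["--train_model"], ["--demo_nums", "15387"],
    ["--num_envs=3027"], ["--demo_name=train_gf_rc"], ["--eval_demo_name=train_eval"],
    ["--device_id=0"], ["--mode", "train"], ["--dataset_type", "train"],
    ["--relative"], ["--space", "riemann"], ["--pt_version", "pt2"],
]


def build_script(entry: str, arg_lines: Sequence[Sequence[str]]) -> str:
    """Render `python <entry>` followed by one option per line.

    All lines but the last end with ' \\' so the shell reads a single command;
    the last line has no trailing backslash and no blank lines are emitted.
    """
    lines = ["python " + shlex.quote(entry)]
    lines += [" ".join(shlex.quote(tok) for tok in toks) for toks in arg_lines]
    return " \\\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_script("./Runners/TrainSDE_update.py", PT2_ARGS), end="")
```

Redirecting the output of this script into `gf_train.sh` would produce the training script; switching backbones only requires editing the option list.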

To train GraspGF with the pointnet backbone instead, put the following command in `gf_train.sh`:

    python ./Runners/TrainSDE_update.py \
    --log_dir gf_pt \
    --sde_mode vp \
    --batch_size 3077 \
    --lr 2e-4 \
    --t0 0.5 \
    --train_model \
    --demo_nums 15387 \
    --num_envs=3027 \
    --demo_name=train_gf_rc \
    --eval_demo_name=train_eval \
    --device_id=0 \
    --mode train \
    --dataset_type train \
    --relative \
    --space riemann \
    --pt_version pt

Then run:

    sh ./gf_train.sh
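Both GraspGF training commands pass `--sde_mode vp`. Assuming this selects the variance-preserving (VP) SDE of Song et al. (2021) with a linear noise schedule and that paper's common defaults β_min = 0.1 and β_max = 20 (assumptions; the repo's actual values are not given here), the perturbation kernel is Gaussian with mean coefficient exp(-½∫β) and standard deviation sqrt(1 - exp(-∫β)). Its score is the regression target in denoising score matching. The sketch below computes both; the function names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

BETA_MIN = 0.1   # assumed default from Song et al.; not read from the repo
BETA_MAX = 20.0  # assumed default from Song et al.; not read from the repo


def vp_marginal(t: float, beta_min: float = BETA_MIN,
                beta_max: float = BETA_MAX) -> Tuple[float, float]:
    """Mean coefficient and std of p_t(x_t | x_0) for a linear-beta VP SDE."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    # Integral of beta(s) = beta_min + s * (beta_max - beta_min) over [0, t].
    integral = beta_min * t + 0.5 * (beta_max - beta_min) * t ** 2
    mean_coeff = math.exp(-0.5 * integral)
    std = math.sqrt(1.0 - math.exp(-integral))
    return mean_coeff, std


def dsm_target(x_t: Sequence[float], x_0: Sequence[float], t: float) -> List[float]:
    """Score of the kernel: grad log p_t(x_t | x_0) = -(x_t - m * x_0) / std^2."""
    mean_coeff, std = vp_marginal(t)
    if std == 0.0:
        raise ValueError("the kernel is degenerate at t = 0; use t > 0")
    return [-(xt - mean_coeff * x0) / std ** 2 for xt, x0 in zip(x_t, x_0)]
```

Because the mean coefficient squared plus the variance always equals 1, the marginal variance stays bounded, which is what "variance-preserving" refers to.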

To train the history-conditional residual policy with RL, run:

    sh ./rl_train.sh

To evaluate the trained policy, run:

    sh ./rl_eval.sh

You can download the pretrained checkpoints from Human-assisting Dexterous Grasping/Ckpt and put them in the current directory. To evaluate the pretrained models, add the following arguments to `rl_eval.sh`:

    --score_model_path="Ckpt/gf" \
    --model_dir="Ckpt/gfppo.pt" \

The code and dataset used in this project are built on the following repositories:

Environment:

Dataset:

Diffusion:

Pointnet:

Pointnet2:

If you find our work useful in your research, please consider citing:

    @inproceedings{wu2023learning,
      title={Learning Score-based Grasping Primitive for Human-assisting Dexterous Grasping},
      author={Tianhao Wu and Mingdong Wu and Jiyao Zhang and Yunchong Gan and Hao Dong},
      booktitle={Thirty-seventh Conference on Neural Information Processing Systems},
      year={2023},
      url={https://openreview.net/forum?id=fwvfxDbUFw}
    }

If you have any suggestions or questions, please feel free to contact us:

Tianhao Wu: thwu@stu.pku.edu.cn

Mingdong Wu: wmingd@pku.edu.cn

This project is released under the MIT license. See LICENSE for additional details.