The purpose of this project is to benchmark a Kogito application for a defined business process model, using MongoDB as the persistence store. The objective is to define a repeatable procedure that generates structured data for the metrics defined in Metrics specifications and results, so that system performance can be monitored easily as the product evolves.

The following table shows the configuration of the test environment:

| Target | Specs |
|---|---|
| Kogito version | 1.8.0.Final |
| Runtime environment | OpenShift |
| JVM runtime | Quarkus |
| Data persistence | MongoDB |
| Testing framework | Gatling |

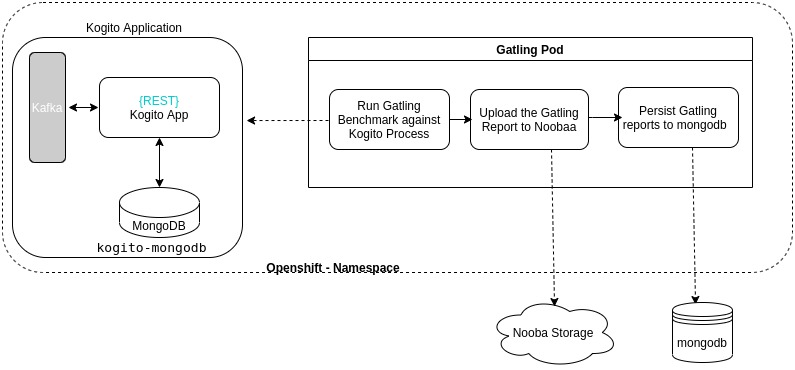

The following diagram illustrates the basic architecture of the testing scenario:

Note: The Data-Index infrastructure is not part of this initial setup. Also, the initial metrics will not validate that the Kafka broker actually sends the expected events.

The Gatling pod has two containers whose tasks run sequentially, one after the other. The first container runs the Gatling (Scala performance test suite) code that benchmarks the Kogito application. The second container uploads the report generated by Gatling to the centralized NooBaa storage.

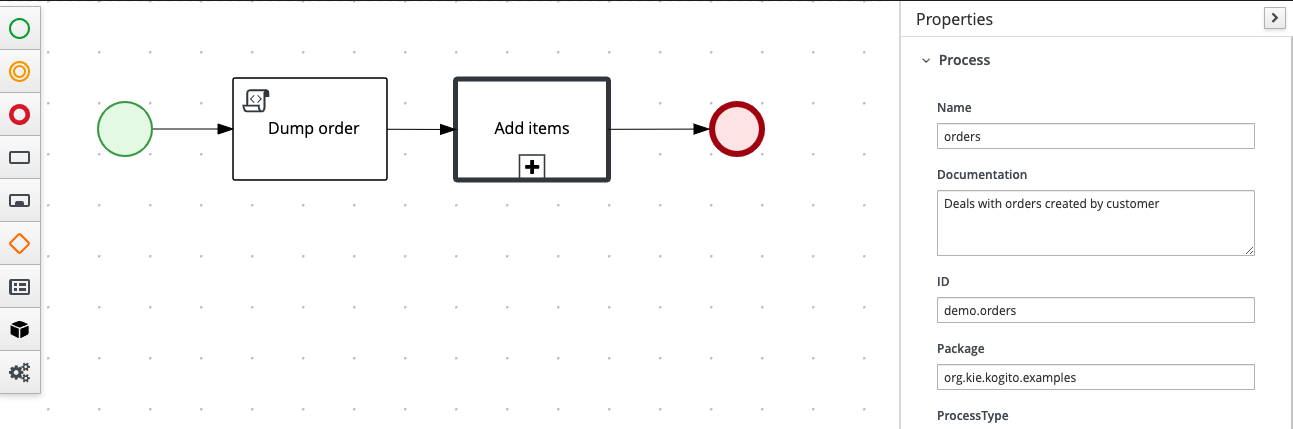

The business process model under test comes from the Process + Quarkus example application, which is a simple process service for ordering items.

In particular, we create new instances of the Order data element using a POST REST request, defined by the orders process.
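As a minimal sketch of what such a request could look like, the snippet below builds (without sending) a POST to the orders endpoint using only the Python standard library. The base URL is a hypothetical placeholder for ROUTE_OF_APPLICATION, and the payload fields (`approver`, `order.orderNumber`, `order.shipped`) are assumptions; the Swagger UI of the deployed service is the authoritative source for the real schema.

```python
import json
import urllib.request

# Hypothetical placeholder: replace with the route of the deployed application.
ROUTE_OF_APPLICATION = "http://localhost:8080"


def build_order_request(order_number: str, approver: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates one Order instance."""
    # Assumed payload shape; verify it against the service's Swagger UI.
    payload = {
        "approver": approver,
        "order": {"orderNumber": order_number, "shipped": False},
    }
    return urllib.request.Request(
        url=f"{ROUTE_OF_APPLICATION}/orders",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


request = build_order_request("12345", "john")
# To actually send it against a running service:
#   urllib.request.urlopen(request)
```

Building the request separately from sending it keeps the sketch usable without a running service.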

To reduce the number of external dependencies and simplify customization, the original project has been copied to test/process-quarkus-example, starting from the 1.11.0.Final branch.

- Strategy 1 (ccu): maintain a constant number of concurrent users for a given duration. Gatling: constantConcurrentUsers
- Strategy 2 (ru): inject a given number of users with a linear ramp over a given duration. Gatling: rampUsers
- Strategy 3 (cups): inject users at a constant rate, defined in users per second, for a given duration. Gatling: constantUsersPerSec
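To make the load implied by the two open-model strategies concrete, the following sketch works through the arithmetic in plain Python (it does not use Gatling itself). It assumes that a linear ramp spreads user arrivals evenly over the window, and that the users figure of a cups run is the per-second rate; the example numbers are taken from the run labels 1-50000-10 (ru) and 1-700-5 (cups) in the results. The ccu strategy is a closed model, so its total number of started users depends on response times and is not computed here.

```python
def ramp_arrival_rate(total_users: int, duration_s: float) -> float:
    """Average arrival rate (users/s) when total_users are ramped linearly over duration_s."""
    return total_users / duration_s


def constant_rate_total(users_per_s: float, duration_s: float) -> float:
    """Total number of users started when injecting at a fixed rate for duration_s."""
    return users_per_s * duration_s


# ru: 50000 users ramped over 10 minutes
ru_rate = ramp_arrival_rate(50_000, 10 * 60)

# cups: 700 users per second for 5 minutes (assumed meaning of the label)
cups_total = constant_rate_total(700, 5 * 60)

print(f"ru: {ru_rate:.1f} users/s on average, cups: {cups_total:.0f} users in total")
```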

The strategies are executed with varying numbers of pods, numbers of users, and test durations. These parameters can be found in the Run column in the Results section.

Note: The Run column has the format pods-users-time, with the time in minutes.
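When post-processing results, a run label can be split back into its three parameters. The helper below is a small illustrative sketch; the names `Run` and `parse_run` are hypothetical, and it also tolerates the parenthesized identifier that follows some labels in the results tables.

```python
from typing import NamedTuple


class Run(NamedTuple):
    pods: int
    users: int
    minutes: int


def parse_run(label: str) -> Run:
    """Parse a 'pods-users-time' label, ignoring any trailing '(identifier)'."""
    # Drop an optional suffix such as " (20210821073521681)".
    core = label.split("(")[0].strip()
    pods, users, minutes = (int(part) for part in core.split("-"))
    return Run(pods, users, minutes)


run = parse_run("3-100-15")
print(run.pods, run.users, run.minutes)
```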

A warmup run must be executed as a separate test before each test against a newly created application pod.

Strategy 1 - ccu

| Run | Latency 95% PCT (ms) | Latency 99% PCT (ms) | Av. Response (ms) | Peak Response (ms) | Error Rate (%) | Throughput (transactions / s - TPS) | Runtime memory (MiB / pod) | CPU Usage (m / pod) | Runtime startup (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1-100-5 | 1253 | 1948 | 617 | 6782 | 0 | 160 | 1107 | 768 | |
| 2-100-5 | 644 | 1414 | 518 | 3557 | 0 | 191 | 1287 (639,648) | 598 (275,323) | |
| 3-100-5 | 1004 | 1648 | 557 | 4122 | 0 | 178 | 1385 (501,512,372) | 928 (268,258,402) | |
| 1-100-15 | 1438 | 2021 | 636 | 7770 | 0 | 156 | 3096 | 423 | |
| 3-100-15 | 734 | 1499 | 534 | 5406 | 0 | 186 | 3668 (1416,1038,1432) | 973 (271,437,265) | |

Strategy 2 - ru

| Run | Latency 95% PCT (ms) | Latency 99% PCT (ms) | Av. Response (ms) | Peak Response (ms) | Error Rate (%) | Throughput (transactions / s - TPS) | Runtime memory (MiB / pod) | CPU Usage (m / pod) | Runtime startup (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1-50000-10 | 986 | 5690 | 608 | 8454 | 0 | 83 | 1216 | 586 | |

Strategy 3 - cups

| Run | Latency 95% PCT (ms) | Latency 99% PCT (ms) | Av. Response (ms) | Peak Response (ms) | Error Rate (%) | Throughput (transactions / s - TPS) | Runtime memory (MiB / pod) | CPU Usage (m / pod) | Runtime startup (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1-700-5 | 25000 | 36900 | 12000 | 84000 | 46 | 648 | - | - | |
| 3-700-5 | 61000 | 63000 | 20000 | 68000 | 92 | 600 | - | - | |

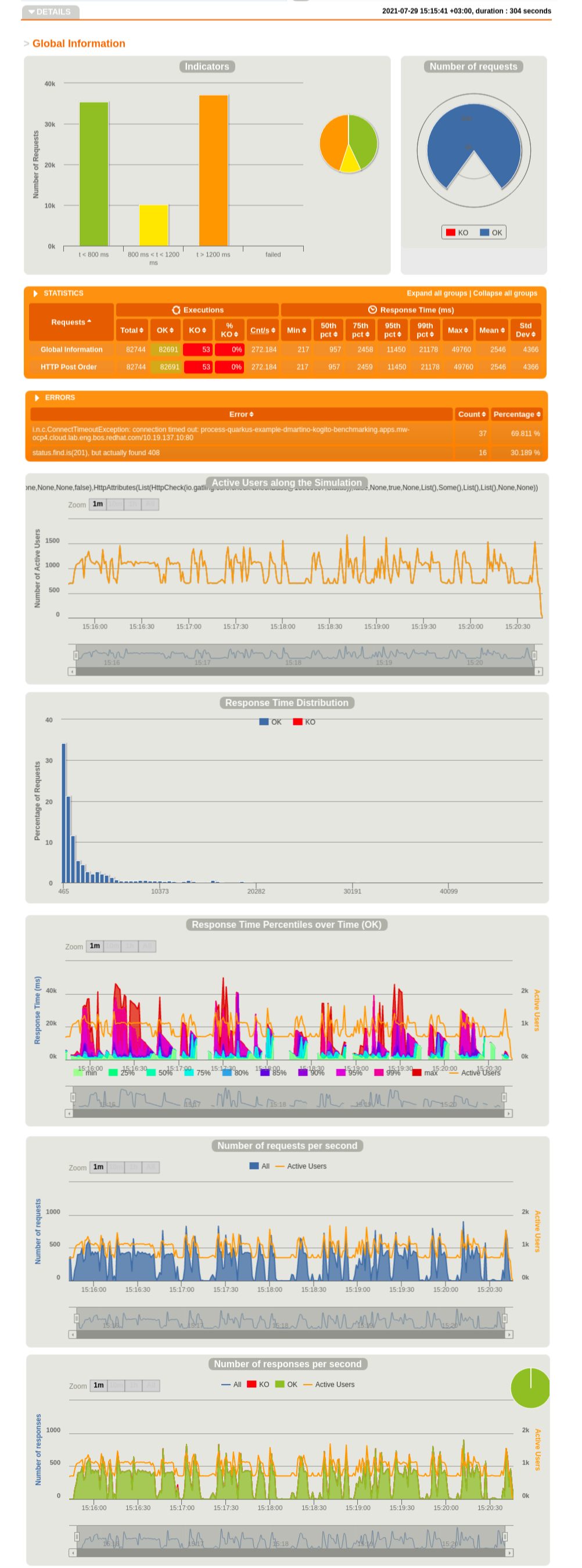

Gatling in same OCP project - strategy cups - 1 pod - 15 minutes test runs

| Run | Latency 95% PCT (ms) | Latency 99% PCT (ms) | Av. Response (ms) | Peak Response (ms) | Error Rate (%) | Throughput (transactions / s - TPS) | Runtime memory (MiB / pod) | CPU Usage (m / pod) | Runtime startup (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1-20-15 (20210821073521681) | 100 | 376 | 32 | 1183 | 0 | 20 | | | |
| 1-20-15 (20210821075409461) | 85 | 360 | 30 | 1309 | 0 | 20 | | | |
| 1-30-15 (20210821081717675) | 125 | 451 | 38 | 2098 | 0 | 30 | | | |
| 1-50-15 (20210821084740136) | 30004 | 33084 | 2214 | 45681 | 24 | 50 | | | |
| 1-40-15 (20210821090817631) | 200 | 579 | 47 | 2228 | 0 | 40 | | | |
| 1-50-15 (20210821111043247) | 375 | 1009 | 69 | 2372 | 0 | 50 | | | |
| 1-60-15 (20210823050706449) | 28355 | 30004 | 8742 | 30036 | 3 | 58 | | | |
| 1-50-15 (20210823053549281) | 30004 | 30006 | 4161 | 30047 | 20 | 49 | | | |
| 1-50-15 (20210824095741895) | 22026 | 29282 | 3738 | 30015 | 2 | 49 | | | |

- Fetch Orders from REST API: the URL is ROUTE_OF_APPLICATION/orders
- Access Swagger UI: the URL is ROUTE_OF_APPLICATION/swagger-ui
- Every time a new Order is defined, the related Pod in the OCP platform will log a message like:
  Order has been created Order[12345] with assigned approver JOHN