Xu He1 · Qiaochu Huang1 · Zhensong Zhang2 · Zhiwei Lin1 · Zhiyong Wu1,4 · Sicheng Yang1 · Minglei Li3 · Zhiyi Chen3 · Songcen Xu2 · Xiaofei Wu2

1Shenzhen International Graduate School, Tsinghua University 2Huawei Noah’s Ark Lab

3Huawei Cloud Computing Technologies Co., Ltd 4The Chinese University of Hong Kong

- [2024.05.06] Release training and inference code with instructions to preprocess the PATS dataset.

- [2024.03.25] Release paper.

- Release data preprocessing code.

- Release inference code.

- Release pretrained weights.

- Release training code.

- Release code about evaluation metrics.

- Release the presentation video.

We recommend Python >= 3.7 and CUDA 11.7. Other compatible versions may also work.

conda create -n MDD python=3.7

conda activate MDD

pip install -r requirements.txt

We have tested our code on NVIDIA A10, NVIDIA A100, and NVIDIA GeForce RTX 4090 GPUs.

Download our trained weights including motion_decoupling.pth.tar and motion_diffusion.pt from Baidu Netdisk. Put them in the inference/ckpt folder.

Download the WavLM Large model and put it into the inference/data/wavlm folder.
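Before running inference, it may help to confirm that the downloads ended up where the instructions above say they should. The following is a minimal sketch, not part of the repository: the function name `missing_inference_assets` is hypothetical, the two checkpoint names are taken from the text above, and because the WavLM file name is not stated there, the sketch only checks that the `wavlm` folder is non-empty.

```python
from pathlib import Path

# Checkpoint names as given in the instructions above.
EXPECTED_CKPTS = ("motion_decoupling.pth.tar", "motion_diffusion.pt")


def missing_inference_assets(repo_root="."):
    """Return a list of human-readable problems; an empty list means all good."""
    root = Path(repo_root) / "inference"
    problems = []
    for name in EXPECTED_CKPTS:
        ckpt_path = root / "ckpt" / name
        if not ckpt_path.is_file():
            problems.append(f"missing checkpoint: {ckpt_path}")
    # Assumption: any file inside the folder counts as the WavLM download.
    wavlm_dir = root / "data" / "wavlm"
    if not wavlm_dir.is_dir() or not any(wavlm_dir.iterdir()):
        problems.append(f"WavLM folder is missing or empty: {wavlm_dir}")
    return problems
```

Calling `missing_inference_assets()` from the repository root returns one message per missing item, so an empty list suggests the folders are populated as described.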

Now, get started with the following code:

cd inference

CUDA_VISIBLE_DEVICES=0 python inference.py --wav_file ./assets/001.wav --init_frame ./assets/001.png --use_motion_selection

Due to copyright considerations, we are unable to directly provide the preprocessed data subset mentioned in our paper. Instead, we provide the filtered interval ids and preparation instructions.

To get started, please download the meta file cmu_intervals_df.csv provided by PATS (you can find it in any of the zip files) and put it in the data-preparation folder. Then run the following code to prepare the data.

cd data-preparation

bash prepare_data.sh

After running the above code, you will get the following folder structure containing the preprocessed data:

|--- data-preparation

| |--- data

| | |--- img

| | | |--- train

| | | | |--- chemistry#99999.mp4

| | | | |--- oliver#88888.mp4

| | | |--- test

| | | | |--- jon#77777.mp4

| | | | |--- seth#66666.mp4

| | |--- audio

| | | |--- chemistry#99999.wav

| | | |--- oliver#88888.wav

| | | |--- jon#77777.wav

| | | |--- seth#66666.wav

Here we use accelerate for distributed training.
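Before launching training, it can be worth checking that the prepared data matches the folder structure shown above, where every video under `img/train` or `img/test` has a `.wav` file with the same name under `audio`. The sketch below is an illustration only: the function name `unpaired_clips` is hypothetical, and the one-to-one pairing rule is an assumption inferred from the example file names rather than something the repository documents.

```python
from pathlib import Path


def unpaired_clips(data_dir="data-preparation/data"):
    """Return sorted clip names that have a video but no audio, or vice versa."""
    data = Path(data_dir)
    # Collect clip names (file name without extension) from both splits.
    videos = {
        path.stem
        for split in ("train", "test")
        for path in (data / "img" / split).glob("*.mp4")
    }
    audios = {path.stem for path in (data / "audio").glob("*.wav")}
    # Symmetric difference: names present on one side only.
    return sorted(videos ^ audios)
```

An empty result from `unpaired_clips()` (run from the repository root) indicates that every clip has both a video and an audio file.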

Change into the stage1 folder:

cd stage1

Then run the following code to train the motion decoupling module:

accelerate launch run.py --config config/stage1.yaml --mode train

Checkpoints will be saved in the log folder as stage1.pth.tar, which is then used to extract the keypoint features:

CUDA_VISIBLE_DEVICES=0 python run_extraction.py --config config/stage1.yaml --mode extraction --checkpoint log/stage1.pth.tar --device_ids 0 --train

CUDA_VISIBLE_DEVICES=0 python run_extraction.py --config config/stage1.yaml --mode extraction --checkpoint log/stage1.pth.tar --device_ids 0 --test

The extracted motion features will be saved in the feature folder.

Change into the stage2 folder:

cd ../stage2

Download the WavLM Large model and put it into the data/wavlm folder.

Then slice and preprocess the data:

cd data

python create_dataset_gesture.py --stride 0.4 --length 3.2 --keypoint_folder ../stage1/feature --wav_folder ../data-preparation/data/audio --extract-baseline --extract-wavlm
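The `--stride 0.4 --length 3.2` flags in the command above suggest that each clip is cut into overlapping fixed-length windows. The sketch below illustrates that idea under two assumptions that are not confirmed by the text: both values are in seconds, and only windows that fit entirely inside the clip are kept. The function name `window_starts` is hypothetical, and the actual slicing logic in `create_dataset_gesture.py` may differ.

```python
def window_starts(duration, length=3.2, stride=0.4):
    """Start times (in seconds) of all full-length windows inside `duration`."""
    if duration < length:
        return []
    # Work in integer milliseconds to avoid floating-point drift
    # when stepping by a non-integer stride.
    dur_ms = round(duration * 1000)
    len_ms = round(length * 1000)
    stride_ms = round(stride * 1000)
    return [start / 1000 for start in range(0, dur_ms - len_ms + 1, stride_ms)]
```

Under these assumptions, a 4-second clip yields three windows starting at 0.0, 0.4, and 0.8 seconds, and a clip shorter than 3.2 seconds yields none.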

cd ..

Run the following code to train the latent motion diffusion module:

accelerate launch train.py

Change into the stage3 folder:

cd ../stage3

Download mobile_sam.pt provided by MobileSAM and put it in the pretrained_weights folder. Then extract the hand bounding boxes used for the weighted loss (only the training set is needed):

python get_bbox.py --img_dir ../data-preparation/data/img/train

Now you can train the refinement network:

accelerate launch run.py --config config/stage3.yaml --mode train --tps_checkpoint ../stage1/log/stage1.pth.tar

If you find our work useful, please consider citing:

@article{he2024co,

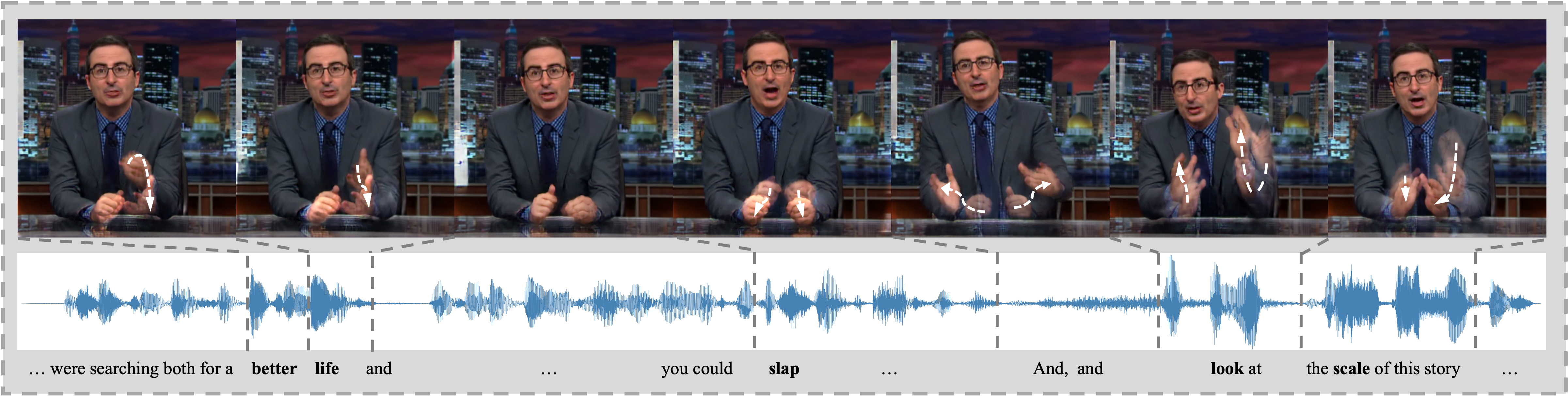

title={Co-Speech Gesture Video Generation via Motion-Decoupled Diffusion Model},

author={He, Xu and Huang, Qiaochu and Zhang, Zhensong and Lin, Zhiwei and Wu, Zhiyong and Yang, Sicheng and Li, Minglei and Chen, Zhiyi and Xu, Songcen and Wu, Xiaofei},

journal={arXiv preprint arXiv:2404.01862},

year={2024}

}

Our code follows several excellent repositories. We appreciate them for making their code publicly available.