This repo is a companion to the paper

Tensor Programs II: Neural Tangent Kernel for Any Architecture

Greg Yang

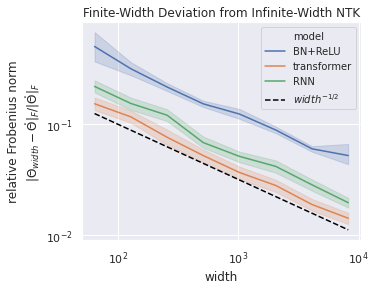

which shows that the infinite-width limit of a neural network of any architecture is well-defined, in the technical sense that the neural tangent kernel (NTK) of any randomly initialized neural network converges in the large-width limit, and that this limit can be computed. We explicitly compute several such infinite-width limits in this repo.
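To make the convergence statement concrete, here is a minimal sketch for the simplest possible case, which is not one of the architectures in this repo: a one-hidden-layer ReLU network `f(x) = (1/sqrt(n)) * sum_i v_i * relu(w_i . x)` with standard Gaussian weights and unit-norm inputs. The parametrization, the function names `empirical_ntk` and `limit_ntk`, and the choice of inputs are all illustrative assumptions. The closed form used for the limit is the standard arc-cosine expression for ReLU, not code taken from the notebooks.

```python
import math
import random


def empirical_ntk(x, y, width, rng):
    """Finite-width NTK of f(x) = (1/sqrt(width)) * sum_i v_i * relu(w_i . x)
    at random initialization (w_i and v_i are standard Gaussian)."""
    xy = sum(a * b for a, b in zip(x, y))
    total = 0.0
    for _ in range(width):
        w = [rng.gauss(0.0, 1.0) for _ in x]
        v = rng.gauss(0.0, 1.0)
        ux = sum(a * b for a, b in zip(w, x))
        uy = sum(a * b for a, b in zip(w, y))
        # Gradients w.r.t. the output weight v_i contribute relu(ux) * relu(uy).
        total += max(ux, 0.0) * max(uy, 0.0)
        # Gradients w.r.t. the hidden weights w_i contribute
        # v_i^2 * relu'(ux) * relu'(uy) * (x . y).
        if ux > 0.0 and uy > 0.0:
            total += v * v * xy
    return total / width


def limit_ntk(x, y):
    """Infinite-width limit of the kernel above, assuming unit-norm x and y."""
    cos_t = max(-1.0, min(1.0, sum(a * b for a, b in zip(x, y))))
    theta = math.acos(cos_t)
    return (math.sin(theta) + 2.0 * (math.pi - theta) * cos_t) / (2.0 * math.pi)


if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0, 0.0, 0.0]
    y = [0.6, 0.8, 0.0]
    for width in (10, 100, 1000, 10000):
        print(width, empirical_ntk(x, y, width, rng), limit_ntk(x, y))
```

As the width grows, the empirical values should settle around the closed-form limit. The notebooks derive and implement the analogous limits for architectures where no such simple formula is available.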

Despite what the title suggests, this repo does not implement the infinite-width NTK for every architecture, but rather demonstrates the derivation and implementation for a few select advanced architectures. For the NTK of more basic architectures, such as a multi-layer perceptron or a vanilla convolutional neural network, see neural-tangents.

Note: Currently GitHub does not render the notebooks properly. We recommend opening them in Google Colab.

| Architecture | Notebook | Colab |
|---|---|---|
| RNN with avg pooling | Notebook | |
| Transformer | Notebook | |
| Batchnorm+ReLU MLP | Notebook | |

Plot.ipynb also reproduces Figure 1 of the paper.

Related: a wide neural network of any architecture is distributed like a Gaussian process, as proved in the previous paper of this series. Derivations and implementations of this Gaussian process for RNN, transformer, batchnorm, and GRU are provided here.