We present the network definition and weights for our second-place solution in the CVPR 2018 DeepGlobe Building Extraction Challenge.

Vladimir Iglovikov, Selim Seferbekov, Alexandr Buslaev, Alexey Shvets

If you find this work useful for your publications, please consider citing:

@InProceedings{Iglovikov_2018_CVPR_Workshops,
  author = {Iglovikov, Vladimir and Seferbekov, Selim and Buslaev, Alexander and Shvets, Alexey},
  title = {TernausNetV2: Fully Convolutional Network for Instance Segmentation},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops},
  month = {June},
  year = {2018}
}

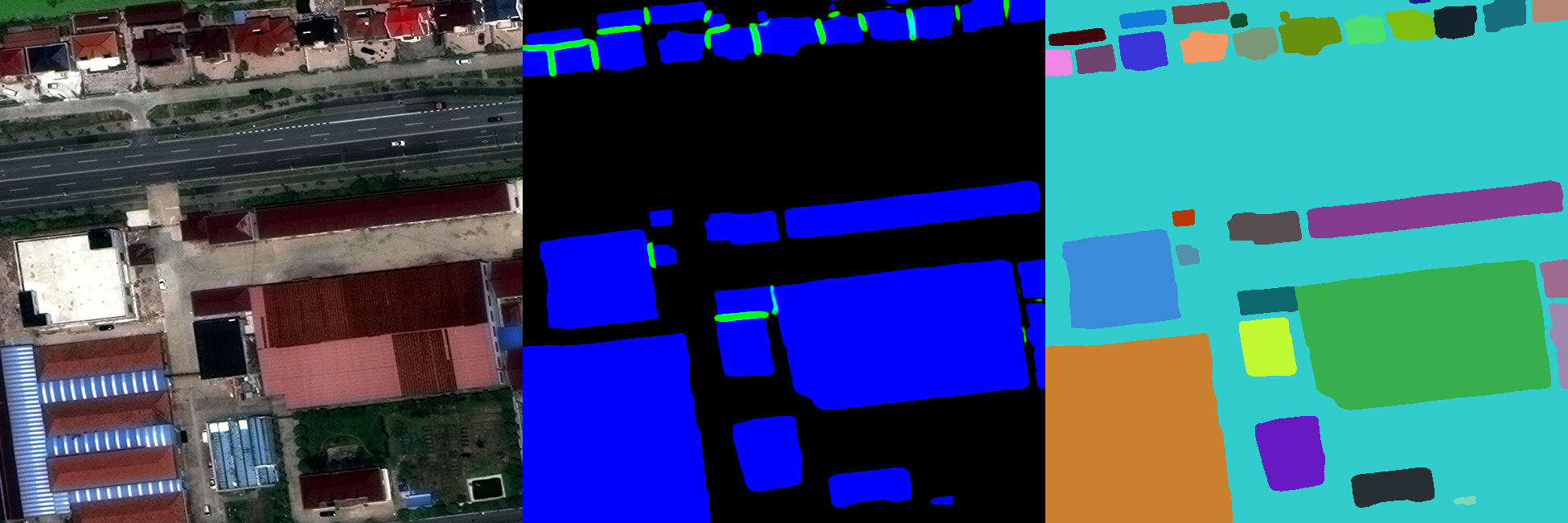

Automatic building detection in urban areas is an important task that creates new opportunities for large-scale urban planning and population monitoring. In the CVPR 2018 DeepGlobe Building Extraction Challenge, participants were asked to create algorithms that perform binary instance segmentation of building footprints from satellite imagery. Our team finished second, and in this work we share a description of our approach, the network weights, and code that is sufficient for inference.

The training data for the building detection subchallenge come from the SpaceNet dataset. The dataset uses satellite imagery with 30 cm resolution collected from DigitalGlobe's WorldView-3 satellite. Each image is 650x650 pixels and covers 195x195 m² of the Earth's surface. Moreover, each region consists of high-resolution RGB, panchromatic, and 8-channel low-resolution multi-spectral images. The satellite data come from four cities, Vegas, Paris, Shanghai, and Khartoum, with different coverage: (3831, 1148, 4582, 1012) images in the train sets and (1282, 381, 1528, 336) images in the test sets, respectively.
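The stated footprint follows directly from the tile size and the ground resolution, and the per-city counts can be totalled the same way. A quick check in Python (the variable names are ours and are not part of the challenge tooling):

```python
# Ground footprint of one tile: side length in pixels times metres per pixel.
TILE_SIZE_PX = 650
RESOLUTION_M = 0.30  # 30 cm per pixel

side_m = TILE_SIZE_PX * RESOLUTION_M

# Image counts per city, copied from the paragraph above.
train_counts = {"Vegas": 3831, "Paris": 1148, "Shanghai": 4582, "Khartoum": 1012}
test_counts = {"Vegas": 1282, "Paris": 381, "Shanghai": 1528, "Khartoum": 336}

total_train = sum(train_counts.values())
total_test = sum(test_counts.values())

print(f"tile covers {side_m:.0f} x {side_m:.0f} m")
print(f"train images: {total_train}, test images: {total_test}")
```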

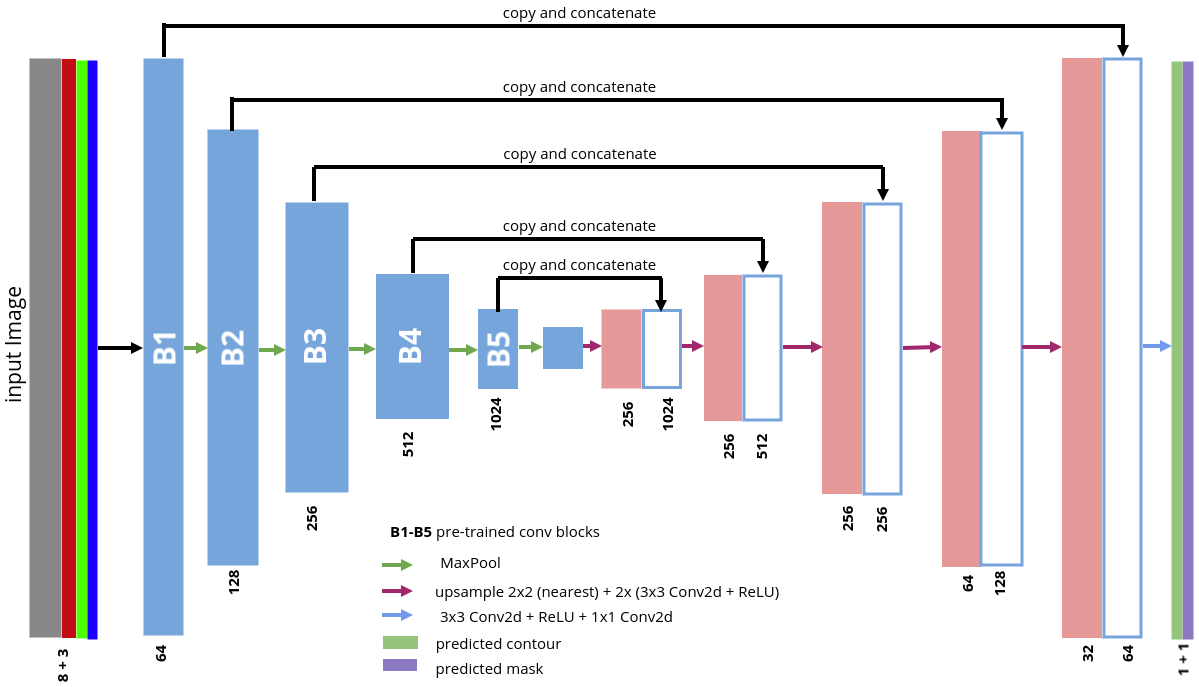

The original TernausNet was extended in a few ways:

- The encoder was replaced with WideResNet-38 with In-Place Activated BatchNorm.

- The input to the network was extended to work with 11 input channels: three for RGB and eight for multispectral data.
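As an illustration of the 11-channel input, the sketch below stacks an RGB tile and an 8-channel multispectral tile into a single channels-first array. It is not taken from our inference code: the function name `stack_channels` is hypothetical, the data are random placeholders, and the sketch assumes the low-resolution multispectral bands have already been resampled to the RGB grid. Any normalization is left out.

```python
import numpy as np


def stack_channels(rgb, multispectral):
    """Concatenate an (H, W, 3) RGB tile and an (H, W, 8) multispectral tile
    into one channels-first (11, H, W) float32 array."""
    if rgb.shape[:2] != multispectral.shape[:2]:
        raise ValueError("both tiles must share the same height and width")
    if rgb.shape[2] != 3 or multispectral.shape[2] != 8:
        raise ValueError("expected 3 RGB and 8 multispectral channels")
    stacked = np.concatenate([rgb, multispectral], axis=2).astype(np.float32)
    # Move channels first, the layout PyTorch convolutions expect.
    return np.transpose(stacked, (2, 0, 1))


# Random placeholder data standing in for one 650x650 tile.
rng = np.random.RandomState(0)
rgb = rng.rand(650, 650, 3)
ms = rng.rand(650, 650, 8)

x = stack_channels(rgb, ms)
print(x.shape)  # (11, 650, 650)
```

The first three channels of `x` hold RGB and the remaining eight hold the multispectral bands, matching the order given above.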

To make the network perform instance segmentation, we used the idea proposed and successfully applied by Alexandr Buslaev, Selim Seferbekov, and Victor Durnov in their winning solutions to the Urban 3D and Data Science Bowl 2018 challenges.

The output of the network was modified to predict two binary masks: one that separates building from non-building pixels, and one that marks areas of the image where different objects touch or are very close to each other. These predicted masks are combined and used as input to the watershed transform.
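The way the two masks can be combined is sketched below. This is not our post-processing code: to stay dependency-light it uses breadth-first seeded region growing as a simplified stand-in for the watershed transform, and the function name `label_instances` and the 0.5 threshold are assumptions. Seeds are building pixels that lie outside the touching-border mask. Each connected seed region gets its own label, and the labels are then grown back over the remaining building pixels.

```python
from collections import deque

import numpy as np

NEIGHBOURS = ((-1, 0), (1, 0), (0, -1), (0, 1))  # 4-connectivity


def label_instances(building_prob, touching_prob, threshold=0.5):
    """Split a building mask into instances using the touching-border mask."""
    building = building_prob > threshold
    # Seeds: building pixels that are not on a touching border.
    seeds = building & (touching_prob <= threshold)
    labels = np.zeros(building.shape, dtype=np.int32)
    height, width = building.shape
    frontier = deque()
    next_label = 0

    # Pass 1: flood-fill each connected seed region with its own label.
    for r, c in zip(*np.nonzero(seeds)):
        if labels[r, c]:
            continue
        next_label += 1
        labels[r, c] = next_label
        stack = [(r, c)]
        while stack:
            y, x = stack.pop()
            frontier.append((y, x))
            for dy, dx in NEIGHBOURS:
                ny, nx = y + dy, x + dx
                if (0 <= ny < height and 0 <= nx < width
                        and seeds[ny, nx] and not labels[ny, nx]):
                    labels[ny, nx] = next_label
                    stack.append((ny, nx))

    # Pass 2: grow all seeds outward in lockstep over unlabelled building pixels.
    while frontier:
        y, x = frontier.popleft()
        for dy, dx in NEIGHBOURS:
            ny, nx = y + dy, x + dx
            if (0 <= ny < height and 0 <= nx < width
                    and building[ny, nx] and not labels[ny, nx]):
                labels[ny, nx] = labels[y, x]
                frontier.append((ny, nx))
    return labels


# Toy example: one 3x7 building blob with a touching border down the middle.
building = np.zeros((5, 9))
building[1:4, 1:8] = 0.9
touching = np.zeros((5, 9))
touching[1:4, 4] = 0.9

labels = label_instances(building, touching)
print(int(labels.max()))  # 2
```

In the toy example the single blob is split into two instances, and the border pixels are assigned to whichever seed reaches them first. A library watershed implementation would typically replace the second pass in practice.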

The table below reports our results on the public and private leaderboards, measured with the metric used by the organizers of the CVPR 2018 DeepGlobe Building Extraction Challenge.

Results per city

| City | Public Leaderboard | Private Leaderboard |
|---|---|---|
| Vegas | 0.891 | 0.892 |
| Paris | 0.781 | 0.756 |
| Shanghai | 0.680 | 0.687 |
| Khartoum | 0.603 | 0.608 |
| Average | 0.739 | 0.736 |

- Python 3.6

- PyTorch 0.4

- numpy 1.14.0

- opencv-python 3.3.0.10

Network weights

- To start using our network and weights, follow the demonstration example in demo.ipynb.