Inference Benchmark

Get the most out of your models with the inference benchmark tool.

What is it?

Inference benchmark provides a standard way to measure the performance of inference workloads, so you can evaluate and optimize them.
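As a rough illustration of what "measuring the performance of an inference workload" usually involves, the sketch below times a callable and reports throughput plus median and tail latency. It is a minimal, hypothetical example and not how this tool measures anything: `benchmark` and `infer` are illustrative names, and it sends requests one at a time, whereas real serving benchmarks typically drive concurrent load.

```python
import statistics
import time
from typing import Callable, Dict, List


def benchmark(infer: Callable[[], object], num_requests: int = 100) -> Dict[str, float]:
    """Hypothetical helper: call `infer` sequentially and summarize latency."""
    if num_requests < 2:
        raise ValueError("need at least 2 requests to compute percentiles")
    latencies: List[float] = []
    start = time.perf_counter()
    for _ in range(num_requests):
        t0 = time.perf_counter()
        infer()  # one inference request (assumed to block until it completes)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    # 99 cut points; index 98 is the 99th percentile.
    cuts = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "throughput_rps": num_requests / elapsed,
        "p50_ms": statistics.median(latencies) * 1000,
        "p99_ms": cuts[98] * 1000,
    }


if __name__ == "__main__":
    # Stand-in "model" that takes about 1 ms per request.
    print(benchmark(lambda: time.sleep(0.001), num_requests=50))
```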

Results

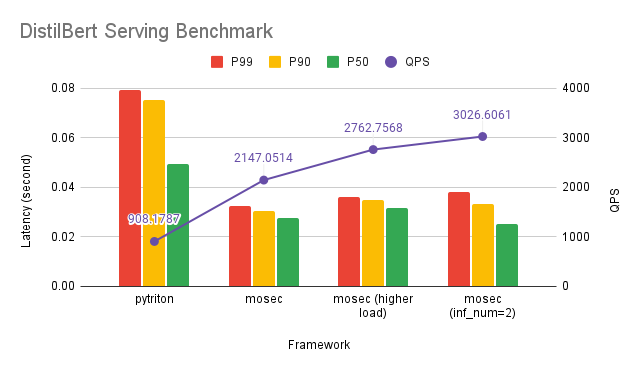

BERT

We benchmarked PyTriton (Triton Inference Server) and mosec with BERT. For both frameworks, we enabled dynamic batching with a max batch size of 32 and a max wait time of 10 ms. Please check out the results for more details.
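To make the two batching parameters concrete, here is a minimal sketch of one common dynamic-batching policy: wait for the first request, then keep collecting until the batch holds 32 requests or 10 ms have passed, whichever comes first. This is an illustrative assumption, not the actual implementation in PyTriton or mosec; `collect_batch` is a hypothetical name, and the exact moment each framework starts its wait timer may differ.

```python
import queue
import time
from typing import Any, List


def collect_batch(
    requests: "queue.Queue[Any]",
    max_batch_size: int = 32,
    max_wait_s: float = 0.010,
) -> List[Any]:
    """Hypothetical batcher: return up to `max_batch_size` requests,
    waiting at most `max_wait_s` after the first one arrives."""
    batch = [requests.get()]  # block until at least one request is available
    deadline = time.monotonic() + max_wait_s
    while len(batch) < max_batch_size:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            break  # wait budget spent: ship a partial batch
        try:
            batch.append(requests.get(timeout=remaining))
        except queue.Empty:
            break  # no more requests arrived before the deadline
    return batch


if __name__ == "__main__":
    q: "queue.Queue[int]" = queue.Queue()
    for i in range(40):
        q.put(i)
    print(len(collect_batch(q)))  # 32: batch filled before the timer expired
    print(len(collect_batch(q)))  # 8: partial batch after waiting ~10 ms
```

The trade-off the two knobs control: a larger batch size improves GPU utilization and throughput, while the wait time caps how much extra latency a request can pay while the batch fills.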

More results with different models on different serving frameworks are coming soon.