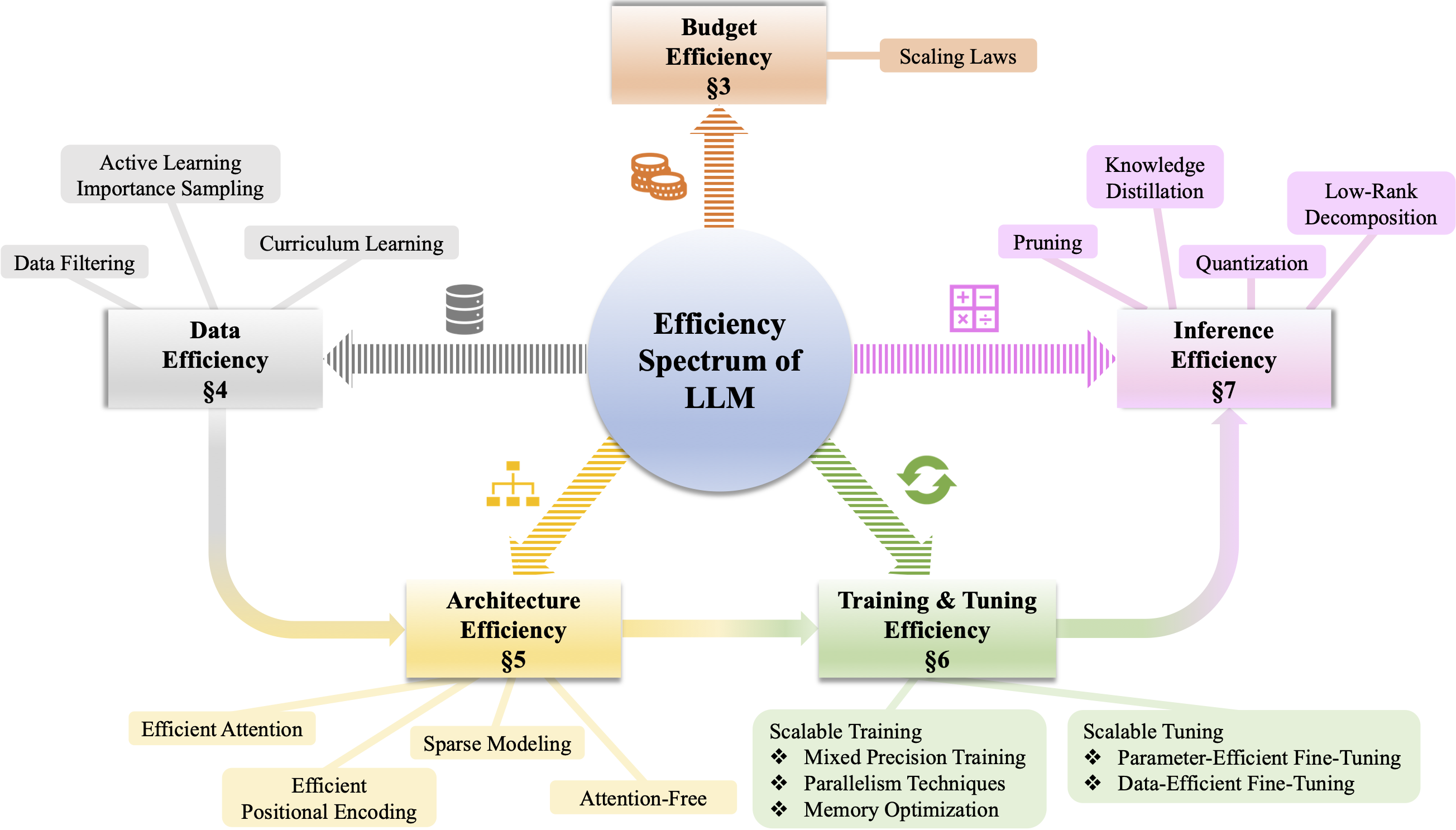

The rapid growth of Large Language Models (LLMs) has been a driving force in transforming various domains, reshaping the landscape of artificial general intelligence. However, the increasing computational and memory demands of these models present substantial challenges, hindering both academic research and practical applications. To address these issues, a wide array of methods, spanning both algorithmic and hardware solutions, has been developed to enhance the efficiency of LLMs. This survey delivers a comprehensive review of algorithmic advancements aimed at improving LLM efficiency. Unlike other surveys that typically focus on specific areas such as training or model compression, this paper examines the multiple dimensions of efficiency essential for the end-to-end algorithmic development of LLMs. Specifically, it covers efficiency-related topics including scaling laws, data utilization, architectural innovations, training and tuning strategies, and inference techniques. This paper aims to serve as a valuable resource for researchers and practitioners, laying the groundwork for future innovations in this critical research area.

Paper link: [The Efficiency Spectrum of Large Language Models: An Algorithmic Survey](https://arxiv.org/abs/2312.00678)

- 💪 [12/07/2023] We are working on revising our paper and updating our repository to include more recently published studies. An update is planned very soon.
- 🔥 [12/01/2023] The first version of the paper has been released on arXiv.

```bibtex
@article{ding2023efficiency,
  title={The Efficiency Spectrum of Large Language Models: An Algorithmic Survey},
  author={Ding, Tianyu and Chen, Tianyi and Zhu, Haidong and Jiang, Jiachen and Zhong, Yiqi and Zhou, Jinxin and Wang, Guangzhi and Zhu, Zhihui and Zharkov, Ilya and Liang, Luming},
  journal={arXiv preprint arXiv:2312.00678},
  year={2023}
}
```

If you have any questions or suggestions, please contact us via:

- Email: tianyuding@microsoft.com