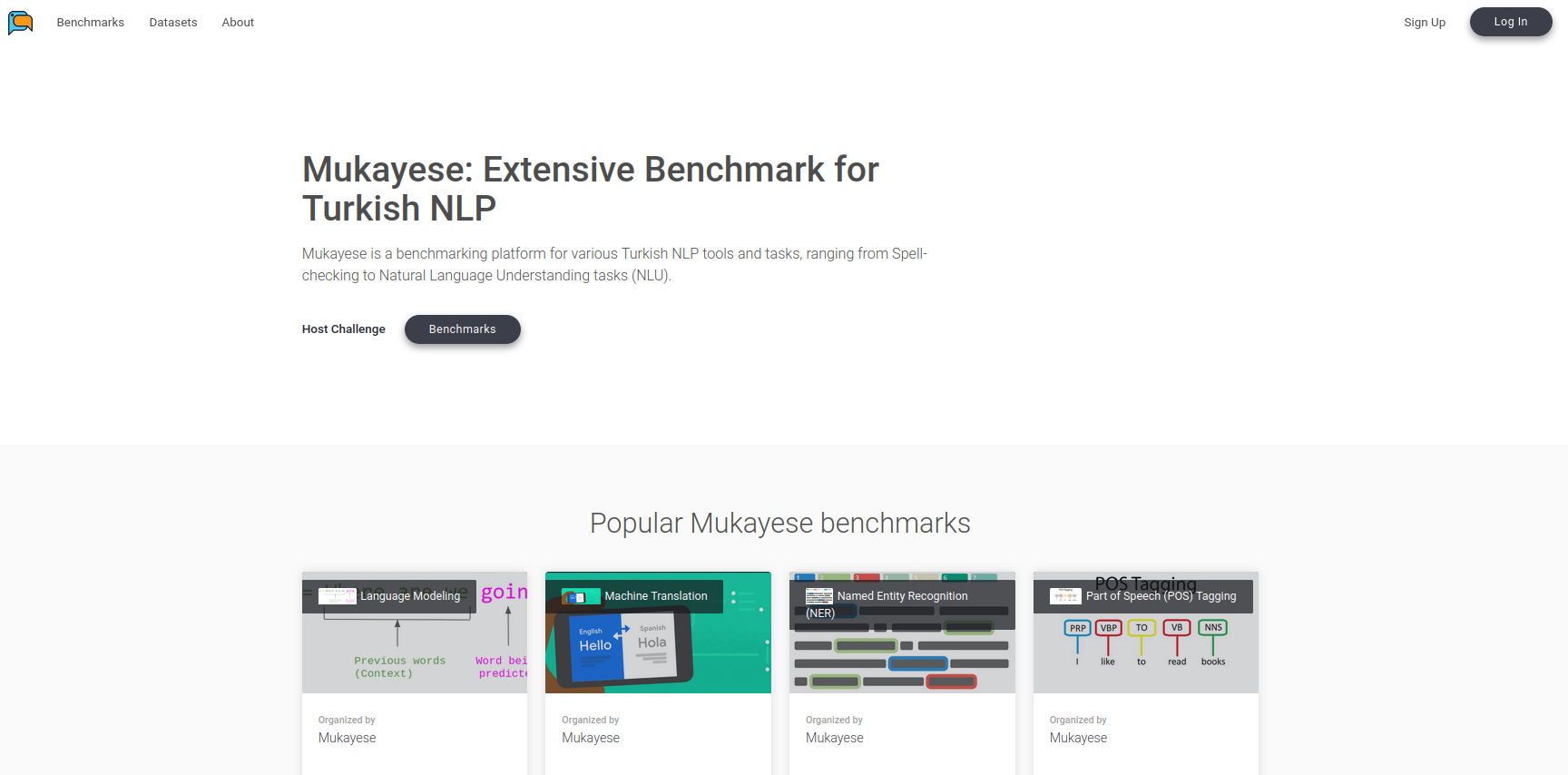

Mukayese (mukayese.tdd.ai) is an all-in-one benchmarking platform, based on the EvalAI project, for various Turkish NLP tools and tasks, ranging from spell-checking to natural language understanding (NLU).

The progress of research in any field depends heavily on previous work. Unfortunately, Turkish NLP datasets and methods are scattered and hard to find. We present Mukayese (the Turkish word for "comparison"), an all-in-one benchmarking platform for Turkish NLP tools. Each listed NLP task has a leaderboard, together with the relevant models, their implementations, and the training/testing datasets.

With Mukayese, researchers of Turkish NLP will be able to:

- Compare the performance of existing methods in leaderboards.

- Access existing implementations of NLP baselines.

- Evaluate their own methods on the relevant test datasets.

- Submit their own work to be listed in our leaderboards.

The most important goal of Mukayese is to standardize the comparison and evaluation of Turkish natural language processing methods. Because no benchmarking platform exists, Turkish NLP researchers struggle to compare their models with existing ones. Mukayese addresses several problems behind this:

- Not all datasets in the literature have specified train/validation/test splits or annotated test sets. As a result, a researcher reviewing the literature must double-check the results reported in a publication to make sure the evaluation was done with the same method that researcher uses. Furthermore, not all reported performance values are correct; some may have been corrupted by (probably unintentional) mistakes. We solve this problem by evaluating models on datasets from different distributions whose test-split annotations are not made public. To ensure fairness in leaderboard listings, we evaluate the models with open-source scripts and disclose the versions and settings of the libraries used.

- In many papers, authors do not include open-source implementations of their work. This prevents researchers from analysing the models and gaining a deeper understanding of the proposed method. Moreover, unpublished models cannot be used for purposes such as fine-tuning or retraining with a different set of hyperparameters. We address this problem by labeling submissions that provide an open-source implementation as "verified". As the TDD Team, we test the submitted open-source implementation, have different researchers review it from an unbiased perspective, and require it to be published in an easy-to-use manner.

- Benchmarking systems like GLUE and SuperGLUE provide a way for researchers to test a model they developed on an extensive set of tasks. We aim to do a better job with Mukayese by including more NLP tasks.
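The evaluation approach described in the first item above (private test annotations, an open scoring script, disclosed environment details) can be sketched roughly as follows. This is a minimal illustration and not Mukayese's actual evaluation script: the function name `score_submission`, the choice of accuracy as the metric, and the fields of the returned report are all assumptions made for the example.

```python
import platform


def score_submission(predictions, hidden_labels):
    """Score predictions against private test labels (hypothetical helper).

    The labels stay on the server; submitters only see the returned report.
    """
    if len(predictions) != len(hidden_labels):
        raise ValueError("prediction count does not match the test set size")
    if not hidden_labels:
        raise ValueError("test set is empty")

    # Count exact matches between each prediction and its gold label.
    correct = sum(p == g for p, g in zip(predictions, hidden_labels))

    return {
        "accuracy": correct / len(hidden_labels),
        "num_examples": len(hidden_labels),
        # Report the interpreter version so the score can be reproduced.
        "python_version": platform.python_version(),
    }
```

A real task would replace accuracy with its own metric (for example, F1 for NER or BLEU for machine translation) and would also report the versions of the libraries used to compute it.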

Currently, we provide leaderboards for 8 different tasks on X different datasets:

- Spell-checking and Correction - Custom Dataset

- Text Classification - OffensEval

- Language Modeling - trwiki-67 and trnews-64

- Named-Entity Recognition - XTREME and Turkish News NER Dataset

- Machine Translation - OpenSubtitles and MUST-C

- Tokenization - 35M Tweets Tokenization, TrMor2018 Tokenization

- Part-of-speech Tagging - UD-Turkish-BOUN

As part of this project, we created 5 distinct datasets with in-depth documentation. In addition, all the datasets presented by our team in the Turkish Data Depository are published. We also created train/validation/test splits for two datasets:

- Turkish News NER Dataset: The original version of this dataset was released as 5 folds; we created train, dev, and test splits from these folds. For the original dataset, please contact Reyyan Yeniterzi.

- TrMor2018
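Deriving fixed splits from a dataset that was released as folds can be sketched as below. This is only an illustration: the source does not say which folds were assigned to which split, so the default assignment here (last two folds as dev and test, the rest as train) and the helper name `folds_to_splits` are assumptions.

```python
def folds_to_splits(folds, dev_fold=3, test_fold=4):
    """Turn k folds into train/dev/test splits.

    `folds` is a list of lists of examples. The fold-to-split assignment
    is a hypothetical default, not the one used for the published splits.
    """
    if dev_fold == test_fold:
        raise ValueError("dev and test must use different folds")
    for index in (dev_fold, test_fold):
        if not 0 <= index < len(folds):
            raise IndexError(f"fold index {index} is out of range")

    # Every fold that is not held out contributes to the training split.
    train = [
        example
        for i, fold in enumerate(folds)
        if i not in (dev_fold, test_fold)
        for example in fold
    ]
    return {
        "train": train,
        "dev": list(folds[dev_fold]),
        "test": list(folds[test_fold]),
    }
```

Fixing the assignment once and publishing the resulting files means every submission is trained and evaluated on the same partition.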

To start the leaderboards with baselines, we trained 18 distinct models for 8 different tasks. All of the scripts for the pretrained models, along with their details, can be found in this repository we created.

As the TDD team, we developed a state-of-the-art Hunspell-based spell-checker, which is reported alongside performance comparisons with 7 different models: TurkishSpellChecker, zemberek-nlp, zemberek-python, velhasil, hunspell-tr (vdemir), hunspell-tr (hrzafer), and tr-spell.

PhD Magic...

For the Named-Entity Recognition task, we trained two NLP models, a BiLSTM model and Turkish BERT, on two different datasets: XTREME and the Turkish News NER Dataset. The test-set predictions for both datasets are used as baselines in the Named-Entity Recognition challenge.

For machine translation, we trained Fairseq, NPMT, and Tensor2Tensor models on the Turkish-English subsets of 2 different datasets: OpenSubtitles and MUST-C.

Fill in

For the Part-of-speech Tagging task, we trained two NLP models, a BiLSTM model and Turkish BERT, on the UD-Turkish-BOUN dataset. The test-set predictions for this dataset are used as baselines in the Part-of-speech Tagging challenge.

In this section, the future plans of our project are listed.

In addition to the challenges that are always open to submissions, we plan to organise Turkish Natural Language Processing challenges and to let researchers propose their own contests, which will be hosted after approval from our team.

We plan to present the following benchmarks, on which we have started to work, in the future:

- Morphological Analysis - TrMor2018

- Document Classification - TTC-4900, 1150 News and TRT-11

- Question Answering - XQuad and TQuad

- Dependency Parsing - UD-Turkish-BOUN

- Summarization

- Reading Comprehension

Since we require open-source implementations for submissions, we plan to create a library containing the submitted models together with their data loaders, tokenizers, etc., intended for wide use by Turkish Natural Language Processing researchers. The core idea is to gather as many Turkish NLP models as possible in a single library where they can be imported in a few lines of code.
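One common way to make many models importable in a few lines is a name-based registry. The sketch below shows that pattern only; the planned library does not exist yet, so `register_model`, `load_model`, and the toy `WhitespaceTokenizer` are hypothetical names invented for this example.

```python
_REGISTRY = {}


def register_model(name):
    """Class decorator that files a model under a public name (hypothetical API)."""
    def decorator(factory):
        if name in _REGISTRY:
            raise ValueError(f"model {name!r} is already registered")
        _REGISTRY[name] = factory
        return factory
    return decorator


def load_model(name, **kwargs):
    """Instantiate a registered model by name (hypothetical API)."""
    if name not in _REGISTRY:
        raise KeyError(f"unknown model {name!r}; available: {sorted(_REGISTRY)}")
    return _REGISTRY[name](**kwargs)


# Toy stand-in for a submitted component, used only to exercise the registry.
@register_model("whitespace-tokenizer")
class WhitespaceTokenizer:
    def __call__(self, text):
        return text.split()


tokenizer = load_model("whitespace-tokenizer")
tokens = tokenizer("Mukayese bir karşılaştırma platformudur")
```

With this design, each submission only needs to register itself under a unique name, and users never have to know where the implementation lives.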

- Ali Safaya - @alisafaya

- Emirhan Kurtuluş - @ekurtulus

- Arda Göktoğan - @ardofski