This sample application was constructed as an exercise to understand how video data streams can be sent from a UAV quadcopter camera to a web browser. This page provides a good primer on KLV encoded data in STANAG 4609.

Once we can read incoming metadata in the browser, we can do interesting things such as displaying our UAV in Cesium, which is a JavaScript library for creating WebGL globes and time-dynamic content (i.e., a flying quadcopter!).

For this to be compelling, we need to minimize video latency from the video source to the browser. The JSMpeg project does a lot of the heavy lifting for getting the MPEG transport stream to the client and rendering the video (and MP2 audio if present). We extend this project by adding a KLV decoder and a simple rendering of the decoded data as JSON.

TL;DR jump to the demo videos.

JSMpeg comes with a WebSocket server that accepts an MPEG-TS source and serves it via ws to all connecting browsers. JSMpeg then reads this transport stream, passing it as a source to the demuxer, which in turn passes it to the decoder.

We also have a small node webserver that is packaged with Cesium to serve static assets. This server nicely handles CORS requests to other domains (such as map or terrain providers). Nginx or any other webserver could easily be used instead.

To establish a stream from the camera to the websocket server, we map our video and data feeds. Since JSMpeg only supports playback of mpeg1, we need to be explicit in our codec choice as well.

ffmpeg -i rtsp://{camera_source_url} -map 0:0 -map 0:1 -f mpegts -codec:v mpeg1video -b:v 800k -r 24 -s 800x600 http://127.0.0.1:8081/secretkey

As noted in the JSMpeg docs, the player sets up the connections between the source, demuxer, decoders, and renderer. To extend JSMpeg to accept a data stream, we subscribe the demuxer to the correct stream identifier (per the STANAG spec it is 0xBD), implement the decoder, and then send the resultant data to the renderer.

var data = new JSMpeg.Decoder.Metadata();

this.demuxer.connect(JSMpeg.Demuxer.TS.STREAM.PRIVATE_1, data);

var klvOut = new JSMpeg.DataOutput.KLV();

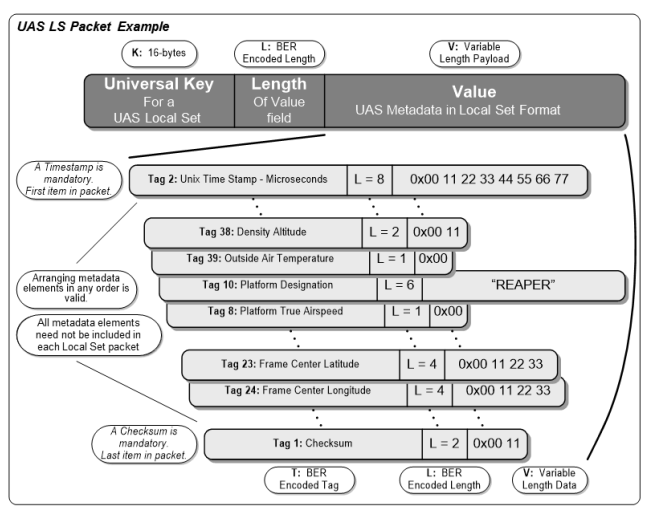

data.connect(klvOut);

The decoder is implemented by metadata.js. The basic flow of control is to look for the 16-byte universal UAS LDS key within the bit stream and, once found, start reading the remainder of the LDS packet. The payload boundaries are easily checked, since the payload begins with a Unix timestamp and ends with a checksum. Of note, the largest safe integer in JavaScript (Number.MAX_SAFE_INTEGER) is 2^53 - 1, so we need to use BigInteger.js in order to handle the 8-byte timestamp, which is always the first KLV set within the payload.
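The key search and timestamp read described above can be sketched as follows. This is a minimal illustration, not the code in metadata.js: the function names `findLdsKey` and `readUint64` are hypothetical, and native `BigInt` is swapped in for BigInteger.js to keep the example self-contained.

```javascript
// Universal key that opens every UAS Local Data Set packet (MISB ST 0601).
const UAS_LDS_KEY = Uint8Array.from([
  0x06, 0x0e, 0x2b, 0x34, 0x02, 0x0b, 0x01, 0x01,
  0x0e, 0x01, 0x03, 0x01, 0x01, 0x00, 0x00, 0x00,
]);

// Hypothetical helper: index of the first key at or after `start`, or -1.
function findLdsKey(bytes, start = 0) {
  const last = bytes.length - UAS_LDS_KEY.length;
  for (let i = start; i <= last; i++) {
    let match = true;
    for (let j = 0; j < UAS_LDS_KEY.length; j++) {
      if (bytes[i + j] !== UAS_LDS_KEY[j]) {
        match = false;
        break;
      }
    }
    if (match) return i;
  }
  return -1;
}

// Hypothetical helper: read an 8-byte big-endian unsigned integer as a BigInt,
// so values above Number.MAX_SAFE_INTEGER keep full precision.
function readUint64(bytes, offset = 0) {
  let value = 0n;
  for (let i = 0; i < 8; i++) {
    value = (value << 8n) | BigInt(bytes[offset + i]);
  }
  return value;
}
```

Once the timestamp is a `BigInt`, it can be scaled down before converting to a `Number` for display, which avoids rounding the raw 64-bit value.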

The key reference here is MISB Standard 0601.8 (the UAS LDS standard), which lists 95 KLV metadata elements, a subset of which STANAG 4609 requires. Importantly, floating point values (for example latitude/longitude points) are mapped to integers, so we must convert the incoming values back to more useful real-world units.
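As an illustration of the integer-to-real-world conversion, here is a minimal sketch for sensor latitude and longitude. It assumes, as I understand the standard, that these are 4-byte signed integers mapped linearly onto +/-90 and +/-180 degrees, with the most negative integer reserved as an error indicator. The function names are hypothetical.

```javascript
// Hypothetical helper: map a signed integer of `bits` width onto the
// symmetric range [-halfRange, +halfRange].
function mapSigned(raw, bits, halfRange) {
  const maxRaw = 2 ** (bits - 1) - 1; // e.g. 2^31 - 1 for a 4-byte value
  if (raw === -(maxRaw + 1)) return null; // reserved "error" value
  return (raw * halfRange) / maxRaw;
}

// Read a big-endian int32 from a Uint8Array at the given offset.
function readInt32(bytes, offset = 0) {
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  return view.getInt32(offset, false);
}

// Assumed mapping: latitude spans +/-90 degrees, longitude +/-180 degrees.
function decodeLatitude(bytes, offset = 0) {
  return mapSigned(readInt32(bytes, offset), 32, 90);
}

function decodeLongitude(bytes, offset = 0) {
  return mapSigned(readInt32(bytes, offset), 32, 180);
}
```

Returning `null` for the reserved value lets callers skip a bad sample rather than plot a bogus coordinate.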

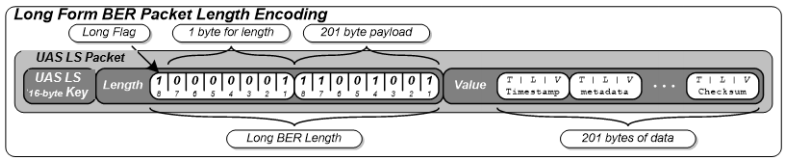

Each length in the KLV set is BER encoded. In practice it looks like our KLV encoder uses long-form encoding for the UAS metadata payload length and short-form encoding for each metadata item. Regardless, for demonstration purposes we read the most significant bit of the first length byte to determine the encoding scheme.
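A minimal sketch of BER length decoding follows. In short form the first byte is the length itself; in long form the low seven bits of the first byte give the number of length bytes that follow. The function name `readBerLength` and the shape of its return value are hypothetical.

```javascript
// Hypothetical helper: decode a BER length starting at `offset`.
// Returns the decoded length and how many bytes the length field occupied.
function readBerLength(bytes, offset = 0) {
  const first = bytes[offset];
  if ((first & 0x80) === 0) {
    // Short form: the byte is the length (0..127).
    return { length: first, size: 1 };
  }
  // Long form: low 7 bits count the big-endian length bytes that follow.
  const count = first & 0x7f;
  let length = 0;
  for (let i = 1; i <= count; i++) {
    length = length * 256 + bytes[offset + i];
  }
  return { length, size: 1 + count };
}
```

The `size` field tells the caller how far to advance before reading the value, so the same helper works for both the outer payload length and each item length.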

A 16-bit block character checksum appears to be used for error detection. Validation is done by a running 16-bit sum through the entire LDS packet, starting with the 16-byte local data set key and ending with the length field of the checksum data item (but not its 2-byte value). A sample implementation is given in MISB 0601.8, which we implement here. Efficiency could be gained if we didn't loop twice over the packet, but rather accumulated the sum as the packet is processed.
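The running sum can be sketched as below. This follows the general shape of the sample in the standard (even-indexed bytes are added to the high byte, odd-indexed bytes to the low byte), but it is an illustration rather than the code in metadata.js. The function names are hypothetical, and `verifyChecksum` assumes it is handed exactly one packet that starts at the key and ends with the 2-byte checksum value.

```javascript
// Hypothetical helper: 16-bit running sum over bytes[0..end).
function bcc16(bytes, end = bytes.length) {
  let sum = 0;
  for (let i = 0; i < end; i++) {
    // Even indices shift into the high byte, odd indices stay in the low byte.
    sum = (sum + (bytes[i] << (8 * ((i + 1) % 2)))) & 0xffff;
  }
  return sum;
}

// Hypothetical helper: compare the computed sum with the trailing 2 bytes.
function verifyChecksum(packet) {
  const end = packet.length - 2; // everything except the checksum value
  const expected = (packet[end] << 8) | packet[end + 1];
  return bcc16(packet, end) === expected;
}
```

Because the sum only depends on each byte and its index, the same accumulation could be folded into the parsing loop to avoid the second pass mentioned above.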

The renderer is implemented by klvoutput.js. It accepts the JSON object constructed by the decoder and emits a CustomEvent.

this.element.dispatchEvent(new CustomEvent('klv', { "detail": data}));

Interested parties can then listen for this event. This is how we hook up JSMpeg's decoded data to Cesium.

var klv = document.getElementById('somelementid');

klv.addEventListener('klv', _callback_);

Once in Cesium, and listening for custom events, we update our HTML telemetry and camera or model position. There are two modes that are currently implemented: an FPV mode and a track entity mode.

In FPV mode we take the sensor latitude, longitude, height, roll, pitch and yaw, calling flyTo with the provided destination and orientation. In track entity mode, we set the position and orientation of a model, and follow it with trackedEntity. flyTo has nicely animated interpolation, but when tracking the model we must use sampled properties in order to simulate the effect of motion. In reality we do not know the current velocity or acceleration of the aircraft, so this is really just an approximation of the aircraft's flight path. We also only receive metadata at a rate of 1 Hz. Increasing this frequency could provide smoother results.
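To make the idea of interpolating between 1 Hz samples concrete, here is a plain JavaScript sketch of linear interpolation between two timestamped positions. It is a stand-in for illustration only and is not Cesium's implementation. The sample shape (`time` in milliseconds, `lat`, `lon`, `height`) and the function name are assumptions, and longitude wrap-around at +/-180 degrees is ignored.

```javascript
// Hypothetical helper: estimate the position at time `t` from two samples
// `a` and `b`, each shaped like { time, lat, lon, height }.
function interpolateSample(a, b, t) {
  const span = b.time - a.time;
  // Fraction of the way from a to b, clamped so we never extrapolate.
  const f = span === 0 ? 0 : Math.min(1, Math.max(0, (t - a.time) / span));
  const lerp = (x, y) => x + (y - x) * f;
  return {
    time: t,
    lat: lerp(a.lat, b.lat),
    lon: lerp(a.lon, b.lon),
    height: lerp(a.height, b.height),
  };
}
```

With packets arriving once per second, the rendered position between packets is only an estimate, which is why a higher metadata rate would give a smoother track.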

A note on altitude: per STANAG 4609, tag 75 should provide the height above the ellipsoid (HAE), but instead it appears we are only getting sensor true altitude (tag 15) measured from MSL. Cesium uses HAE for positioning objects, so we need to convert.

Nominally our height above the ellipsoid is calculated by:

h = H + N

where h = height above the ellipsoid, N = geoid undulation (height of the geoid above the ellipsoid), and H = orthometric height, roughly the height above MSL. The geoid height above WGS84 using EGM2008 for 575 Kumpf Drive is -36.2835 m.

There is a NodeJS implementation of GeographicLib, so we could create a simple server to return heights given lat/long input. However, after conducting a parking lot flight, the value in tag 15 is roughly 300 m, whereas we would expect roughly 336 m if it were measured from MSL, so I think HAE is actually being returned. Win!
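The arithmetic behind that conclusion is small enough to sketch directly. The function name is hypothetical, and the 336 m figure is the expected MSL height quoted above.

```javascript
// Hypothetical helper: ellipsoid height = orthometric (MSL) height plus
// geoid undulation, all in metres.
function mslToEllipsoidHeight(orthometricHeight, geoidUndulation) {
  return orthometricHeight + geoidUndulation;
}

// Expected MSL height of about 336 m with an undulation of -36.2835 m.
const hae = mslToEllipsoidHeight(336, -36.2835);
console.log(hae.toFixed(1)); // close to the roughly 300 m seen in tag 15
```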

The frame rate here is slightly reduced because of the screen recorder utilized. True FPS is displayed in Cesium. As previously mentioned, if we could receive LDS packets more frequently, the flyer animation could be smoothed. We could also attempt to change the interpolation algorithm in use.

- Extend this application to include increased FOV (essentially decrease the focal length), so we can have more situational awareness. Currently we centre the video and perform CSS clipping around the video in order to see the surrounding scene. In this example we show how to use canvas data as an image material, in order to have the video included in the 3D space. While this works, the frame rate drops considerably.

- Full offline support: we need an Internet connection to provide map and terrain data. Cesium does support offline data.

- It would be interesting to add additional information/visuals in Cesium. e.g., camera targets, acoustic footprint, terrain sections, etc.

- This code was purely written for fun and learning about STANAG 4609 - so it is by no means production quality :)

chmod 751 app/build.sh

cd app && ./build.sh && npm install

Enable KLV metadata on your video feed, and run:

./start.sh

In this example, video is being streamed via RTSP.