A lightweight implementation of the 2017 Google paper 'Attention Is All You Need'. The model is named BETSI, a recursive acronym for "BETSI: English to shitty Italian", because the training time I allowed on my graphics card was not enough for amazing results.

In this implementation I will translate from English to Italian, as Transformer models are exceptional at language translation and this seems to be a common use case for light implementations of this paper.

The dataset I will be using is the OPUS Books dataset, a collection of copyright-free books. The book content of these translations is free for personal, educational, and research use. See the OPUS language resource paper.

I'm creating notes as I go, which can be found in NOTES.md.

There is a requirements.txt listing the packages needed to run this. I used PyTorch with ROCm, as this sped up training A LOT. Training this model on CPU on my laptop takes around 5.5 hours per epoch, while training on GPU on my desktop takes around 13.5 minutes per epoch (about 24.4 times faster!).
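For anyone switching between the CPU and GPU setups, here is a minimal sketch of device selection. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the regular `torch.cuda` API. The helper name `pick_device` is my own for illustration and is not necessarily what the training code in this repo uses.

```python
import torch


def pick_device() -> torch.device:
    """Return the GPU device if one is visible to PyTorch, otherwise the CPU.

    pick_device is a hypothetical helper name used only for this sketch.
    """
    # ROCm builds of PyTorch report AMD GPUs through torch.cuda,
    # so the same check covers both NVIDIA and AMD cards.
    if torch.cuda.is_available():
        return torch.device("cuda")
    return torch.device("cpu")


device = pick_device()

# torch.version.hip is set on ROCm builds and None otherwise.
backend = "ROCm/HIP" if getattr(torch.version, "hip", None) else "CUDA or CPU-only"
print(f"Using device: {device} (PyTorch build: {backend})")
```

The model and each batch then need to be moved with `.to(device)` before training, otherwise everything silently runs on the CPU.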

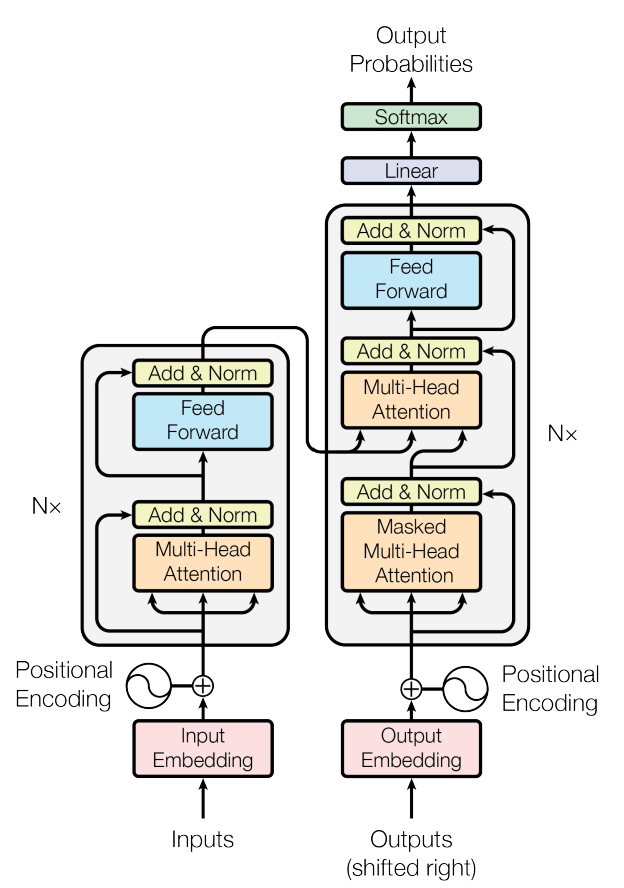

- Input Embeddings

- Positional Encoding

- Layer Normalization - Due by 11/1

- Feed Forward

- Multi-Head Attention

- Residual Connection

- Encoder

- Decoder - Due by 11/8

- Linear Layer

- Transformer

- Tokenizer - Due by 11/15

- Dataset

- Training loop

- Visualization of the model - Due by 11/22

- Install AMD ROCm to train with GPU - Attempt to do by end
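
As a rough illustration of one item on this list, the sinusoidal positional encoding from the paper can be sketched in plain Python. This is only a sketch of the formula, PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)); the function name `positional_encoding` is an assumption for this example, and the version in this repo is written with PyTorch tensors and may differ.

```python
import math


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Build a seq_len x d_model table of sinusoidal position encodings.

    Hypothetical helper for illustration; not taken from this repo's code.
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        # i walks over the even dimensions, so i plays the role of 2i in the paper.
        for i in range(0, d_model, 2):
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)          # even dimension
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)  # odd dimension
    return pe


table = positional_encoding(seq_len=4, d_model=8)
print(len(table), len(table[0]))
```

Each row of the table is added to the input embedding at that position, which is how the model gets word-order information without recurrence.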