This is a submission for the second assignment of the CIS735 course.

It contains the code necessary to implement multiple deep learning architectures for the zArASL_Database_54K multiclass image classification problem.

The zArASL_Database_54K dataset is used.

Clone the project from GitHub

$ git clone https://github.com/tariqshaban/arabic-sign-language-image-classification.git

No further configuration is required.

Navigate to the bottom of the notebook and specify the following hyperparameters:

- Seed
- Neural network type:
  - Fully connected neural network (FCNN)
  - Convolutional neural network (CNN)
  - Convolutional neural network (CNN) using a pretrained model (transfer learning on the EfficientNetB0 architecture)
- Generic network size:
  - Nano
  - Micro
  - Small
- Activation function type:
  - Sigmoid
  - Tanh
  - ReLU
  - Leaky ReLU
- Optimizer:
  - Stochastic gradient descent (SGD)
  - Root Mean Squared Propagation (RMSProp)
  - Adam
- Number of epochs [1, 100]
- Early stopping value

Warning: Although specifying the seed should make the results reproducible, the kernel must be restarted after each model finishes training

Warning: The seed is ineffective if the running machine is different, or if the runtime was switched between CPU and GPU

Note: The learning rate field was discarded since the optimizer's default value usually yields better results
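To make the seeding step concrete, here is a minimal sketch of what fixing the seed could look like. The helper name `seed_everything` is hypothetical and not taken from the notebook, and the TensorFlow call is attempted only if that library is installed; the notebook may seed its generators differently.

```python
import os
import random

import numpy as np


def seed_everything(seed: int = 42) -> None:
    """Seed the common random number generators (hypothetical helper)."""
    # Only affects Python processes started after this point; the hash seed
    # of the already running interpreter is fixed at startup.
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)
    np.random.seed(seed)
    try:
        # Assumption: the models are built with TensorFlow/Keras.
        import tensorflow as tf

        tf.keras.utils.set_random_seed(seed)
    except (ImportError, AttributeError):
        # TensorFlow is missing or too old to provide set_random_seed.
        pass


seed_everything(42)
first = np.random.rand(3)
seed_everything(42)
second = np.random.rand(3)
print(np.allclose(first, second))
```

Seeding the generators does not reset state that a framework has already accumulated in a running kernel, which is consistent with the warning above about restarting between models.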

Invoke the following methods:

# Ready the dataset and partition it into training, validation, and testing folders

prime_dataset()

# Conducts preliminary exploration methods

# :param bool show_dataframe: Specify whether to show the classes dataframe or not

# :param bool show_image_sample: Specify whether to display a sample image from each class or not

# :param bool show_class_distribution: Specify whether to plot the distribution of each class with respect to its train/valid/test partitioning

explore_dataset(show_dataframe=True, show_image_sample=True, show_class_distribution=True)

# Builds the model, and returns the fitted object

# :param bool plot_network: Specify whether to visually plot the neural network or not

# :param bool measure_performance: Specify whether to carry out the evaluation metrics on the created model (shows loss/accuracy trend across epochs and displays a confusion matrix on the test set)

build_model(plot_network=True, measure_performance=True)

The following table lists the classes of the dataset (sorted alphabetically by the ClassAr column).

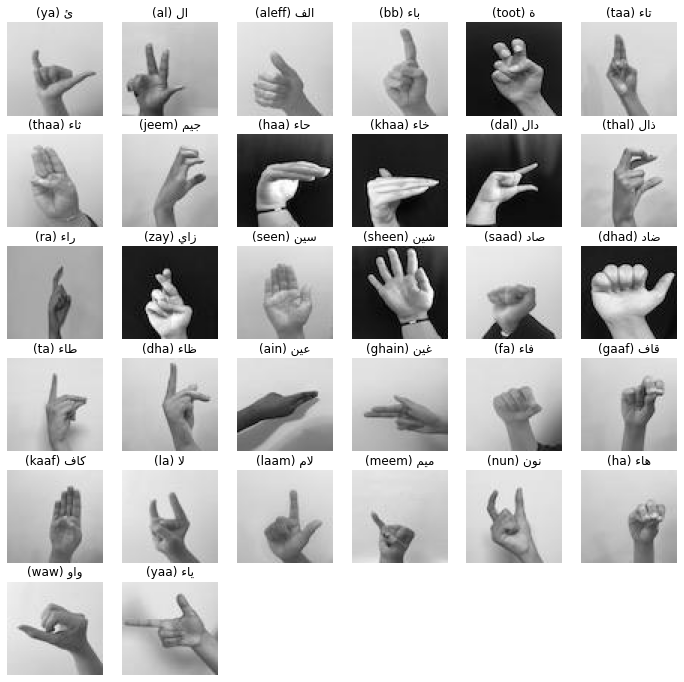

Note that a total of 32 classes can be observed from the table.

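| ClassId | Class | ClassAr |
|---|---|---|
| 29 | ya | ئ |
| 1 | al | ال |
| 2 | aleff | الف |
| 3 | bb | باء |
| 27 | toot | ة |
| 24 | taa | تاء |
| 25 | thaa | ثاء |
| 12 | jeem | جيم |
| 11 | haa | حاء |
| 14 | khaa | خاء |
| 4 | dal | دال |
| 26 | thal | ذال |
| 19 | ra | راء |
| 31 | zay | زاي |
| 21 | seen | سين |
| 22 | sheen | شين |
| 20 | saad | صاد |
| 6 | dhad | ضاد |
| 23 | ta | طاء |
| 5 | dha | ظاء |
| 0 | ain | عين |
| 9 | ghain | غين |
| 7 | fa | فاء |
| 8 | gaaf | قاف |
| 13 | kaaf | كاف |
| 15 | la | لا |
| 16 | laam | لام |
| 17 | meem | ميم |
| 18 | nun | نون |
| 10 | ha | هاء |
| 28 | waw | واو |
| 30 | yaa | ياء |

The figure below displays an image from each class: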

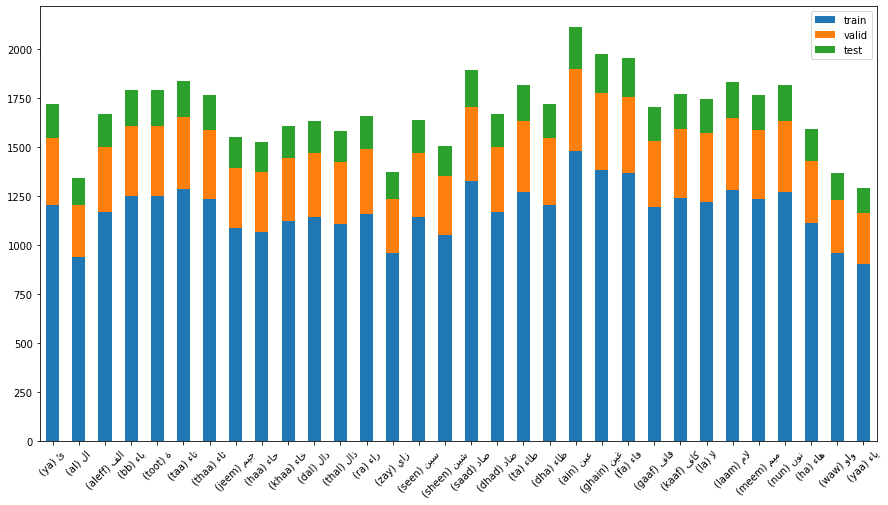

The image shows how well the classes are balanced. Note that (ain) عين has approximately 700 more images than (yaa) ياء.

- No significant image processing techniques have been carried out

- All images have the same size (64x64x3)

- Image rescaling technique has been used (pixel normalization)

Note: In transfer learning architectures, image rescaling was omitted since the EfficientNetB0 network already contains a normalization layer

Note: In transfer learning architectures, the image resolution is upscaled to (256, 256) to comply with the EfficientNetB0 input specifications
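The two preprocessing paths described above can be sketched with NumPy alone. This is an illustration under assumptions, not the notebook's code: the function names are hypothetical, and nearest-neighbour upscaling by an integer factor stands in for whatever interpolation the notebook actually applies.

```python
import numpy as np


def rescale_pixels(images: np.ndarray) -> np.ndarray:
    """Map uint8 pixel values in [0, 255] to float32 values in [0, 1]."""
    return images.astype(np.float32) / 255.0


def upscale_nearest(images: np.ndarray, factor: int = 4) -> np.ndarray:
    """Nearest-neighbour upscaling of an (N, H, W, C) batch by an integer factor."""
    return images.repeat(factor, axis=1).repeat(factor, axis=2)


# A random stand-in for a batch of two 64x64 RGB images.
batch = np.random.randint(0, 256, size=(2, 64, 64, 3), dtype=np.uint8)

# FCNN and plain CNN path: normalize pixels, keep the 64x64 resolution.
normalized = rescale_pixels(batch)

# Transfer learning path: upscale 64 * 4 = 256 and leave pixel values
# unnormalized, since the pretrained network normalizes internally.
upscaled = upscale_nearest(batch, factor=4)

print(normalized.shape, normalized.dtype)
print(upscaled.shape, upscaled.dtype)
```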

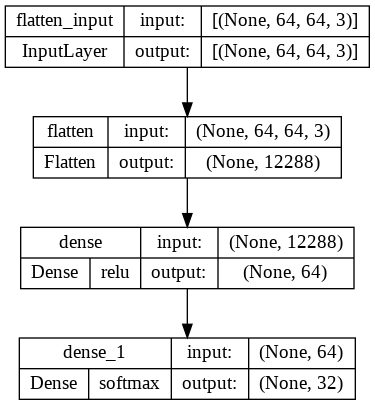

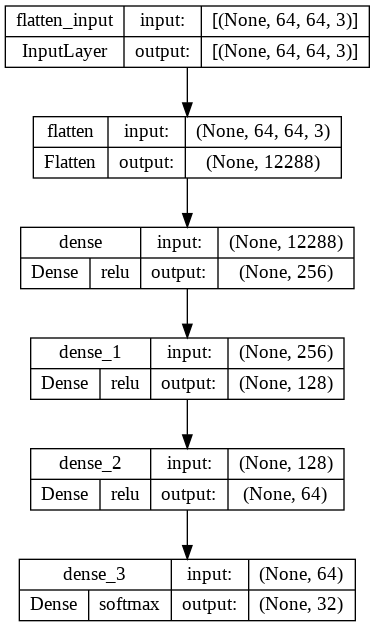

As previously stated, below are the generic network sizes that are enforced on all neural networks in this repository:

- Nano

- Micro

- Small

The leveraged neural networks:

- Fully connected neural network (FCNN)

- Convolutional neural network (CNN)

- Convolutional neural network (CNN) using a pretrained model

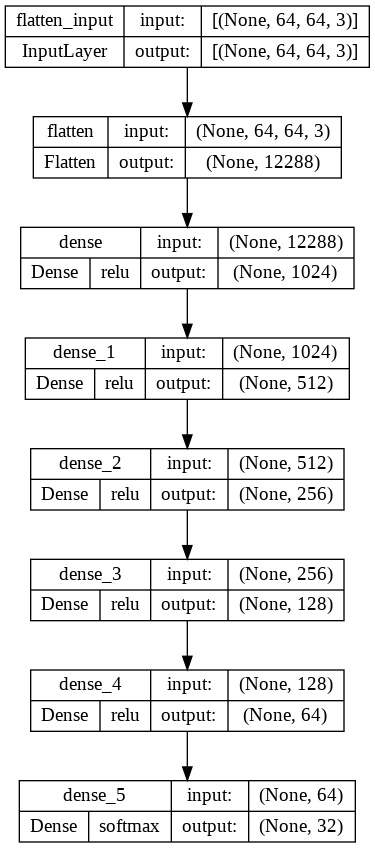

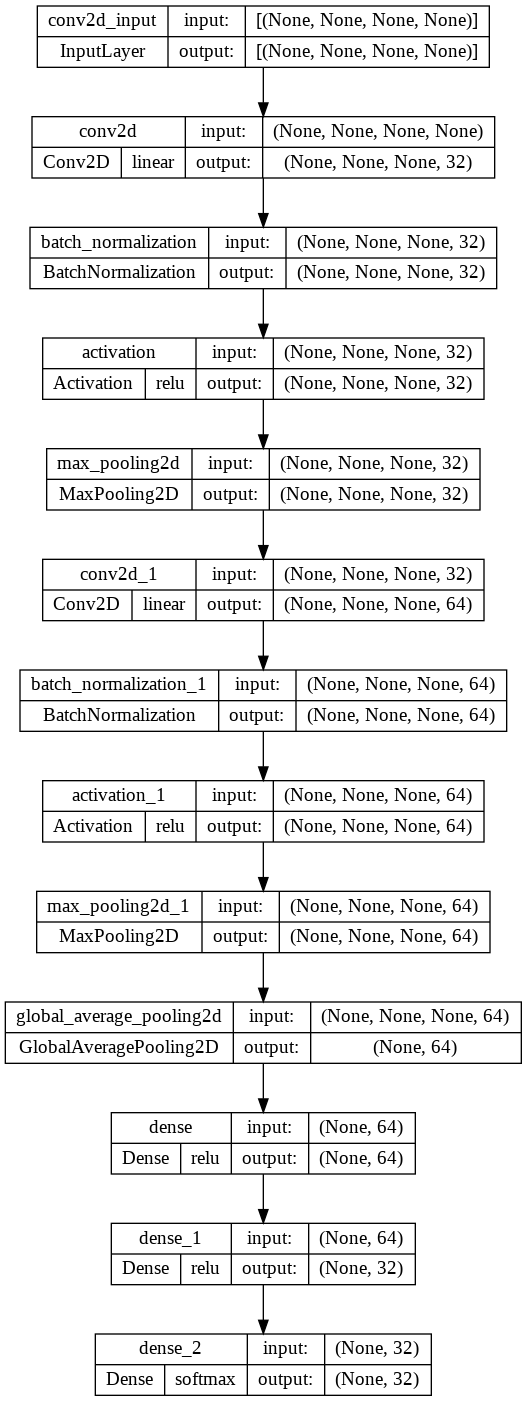

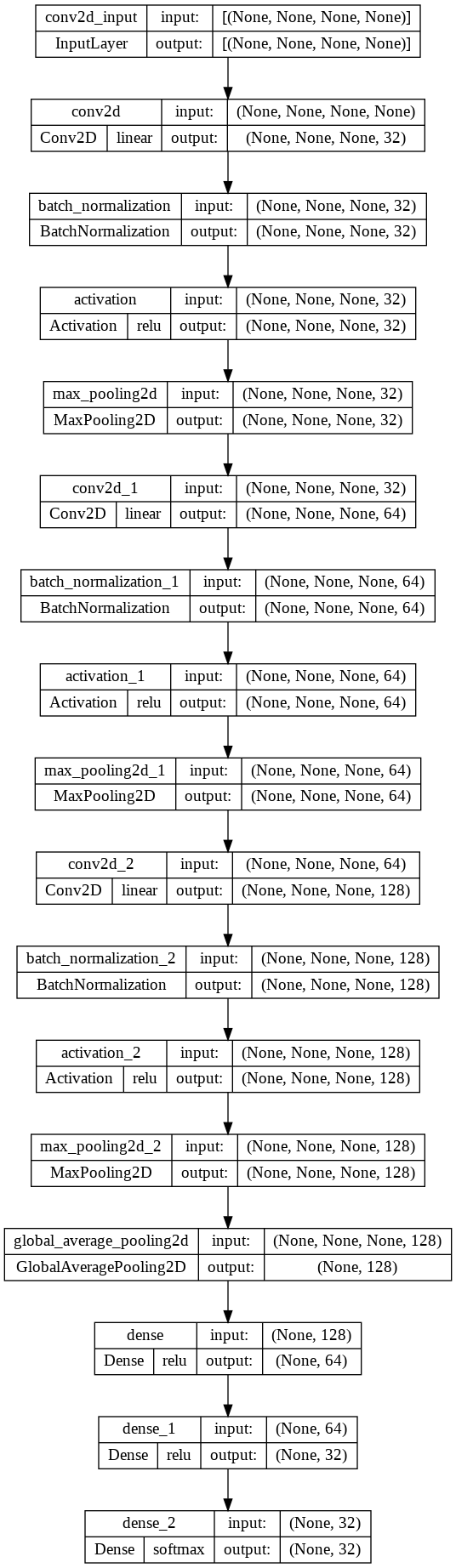

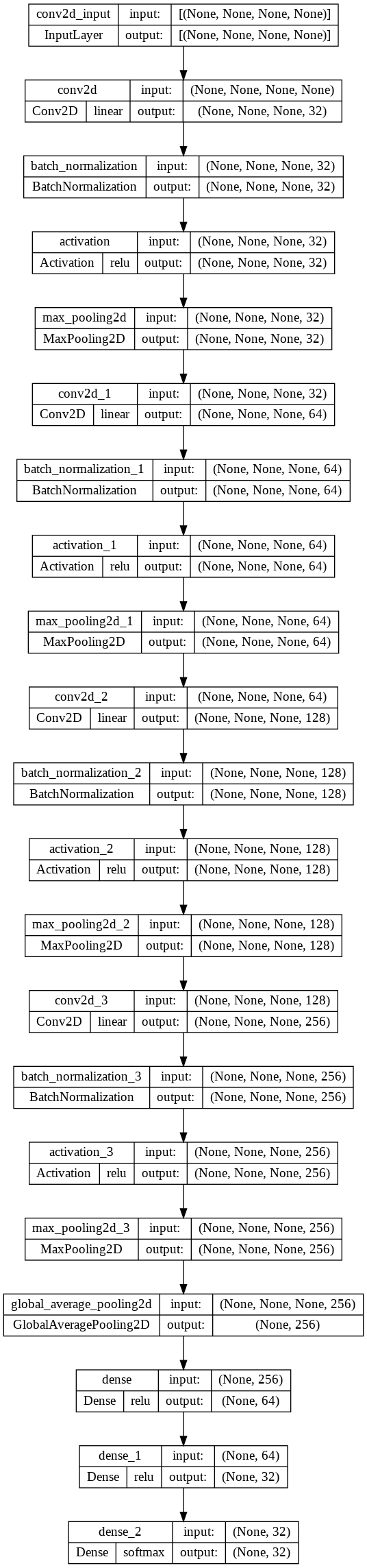

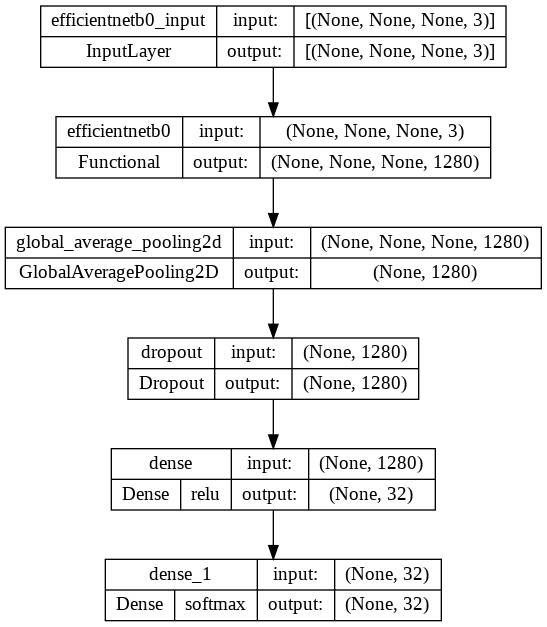

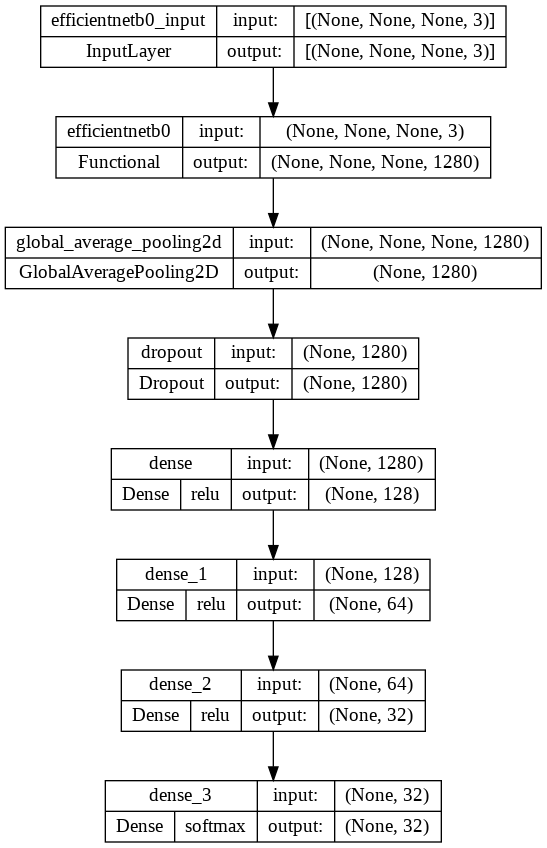

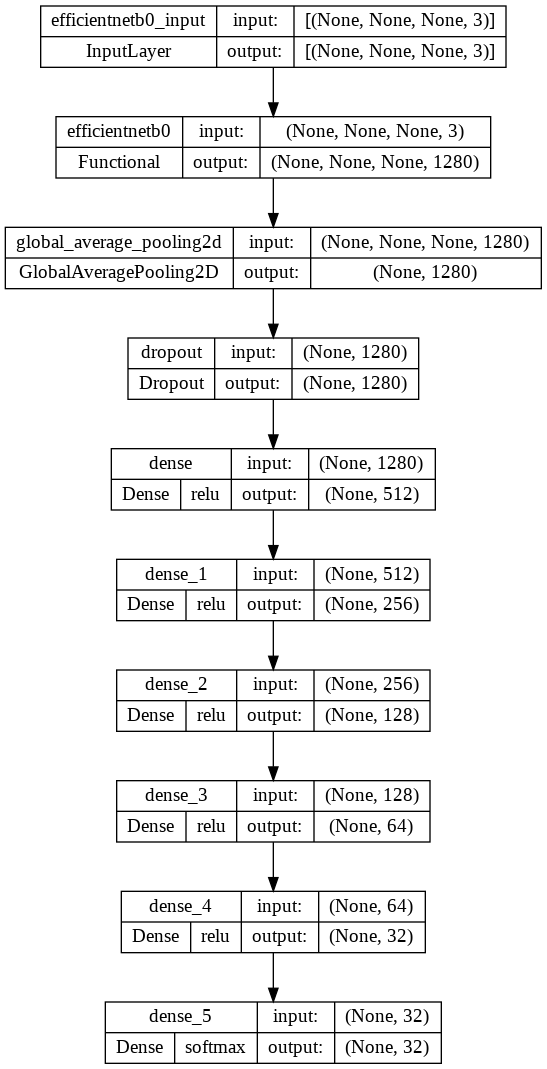

The following images display the effect of each network size on the architecture of each neural network:

Fully Connected Neural Network (FCNN) Nano Micro Small

Convolutional Neural Network (CNN) Nano Micro Small

Convolutional Neural Network (CNN) w/ Transfer Learning Nano Micro Small

| Activation | Optimizer | Nano Accuracy | Nano Loss | Micro Accuracy | Micro Loss | Small Accuracy | Small Loss |
|---|---|---|---|---|---|---|---|
| Sigmoid | SGD | 29.00% | 2.6779 | 5.17% | 3.4457 | 3.92% | 3.4597 |
| Sigmoid | RMSProp | 36.90% | 2.1605 | 39.33% | 1.9779 | 27.71% | 2.3854 |
| Sigmoid | Adam | 18.75% | 2.8965 | 20.55% | 2.8522 | 3.92% | 3.4603 |
| Tanh | SGD | 49.32% | 1.8810 | 66.83% | 1.2045 | 73.47% | 0.8914 |
| Tanh | RMSProp | 26.22% | 2.5885 | 30.38% | 2.3663 | 7.32% | 3.2007 |
| Tanh | Adam | 3.40% | 3.4650 | 14.46% | 3.0153 | 3.49% | 3.4675 |
| ReLU | SGD | 54.92% | 1.5363 | 70.84% | 0.9636 | 50.19% | 1.7976 |
| ReLU | RMSProp | 3.92% | 3.4597 | 3.92% | 3.4597 | 3.92% | 3.4597 |
| ReLU | Adam | 3.92% | 3.4597 | 83.26% | 0.6132 | 3.92% | 3.4597 |
| Leaky ReLU | SGD | 53.35% | 1.6143 | 67.84% | 1.0764 | 74.21% | 0.8452 |
| Leaky ReLU | RMSProp | 51.77% | 1.5967 | 55.16% | 1.8461 | 76.97% | 0.7628 |
| Leaky ReLU | Adam | 67.73% | 1.1280 | ✅ 84.50% | ✅ 0.5878 | 84.22% | 0.5939 |

| Activation | Optimizer | Nano Accuracy | Nano Loss | Micro Accuracy | Micro Loss | Small Accuracy | Small Loss |
|---|---|---|---|---|---|---|---|
| Sigmoid | SGD | 3.92% | 3.4587 | 3.92% | 3.4527 | 4.58% | 3.4398 |
| Sigmoid | RMSProp | 2.90% | 8.5300 | 5.48% | 4.8434 | 12.00% | 7.3554 |
| Sigmoid | Adam | 6.29% | 4.902 | 6.53% | 9.1905 | 18.18% | 5.5146 |
| Tanh | SGD | 4.58% | 4.6877 | 14.13% | 3.1408 | 88.84% | 0.4504 |
| Tanh | RMSProp | 7.65% | 6.9120 | 19.38% | 6.1763 | 87.74% | 0.4868 |
| Tanh | Adam | 7.45% | 7.2570 | 31.41% | 4.4689 | 76.34% | 0.9064 |
| ReLU | SGD | 10.23% | 3.6544 | 59.78% | 1.1868 | 96.77% | 0.1303 |
| ReLU | RMSProp | 15.16% | 7.1755 | 38.74% | 4.7649 | 88.93% | 0.4513 |
| ReLU | Adam | 15.51% | 9.1730 | 87.02% | 0.4670 | 95.12% | 0.2065 |
| Leaky ReLU | SGD | 10.08% | 4.4987 | 38.68% | 2.5518 | 96.35% | 0.1434 |
| Leaky ReLU | RMSProp | 9.51% | 14.118 | 84.24% | 0.6299 | 94.49% | 0.2322 |
| Leaky ReLU | Adam | 11.31% | 8.1749 | 52.73% | 2.8816 | ✅ 98.03% | ✅ 0.1078 |

| Activation | Optimizer | Nano Accuracy | Nano Loss | Micro Accuracy | Micro Loss | Small Accuracy | Small Loss |
|---|---|---|---|---|---|---|---|
| Sigmoid | SGD | 56.83% | 1.8929 | 3.92% | 3.4471 | 3.92% | 3.4597 |
| Sigmoid | RMSProp | 93.52% | 0.2538 | 94.77% | 0.2047 | 95.71% | 0.1624 |
| Sigmoid | Adam | 93.55% | 0.2537 | 95.01% | 0.1874 | 96.28% | 0.1377 |
| Tanh | SGD | 76.05% | 0.8074 | 83.94% | 0.6029 | 86.61% | 0.5011 |
| Tanh | RMSProp | 94.11% | 0.2150 | 96.46% | 0.1321 | 96.42% | 0.1200 |
| Tanh | Adam | 94.14% | 0.2163 | 96.15% | 0.1356 | 96.39% | 0.1182 |
| ReLU | SGD | 83.98% | 0.5926 | 85.64% | 0.4804 | 89.76% | 0.3596 |
| ReLU | RMSProp | 94.62% | 0.1921 | 96.53% | 0.1130 | ✅ 97.29% | 0.0907 |
| ReLU | Adam | 94.60% | 0.1956 | 96.63% | 0.1154 | 97.14% | ✅ 0.0883 |
| Leaky ReLU | SGD | 84.41% | 0.5792 | 87.04% | 0.4233 | 90.44% | 0.3406 |
| Leaky ReLU | RMSProp | 94.68% | 0.1906 | 96.57% | 0.1184 | 97.18% | 0.0922 |
| Leaky ReLU | Adam | 94.58% | 0.1974 | 96.20% | 0.1280 | 97.01% | 0.1045 |

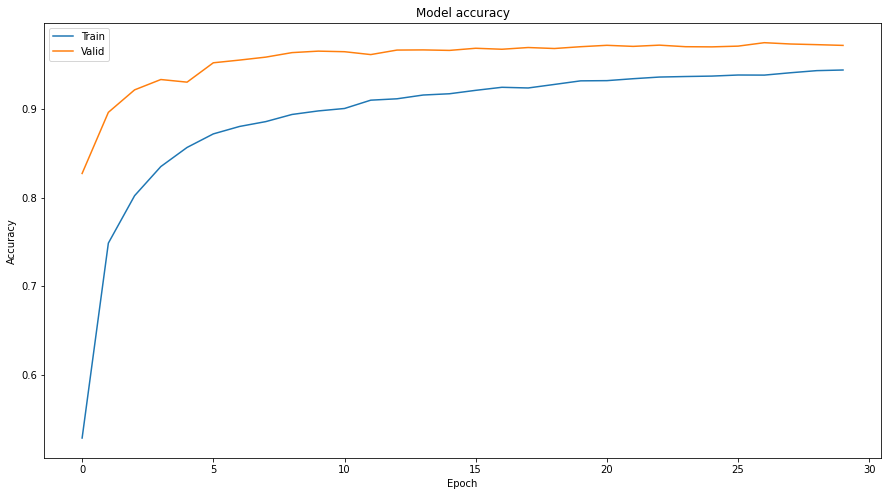

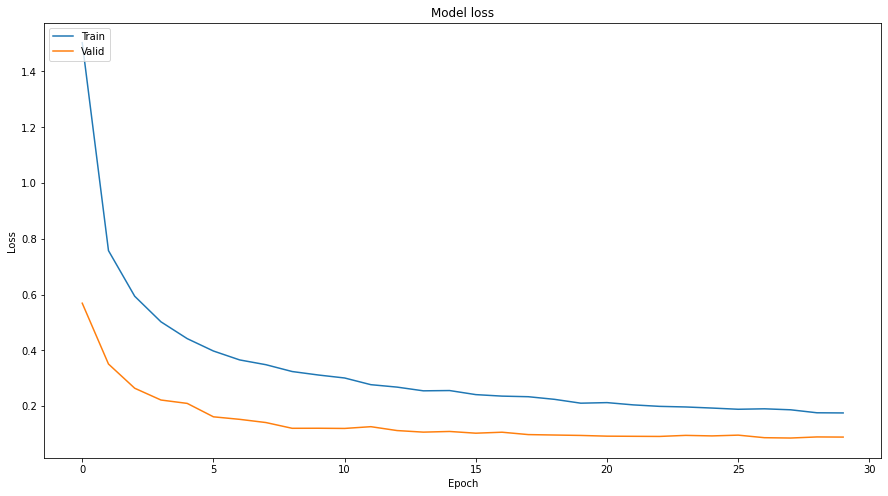

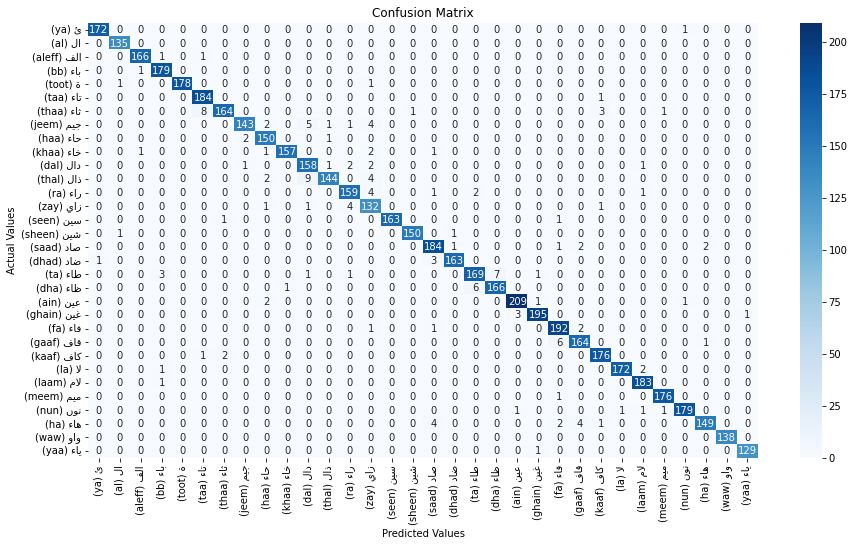

The following images were produced with the hyperparameters below (one of the configurations that yielded the best results):

- Seed: 42

- Network Type: CNN w/ transfer learning (EfficientNetB0)

- Network Size: small, see the Models Construction section for exact architecture

- Activation function: ReLU (used in dense layers appended to the base model)

- Optimizer: Adam

- Epochs: 30

- Early stopping: after 10 epochs without improvement
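As an illustration of how an early stopping value of 10 interacts with a 30-epoch budget, the sketch below implements a plain patience counter. It assumes the monitored quantity is the validation loss and that the value 10 is a patience (epochs without improvement); the function name is hypothetical and the notebook's actual mechanism may differ.

```python
from typing import Optional, Sequence


def early_stopping_epoch(val_losses: Sequence[float], patience: int = 10) -> Optional[int]:
    """Return the 1-based epoch at which training would stop, or None.

    Training stops once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            waited = 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return None


# Made-up loss curve: three improving epochs followed by a plateau.
losses = [1.0, 0.9, 0.8] + [0.85] * 10
print(early_stopping_epoch(losses, patience=10))
```

With a patience of 10 and a budget of 30 epochs, a run is cut short only if the best validation loss is reached no later than epoch 20.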

Notice that the accuracy converged rapidly and stabilized at approximately the 20th epoch, which indicates that the number of epochs is sufficient for this model.

Similarly, the loss plot shows that the model converged quickly.

Based on the confusion matrix, the model was effective in discriminating between Arabic letters represented by sign language; almost all the test set entries reside on the diagonal.
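To show why a heavy diagonal corresponds to high accuracy, here is a small NumPy sketch that builds a confusion matrix and reads the accuracy off its diagonal. The function names and the toy labels are hypothetical; the notebook may rely on a library routine instead.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes: int) -> np.ndarray:
    """Count samples per (true class, predicted class) pair."""
    y_true = np.asarray(y_true, dtype=np.int64)
    y_pred = np.asarray(y_pred, dtype=np.int64)
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when index pairs repeat.
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def accuracy_from_confusion(cm: np.ndarray) -> float:
    """Correct predictions sit on the diagonal, so accuracy is trace / total."""
    return float(np.trace(cm) / cm.sum())


# Toy example with 3 classes instead of the dataset's 32.
y_true = [0, 1, 2, 2]
y_pred = [0, 1, 2, 1]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(cm)
print(accuracy_from_confusion(cm))
```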

Note: Each activation function has its own use cases, so assuming that a given activation function is generally poor is not good practice

Note: It appears that different combinations of hyperparameters yield different results (e.g. the Adam optimizer performed well on most networks, but it returned suboptimal results when paired with Sigmoid and Tanh for the FCNN architecture)

Note: These observations are specific to this dataset and should not be generalized. Such observations can be easily contradicted when altering a hyperparameter

Assess the impact of the activation function (Sigmoid, Tanh, ReLU, Leaky ReLU) on the accuracy and loss

The impact of each activation function cannot be easily determined, since several variables can affect the behaviour of the activation function.

- Sigmoid:
  - Computationally expensive
  - Complex derivative calculations due to the exponent
  - Flattened ends on both sides cause saturation, and saturation causes vanishing gradients
  - Converges slowly
  - Commonly used in binary classification
- Tanh:
  - Similar characteristics to the sigmoid
  - Less computationally expensive than sigmoid (but still noticeable)
  - Commonly used in NLP domains
- ReLU:
  - Well known
  - Converges quickly
  - Does not saturate easily
  - Very simple formula, which expedites the computation of the derivative
  - Commonly used in the hidden layers of the FCNN
- Leaky ReLU:
  - Similar characteristics to the ReLU
  - Further reduces the risk of saturation
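The saturation behaviour listed above can be checked numerically by evaluating each activation's derivative at a large input. The sketch below uses the textbook derivative formulas; the Leaky ReLU slope `alpha=0.01` is an assumed illustrative value, not necessarily the one used in the notebook.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def d_sigmoid(x: np.ndarray) -> np.ndarray:
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x: np.ndarray) -> np.ndarray:
    return 1.0 - np.tanh(x) ** 2


def d_relu(x: np.ndarray) -> np.ndarray:
    return (x > 0).astype(np.float64)


def d_leaky_relu(x: np.ndarray, alpha: float = 0.01) -> np.ndarray:
    # alpha is an assumed slope for negative inputs.
    return np.where(x > 0, 1.0, alpha)


x = np.array([-10.0, 0.0, 10.0])
for name, grad in [
    ("sigmoid", d_sigmoid),
    ("tanh", d_tanh),
    ("relu", d_relu),
    ("leaky_relu", d_leaky_relu),
]:
    print(name, grad(x))
```

The sigmoid and tanh gradients shrink towards zero at both ends, the ReLU gradient is exactly zero for negative inputs and one for positive inputs, and Leaky ReLU keeps a small non-zero gradient on the negative side.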

Observations:

- ReLU and Leaky ReLU almost always had better results than Sigmoid and Tanh (sometimes by a large margin)

- Sigmoid and Tanh often saturate, causing the training to stop early

- If Sigmoid and Tanh were provided with more epochs, they might achieve results that compete with ReLU and Leaky ReLU

Similarly to the previous question, the impact cannot be generalized, since the parameters of the optimizer can affect its behaviour.

Observations:

- RMSProp and Adam obtained higher results than SGD in many cases

- SGD takes longer to converge; note that the default learning rate for SGD is ten times higher than that of RMSProp and Adam
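For intuition on how the optimizers differ beyond the learning rate, here is a sketch of a single update step for plain SGD and for textbook Adam. The default values (0.01 for SGD, 0.001 for Adam, `eps=1e-7`) are the Keras defaults and are assumed here, since the README does not name the framework; the function names are hypothetical.

```python
import numpy as np


def sgd_step(w: float, grad: float, lr: float = 0.01) -> float:
    """Plain SGD: the step is proportional to the raw gradient."""
    return w - lr * grad


def adam_step(w, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-7):
    """One textbook Adam update with bias correction; t is the 1-based step."""
    m = beta1 * m + (1 - beta1) * grad        # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad ** 2   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)              # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)              # bias-corrected second moment
    w = w - lr * m_hat / (np.sqrt(v_hat) + eps)
    return w, m, v


w0, grad = 1.0, 0.05  # made-up weight and a small gradient
w_sgd = sgd_step(w0, grad)
w_adam, m, v = adam_step(w0, grad, m=0.0, v=0.0, t=1)

print("SGD step size: ", w0 - w_sgd)
print("Adam step size:", w0 - w_adam)
```

Because Adam divides by a running estimate of the gradient magnitude, its first step is close to the learning rate itself regardless of how small the gradient is, whereas the SGD step shrinks with the gradient. This is one possible reading of the slower SGD convergence noted above, not a claim about what happened in these experiments.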

While intuition suggests that deeper neural networks should yield better results, they can, in some cases, have the exact opposite effect. A deeper network may overfit, making the training accuracy high while the accuracy on unseen data (such as the validation and test sets) stays low. In addition, it may unnecessarily increase the number of parameters, which in turn increases the computational requirements.

Observations:

- Deeper networks often provide better results, but they can have a negative effect in some cases

- Deeper networks can sharply increase resource consumption, especially memory

- Deeper networks should not be used to solve simple problems, since this causes the model to overfit
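The growth in parameters can be made concrete by counting the weights and biases of a fully connected network on the 64x64x3 input with 32 output classes. The hidden layer sizes below are hypothetical and are not the repository's Nano, Micro, or Small configurations.

```python
from typing import Sequence


def dense_param_count(layer_sizes: Sequence[int]) -> int:
    """Total weights plus biases of a stack of fully connected layers.

    Each layer mapping n_in units to n_out units holds n_in * n_out
    weights and n_out biases, i.e. (n_in + 1) * n_out parameters.
    """
    return sum(
        (n_in + 1) * n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


input_size = 64 * 64 * 3  # flattened image
num_classes = 32

# Hypothetical hidden layer sizes, for illustration only.
shallow = dense_param_count([input_size, 128, num_classes])
deep = dense_param_count([input_size, 512, 256, 128, num_classes])

print("shallow:", shallow)
print("deep:   ", deep)
```

In both cases the first layer dominates the total, because every hidden unit connects to all 12,288 input values.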

- Data has noise (outliers/errors), which prevents the model from generalizing well

- Data is not standardized (no regularization)

- Model is too deep/complex

- No dropout layers (for CNN architectures)

- Training data is biased (the sample does not represent the population, or represents only a specific stratum)

- No implementation of early stopping (prematurely stop the training when validation loss does not decrease for a predefined number of epochs)

- Usage of SGD rather than RMSProp or Adam

- Surprisingly, some fully connected networks achieved relatively acceptable results. This may be due to the small size of the input image (64x64x3), since FCNN is known to be a poor option when used on its own for image problems; unlike CNN, it lacks image feature extraction

- Transfer learning expedites convergence, making it possible to achieve satisfactory results in a single-digit number of epochs