Shuai Tan¹, Bin Ji¹, Mengxiao Bi², Ye Pan¹

¹Shanghai Jiao Tong University

²NetEase Fuxi AI Lab

ECCV 2024

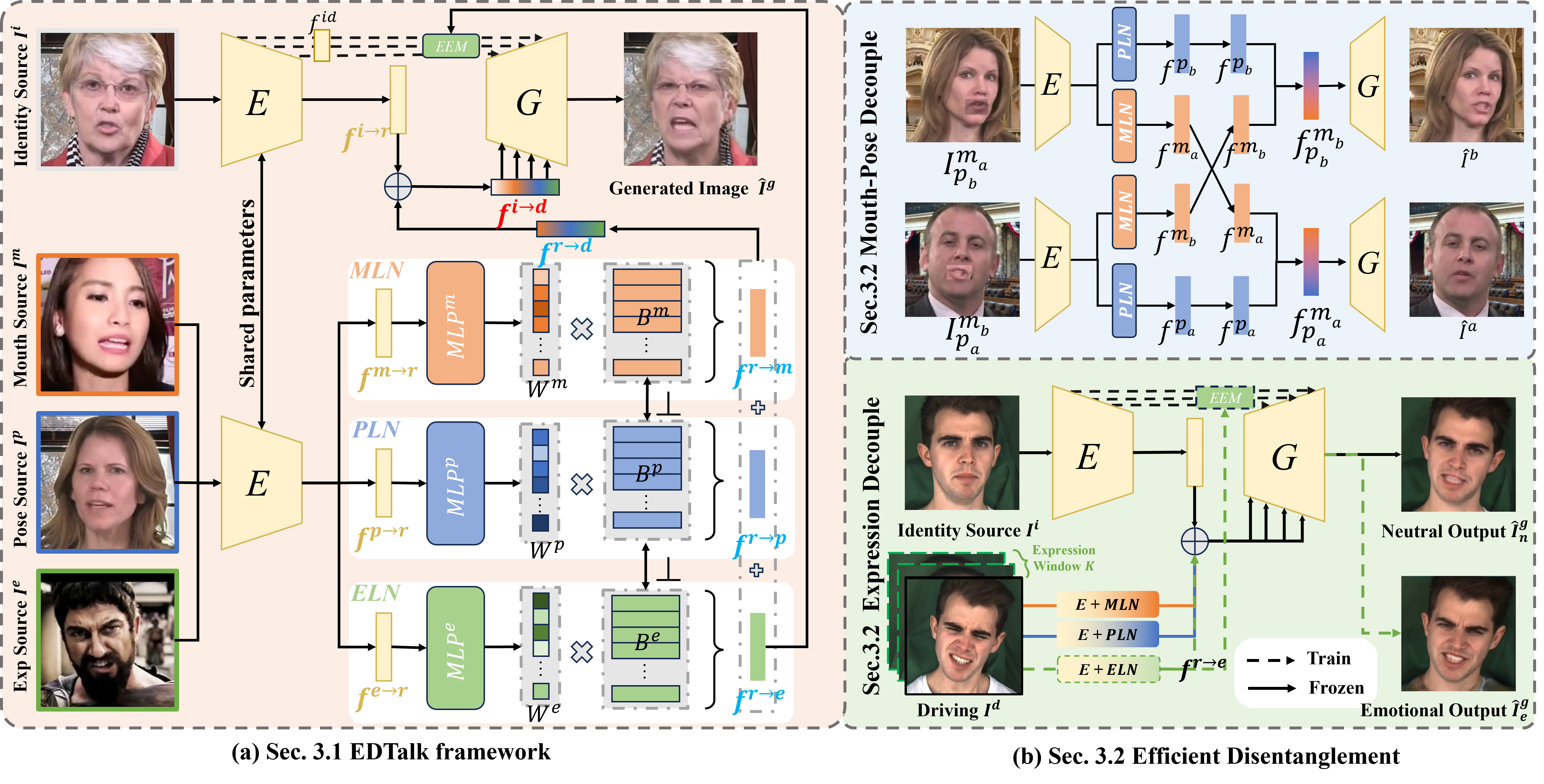

Achieving disentangled control over multiple facial motions and accommodating diverse input modalities greatly broadens the applications and entertainment value of talking head generation. This requires a deep exploration of the decoupled space of facial features, ensuring that the features a) operate independently without mutual interference and b) can be preserved and shared across different input modalities, two aspects often neglected by existing methods. To address this gap, this paper proposes EDTalk, a novel Efficient Disentanglement framework for Talking head generation. Our framework enables individual manipulation of mouth shape, head pose, and emotional expression, conditioned on both video and audio inputs. Specifically, we employ three lightweight modules to decompose facial dynamics into three distinct latent spaces representing mouth, pose, and expression, respectively. Each space is characterized by a set of learnable bases whose linear combinations define specific motions. To ensure independence and accelerate training, we enforce orthogonality among the bases and devise an efficient training strategy that allocates motion responsibilities to each space without relying on external knowledge. The learned bases are then stored in corresponding banks, enabling shared visual priors with audio input. Furthermore, considering the properties of each space, we propose an Audio-to-Motion module for audio-driven talking head synthesis. Experiments demonstrate the effectiveness of EDTalk.
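To make the "orthogonal bases + linear combination" idea concrete, here is a minimal illustration in plain Python. It is not EDTalk's implementation: EDTalk learns its bases and enforces orthogonality as part of training, whereas this sketch simply orthonormalizes three hypothetical 4-D vectors with Gram-Schmidt. It then shows the property that makes orthogonality useful: once the bases are orthonormal, each combination weight of a motion can be recovered independently with a dot product. The vector sizes and values are arbitrary assumptions.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def gram_schmidt(bases: List[Vector]) -> List[Vector]:
    """Return an orthonormal set spanning the same space as `bases`."""
    ortho: List[Vector] = []
    for v in bases:
        w = list(v)
        # Remove the components along the already-orthonormalized vectors.
        for u in ortho:
            proj = dot(w, u)
            w = [wi - proj * ui for wi, ui in zip(w, u)]
        norm = dot(w, w) ** 0.5
        if norm < 1e-12:
            raise ValueError("bases are linearly dependent")
        ortho.append([wi / norm for wi in w])
    return ortho


def combine(weights: Vector, bases: List[Vector]) -> Vector:
    """A motion code as the linear combination sum_i weights[i] * bases[i]."""
    dim = len(bases[0])
    return [sum(w * b[k] for w, b in zip(weights, bases)) for k in range(dim)]


# Three hypothetical 4-D bases for one motion space (e.g. "mouth").
raw_bases = [[1.0, 1.0, 0.0, 0.0], [1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 1.0, 1.0]]
bases = gram_schmidt(raw_bases)

weights = [0.5, -1.2, 2.0]
motion = combine(weights, bases)

# With orthonormal bases, each weight is recovered by projecting onto its basis.
recovered = [dot(motion, b) for b in bases]
print([round(r, 6) for r in recovered])  # → [0.5, -1.2, 2.0]
```

Without orthogonality, changing one weight would leak into the projections onto the other bases, which is the kind of mutual interference the abstract aims to avoid.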

- 2024.08.06 - 💻 Add the training code for fine-tuning on a specific person, using Obama as an example.

- 2024.08.06 - 🙏 We hope more people can get involved, and we will promptly handle pull requests. Several tasks still need help, such as creating a Colab notebook, improving the web UI, and translation work, among others.

- 2024.08.04 - 🎉 Add gradio interface.

- 2024.07.31 - 💻 Add optional face super-resolution.

- 2024.07.19 - 💻 Release data preprocessing code and part of the training code (fine-tuning LIA, Mouth-Pose Decouple, and Audio2Mouth). I haven't had time to clean up all the code yet, but the current code should be a useful reference for anyone who wants to reproduce EDTalk or related work. If you run into any problems, feel free to open an issue!

- 2024.07.01 - 💻 The inference code and pretrained models are available.

- 2024.07.01 - 🎉 Our paper is accepted by ECCV 2024.

- 2024.04.02 - 🛳️ This repo is released.

- Release training code.

- Release inference code.

- Release pre-trained models.

- Release Arxiv paper.

We train and test with Python 3.8 and PyTorch. To install the dependencies, run:

git clone https://github.com/tanshuai0219/EDTalk.git

cd EDTalk

conda create -n EDTalk python=3.8

conda activate EDTalk

- python packages

pip install -r requirements.txt

- python packages for Windows

pip install -r requirements_windows.txt
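If you script the setup, the only platform-specific choice above is which requirements file to install. A minimal sketch, where `requirements_file` is a hypothetical helper name of our own: it picks the file from `sys.platform` and prints the matching pip command rather than running it. Run it from the repository root inside the activated EDTalk environment.

```python
import sys


def requirements_file(platform: str = sys.platform) -> str:
    """Pick the requirements file for the current OS.

    requirements_windows.txt is used on Windows ("win32"); every other
    platform uses requirements.txt, matching the two install commands above.
    """
    return "requirements_windows.txt" if platform.startswith("win") else "requirements.txt"


if __name__ == "__main__":
    # Use the interpreter of the active environment so pip installs into it.
    cmd = [sys.executable, "-m", "pip", "install", "-r", requirements_file()]
    print(" ".join(cmd))
```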

Thanks to nitinmukesh for providing a Windows 11 installation tutorial; feel free to follow his channel!

- Launch the Gradio interface (thanks to contributor newgenai79!):

python webui_emotions.py

Download the checkpoints (checkpoints / Hugging Face link) and put them into ./ckpts.

[Chinese users] You can download the weights via this link.

For ease of use, we extracted the expression-base weights of eight common emotions, so you can directly specify an emotion to generate emotional talking face videos (recommended):

python demo_EDTalk_A_using_predefined_exp_weights.py --source_path path/to/image --audio_driving_path path/to/audio --pose_driving_path path/to/pose --exp_type type/of/expression --save_path path/to/save

# example:

python demo_EDTalk_A_using_predefined_exp_weights.py --source_path res/results_by_facesr/demo_EDTalk_A.png --audio_driving_path test_data/mouth_source.wav --pose_driving_path test_data/pose_source1.mp4 --exp_type angry --save_path res/demo_EDTalk_A_using_weights.mp4

Demo results (videos): angry.mp4, Contempt.mp4, Disgusted.mp4, Fear.mp4, Happy.mp4, sad.mp4
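To render several emotions from the same source in one go, the command above can be wrapped in a loop. This is a hedged sketch: the `--exp_type` strings below are assumptions taken from the demo file names (the full list of eight accepted values is not given here, so check the script for exact spellings), `build_command` is a hypothetical helper, and the block only prints the commands instead of running them.

```python
import shlex
from pathlib import Path

# Assumption: these --exp_type values mirror the demo video names above.
EMOTIONS = ["angry", "contempt", "disgusted", "fear", "happy", "sad"]


def build_command(source: str, audio: str, pose: str, exp_type: str, out_dir: str) -> list:
    """Arguments for one run of demo_EDTalk_A_using_predefined_exp_weights.py."""
    save_path = Path(out_dir) / f"demo_EDTalk_A_{exp_type}.mp4"
    return [
        "python", "demo_EDTalk_A_using_predefined_exp_weights.py",
        "--source_path", source,
        "--audio_driving_path", audio,
        "--pose_driving_path", pose,
        "--exp_type", exp_type,
        "--save_path", str(save_path),
    ]


commands = [
    build_command(
        "res/results_by_facesr/demo_EDTalk_A.png",
        "test_data/mouth_source.wav",
        "test_data/pose_source1.mp4",
        emotion,
        "res",
    )
    for emotion in EMOTIONS
]

for cmd in commands:
    # Print a shell-ready line; pass `cmd` to subprocess.run(cmd, check=True) to execute it.
    print(shlex.join(cmd))
```

Writing one output file per emotion keeps the runs from overwriting each other's `--save_path`.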

python demo_EDTalk_A.py --source_path path/to/image --audio_driving_path path/to/audio --pose_driving_path path/to/pose --exp_driving_path path/to/expression --save_path path/to/save

# example:

python demo_EDTalk_A.py --source_path res/results_by_facesr/demo_EDTalk_A.png --audio_driving_path test_data/mouth_source.wav --pose_driving_path test_data/pose_source1.mp4 --exp_driving_path test_data/expression_source.mp4 --save_path res/demo_EDTalk_A.mp4

The result will be stored in save_path.

The source image and all driving videos must first be cropped with crop_image2.py (download shape_predictor_68_face_landmarks.dat and put it in the ./data_preprocess directory) and crop_video.py, respectively. Make sure every video's frame rate is 25 fps.

You can also use crop_image.py to crop the image, but increase_ratio must be tuned carefully, often over several attempts, to get the best result.
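Because every video must be 25 fps, it can help to check inputs before running the demos. The sketch below assumes `ffprobe` (shipped with FFmpeg) is on your PATH: it asks ffprobe for the first video stream's `r_frame_rate`, which ffprobe reports as a fraction such as `25/1`, and compares it with 25. The helper names (`parse_rate`, `video_fps`, `is_25fps`) are our own, not part of EDTalk.

```python
import subprocess
import sys
from fractions import Fraction

TARGET_FPS = 25


def parse_rate(rate: str) -> Fraction:
    """Parse an ffprobe rate string such as '25/1' or '30000/1001'."""
    return Fraction(rate.strip())


def video_fps(path: str) -> Fraction:
    """Query the frame rate of the first video stream with ffprobe."""
    out = subprocess.run(
        [
            "ffprobe", "-v", "error",
            "-select_streams", "v:0",
            "-show_entries", "stream=r_frame_rate",
            "-of", "default=noprint_wrappers=1:nokey=1",
            path,
        ],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_rate(out)


def is_25fps(rate: str) -> bool:
    return parse_rate(rate) == TARGET_FPS


if __name__ == "__main__":
    # Usage: python check_fps.py video1.mp4 video2.mp4 ...
    for video in sys.argv[1:]:
        fps = video_fps(video)
        status = "ok" if fps == TARGET_FPS else "re-run crop_video.py to resample to 25 fps"
        print(f"{video}: {float(fps):.3f} fps ({status})")
```

Comparing `Fraction` values avoids floating-point surprises with rates like `30000/1001`.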

EDTalk-A: lip + pose without exp. If you don't want to change the expression of the identity source, download EDTalk_lip_pose.pt and put it into ./ckpts.

python demo_lip_pose.py --fix_pose --source_path path/to/image --audio_driving_path path/to/audio --save_path path/to/save

# example:

python demo_lip_pose.py --fix_pose --source_path test_data/identity_source.jpg --audio_driving_path test_data/mouth_source.wav --save_path res/demo_lip_pose_fix_pose.mp4

python demo_lip_pose.py --source_path path/to/image --audio_driving_path path/to/audio --pose_driving_path path/to/pose --save_path path/to/save

# example:

python demo_lip_pose.py --source_path test_data/identity_source.jpg --audio_driving_path test_data/mouth_source.wav --pose_driving_path test_data/pose_source1.mp4 --save_path res/demo_lip_pose.mp4
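The two `demo_lip_pose.py` usages above differ only in how head pose is handled. As a hedged sketch, the hypothetical helper `lip_pose_command` builds either call: with no pose video it passes `--fix_pose`, otherwise `--pose_driving_path`. This is our reading of the two examples, not documented behavior. It also checks for `./ckpts/EDTalk_lip_pose.pt`, the checkpoint this mode needs.

```python
from pathlib import Path
from typing import List, Optional

# Checkpoint required for lip + pose generation (see the note above).
CKPT = Path("ckpts") / "EDTalk_lip_pose.pt"


def lip_pose_command(source: str, audio: str, save: str,
                     pose: Optional[str] = None, face_sr: bool = False) -> List[str]:
    """Build a demo_lip_pose.py call (hypothetical helper).

    pose=None -> --fix_pose, as in the first example;
    pose=path -> --pose_driving_path, as in the second example.
    """
    cmd = ["python", "demo_lip_pose.py"]
    if pose is None:
        cmd.append("--fix_pose")
    cmd += ["--source_path", source, "--audio_driving_path", audio]
    if pose is not None:
        cmd += ["--pose_driving_path", pose]
    cmd += ["--save_path", save]
    if face_sr:
        cmd.append("--face_sr")  # optional GFPGAN face super-resolution
    return cmd


if __name__ == "__main__":
    if not CKPT.exists():
        print(f"Missing {CKPT}: download EDTalk_lip_pose.pt into ./ckpts first")
    print(" ".join(lip_pose_command(
        "test_data/identity_source.jpg", "test_data/mouth_source.wav",
        "res/demo_lip_pose.mp4", pose="test_data/pose_source1.mp4")))
```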

Example results (Source Img | EDTalk | EDTalk + LivePortrait; videos): person1_crop_512.mp4, person4_512.mp4, test2.mp4

python demo_lip_pose_V.py --source_path path/to/image --audio_driving_path path/to/audio --lip_driving_path path/to/mouth --pose_driving_path path/to/pose --save_path path/to/save

# example:

python demo_lip_pose_V.py --source_path res/results_by_facesr/demo_lip_pose5.png --audio_driving_path test_data/mouth_source.wav --lip_driving_path test_data/mouth_source.mp4 --pose_driving_path test_data/pose_source1.mp4 --save_path demo_lip_pose_V.mp4

Example results (Source Img | demo_lip_pose_V Results | + FaceSR; videos): demo_EDTalk_lip_pose_V.mp4 and demo_EDTalk_lip_pose_V_512.mp4; demo_EDTalk_lip_pose_V_lei.mp4 and demo_EDTalk_lip_pose_V_lei_512.mp4

python demo_change_a_video_lip.py --source_path path/to/video --audio_driving_path path/to/audio --save_path path/to/save

# example:

python demo_change_a_video_lip.py --source_path test_data/pose_source1.mp4 --audio_driving_path test_data/mouth_source.wav --save_path res/demo_change_a_video_lip.mp4

Example results (Source Img | results #1 | results #2; videos): pose_source1.mp4, demo_change_a_video_lip_512.mp4, demo_change_a_video_lip_teaser_512.mp4

python demo_EDTalk_V.py --source_path path/to/image --lip_driving_path path/to/lip --audio_driving_path path/to/audio --pose_driving_path path/to/pose --exp_driving_path path/to/expression --save_path path/to/save

# example:

python demo_EDTalk_V.py --source_path test_data/identity_source.jpg --lip_driving_path test_data/mouth_source.mp4 --audio_driving_path test_data/mouth_source.wav --pose_driving_path test_data/pose_source1.mp4 --exp_driving_path test_data/expression_source.mp4 --save_path res/demo_EDTalk_V.mp4

The result will be stored in save_path.

Optional face super-resolution upscales the output from 256 to 512 and addresses blurry rendering.

Please install the additional dependencies:

pip install facexlib

pip install tb-nightly -i https://mirrors.aliyun.com/pypi/simple

pip install gfpgan

Then enable the --face_sr option in your scripts. The first run will download the GFPGAN weights (optionally, you can download the GFPGAN checkpoints in advance and put them in the gfpgan/weights directory).

Here are some examples:

python demo_lip_pose.py --source_path path/to/image --audio_driving_path path/to/audio --pose_driving_path path/to/pose --save_path path/to/save --face_sr

python demo_EDTalk_V.py --source_path path/to/image --lip_driving_path path/to/lip --audio_driving_path path/to/audio --pose_driving_path path/to/pose --exp_driving_path path/to/expression --save_path path/to/save --face_sr

python demo_EDTalk_A_using_predefined_exp_weights.py --source_path path/to/image --audio_driving_path path/to/audio --pose_driving_path path/to/pose --exp_type type/of/expression --save_path path/to/save --face_sr

Example results (Source Img | EDTalk Results | EDTalk + FaceSR; videos): demo_lip_pose5.mp4 and demo_lip_pose5_512.mp4; demo_EDTalk_A.mp4 and demo_EDTalk_A_512.mp4; RD_Radio51_000.mp4 and RD_Radio51_000_512.mp4

Note: We use Obama and the paths on my machine (/data/ts/xxxxxx) as an example; replace them with your own paths:

- Download the Obama data from AD-NeRF and put it in '/data/ts/datasets/person_specific_dataset/AD-NeRF/video/Obama.mp4'.

- Crop the video and resample it to 25 fps:

python data_preprocess/crop_video.py --inp /data/ts/datasets/person_specific_dataset/AD-NeRF/video/Obama.mp4 --outp /data/ts/datasets/person_specific_dataset/AD-NeRF/video_crop/Obama.mp4

- Save the video as frames:

ffmpeg -i /data/ts/datasets/person_specific_dataset/AD-NeRF/video_crop/Obama.mp4 -r 25 -f image2 /data/ts/datasets/person_specific_dataset/AD-NeRF/video_crop_frame/Obama/%4d.png

- Start training:

python train_fine_tune.py --datapath /data/ts/datasets/person_specific_dataset/AD-NeRF/video_crop_frame/Obama --only_fine_tune_dec

Set --datapath to your own data. --only_fine_tune_dec trains only the dec module; in my experience, training only dec improves image quality, so we recommend it. You can also set it to False to fine-tune the full model. Check the saved samples frequently (at exp_path/exp_name/checkpoint; in my case, /data/ts/checkpoints/EDTalk/fine_tune/Obama/checkpoint) to pick the best model in time.
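The crop, frame-extraction, and training steps can be chained in one script. The sketch below is a hedged convenience wrapper under our own assumptions: it reuses the exact commands above with the example Obama paths (replace `ROOT` and `NAME` with your own), creates the frame directory before calling ffmpeg, and stops at the first failing step. `finetune_steps` and `run` are hypothetical helper names, not part of the repository.

```python
import subprocess
from pathlib import Path
from typing import List

# Example paths from the note above; replace them with your own.
ROOT = Path("/data/ts/datasets/person_specific_dataset/AD-NeRF")
NAME = "Obama"


def finetune_steps(root: Path, name: str, only_dec: bool = True) -> List[List[str]]:
    """Return the crop, frame-extraction and training commands in order."""
    raw = root / "video" / f"{name}.mp4"
    cropped = root / "video_crop" / f"{name}.mp4"
    frames = root / "video_crop_frame" / name
    train = ["python", "train_fine_tune.py", "--datapath", str(frames)]
    if only_dec:
        train.append("--only_fine_tune_dec")  # recommended: fine-tune only the dec module
    return [
        # Crop the video and resample it to 25 fps.
        ["python", "data_preprocess/crop_video.py", "--inp", str(raw), "--outp", str(cropped)],
        # Save the cropped video as numbered PNG frames (same %4d pattern as above).
        ["ffmpeg", "-i", str(cropped), "-r", "25", "-f", "image2", str(frames / "%4d.png")],
        # Fine-tune on the extracted frames.
        train,
    ]


def run(root: Path, name: str, only_dec: bool = True) -> None:
    # Create the frame directory up front in case it does not exist yet.
    (root / "video_crop_frame" / name).mkdir(parents=True, exist_ok=True)
    for cmd in finetune_steps(root, name, only_dec):
        subprocess.run(cmd, check=True)  # raise on the first failing step


if __name__ == "__main__":
    # Print the commands; call run(ROOT, NAME) to execute them.
    for cmd in finetune_steps(ROOT, NAME):
        print(" ".join(cmd))
```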

Fine-tuning samples (images): Step #0 | Step #100 | Step #200

In each sample, the first row is the source image, the second row is the driving image, and the third row is the generated result.

Data Preprocessing for Training

**Note**: The provided functions work, but you need to adjust how they are called, e.g., by modifying the data paths. If you run into any problems, feel free to open an issue!

- Download the MEAD and HDTF datasets:

1) **MEAD**. [download link](https://wywu.github.io/projects/MEAD/MEAD.html). We only use the *Front* videos; we extract their audio and organize the data as follows:

```text
/dir_path/MEAD_front/
|-- Original_video
| |-- M003#angry#level_1#001.mp4
| |-- M003#angry#level_1#002.mp4
| |-- ...
|-- audio
| |-- M003#angry#level_1#001.wav
| |-- M003#angry#level_1#002.wav
| |-- ...
```

2) **HDTF** (download link). We organize the data as follows:

/dir_path/HDTF/
|-- audios
| |-- RD_Radio1_000.wav
| |-- RD_Radio2_000.wav
| |-- ...
|-- original_videos
| |-- RD_Radio1_000.mp4
| |-- RD_Radio2_000.mp4
| |-- ...

- Crop the videos in the training datasets:

python data_preprocess/data_preprocess_for_train/crop_video_MEAD.py

python data_preprocess/data_preprocess_for_train/crop_video_HDTF.py

- Split videos: since HDTF videos are long, we split both the videos and the corresponding audio into 5 s segments:

python data_preprocess/data_preprocess_for_train/split_HDTF_video.py

python data_preprocess/data_preprocess_for_train/split_HDTF_audio.py

- We save the video frames in an LMDB file to improve I/O efficiency:

python data_preprocess/data_preprocess_for_train/prepare_lmdb.py

- Extract mel features from the audio:

python data_preprocess/data_preprocess_for_train/get_mel.py

- Extract landmarks from the cropped videos:

python data_preprocess/data_preprocess_for_train/extract_lmdk.py

- Extract bounding boxes from the cropped videos for the lip discriminator using extract_bbox.py; we provide an uncleaned example that uses LMDB:

python data_preprocess/data_preprocess_for_train/extract_bbox.py

- After preprocessing, the data should be organized as follows:

/dir_path/MEAD_front/
|-- Original_video
| |-- M003#angry#level_1#001.mp4
| |-- M003#angry#level_1#002.mp4
| |-- ...
|-- video
| |-- M003#angry#level_1#001.mp4
| |-- M003#angry#level_1#002.mp4
| |-- ...
|-- audio
| |-- M003#angry#level_1#001.wav
| |-- M003#angry#level_1#002.wav
| |-- ...
|-- bbox
| |-- M003#angry#level_1#001.npy
| |-- M003#angry#level_1#002.npy
| |-- ...
|-- landmark
| |-- M003#angry#level_1#001.npy
| |-- M003#angry#level_1#002.npy
| |-- ...
|-- mel
| |-- M003#angry#level_1#001.npy
| |-- M003#angry#level_1#002.npy
| |-- ...

/dir_path/HDTF/
|-- split_5s_video
| |-- RD_Radio1_000#1.mp4
| |-- RD_Radio1_000#2.mp4
| |-- ...
|-- split_5s_audio
| |-- RD_Radio1_000#1.wav
| |-- RD_Radio1_000#2.wav
| |-- ...
|-- bbox
| |-- RD_Radio1_000#1.npy
| |-- RD_Radio1_000#2.npy
| |-- ...
|-- landmark
| |-- RD_Radio1_000#1.npy
| |-- RD_Radio1_000#2.npy
| |-- ...
|-- mel
| |-- RD_Radio1_000#1.npy
| |-- RD_Radio1_000#2.npy
| |-- ...
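Before training, it can be worth confirming that every clip has all of its companion files. The sketch below is a hypothetical checker based only on the directory trees above: for each video in `video/` (MEAD) or `split_5s_video/` (HDTF), it looks for a file with the same stem in each sibling folder and reports what is missing. The folder-to-extension mapping is read off the trees; `missing_files` is our own name, and `/dir_path` must be replaced with your actual location.

```python
from pathlib import Path
from typing import Dict, List

# Sub-folder -> file extension for each sample, following the trees above.
MEAD_LAYOUT = {"video": ".mp4", "audio": ".wav", "bbox": ".npy", "landmark": ".npy", "mel": ".npy"}
HDTF_LAYOUT = {"split_5s_video": ".mp4", "split_5s_audio": ".wav",
               "bbox": ".npy", "landmark": ".npy", "mel": ".npy"}


def missing_files(root: Path, layout: Dict[str, str], video_dir: str) -> Dict[str, List[str]]:
    """For every clip in `video_dir`, list the sub-folders lacking its companion file."""
    report: Dict[str, List[str]] = {}
    for clip in sorted((root / video_dir).glob("*.mp4")):
        stem = clip.stem  # e.g. "M003#angry#level_1#001" or "RD_Radio1_000#1"
        absent = [sub for sub, ext in layout.items()
                  if not (root / sub / f"{stem}{ext}").exists()]
        if absent:
            report[stem] = absent
    return report


if __name__ == "__main__":
    print(missing_files(Path("/dir_path/MEAD_front"), MEAD_LAYOUT, "video"))
    print(missing_files(Path("/dir_path/HDTF"), HDTF_LAYOUT, "split_5s_video"))
```

An empty dictionary for both datasets means every clip has its audio, bbox, landmark, and mel files.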

Start Training

- Pretrain Encoder: please refer to [LIA](https://github.com/wyhsirius/LIA) to train from scratch.

- (Optional) To speed up convergence, you can download the pre-trained model of [LIA](https://github.com/wyhsirius/LIA).

- We provide training code to fine-tune the model on the MEAD and HDTF datasets:

```bash

python -m torch.distributed.launch --nproc_per_node=2 --master_port 12345 train/train_E_G.py

```

- Train Mouth-Pose Decouple module:

python -m torch.distributed.launch --nproc_per_node=2 --master_port 12344 train/train_Mouth_Pose_decouple.py

- Train Audio2Mouth module:

python -m torch.distributed.launch --nproc_per_node=2 --master_port 12344 train/train_audio2mouth.py
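The three training commands above can be run back to back. The sketch below strings them together under our own assumptions: the stages run in the order listed in this section, on two GPUs, with the same master ports as above. It uses the same `torch.distributed.launch` module as the original commands and only prints them unless you call `run_stages` yourself. It does not handle passing checkpoints from one stage to the next, which the training scripts configure themselves; `STAGES`, `launch_command`, and `run_stages` are hypothetical names.

```python
import subprocess
import sys
from typing import List, Tuple

# (script, master port) in the order listed in this section.
STAGES: List[Tuple[str, int]] = [
    ("train/train_E_G.py", 12345),
    ("train/train_Mouth_Pose_decouple.py", 12344),
    ("train/train_audio2mouth.py", 12344),
]


def launch_command(script: str, port: int, gpus: int = 2) -> List[str]:
    """Same shape as the commands above, using the current interpreter."""
    return [
        sys.executable, "-m", "torch.distributed.launch",
        f"--nproc_per_node={gpus}", "--master_port", str(port), script,
    ]


def run_stages(gpus: int = 2) -> None:
    for script, port in STAGES:
        # check=True aborts the chain if a stage exits with an error.
        subprocess.run(launch_command(script, port, gpus), check=True)


if __name__ == "__main__":
    for script, port in STAGES:
        print(" ".join(launch_command(script, port)))
```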

We hope more people can get involved, and we will promptly handle pull requests. Several tasks still need help, such as creating a Colab notebook, a web UI, and translation work, among others.

[ICCV 23] EMMN: Emotional Motion Memory Network for Audio-driven Emotional Talking Face Generation

[AAAI 24] Style2Talker: High-Resolution Talking Head Generation with Emotion Style and Art Style

[AAAI 24] Say Anything with Any Style

@inproceedings{tan2024edtalk,
  title = {EDTalk: Efficient Disentanglement for Emotional Talking Head Synthesis},
  author = {Tan, Shuai and Ji, Bin and Bi, Mengxiao and Pan, Ye},
  booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
  year = {2024}
}

Some code is borrowed from the following projects:

Some figures in the paper are inspired by:

Thanks for these great projects.