24/Sep./2022: We are pleased to announce that our total prize will be up to 4000 CHF (4000 USD) for our two challenges (hand, action).

15/Aug./2022: Our challenges are open here (hand, action). The 1st phase of the challenges will close on October 1st, 2022.

9/May/2022: The download script (download_script.py) is uploaded.

8/May/2022: Labels for hand pose and object pose are released. Please check https://h2odataset.ethz.ch

4/May/2022: The H2O dataset will be part of the workshop, Human Body, Hands, and Activities from Egocentric and Multi-view Cameras@ECCV2022. Please check our workshop site (https://sites.google.com/view/egocentric-hand-body-activity) and our challenges.

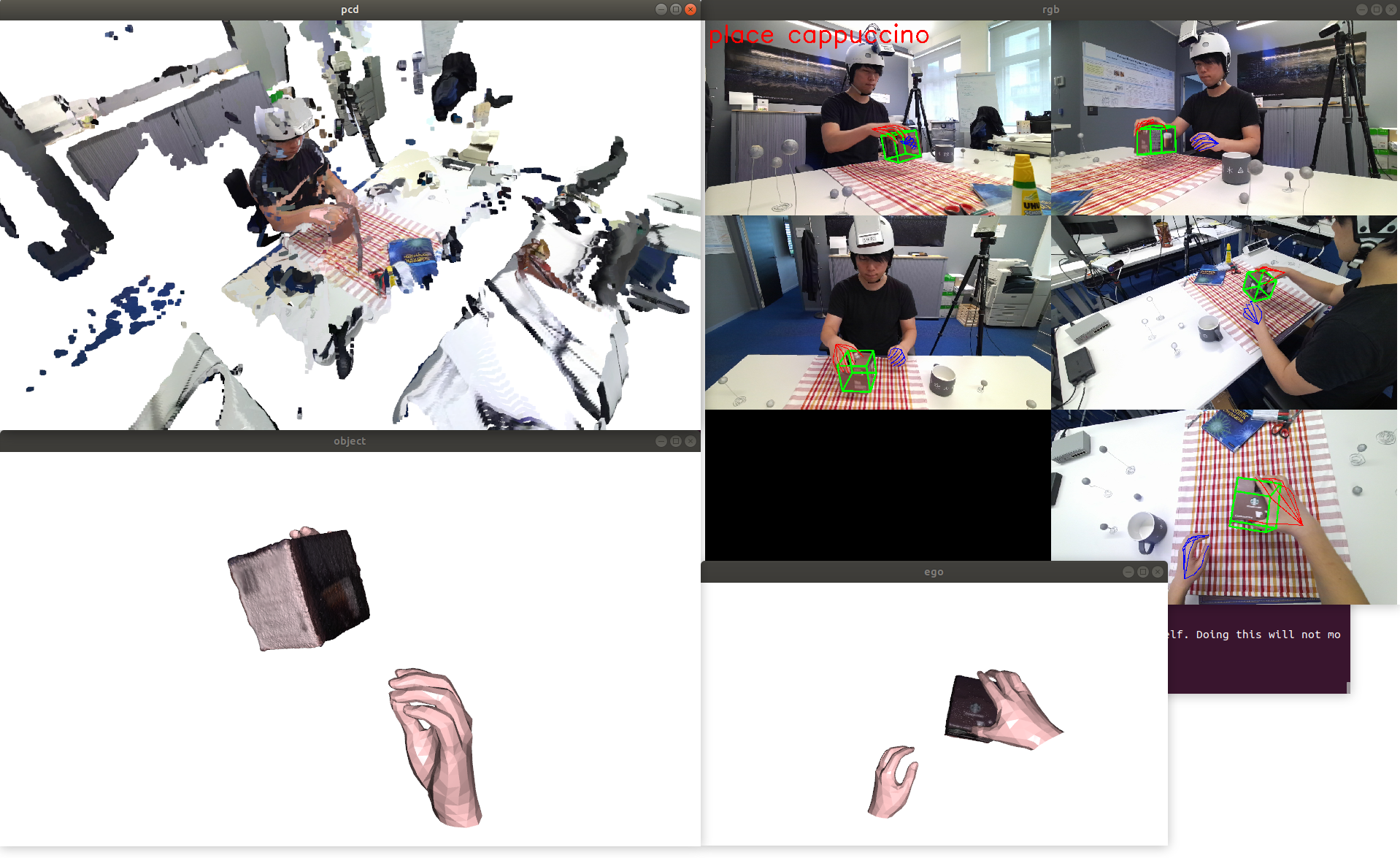

12/Mar./2022: Visualization code is published. https://github.com/taeinkwon/h2oplayer

11/Mar./2022: Updated new labels to the existing files.

8/Dec./2021: Please download the new labels "manolabel_v1.1.tar.gz" from https://h2odataset.ethz.ch for MANO parameters. We will also update the existing files soon.

Please check the visualization code in the following link: https://github.com/taeinkwon/h2oplayer.


- python3

- tqdm

- pathlib

- requests

- argparse

Once you receive the username and password from the download page (https://h2odataset.ethz.ch), you can either download directly from that page or use download_script.py.

python download_script.py --username "username" --password "password" --mode "type of view" --dest "dest folder path"

We provide three different modes (views):

- all: download the entire dataset, including all five views (four fixed views, one egocentric view).

- ego: download only egocentric-view data.

- pose: download only pose (hand, object, egocentric view) without RGB-D images.
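For scripted downloads, the command above can be assembled programmatically. The following is a minimal sketch that uses only the flags and mode names listed above; `build_download_command` is a hypothetical helper (not part of download_script.py), and it assumes the script sits in the current working directory.

```python
import sys

# Modes documented above for download_script.py.
VALID_MODES = ("all", "ego", "pose")


def build_download_command(username, password, mode, dest):
    """Return the argument list for invoking download_script.py.

    Hypothetical helper: it only assembles the documented flags and
    rejects modes that are not listed in this README.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    return [
        sys.executable, "download_script.py",
        "--username", username,
        "--password", password,
        "--mode", mode,
        "--dest", str(dest),
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` to start the download.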

    .
    ├── h1
    │   ├── 0
    │   │   ├── cam0
    │   │   │   ├── rgb
    │   │   │   ├── depth
    │   │   │   ├── cam_pose
    │   │   │   ├── hand_pose
    │   │   │   ├── hand_pose_MANO
    │   │   │   ├── obj_pose
    │   │   │   ├── obj_pose_RT
    │   │   │   ├── action_label (only in cam4)
    │   │   │   ├── rgb256 (only in cam4)
    │   │   │   ├── verb_label
    │   │   │   └── cam_intrinsics.txt
    │   │   ├── cam1
    │   │   ├── cam2
    │   │   ├── cam3
    │   │   └── cam4
    │   ├── 1
    │   ├── 2
    │   ├── 3
    │   └── ...
    ├── h2
    ├── k1
    ├── k2
    └── ...

cam0 ~ cam3 are fixed cameras. cam4 is a head-mounted camera (egocentric view).
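The directory layout above maps naturally onto path construction. This is a minimal sketch under the assumption that the tree is rooted at a per-subject folder; `modality_dir` and `camera_dirs` are illustrative names, and the naming of the files inside each folder is deliberately left out because it is not specified here.

```python
from pathlib import Path

FIXED_CAMERAS = ["cam0", "cam1", "cam2", "cam3"]  # fixed views
EGO_CAMERA = "cam4"                               # head-mounted view


def modality_dir(root, scene, sequence, camera, modality):
    """Return <root>/<scene>/<sequence>/<camera>/<modality> as a Path."""
    return Path(root) / scene / str(sequence) / camera / modality


def camera_dirs(root, scene, sequence, ego_only=False):
    """List the camera folders of one sequence (all five, or only cam4)."""
    cams = [EGO_CAMERA] if ego_only else FIXED_CAMERAS + [EGO_CAMERA]
    return [Path(root) / scene / str(sequence) / cam for cam in cams]
```

For example, `modality_dir("subject1", "h1", 0, "cam4", "hand_pose")` points at the egocentric hand pose folder of the first `h1` sequence, assuming the data was extracted into `subject1`.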

train_sequences = ['subject1/h1', 'subject1/h2', 'subject1/k1', 'subject1/k2', 'subject1/o1', 'subject1/o2', 'subject2/h1', 'subject2/h2', 'subject2/k1', 'subject2/k2', 'subject2/o1', 'subject2/o2', 'subject3/h1', 'subject3/h2', 'subject3/k1'] (subject 1,2,3)

val_sequences = ['subject3/k2', 'subject3/o1', 'subject3/o2'] (subject 3)

test_sequences = ['subject4/h1', 'subject4/h2', 'subject4/k1', 'subject4/k2', 'subject4/o1', 'subject4/o2'] (subject4)
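As a quick sanity check on the split definition, the lists can be verified to be disjoint and grouped by subject. The sequence names are copied from the lists above; `subjects_of` and `splits_are_disjoint` are illustrative helper names.

```python
train_sequences = [
    'subject1/h1', 'subject1/h2', 'subject1/k1', 'subject1/k2', 'subject1/o1', 'subject1/o2',
    'subject2/h1', 'subject2/h2', 'subject2/k1', 'subject2/k2', 'subject2/o1', 'subject2/o2',
    'subject3/h1', 'subject3/h2', 'subject3/k1',
]
val_sequences = ['subject3/k2', 'subject3/o1', 'subject3/o2']
test_sequences = ['subject4/h1', 'subject4/h2', 'subject4/k1',
                  'subject4/k2', 'subject4/o1', 'subject4/o2']


def subjects_of(sequences):
    """Return the sorted set of subject names appearing in a split."""
    return sorted({name.split("/")[0] for name in sequences})


def splits_are_disjoint(*splits):
    """True if no sequence appears in more than one split."""
    merged = [name for split in splits for name in split]
    return len(merged) == len(set(merged))
```

Note that subject 3 contributes to both the training and validation splits, while subject 4 is held out entirely for testing.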

1280 × 720 resolution RGB images

455 × 256 resolution resized RGB images

1280 × 720 resolution depth images

cam_to_world rotation matrix

16 numbers, 4x4 camera matrix
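The 16 numbers can be reshaped into a 4x4 matrix and applied to a 3D point. A minimal sketch, assuming the values are stored in row-major order and that the matrix maps camera coordinates to world coordinates, as the cam_to_world name suggests; `parse_matrix4x4` and `transform_point` are illustrative names, not part of the dataset tooling.

```python
def parse_matrix4x4(values):
    """Reshape 16 numbers into a 4x4 nested list (row-major is assumed)."""
    if len(values) != 16:
        raise ValueError(f"expected 16 values, got {len(values)}")
    return [list(values[4 * r:4 * r + 4]) for r in range(4)]


def transform_point(matrix, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    return tuple(
        sum(matrix[r][c] * homogeneous[c] for c in range(4))
        for r in range(3)
    )
```

If the stored order turns out to be column-major, transposing the parsed matrix before use is the only change needed.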

cam_to_hand

1 (annotation flag, 0: not annotated, 1: annotated) + 21 * 3 (x, y, z in order) for the left hand, followed by 1 + 21 * 3 for the right hand

The first 64 numbers belong to the left hand. The next 64 numbers belong to the right hand.
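The 128-value layout described above can be unpacked as follows. This is a sketch that works on an already loaded list of numbers; `parse_hand_pose` is an illustrative name, and how the values are read from disk is left open.

```python
NUM_JOINTS = 21
PER_HAND = 1 + NUM_JOINTS * 3  # annotation flag + 21 (x, y, z) joints = 64


def parse_hand_pose(values):
    """Split 128 numbers into left and right hand annotations."""
    if len(values) != 2 * PER_HAND:
        raise ValueError(f"expected {2 * PER_HAND} values, got {len(values)}")
    hands = {}
    for index, side in enumerate(("left", "right")):
        chunk = values[index * PER_HAND:(index + 1) * PER_HAND]
        joints = [tuple(chunk[1 + 3 * j:4 + 3 * j]) for j in range(NUM_JOINTS)]
        hands[side] = {"annotated": int(chunk[0]) == 1, "joints": joints}
    return hands
```

Checking the `annotated` flag before using the joints avoids treating placeholder values of an unannotated hand as real coordinates.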

1 (annotation flag, 0: not annotated, 1: annotated) + 3 translation values + 48 pose values + 10 shape values for the left hand, followed by 1 + 3 + 48 + 10 for the right hand

The first 62 numbers belong to the left hand. The next 62 numbers belong to the right hand.
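The MANO parameters can be unpacked the same way, following the per-hand breakdown listed above (1 flag + 3 translation + 48 pose + 10 shape values). A minimal sketch; `parse_mano` is an illustrative name and the input is assumed to be an already loaded list of numbers.

```python
TRANS, POSE, SHAPE = 3, 48, 10
PER_HAND = 1 + TRANS + POSE + SHAPE  # flag + translation + pose + shape


def parse_mano(values):
    """Split the MANO label into per-hand translation, pose, and shape."""
    if len(values) != 2 * PER_HAND:
        raise ValueError(f"expected {2 * PER_HAND} values, got {len(values)}")
    hands = {}
    for index, side in enumerate(("left", "right")):
        chunk = list(values[index * PER_HAND:(index + 1) * PER_HAND])
        hands[side] = {
            "annotated": int(chunk[0]) == 1,
            "trans": chunk[1:1 + TRANS],
            "pose": chunk[1 + TRANS:1 + TRANS + POSE],
            "shape": chunk[1 + TRANS + POSE:],
        }
    return hands
```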

cam_to_obj

1 (object class) + 21 * 3 (x, y, z in order)

21 points: 1 center, 8 corners, 12 edge midpoints.
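The object pose label can be split into its class ID and bounding-box keypoints. This sketch assumes the 21 points are stored in the order they are listed above (center first, then the 8 corners, then the 12 edge midpoints); `parse_obj_pose` is an illustrative name.

```python
NUM_POINTS = 21


def parse_obj_pose(values):
    """Split 1 + 21 * 3 numbers into class ID and 3D keypoints."""
    if len(values) != 1 + NUM_POINTS * 3:
        raise ValueError(f"expected {1 + NUM_POINTS * 3} values, got {len(values)}")
    points = [tuple(values[1 + 3 * i:4 + 3 * i]) for i in range(NUM_POINTS)]
    return {
        "class_id": int(values[0]),
        "center": points[0],            # assumed to come first
        "corners": points[1:9],         # assumed order: 8 corners next
        "edge_midpoints": points[9:],   # assumed order: 12 midpoints last
    }
```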

0 background (no object)

1 book

2 espresso

3 lotion

4 spray

5 milk

6 cocoa

7 chips

8 cappuccino

1 (object class) + 16 numbers, 4x4 camera matrix
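The 17-value label can be separated into a class ID, a 3x3 rotation block, and a translation vector. This is a sketch under two assumptions that are not stated above: the 16 matrix values are row-major, and the matrix has the usual rigid-transform layout with the translation in the last column. `parse_obj_pose_rt` is an illustrative name.

```python
def parse_obj_pose_rt(values):
    """Split 1 + 16 numbers into (class_id, rotation, translation)."""
    if len(values) != 17:
        raise ValueError(f"expected 17 values, got {len(values)}")
    class_id = int(values[0])
    # Rows of the 4x4 matrix (row-major order is an assumption).
    matrix = [list(values[1 + 4 * r:5 + 4 * r]) for r in range(4)]
    rotation = [row[:3] for row in matrix[:3]]     # upper-left 3x3 block
    translation = [row[3] for row in matrix[:3]]   # last column, first 3 rows
    return class_id, rotation, translation
```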

0 background (no verb)

1 grab

2 place

3 open

4 close

5 pour

6 take out

7 put in

8 apply

9 read

10 spray

11 squeeze

Combination of a noun (object class) and a verb (verb label)

0 background

1 grab book

2 grab espresso

3 grab lotion

4 grab spray

5 grab milk

6 grab cocoa

7 grab chips

8 grab cappuccino

9 place book

10 place espresso

11 place lotion

12 place spray

13 place milk

14 place cocoa

15 place chips

16 place cappuccino

17 open lotion

18 open milk

19 open chips

20 close lotion

21 close milk

22 close chips

23 pour milk

24 take out espresso

25 take out cocoa

26 take out chips

27 take out cappuccino

28 put in espresso

29 put in cocoa

30 put in cappuccino

31 apply lotion

32 apply spray

33 read book

34 read espresso

35 spray spray

36 squeeze lotion
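Because each action label is a verb followed by a noun, an action ID can be decomposed back into its verb label and object class. The tables below are transcribed from the lists above; `split_action` is an illustrative helper name.

```python
OBJECTS = ["background", "book", "espresso", "lotion", "spray",
           "milk", "cocoa", "chips", "cappuccino"]
VERBS = ["background", "grab", "place", "open", "close", "pour",
         "take out", "put in", "apply", "read", "spray", "squeeze"]

# Verb -> nouns it is combined with, in the order of the action list.
_COMBINATIONS = [
    ("grab", OBJECTS[1:]),
    ("place", OBJECTS[1:]),
    ("open", ["lotion", "milk", "chips"]),
    ("close", ["lotion", "milk", "chips"]),
    ("pour", ["milk"]),
    ("take out", ["espresso", "cocoa", "chips", "cappuccino"]),
    ("put in", ["espresso", "cocoa", "cappuccino"]),
    ("apply", ["lotion", "spray"]),
    ("read", ["book", "espresso"]),
    ("spray", ["spray"]),
    ("squeeze", ["lotion"]),
]
ACTIONS = ["background"] + [
    f"{verb} {noun}" for verb, nouns in _COMBINATIONS for noun in nouns
]


def split_action(action_id):
    """Return (verb_id, object_id) for an action ID."""
    if action_id == 0:
        return 0, 0
    # The noun is always the last word; verbs may contain a space.
    verb, noun = ACTIONS[action_id].rsplit(" ", 1)
    return VERBS.index(verb), OBJECTS.index(noun)
```

For instance, `split_action(24)` resolves "take out espresso" to verb 6 and object 2.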

Train set file

Validation set file

Test set file

Train set file

Validation set file

Test set file

Six numbers: fx, fy, cx, cy, width, height
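With these six values, a 3D point in camera coordinates can be projected to pixel coordinates. The sketch below assumes an ideal pinhole model without lens distortion and a camera frame with z pointing forward; `parse_intrinsics`, `project`, and `in_image` are illustrative names, and the numbers in the usage note are made up for illustration.

```python
def parse_intrinsics(values):
    """Map the six numbers to named intrinsics."""
    if len(values) != 6:
        raise ValueError(f"expected 6 values, got {len(values)}")
    fx, fy, cx, cy, width, height = values
    return {"fx": fx, "fy": fy, "cx": cx, "cy": cy,
            "width": int(width), "height": int(height)}


def project(point, intrinsics):
    """Project a camera-frame 3D point to (u, v) with the pinhole model."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    u = intrinsics["fx"] * x / z + intrinsics["cx"]
    v = intrinsics["fy"] * y / z + intrinsics["cy"]
    return u, v


def in_image(u, v, intrinsics):
    """True if the pixel lies inside the image bounds."""
    return 0 <= u < intrinsics["width"] and 0 <= v < intrinsics["height"]
```

With hypothetical intrinsics `[600, 600, 640, 360, 1280, 720]`, a point on the optical axis such as `(0, 0, 1)` projects to the principal point `(640, 360)`.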

If you find this dataset useful, please consider citing:

@InProceedings{Kwon_2021_ICCV,

author = {Kwon, Taein and Tekin, Bugra and St\"uhmer, Jan and Bogo, Federica and Pollefeys, Marc},

title = {H2O: Two Hands Manipulating Objects for First Person Interaction Recognition},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2021},

pages = {10138-10148}

}