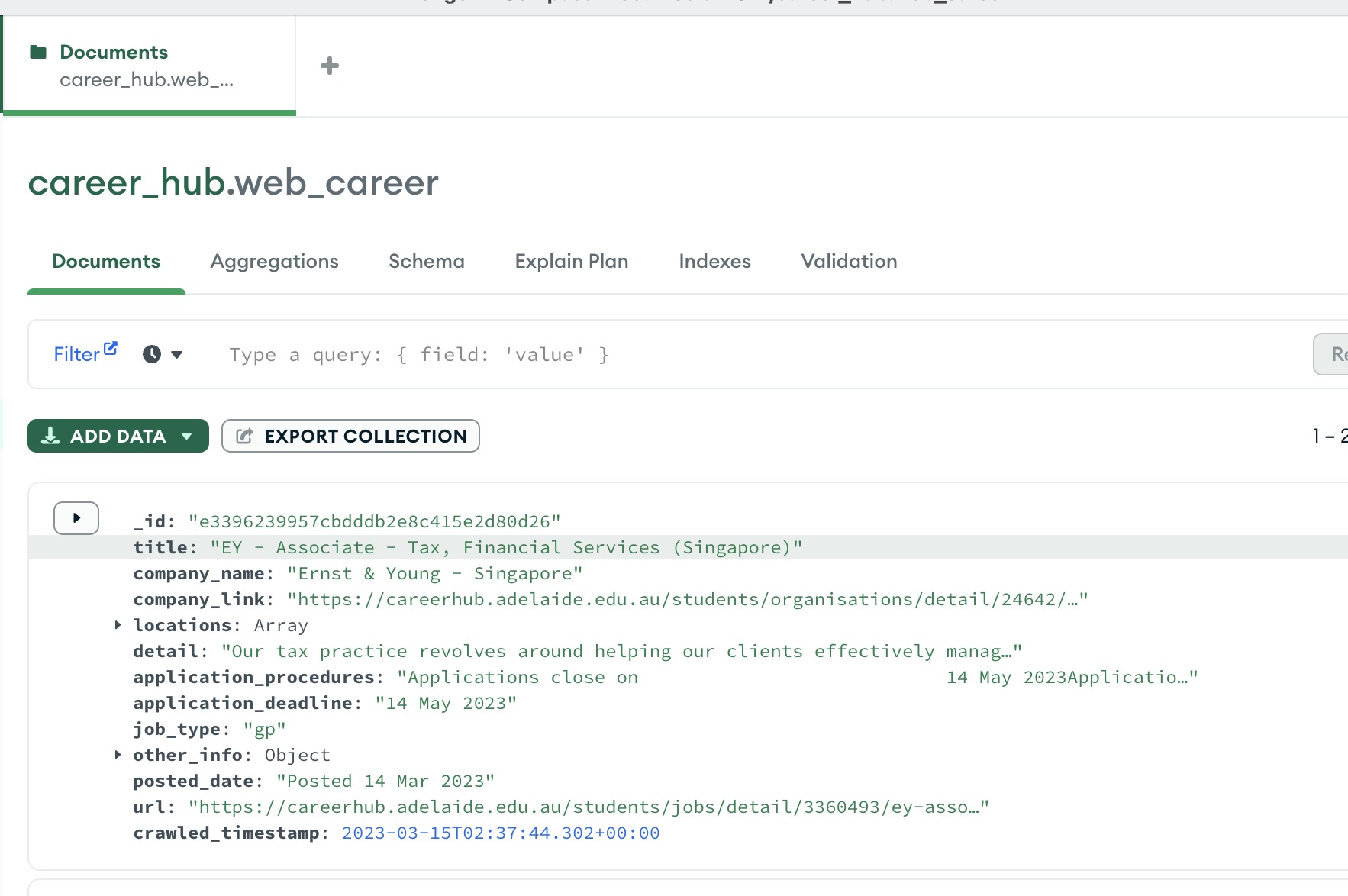

This repository contains a Scrapy project that scrapes job postings from the University of Adelaide Careerhub and stores the results in a MongoDB database. It is designed to scrape hundreds of pages in a matter of minutes and includes Docker support to simplify setup and execution.
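The repository's actual MongoDB pipeline is not reproduced in this README. The following is a minimal sketch of how a Scrapy item pipeline could store postings. It assumes pymongo is installed, and it uses a hypothetical `MongoPipeline` class, hypothetical `MONGO_URI`/`MONGO_DATABASE` setting names, a hypothetical `jobs` collection, and items that carry a `url` field:

```python
from typing import Any, Optional


class MongoPipeline:
    """Hypothetical pipeline: upsert each scraped job posting into MongoDB."""

    collection_name = "jobs"  # hypothetical collection name

    def __init__(self, mongo_uri: str, mongo_db: str) -> None:
        self.mongo_uri = mongo_uri
        self.mongo_db = mongo_db
        self.client: Optional[Any] = None
        self.collection: Optional[Any] = None

    @classmethod
    def from_crawler(cls, crawler):
        # MONGO_URI and MONGO_DATABASE are assumed setting names, not the project's.
        return cls(
            mongo_uri=crawler.settings.get("MONGO_URI", "mongodb://localhost:27017"),
            mongo_db=crawler.settings.get("MONGO_DATABASE", "careerhub"),
        )

    def open_spider(self, spider) -> None:
        # Imported lazily so the class can be constructed without pymongo installed.
        import pymongo

        self.client = pymongo.MongoClient(self.mongo_uri)
        self.collection = self.client[self.mongo_db][self.collection_name]

    def close_spider(self, spider) -> None:
        if self.client is not None:
            self.client.close()

    def process_item(self, item, spider):
        doc = dict(item)
        # Key on the job URL so re-running the spider updates postings
        # instead of inserting duplicates.
        self.collection.update_one({"url": doc["url"]}, {"$set": doc}, upsert=True)
        return item
```

A pipeline like this would be enabled through Scrapy's `ITEM_PIPELINES` setting, for example `{"career_hub.pipelines.MongoPipeline": 300}`, where the module path is likewise an assumption. Upserting on the URL is one design choice among several; it keeps repeated crawls idempotent.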

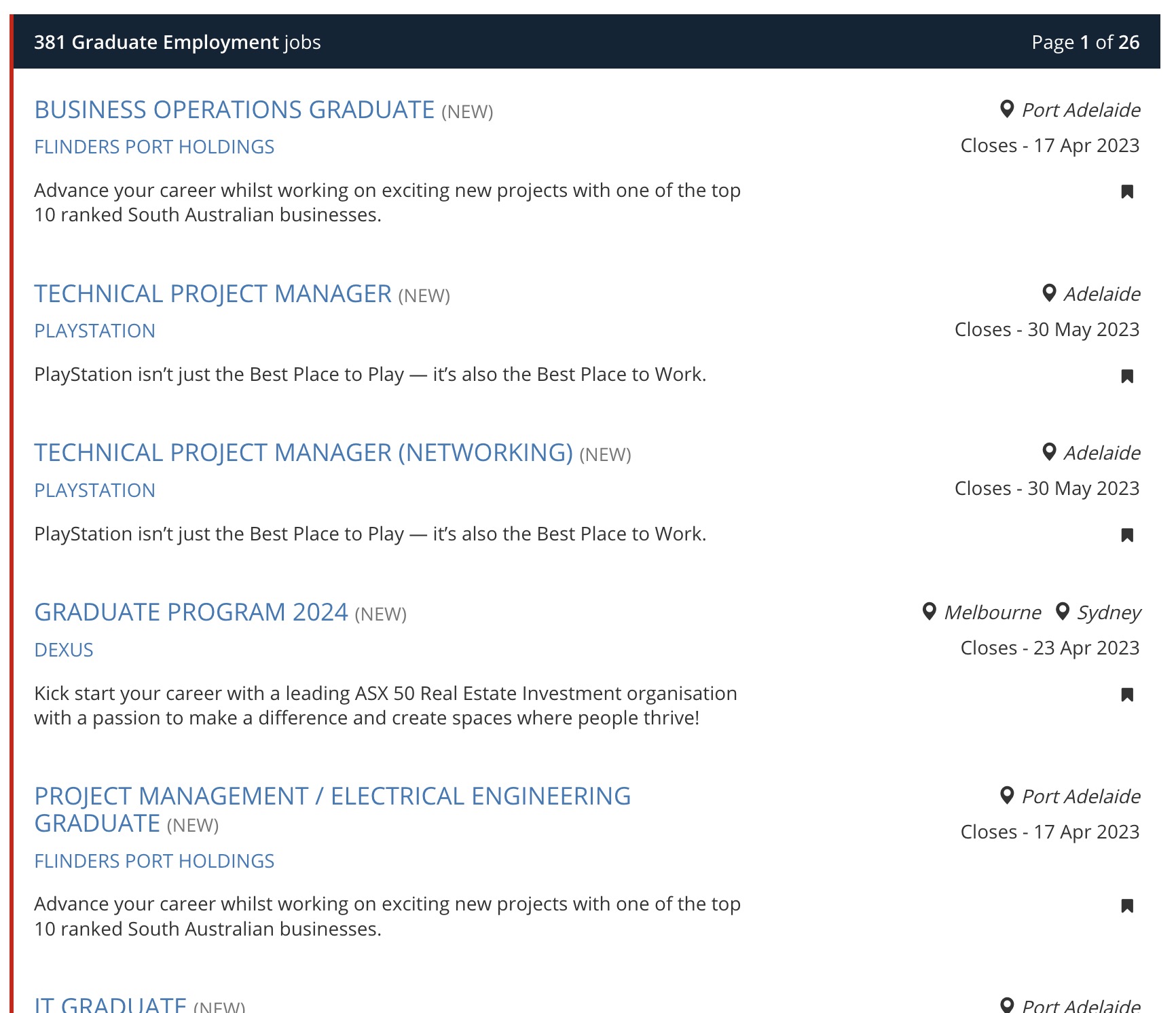

The spider navigates the two main job channels, graduate-employment and internship, to collect job detail links. It then extracts relevant information, such as open and close dates, from each job detail page.
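The two steps above, collecting detail links from a channel page and pulling dates out of a detail page, can be sketched with the standard library alone. In the real project this logic would live in Scrapy callbacks using selectors. The `/jobs/` link marker and the "Open date: 1 March 2023" label format are assumptions about Careerhub's markup, not confirmed details, and `JobLinkParser`/`parse_dates` are hypothetical names:

```python
import re
from datetime import date, datetime
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Assumption: job detail links contain this path fragment.
DETAIL_LINK_MARKER = "/jobs/"


class JobLinkParser(HTMLParser):
    """Collect unique hrefs on a channel listing page that look like job detail links."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and DETAIL_LINK_MARKER in href and href not in self.links:
            self.links.append(href)


# Assumption: detail pages label dates like "Open date: 1 March 2023".
DATE_RE = re.compile(r"(Open|Close) date:\s*(\d{1,2} [A-Za-z]+ \d{4})")


def parse_dates(text: str) -> Dict[str, Optional[date]]:
    """Return the open and close dates found in a job detail page's text."""
    dates: Dict[str, Optional[date]] = {"open": None, "close": None}
    for label, value in DATE_RE.findall(text):
        dates[label.lower()] = datetime.strptime(value, "%d %B %Y").date()
    return dates
```

Dates the regex does not find stay `None`, so a posting with a missing close date is still stored rather than dropped.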

Be aware: because the Careerhub UI has changed, the XML-parsing code may be broken.

- Web examples

- Getting Started

- Docker Setup

- Local Python Environment

- Running the Spider

- Contributing

- License

To get started, clone the repository:

```shell
git clone https://github.com/username/careerhub_scrape.git
```

To use Docker, ensure that you have Docker and Docker Compose installed. Build the Docker environment and start the containers with the following commands:

```shell
docker-compose build
docker-compose up
```

Alternatively, you can use the provided Makefile commands:

```shell
make build
make up
```

Create a `mongodb-data/` folder in the root directory; MongoDB will use it to store its data.
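Once the containers are up, it can help to confirm MongoDB is reachable before running the spider. This is a minimal stdlib sketch using a hypothetical `wait_for_port` helper. It assumes MongoDB's default port 27017 is published to `localhost`, which depends on the repository's `docker-compose.yml` and is not confirmed here:

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)
```

For example, `wait_for_port("localhost", 27017)` returns `True` once the port accepts connections. It only checks that the port is open, not that MongoDB has finished starting up or that authentication works.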

To set up a local Python environment with conda, use the provided environment.yml file with the following command:

```shell
conda env create -f environment.yml
```

Alternatively, you can use the provided Makefile command:

```shell
make build_python_env
```

Because Careerhub sits within the university network, you must be a University of Adelaide student. Put your student ID and password in a file named `.secrets` in the root directory; they will be read in `settings.py`:

Example of `.secrets`:

```shell
# Careerhub login credentials, exported as environment variables
export USERNAME="your student ID"
export PASSWORD="your password"
```

To start the spider, use the following command:

```shell
source .secrets && cd career_hub && python main.py
```

Alternatively, you can use the provided Makefile command:

```shell
make run
```
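The `.secrets` file only exports environment variables, so `settings.py` has to read them from the process environment. They are only visible to `main.py` if the file was sourced in the same shell. The repository's settings code is not shown here. The following is a minimal sketch using a hypothetical `load_credentials` helper that fails fast when the variables are missing:

```python
import os
from typing import Mapping, Tuple


def load_credentials(env: Mapping[str, str] = os.environ) -> Tuple[str, str]:
    """Read the USERNAME and PASSWORD exported by `.secrets` (hypothetical helper)."""
    username = env.get("USERNAME")
    password = env.get("PASSWORD")
    if not username or not password:
        # Most likely cause: `source .secrets` was not run in this shell.
        raise RuntimeError("USERNAME and PASSWORD are not set; run `source .secrets` first")
    return username, password
```

In `settings.py`, a line such as `USERNAME, PASSWORD = load_credentials()` would give a clear error instead of a failed login. Note that Windows sets a `USERNAME` variable by default, so on that platform a missing `.secrets` may not be caught by this check.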

If you would like to contribute to the project, please fork the repository, make your changes, and submit a pull request.

This project is licensed under the MIT License - see the LICENSE file for details.