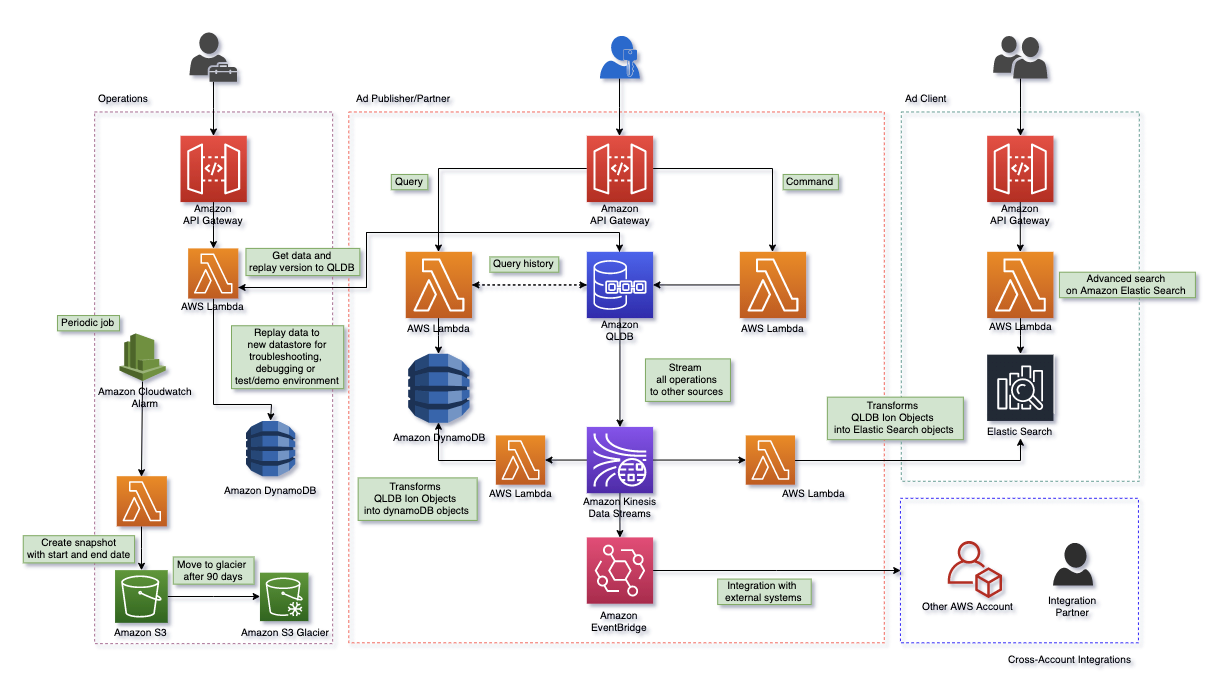

This is an example implementation of a System of Record for a Classified Ads platform. This example follows an Event Sourcing and CQRS (Command Query Responsibility Segregation) pattern using Amazon QLDB as an immutable append-only event store and source of truth.

For this specific use case we have focused on the backend services only.

Event Sourcing is a pattern that typically introduces two concepts: an Event Store, where all events are tracked, and a State Store, where the latest state of each object is stored. While this adds some complexity to the architecture, it has advantages such as:

- Auditing: Events are immutable and each state change corresponds to one or more events stored on the event store.

- Replay events: in case of application failure, we are able to reconstruct the state of an entity by replaying all the events since the event store maintains the complete history of every single entity.

- Temporal queries: we can determine the application state at any point in time very easily. This can be achieved by starting with a blank state store and replaying the events up to a specific point in time.

- Extensibility: The event store raises events, and tasks perform operations in response to those events. This decoupling of the tasks from events allows more flexibility and extensibility.
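The replay and temporal-query ideas above can be illustrated with a small in-memory sketch. The event names, fields and the `replay` helper are hypothetical and are not taken from this project; the sketch also assumes events are stored in chronological order, as they would be in an append-only store.

```typescript
// Hypothetical event shapes for a classified ad (illustrative only).
type AdEvent =
  | { type: "AdCreated"; adId: string; at: number; title: string; price: number }
  | { type: "AdUpdated"; adId: string; at: number; title?: string; price?: number }
  | { type: "AdDeleted"; adId: string; at: number };

interface AdState {
  adId: string;
  title: string;
  price: number;
  version: number;
}

// Apply a single event to the current state (null means the ad does not exist).
function applyEvent(state: AdState | null, event: AdEvent): AdState | null {
  if (event.type === "AdCreated") {
    return { adId: event.adId, title: event.title, price: event.price, version: 1 };
  }
  if (event.type === "AdUpdated") {
    if (state === null) return null; // ignore updates to a missing ad
    return {
      adId: state.adId,
      title: event.title !== undefined ? event.title : state.title,
      price: event.price !== undefined ? event.price : state.price,
      version: state.version + 1,
    };
  }
  return null; // AdDeleted
}

// Rebuild one ad by replaying its events in order. Passing `upTo`
// stops the replay at a point in time, which gives a temporal query.
function replay(events: AdEvent[], adId: string, upTo?: number): AdState | null {
  let state: AdState | null = null;
  for (const event of events) {
    if (event.adId !== adId) continue;
    if (upTo !== undefined && event.at > upTo) continue;
    state = applyEvent(state, event);
  }
  return state;
}

const history: AdEvent[] = [
  { type: "AdCreated", adId: "ad-1", at: 1, title: "awesome title", price: 10 },
  { type: "AdUpdated", adId: "ad-1", at: 5, price: 150 },
  { type: "AdDeleted", adId: "ad-1", at: 9 },
];
const stateAtFive = replay(history, "ad-1", 5); // price 150, version 2
const latestState = replay(history, "ad-1");    // null, the ad was deleted
```

Because the state is always derived from the events, a lost state store can be rebuilt by running the same replay over every entity.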

In traditional data management systems, both commands and queries are executed against the same data store. This introduces some challenges for applications with a large customer base, such as an increased risk of data contention and additional complexity in managing security and running routine operations. The CQRS (Command Query Responsibility Segregation) pattern aims to solve these issues by segregating read and write operations, which has some clear benefits:

- Independent scaling: CQRS allows the read and write workloads to scale independently, which may result in less lock contention and optimized costs.

- Optimized data schemas: The read side can use a schema optimized for queries, while the write side uses a schema optimized for updates.

- Security: It's easier to ensure that only the right entity has access to perform reads or writes on the correct data store.

- Separation of concerns: Models will be more maintainable and flexible. Most of the complex business logic goes into the write model.

- Simpler queries: By storing a materialized view in the read database the application can avoid complex joins that are compute intensive and potentially more expensive.
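As a rough illustration of the separation described above, the sketch below keeps a write side that only appends changes and a read side that maintains a materialized view shaped for one query. All class and field names are hypothetical, and both stores are in-memory stand-ins rather than the services used in this project.

```typescript
// Hypothetical ad model and change records (illustrative only).
interface Ad {
  adId: string;
  publisherId: string;
  title: string;
  price: number;
}

type AdChange =
  | { kind: "upsert"; ad: Ad }
  | { kind: "delete"; adId: string; publisherId: string };

// Write side: validates commands and appends changes to an append-only log.
class AdCommandService {
  private log: AdChange[] = [];
  private subscribers: Array<(change: AdChange) => void> = [];

  subscribe(handler: (change: AdChange) => void): void {
    this.subscribers.push(handler);
  }

  publishAd(ad: Ad): void {
    if (ad.price < 0) throw new Error("price must not be negative");
    this.append({ kind: "upsert", ad: ad });
  }

  deleteAd(publisherId: string, adId: string): void {
    this.append({ kind: "delete", adId: adId, publisherId: publisherId });
  }

  private append(change: AdChange): void {
    this.log.push(change);
    this.subscribers.forEach((notify) => notify(change));
  }
}

// Read side: a materialized view keyed by publisher, so listing ads needs no joins.
class AdQueryService {
  private adsByPublisher: { [publisherId: string]: Ad[] } = {};

  project(change: AdChange): void {
    const publisherId = change.kind === "upsert" ? change.ad.publisherId : change.publisherId;
    const adId = change.kind === "upsert" ? change.ad.adId : change.adId;
    const others = (this.adsByPublisher[publisherId] || []).filter((ad) => ad.adId !== adId);
    this.adsByPublisher[publisherId] = change.kind === "upsert" ? others.concat([change.ad]) : others;
  }

  listAds(publisherId: string): Ad[] {
    return this.adsByPublisher[publisherId] || [];
  }
}

// Wiring: every change appended on the write side is projected into the read model.
const commands = new AdCommandService();
const queries = new AdQueryService();
commands.subscribe((change) => queries.project(change));
```

In a real deployment the projection would run asynchronously, so the read model is eventually consistent with the write model.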

The architecture below shows all the components used in this example. Amazon QLDB was the obvious choice for this scenario since it is an immutable data store whose changes can be streamed as events, which makes the platform highly extensible and able to support virtually any type of integration (e.g. Elasticsearch for Client APIs or EventBridge for integration across AWS accounts).

- Visual Studio Code (install)

- AWS Toolkit for Visual Studio Code (install)

- Node.js (install)

- Serverless Framework (install)

Every component of this infrastructure is deployed automatically using AWS CDK. To deploy, follow these steps:

- Clone the project

      $ mkdir projects && cd projects
      $ git clone https://github.com/t1agob/eventsourcing-qldb.git eventsourcing-qldb

- Open the project in Visual Studio Code

      $ cd eventsourcing-qldb
      $ code .

-

Navigate to cdk folder and install dependencies

$ cd cdk $ npm install -

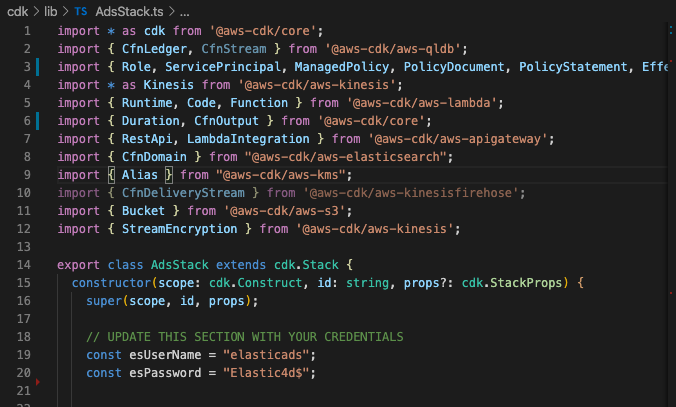

Update master username and password for ElasticSearch

  On lines 19 and 20 you will find the master username and password used as the credentials to access the Elasticsearch service. Replace these values with your own credentials.

  Elasticsearch requires the password to contain at least one uppercase letter, one lowercase letter, one number and one special character.

- Install project dependencies and package projects

  For CDK to deploy not only the required infrastructure but also the Lambda functions that do the actual work, the functions need to be packaged correctly.

  Run the following script from the current location to make sure all dependencies are installed and the projects are packaged.

      $ ./package-projects.sh

- Deploy with CDK

      $ cdk deploy

  The full deployment takes around 15 minutes to complete since this is a complex infrastructure, but once deployed everything should work right away.
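The password rule mentioned in the steps above can be checked locally before deploying. The helper below is a hypothetical sketch, not part of this project, and it assumes that "special character" means any character that is not an ASCII letter or digit.

```typescript
// Returns the requirements a candidate password does not meet (empty when it passes).
// Assumption: a "special character" is anything other than A-Z, a-z or 0-9.
function passwordProblems(password: string): string[] {
  const rules: Array<{ description: string; pattern: RegExp }> = [
    { description: "an uppercase letter", pattern: /[A-Z]/ },
    { description: "a lowercase letter", pattern: /[a-z]/ },
    { description: "a number", pattern: /[0-9]/ },
    { description: "a special character", pattern: /[^A-Za-z0-9]/ },
  ];
  return rules
    .filter((rule) => !rule.pattern.test(password))
    .map((rule) => rule.description);
}

const missing = passwordProblems("password"); // lists the three unmet requirements
```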

Since we only created the APIs for the services, there is no UI to test the scenario, so testing needs to be done by calling the APIs directly. For that you can use cURL, Postman or any other tool you prefer. I will use cURL for simplicity.

Make sure to replace the placeholders in the URL.

Create an ad:

    curl --location --request POST '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad' \
    --header 'Content-Type: application/json' \
    --data-raw '{
        "adTitle": "awesome title",
        "adDescription": "awesome description",
        "price": 10,
        "currency": "€",
        "category": "awesome category",
        "tags": [
            "awesome",
            "category"
        ]
    }'

Update an ad:

    curl --location --request PATCH '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad/[AD_ID]' \
    --header 'Content-Type: application/json' \
    --data-raw '{
        "adTitle": "awesome title",
        "adDescription": "awesome description",
        "price": 150,
        "currency": "€",
        "category": "awesome category",
        "tags": [
            "awesome",
            "category"
        ]
    }'

Delete an ad:

    curl --location --request DELETE '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad/[AD_ID]'

Get all ads of a publisher:

    curl --location --request GET '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad'

Get a single ad:

    curl --location --request GET '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad/[AD_ID]'

Get a single ad with its versions:

    curl --location --request GET '[API_ENDPOINT]/publisher/[PUBLISHER_ID]/ad/[AD_ID]?versions=true'

Search ads:

    curl --location --request GET '[API_ENDPOINT]/?q=[QUERY]'

- Add DynamoDB as the state store

  - Update internal GET operations to query DynamoDB instead of QLDB (for best practices and scalability purposes)

- Implement Event Sourcing features

  - Snapshot

    - Create a snapshot of the state store after a specific timeframe (e.g. every 1st day of each month)

  - Replay

    - Allow replay of a single entity within a specific time range. Start and end dates are optional.

    - Allow replay of all items - full state store loss

  - Snapshot

- Integration with EventBridge

To process the items being streamed from QLDB, we need to deaggregate them into separate Ion objects and convert them into the right format so we can push them to Elasticsearch and DynamoDB. For that we used the Kinesis Record Aggregation & Deaggregation Modules for AWS Lambda open source project from AWSLabs, taking as a reference the implementation created by Matt Lewis in QLDB Simple Demo.
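The step that follows deaggregation can be sketched as below. This is not the project's code: it assumes the records have already been deaggregated and converted from Ion to plain objects, it models only the fields it needs from a QLDB stream record, and it assumes that a revision without `data` represents a deleted document. The `toReadStoreActions` helper and the table name `Ads` are hypothetical.

```typescript
// Simplified shape of a QLDB stream record after deaggregation and Ion conversion (assumption).
interface StreamRecord {
  recordType: string; // "CONTROL", "BLOCK_SUMMARY" or "REVISION_DETAILS"
  payload?: {
    tableInfo?: { tableName: string };
    revision?: {
      data?: { [field: string]: unknown };
      metadata: { id: string; version: number };
    };
  };
}

// What the read stores (e.g. Elasticsearch, DynamoDB) should do for each revision.
type ReadStoreAction =
  | { action: "upsert"; id: string; version: number; document: { [field: string]: unknown } }
  | { action: "delete"; id: string; version: number };

// Keep only revision records of the given table and map each one to an upsert or a delete.
function toReadStoreActions(records: StreamRecord[], tableName: string): ReadStoreAction[] {
  const actions: ReadStoreAction[] = [];
  for (const record of records) {
    if (record.recordType !== "REVISION_DETAILS" || !record.payload) continue;
    const table = record.payload.tableInfo;
    const revision = record.payload.revision;
    if (!table || table.tableName !== tableName || !revision) continue;
    const id = revision.metadata.id;
    const version = revision.metadata.version;
    if (revision.data) {
      actions.push({ action: "upsert", id: id, version: version, document: revision.data });
    } else {
      // Assumption: a revision without data marks a deleted document.
      actions.push({ action: "delete", id: id, version: version });
    }
  }
  return actions;
}

const sampleActions = toReadStoreActions(
  [
    { recordType: "CONTROL" },
    {
      recordType: "REVISION_DETAILS",
      payload: {
        tableInfo: { tableName: "Ads" },
        revision: { data: { adTitle: "awesome title" }, metadata: { id: "doc-1", version: 0 } },
      },
    },
  ],
  "Ads"
);
```

Carrying the document version along with each action lets the read store ignore out-of-order or duplicate deliveries by skipping any version that is not newer than the one it already holds.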

If you feel that there is room for improvement in this project, or if you find a bug, please raise an issue or submit a PR.