BEVFormer

The official implementation of the paper "BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers".

Authors: Zhiqi Li*, Wenhai Wang*, Hongyang Li*, Enze Xie, Chonghao Sima, Tong Lu, Yu Qiao, Jifeng Dai

Code will be released around June 2022.

Team

This project is supported by the Fundamental Vision team and the PerceptionX team of Shanghai AI Laboratory.

News

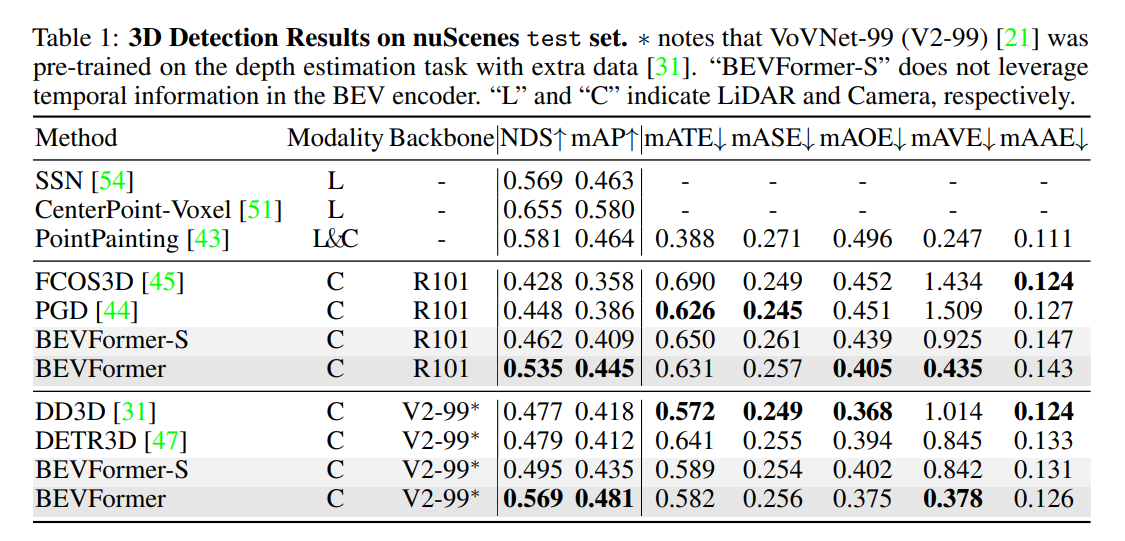

[2022/3/10]: BEVFormer achieves state-of-the-art results on the nuScenes detection task with 56.9% NDS (camera-only)!

Abstract

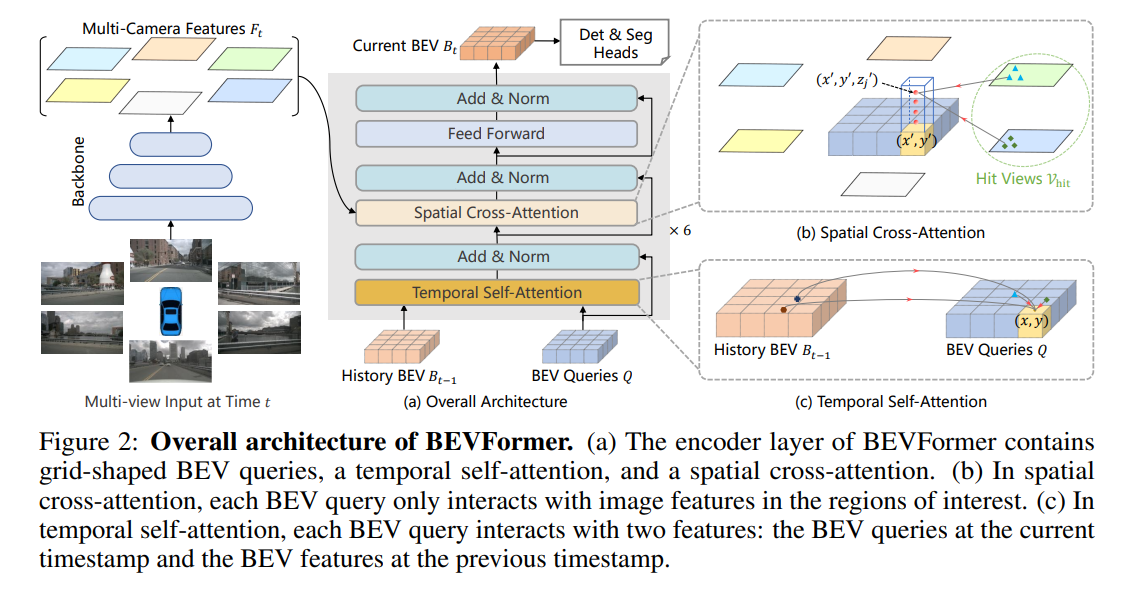

In this work, we present BEVFormer, a new framework that learns unified BEV representations with spatiotemporal transformers to support multiple autonomous driving perception tasks. In a nutshell, BEVFormer exploits both spatial and temporal information by interacting with spatial and temporal space through predefined grid-shaped BEV queries. To aggregate spatial information, we design a spatial cross-attention in which each BEV query extracts spatial features from its regions of interest across camera views. For temporal information, we propose a temporal self-attention that recurrently fuses historical BEV information. Our approach achieves a new state-of-the-art NDS of 56.9% on the nuScenes test set, which is 9.0 points higher than the previous best methods and on par with LiDAR-based baselines.
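
The data flow described in the abstract can be sketched in a few lines of dependency-free Python. This is a toy illustration only, not the released implementation: it swaps in plain scaled dot-product attention for the attention operators used in the paper, and the names `attend`, `bev_step`, `camera_feats`, and `visible_cams` are hypothetical. It assumes each camera feature has already been sampled at the image location a BEV cell projects to.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys_values):
    """Scaled dot-product attention of one query over vectors used as keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, kv)) / scale for kv in keys_values]
    weights = softmax(scores)
    return [sum(w * kv[d] for w, kv in zip(weights, keys_values))
            for d in range(len(query))]

def bev_step(queries, prev_bev, camera_feats, visible_cams):
    """One toy update of a flattened H*W grid of BEV queries (hypothetical helper)."""
    new_bev = []
    for i, q in enumerate(queries):
        # Temporal self-attention: fuse the query with the previous BEV
        # feature at the same grid cell (no history on the first frame).
        history = [q] if prev_bev is None else [q, prev_bev[i]]
        q = attend(q, history)
        # Spatial cross-attention: attend only to the camera views that
        # this BEV cell projects into (its regions of interest).
        views = [camera_feats[cam][i] for cam in visible_cams[i]]
        if views:
            q = attend(q, views)
        new_bev.append(q)
    return new_bev

random.seed(0)
H, W, C, N_CAMS = 2, 3, 4, 6  # toy grid size, channels, camera count

def rand_vec():
    return [random.gauss(0.0, 1.0) for _ in range(C)]

queries = [rand_vec() for _ in range(H * W)]
# Assumption: every cell is seen by two neighbouring cameras.
visible_cams = [[i % N_CAMS, (i + 1) % N_CAMS] for i in range(H * W)]

bev = None
for _ in range(3):  # three consecutive frames
    camera_feats = [[rand_vec() for _ in range(H * W)] for _ in range(N_CAMS)]
    bev = bev_step(queries, bev, camera_feats, visible_cams)

print(len(bev), len(bev[0]))  # prints: 6 4
```

The BEV output of one frame is passed back in as `prev_bev` for the next, which is the recurrent fusion of history the abstract refers to.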

Results
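
The NDS figure quoted above is the nuScenes detection score, which combines mAP with five mean true-positive error metrics (mATE, mASE, mAOE, mAVE, mAAE): NDS = (1/10) [5 mAP + sum over the five errors of (1 - min(1, error))]. A minimal sketch of that arithmetic follows; the function name `nds` is hypothetical and the input values are made up for illustration, not results from the paper.

```python
def nds(m_ap, tp_errors):
    """nuScenes detection score from mAP and the mean true-positive errors."""
    # Each error is clipped at 1 and turned into a score in [0, 1].
    tp_scores = [1.0 - min(1.0, err) for err in tp_errors.values()]
    return (5.0 * m_ap + sum(tp_scores)) / 10.0

# Hypothetical values for illustration only; not results from the paper.
example = {"mATE": 0.6, "mASE": 0.3, "mAOE": 0.4, "mAVE": 0.4, "mAAE": 0.2}
score = nds(0.5, example)
print(f"NDS = {100 * score:.1f}%")  # prints: NDS = 56.0%
```

Because mAP carries half of the total weight, a gain of one mAP point moves NDS by half a point, while each true-positive error contributes a tenth.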

Methods

BibTeX

If you find our work helpful for your research, please consider citing the following BibTeX entry.

@misc{li2022bevformer,
  title={BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers},
  author={Zhiqi Li and Wenhai Wang and Hongyang Li and Enze Xie and Chonghao Sima and Tong Lu and Yu Qiao and Jifeng Dai},
  year={2022},
  eprint={2203.17270},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}