Authors: Yujia Sun, Geng Chen, Tao Zhou, Yi Zhang, and Nian Liu.

- This repository provides code for "Context-aware Cross-level Fusion Network for Camouflaged Object Detection" IJCAI-2021.

Camouflaged object detection (COD) is a challenging task due to the low boundary contrast between the object and its surroundings. In addition, the appearance of camouflaged objects varies significantly, e.g., in object size and shape, which further complicates accurate COD. In this paper, we propose a novel Context-aware Cross-level Fusion Network (C2FNet) to address the challenging COD task. Specifically, we propose an Attention-induced Cross-level Fusion Module (ACFM) to integrate the multi-level features with informative attention coefficients. The fused features are then fed to the proposed Dual-branch Global Context Module (DGCM), which yields multi-scale feature representations for exploiting rich global context information. In C2FNet, the two modules are applied to high-level features in a cascaded manner. Extensive experiments on three widely used benchmark datasets demonstrate that our C2FNet is an effective COD model and outperforms state-of-the-art models remarkably.

Figure 1: The overall architecture of the proposed model, which consists of two key components, i.e., attention-induced cross-level fusion module and dual-branch global context module. See § 3 in the paper for details.

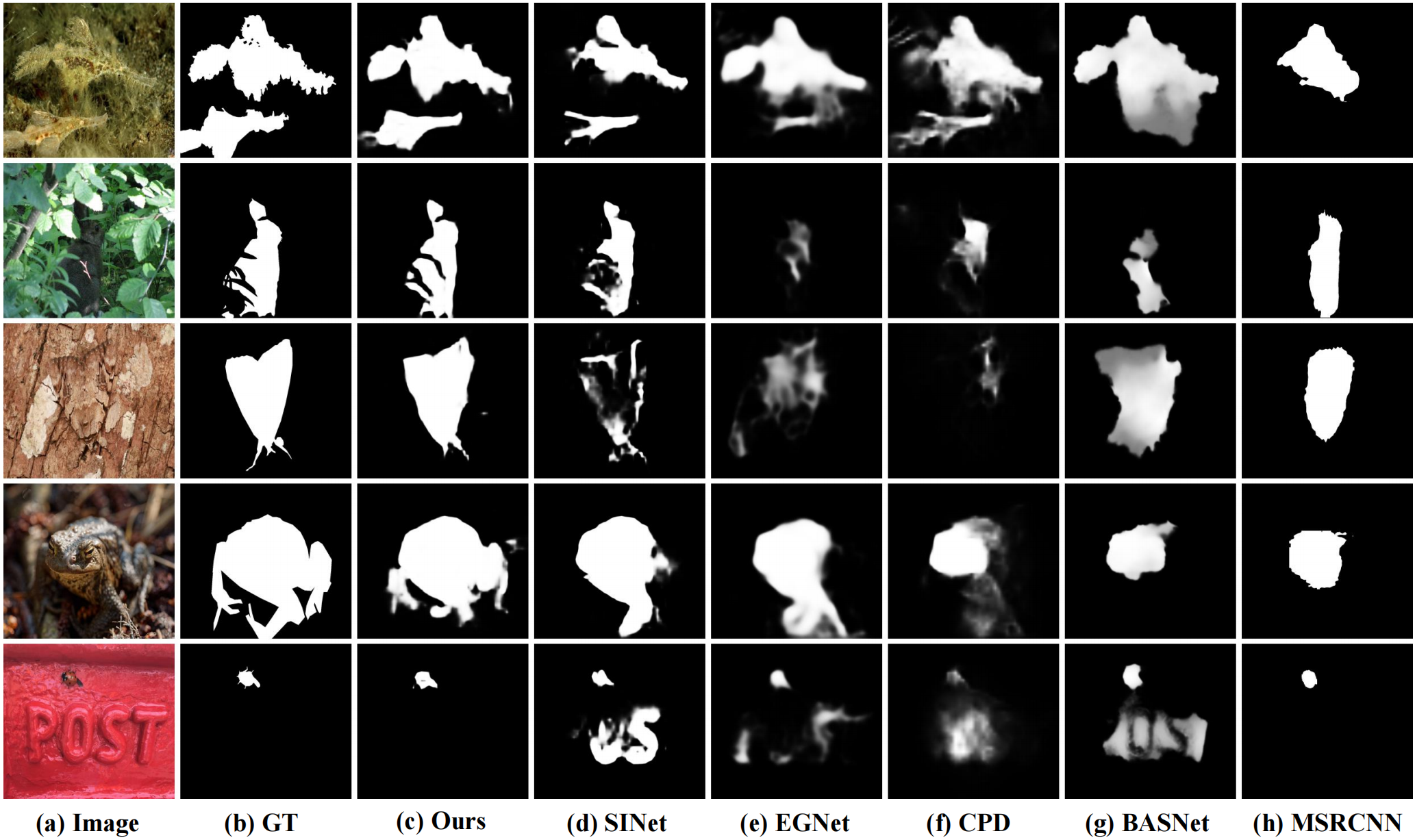

Figure 2: Qualitative Results.

The training and testing experiments are conducted using PyTorch on a single NVIDIA Tesla P40 GPU with 24 GB of memory.

- Configuring your environment (Prerequisites):

    - Creating a virtual environment in terminal: `conda create -n C2FNet python=3.6`.

    - Installing necessary packages: `pip install -r requirements.txt`.

- Downloading necessary data:

    - Download the testing dataset and move it into `./data/TestDataset/`; it can be found in this download link (Google Drive).

    - Download the training dataset and move it into `./data/TrainDataset/`; it can be found in this download link (Google Drive).

    - Download the pretrained weights and move them into `./checkpoints/C2FNet40/C2FNet-39.pth`; they can be found in this download link (Google Drive).

    - Download the Res2Net weights and move them into `./models/res2net50_v1b_26w_4s-3cf99910.pth`; they can be found in this download link (Google Drive).
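Since the scripts expect the downloaded files in fixed locations, it can be useful to check the layout before training or testing. The following is a minimal sketch, not part of the repository: the helper name `find_missing` and the constant `EXPECTED_PATHS` are hypothetical, and the script assumes it is run from the repository root.

```python
from pathlib import Path

# Locations named in this README, relative to the repository root.
EXPECTED_PATHS = [
    "data/TestDataset",
    "data/TrainDataset",
    "checkpoints/C2FNet40/C2FNet-39.pth",
    "models/res2net50_v1b_26w_4s-3cf99910.pth",
]


def find_missing(repo_root="."):
    """Return the expected paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in EXPECTED_PATHS if not (root / p).exists()]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing files or folders:")
        for p in missing:
            print("  " + p)
    else:
        print("All expected files and folders are in place.")
```

The check only tests for existence; it does not verify that the archives were extracted completely or that the weight files are intact.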

- Training Configuration:

    - Assign your customized paths, such as `--train_save` and `--train_path`, in `MyTrain.py`.

    - I have modified the total number of epochs and the learning rate decay method (`lib/utils.py` has been updated), so the setup differs from the training setup reported in the paper. Under the new settings, training is more stable.
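For readers unfamiliar with command-line path options, the sketch below shows how flags like these are typically declared with `argparse`. Only the flag names `--train_path` and `--train_save` come from this README; the default values, the help texts, and the `build_parser` helper are assumptions for illustration and may differ from what `MyTrain.py` actually contains.

```python
import argparse


def build_parser():
    """Hypothetical parser; only the two flag names come from the README."""
    parser = argparse.ArgumentParser(description="Path options (illustrative)")
    parser.add_argument(
        "--train_path",
        type=str,
        default="./data/TrainDataset",  # assumed default, not confirmed
        help="folder containing the training dataset",
    )
    parser.add_argument(
        "--train_save",
        type=str,
        default="C2FNet",  # assumed default, not confirmed
        help="name used for the folder where checkpoints are saved",
    )
    return parser


if __name__ == "__main__":
    # Parse an explicit argument list so the example does not depend on sys.argv.
    opt = build_parser().parse_args(["--train_path", "./data/TrainDataset/"])
    print("training data:", opt.train_path)
    print("checkpoint folder name:", opt.train_save)
```

With options declared this way, paths can be overridden on the command line instead of editing the script.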

- Testing Configuration:

    - After downloading the pre-trained model and the testing dataset, run `MyTest.py` to generate the final prediction maps; set `--pth_path` to your trained model directory.

One-key evaluation is written in MATLAB code (revised from link); please follow the instructions in `./eval/main.m` and run it to generate the evaluation results.

To speed up the evaluation on GPU, you can use the efficient tool (link), installed via `pip install pysodmetrics`.

Assign your customized paths, such as `method`, `mask_root`, and `pred_root`, in `eval.py`.

Then run `eval.py` to evaluate the trained model.

Pre-computed maps can be found at the download link.
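Before running an evaluation, it can help to confirm that every ground-truth mask has a matching prediction map. The sketch below is not taken from `eval.py`. It reuses the names `mask_root` and `pred_root` from above, while the function `pair_predictions` is hypothetical, and it assumes that predictions and masks share file names and are stored as `.png` files.

```python
from pathlib import Path


def pair_predictions(mask_root, pred_root, suffix=".png"):
    """Pair each ground-truth mask with the prediction of the same file name.

    Returns (pairs, missing): pairs is a list of (mask_path, pred_path)
    tuples, and missing lists the mask file names that have no prediction.
    """
    pairs, missing = [], []
    for mask_path in sorted(Path(mask_root).glob("*" + suffix)):
        pred_path = Path(pred_root) / mask_path.name
        if pred_path.is_file():
            pairs.append((mask_path, pred_path))
        else:
            missing.append(mask_path.name)
    return pairs, missing
```

If `missing` is non-empty, the prediction folder is incomplete, and any scores computed from it would cover only part of the dataset.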

Please cite our paper if you find the work useful:

    @inproceedings{sun2021c2fnet,
      title={Context-aware Cross-level Fusion Network for Camouflaged Object Detection},
      author={Sun, Yujia and Chen, Geng and Zhou, Tao and Zhang, Yi and Liu, Nian},
      booktitle={IJCAI},
      pages={1025--1031},
      year={2021}
    }