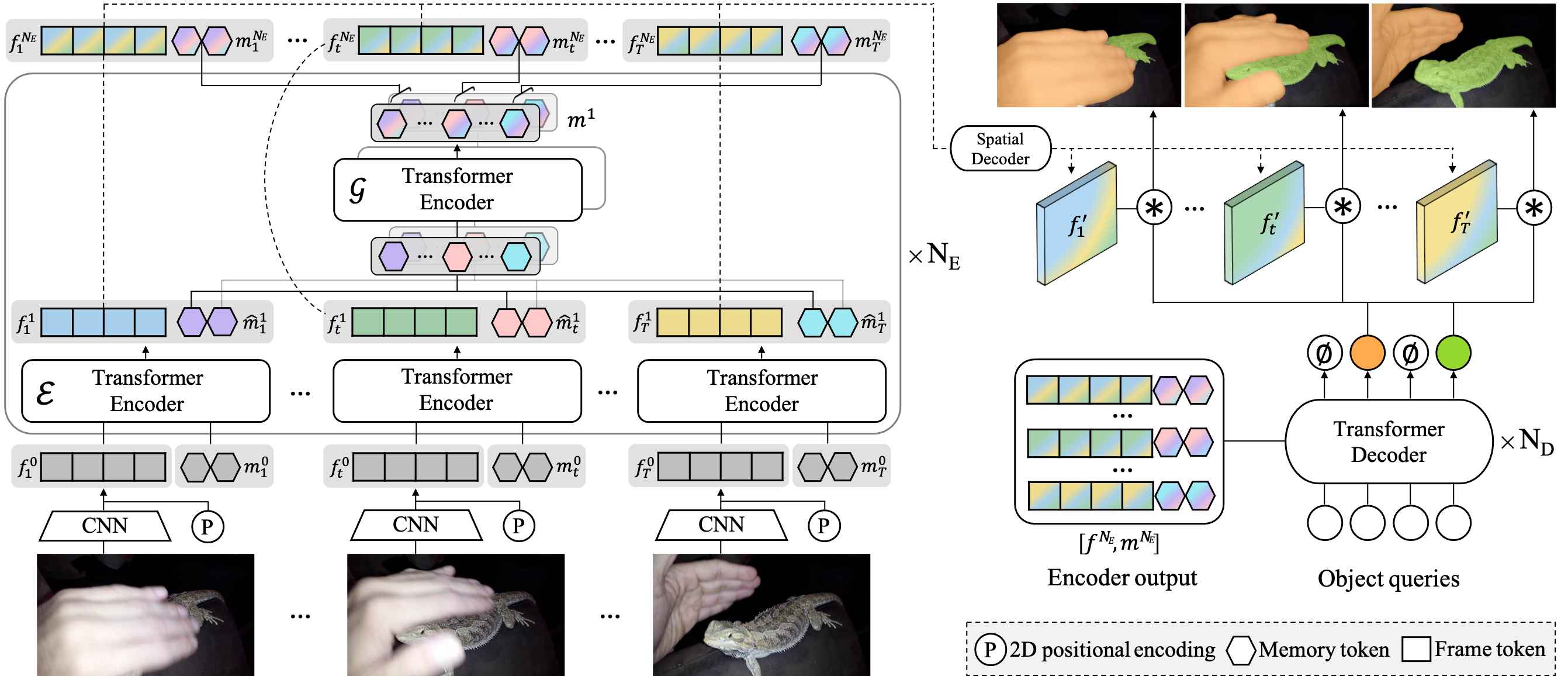

Video Instance Segmentation using Inter-Frame Communication Transformers

- Based on detectron2 and DETR (used commit: 76ec0a2).

- The code is under the projects/ folder, following the detectron2 convention.

- You can import our project into the latest detectron2 as follows:

  - insert the projects/IFC folder

  - update detectron2/projects/__init__.py

  - update setup.py

Installation
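For in-place installs, recent detectron2 versions resolve `detectron2.projects.<name>` imports through a small name-to-folder dictionary in detectron2/projects/__init__.py, and setup.py keeps a similar mapping for regular installs. Updating those files presumably means adding an IFC entry. The sketch below only mimics that lookup so you can see which path an entry would point to; the dictionary contents, the `"ifc"` key, and the `projects/IFC/ifc` package path are assumptions for illustration, not copied from either repository.

```python
from pathlib import PurePosixPath

# Illustrative name -> folder mapping in the style of detectron2/projects/__init__.py.
# The "ifc": "IFC" entry is a hypothetical example of the line you would add.
_PROJECTS = {
    "point_rend": "PointRend",
    "deeplab": "DeepLab",
    "panoptic_deeplab": "Panoptic-DeepLab",
    "ifc": "IFC",  # hypothetical entry for this project
}


def resolve_project_init(module_name, project_root="projects"):
    """Return the __init__.py path that `module_name` would be loaded from.

    Lookup rule (mimicked, not imported from detectron2):
    detectron2.projects.<name> -> <root>/<_PROJECTS[name]>/<name>/__init__.py
    Returns None for modules outside detectron2.projects or unknown names.
    """
    prefix = "detectron2.projects."
    if not module_name.startswith(prefix):
        return None
    project_name = module_name.split(".")[-1]
    project_dir = _PROJECTS.get(project_name)
    if project_dir is None:
        return None
    return PurePosixPath(project_root) / project_dir / project_name / "__init__.py"
```

If the lookup returns a path that does not exist in your checkout, the key or folder name in the mapping is the first thing to check.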

Install the YouTube-VIS API following the link.
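Once the API is installed, a quick import check can confirm that Python actually sees it. The helper below is a small sketch; it assumes the YouTube-VIS API is importable as `pycocotools.ytvos` (our assumption about the package layout), and the same call can be reused for `detectron2` after installing the repository.

```python
import importlib.util


def missing_modules(names):
    """Return the module names from `names` that cannot be found.

    Uses importlib.util.find_spec, which locates a module without running it
    (for dotted names, the parent packages are imported first).
    """
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # ImportError (incl. ModuleNotFoundError): a parent package is missing.
            # ValueError: the module is in sys.modules but has no __spec__.
            found = False
        if not found:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # "pycocotools.ytvos" is an assumed module name for the YouTube-VIS API.
    print(missing_modules(["pycocotools.ytvos", "detectron2"]))
```

An empty list means every listed module was found.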

Install the repository with the following commands. See the Detectron2 installation instructions for details.

```bash
git clone https://github.com/sukjunhwang/IFC.git
cd IFC
pip install -e .
```

Link datasets

COCO

```bash
mkdir -p datasets/coco
ln -s /path_to_coco_dataset/annotations datasets/coco/annotations
ln -s /path_to_coco_dataset/train2017 datasets/coco/train2017
ln -s /path_to_coco_dataset/val2017 datasets/coco/val2017
```

YTVIS 2019

```bash
mkdir -p datasets/ytvis_2019
ln -s /path_to_ytvis2019_dataset datasets/ytvis_2019
```

We expect the ytvis_2019 folder to look like this:

```
└── ytvis_2019
    ├── train
    │   ├── Annotations
    │   ├── JPEGImages
    │   └── meta.json
    ├── valid
    │   ├── Annotations
    │   ├── JPEGImages
    │   └── meta.json
    ├── test
    │   ├── Annotations
    │   ├── JPEGImages
    │   └── meta.json
    ├── train.json
    ├── valid.json
    └── test.json
```
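Before launching a run, it can save time to confirm that the linked folder matches this layout. The function below is a hypothetical helper (not part of the repository) that lists which of the expected entries are missing; `Path.exists` follows symlinks, so it also works on the `ln -s` setup described here.

```python
from pathlib import Path

# Expected entries, taken from the ytvis_2019 tree in this README.
SPLITS = ("train", "valid", "test")
SPLIT_ENTRIES = ("Annotations", "JPEGImages", "meta.json")


def missing_ytvis_entries(root="datasets/ytvis_2019"):
    """Return the expected paths (relative to `root`) that do not exist."""
    root = Path(root)
    expected = [f"{split}/{entry}" for split in SPLITS for entry in SPLIT_ENTRIES]
    expected += [f"{split}.json" for split in SPLITS]
    return [rel for rel in expected if not (root / rel).exists()]
```

For example, `missing_ytvis_entries()` run from the repository root returns an empty list when the layout is complete.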

Training with 8 GPUs (if you use AdamW and change the batch size, please refer to https://arxiv.org/abs/1711.00489)

- We suggest using 8 GPUs.

- Pretraining on COCO requires at least 16 GB of GPU memory, while finetuning on YTVIS requires less.
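The paper linked above argues that the SGD noise scale is roughly proportional to the learning rate divided by the batch size, which motivates the common linear scaling rule: multiply the learning rate by the batch-size ratio and shorten the iteration schedule by the same factor. The sketch below applies that rule to values like detectron2's standard `SOLVER.BASE_LR`, `SOLVER.IMS_PER_BATCH`, `SOLVER.MAX_ITER`, and `SOLVER.STEPS`. The function name and example numbers are hypothetical, and whether plain linear scaling is the right choice for AdamW in IFC is not confirmed here, so treat the output as a starting point for tuning.

```python
def scale_schedule(base_lr, base_batch, max_iter, steps, new_batch):
    """Linearly rescale a solver schedule for a new total batch size.

    The learning rate grows with the batch size, while the iteration counts
    shrink so that roughly the same number of epochs is covered.
    """
    if base_batch <= 0 or new_batch <= 0:
        raise ValueError("batch sizes must be positive")
    factor = new_batch / base_batch
    new_lr = base_lr * factor
    new_max_iter = int(round(max_iter / factor))
    new_steps = tuple(int(round(s / factor)) for s in steps)
    return new_lr, new_max_iter, new_steps
```

For example, halving a hypothetical batch of 16 to 8 halves the learning rate and doubles `max_iter` and each entry of `steps`.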

```bash
python projects/IFC/train_net.py --num-gpus 8 \
  --config-file projects/IFC/configs/base_ytvis.yaml \
  MODEL.WEIGHTS path/to/model.pth
```

Evaluating on YTVIS 2019

We support multi-GPU evaluation; $F_NUM denotes the window size.
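$F_NUM is passed as `INPUT.SAMPLING_FRAME_NUM` and sets how many frames are processed together. The sketch below only illustrates what a window of that size means for a video's frame indices; it assumes consecutive, non-overlapping clips by default (an optional `stride` allows overlap) and is not IFC's inference code, which may schedule or associate clips differently.

```python
def split_into_windows(num_frames, window, stride=None):
    """Group frame indices 0..num_frames-1 into clips of at most `window` frames.

    By default stride == window, so consecutive clips do not overlap.
    This is an illustrative assumption, not necessarily how IFC schedules clips.
    """
    if window <= 0:
        raise ValueError("window must be positive")
    stride = window if stride is None else stride
    if stride <= 0:
        raise ValueError("stride must be positive")
    windows = []
    start = 0
    while start < num_frames:
        # The last clip may be shorter than `window`.
        windows.append(list(range(start, min(start + window, num_frames))))
        if start + window >= num_frames:
            break
        start += stride
    return windows
```

For example, a 12-frame video with a window of 5 yields clips of 5, 5, and 2 frames; larger windows mean fewer clips per video.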

```bash
python projects/IFC/train_net.py --num-gpus 8 --eval-only \
  --config-file projects/IFC/configs/base_ytvis.yaml \
  MODEL.WEIGHTS path/to/model.pth \
  INPUT.SAMPLING_FRAME_NUM $F_NUM
```

Because the YTVIS dataset is small, scores may fluctuate even when retraining with the same configuration.

Note: we suggest referring to the average scores reported in the NeurIPS camera-ready version of the paper.

| backbone | stride | FPS | AP | AP50 | AP75 | AR1 | AR10 | download |
|---|---|---|---|---|---|---|---|---|
| ResNet-50 | T=5<br>T=36 | 46.5<br>107.1 | 41.6<br>42.8 | 63.2<br>65.8 | 45.6<br>46.8 | 43.6<br>43.8 | 53.0<br>51.2 | model \| results |
| ResNet-101 | T=36 | 89.4 | 44.6 | 69.2 | 49.5 | 44.0 | 52.1 | model \| results |

IFC is released under the Apache 2.0 license.

If our work is useful in your project, please consider citing us.

```bibtex
@article{hwang2021video,
  title={Video instance segmentation using inter-frame communication transformers},
  author={Hwang, Sukjun and Heo, Miran and Oh, Seoung Wug and Kim, Seon Joo},
  journal={Advances in Neural Information Processing Systems},
  volume={34},
  pages={13352--13363},
  year={2021}
}
```

We highly appreciate all previous works that influenced our project.

Special thanks to facebookresearch for publicly releasing their excellent code (detectron2, DETR).