This is the coursework project page for "Computer Vision - CSCI-GA.2271-001" Fall 2020 (https://cs.nyu.edu/~fergus/teaching/vision/index.html) at NYU Courant.

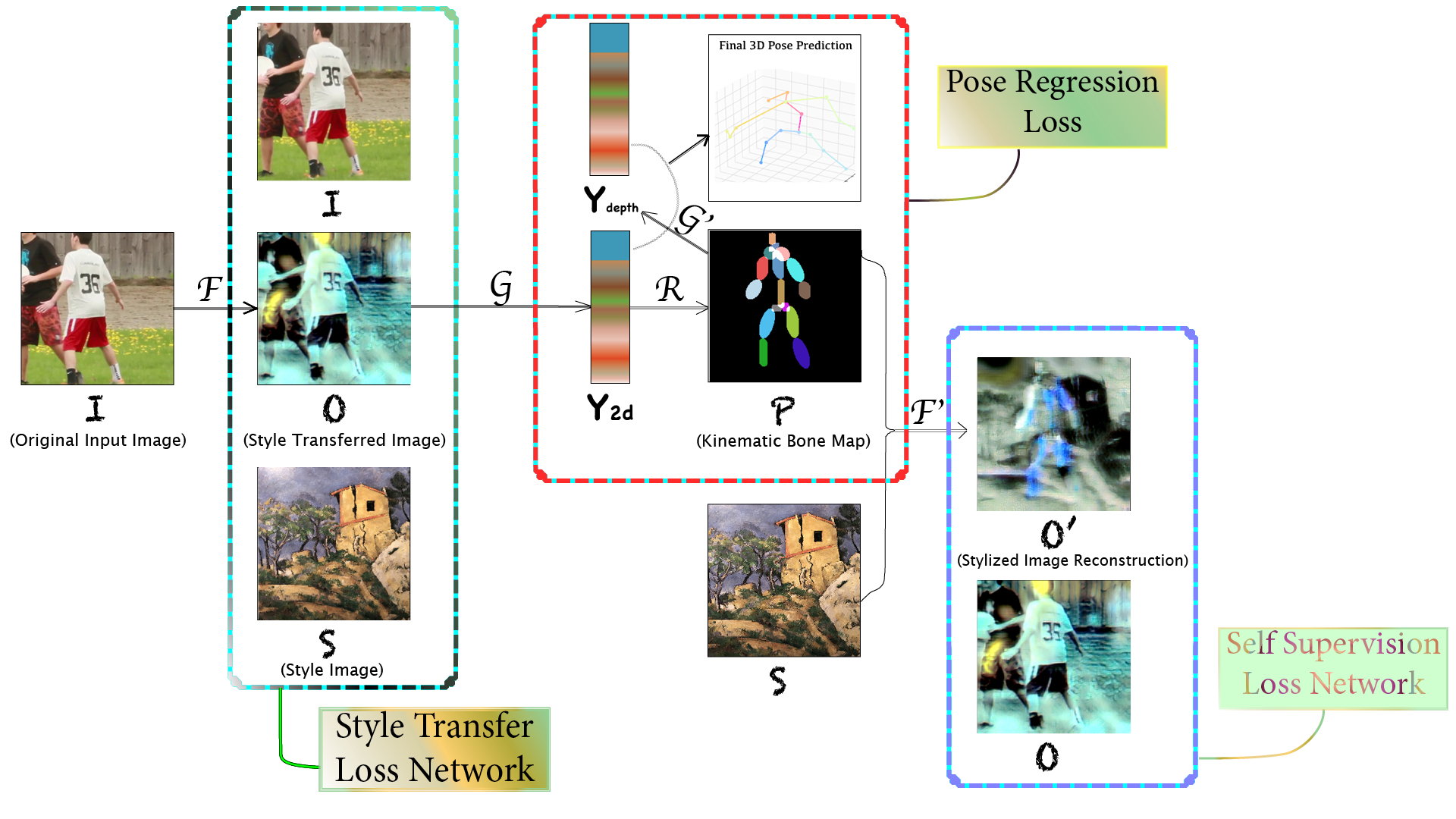

Implementation of "NAPA: Neural Art Human Pose Amplifier".

NAPA: Neural Art Human Pose Amplifier,

Qingfu Wan, Oliver Lu

arXiv technical report (course project report) (arXiv)

[Paper] [2-min Video] [10-sec Preview] [4-page Slide] [Datasets]

Trailers:

[Training] [Testing] [Artwork Annotator] [Pseudo 3D Annotator (OpenGL)] [Pseudo 3D Annotator (GUI)]

[Remark] Some incomplete, preliminary theoretical foundations (motivations / analysis) can be found in the Appendix of the pdf report, where we try to dissect the problem through the lens of topological spaces. Note that this only provides some insight into the choices made and the components involved. For a thorough understanding, please refer to (1) the code and (2) the experiments in the main body of the report.

Upon first look:

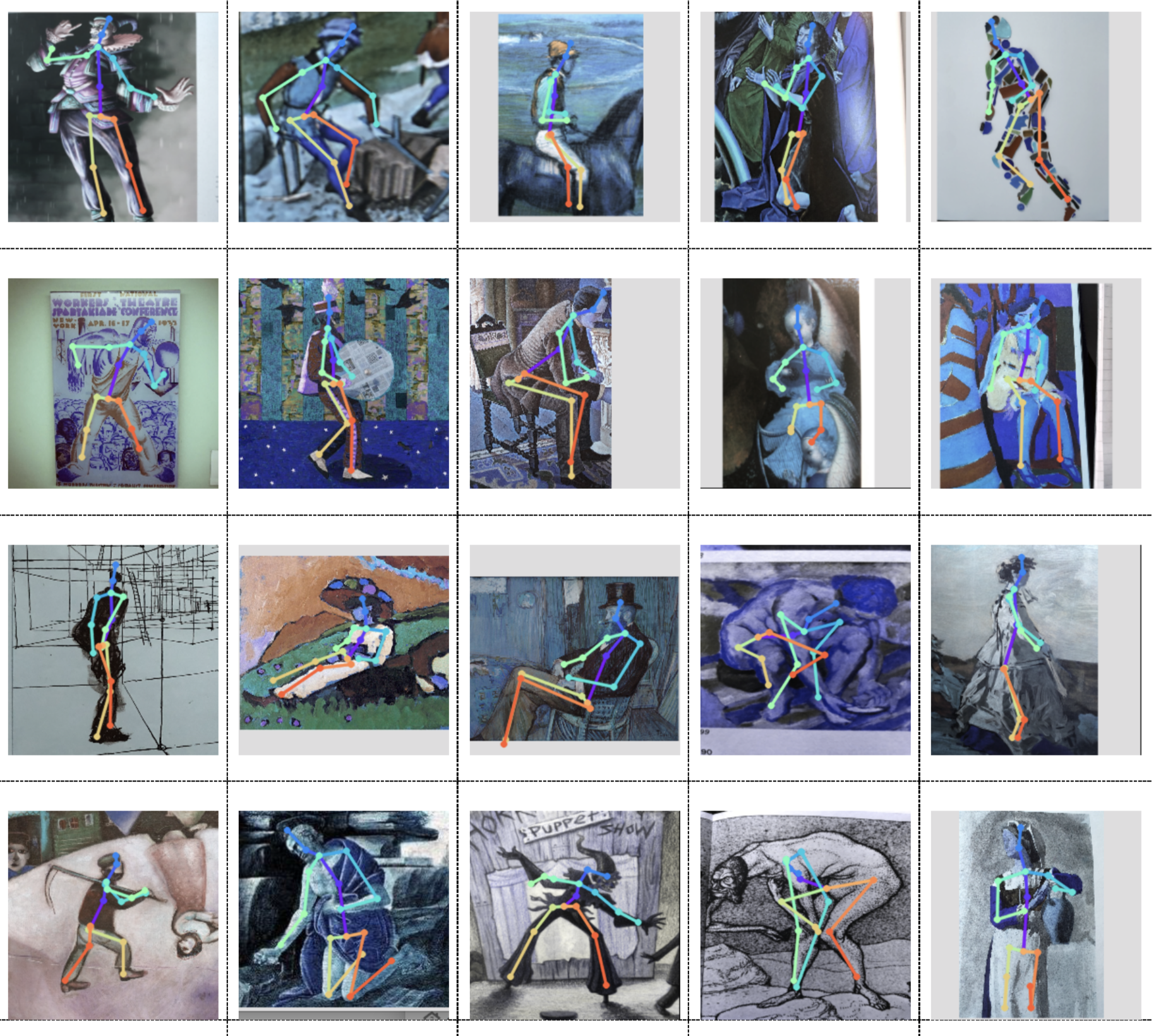

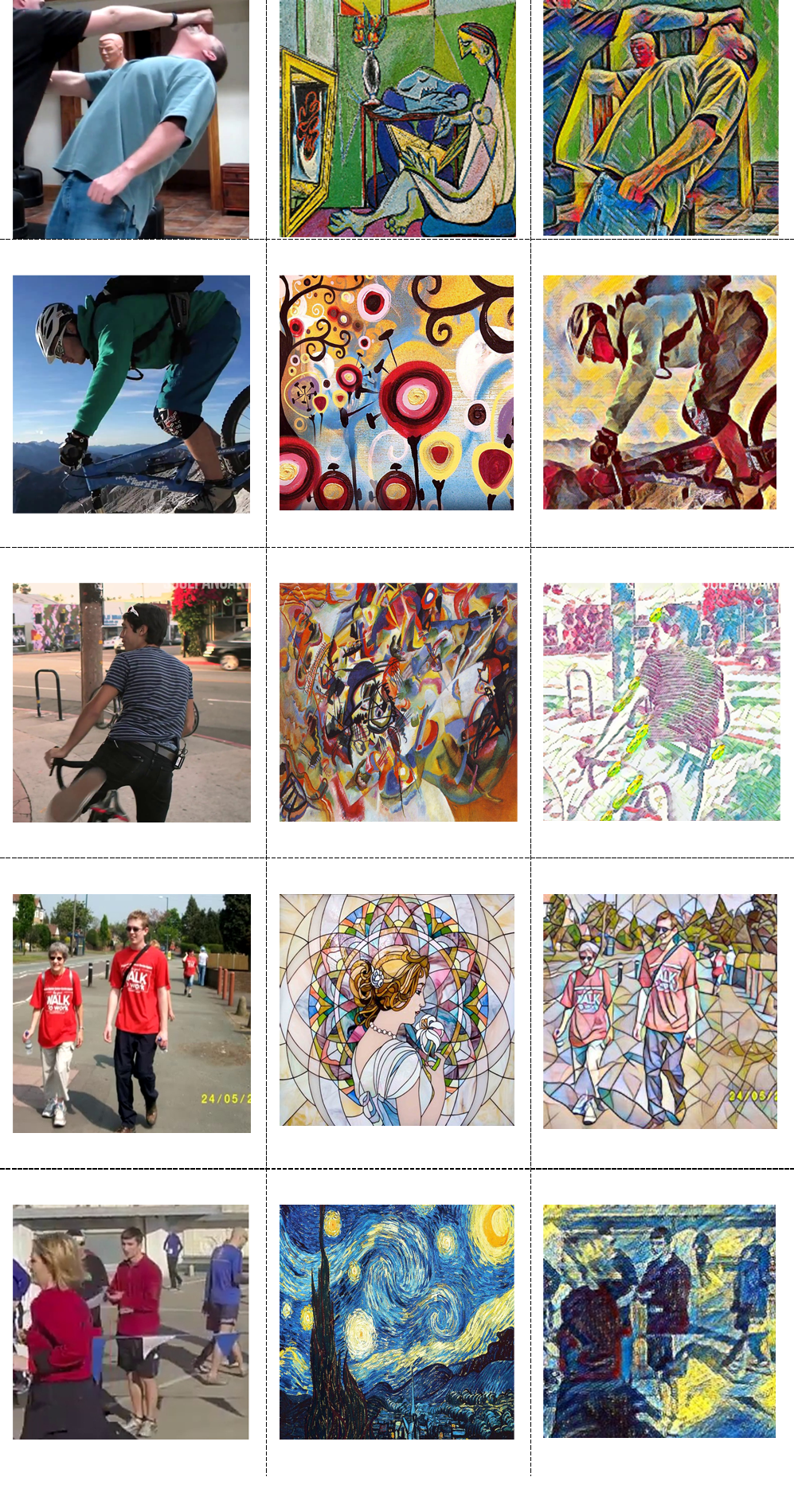

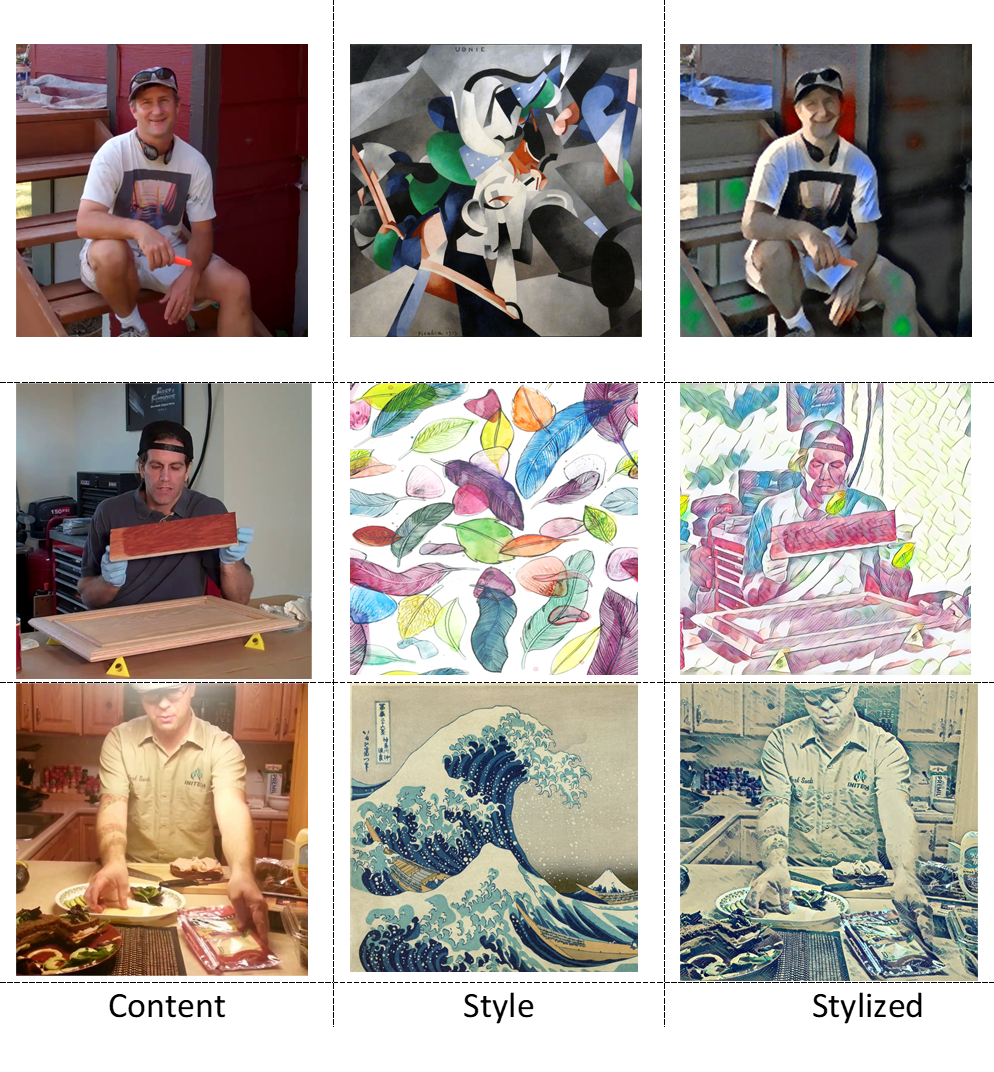

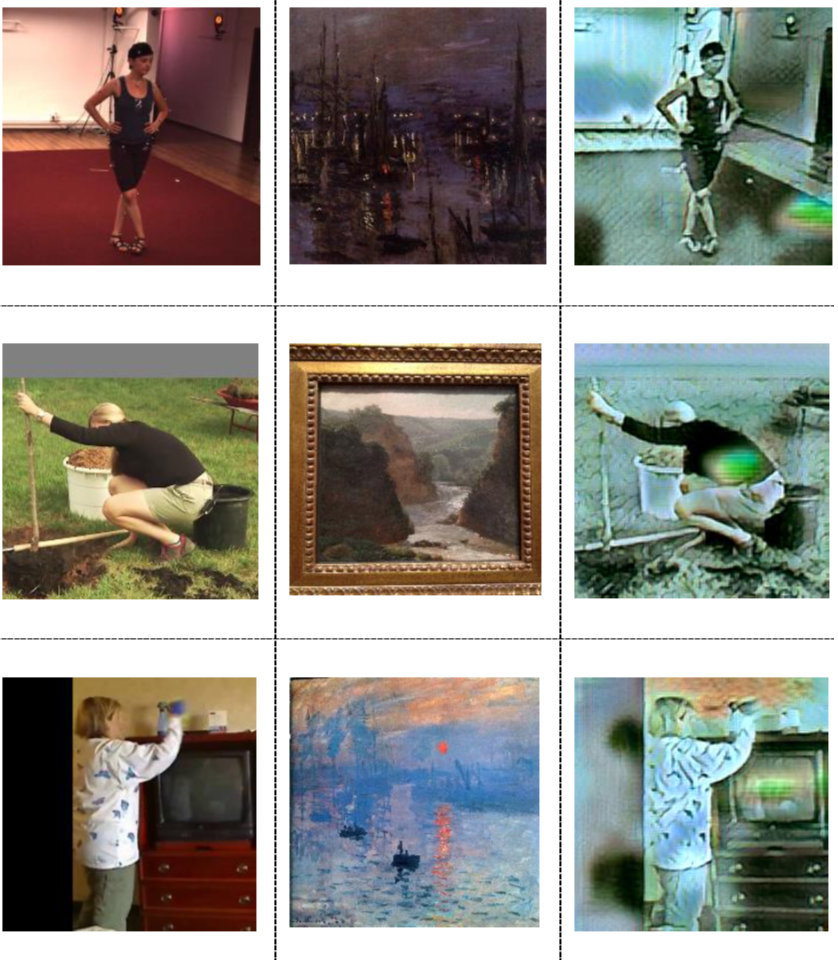

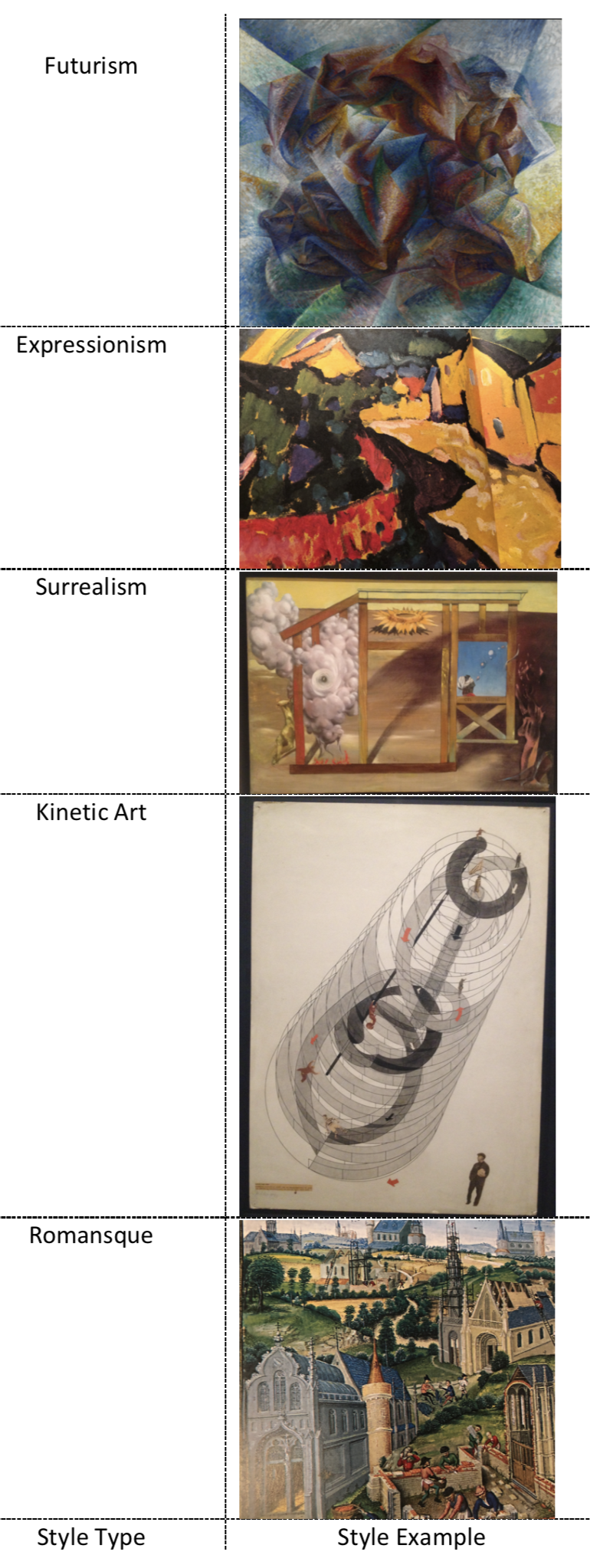

We show style-transferred images on MPII below. For more outputs of the style-specific models (recall that each style is trained separately), go to this link.

Put data under testset/, download weights from pretrained_weights, and follow here.

It's a simple process of loading the weights and saving:

1. Overlaid 2d on test image.

2. Predicted 2d joint coordinates.

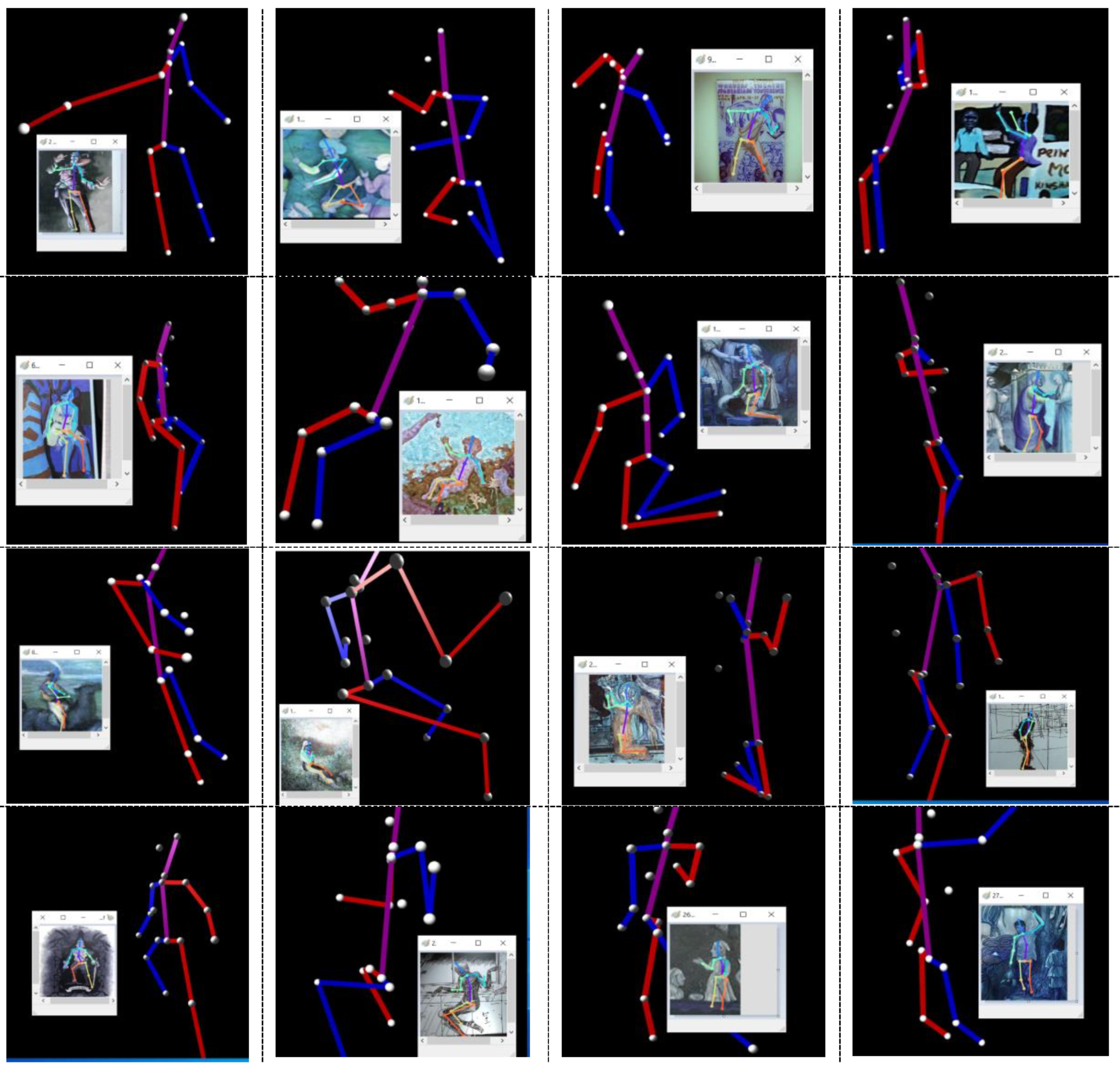

3. Predicted 3d joint coordinates and the Python figures.
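The coordinate-saving part of the outputs listed above could look roughly like the sketch below. It is only an illustration: the file names, the one-joint-per-line text format, and the helper names `save_joints` / `load_joints` are assumptions, not the format the test script in this repo actually writes.

```python
import os
import tempfile


def save_joints(path, joints):
    """Write one joint per line as space-separated floats (hypothetical format)."""
    with open(path, "w") as f:
        for joint in joints:
            f.write(" ".join(f"{v:.4f}" for v in joint) + "\n")


def load_joints(path):
    """Read the joints back as a list of float tuples."""
    with open(path) as f:
        return [tuple(float(v) for v in line.split()) for line in f if line.strip()]


# Made-up predictions for two joints, in 2D (pixels) and 3D.
pred_2d = [(120.5, 88.0), (131.25, 140.0)]
pred_3d = [(0.1, -0.25, 1.5), (0.2, 0.0, 1.75)]

out_dir = tempfile.mkdtemp()
path_2d = os.path.join(out_dir, "example_2d.txt")  # hypothetical file name
path_3d = os.path.join(out_dir, "example_3d.txt")  # hypothetical file name
save_joints(path_2d, pred_2d)
save_joints(path_3d, pred_3d)
```

Reading a file back with `load_joints` gives the same list of tuples, which is handy for checking a prediction before plotting it.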

GPU cards: GTX 1070, RTX 2080, Tesla K80, Google Colab GPUs.

Environment: Unix + Windows (Alienware 15 R3). Everything should work on Ubuntu.

Tools: Anaconda 3, Microsoft Visual Studio 2013 & 2019.

Deep learning framework: PyTorch 1.6.0.

Languages: Python, C++ and C#.

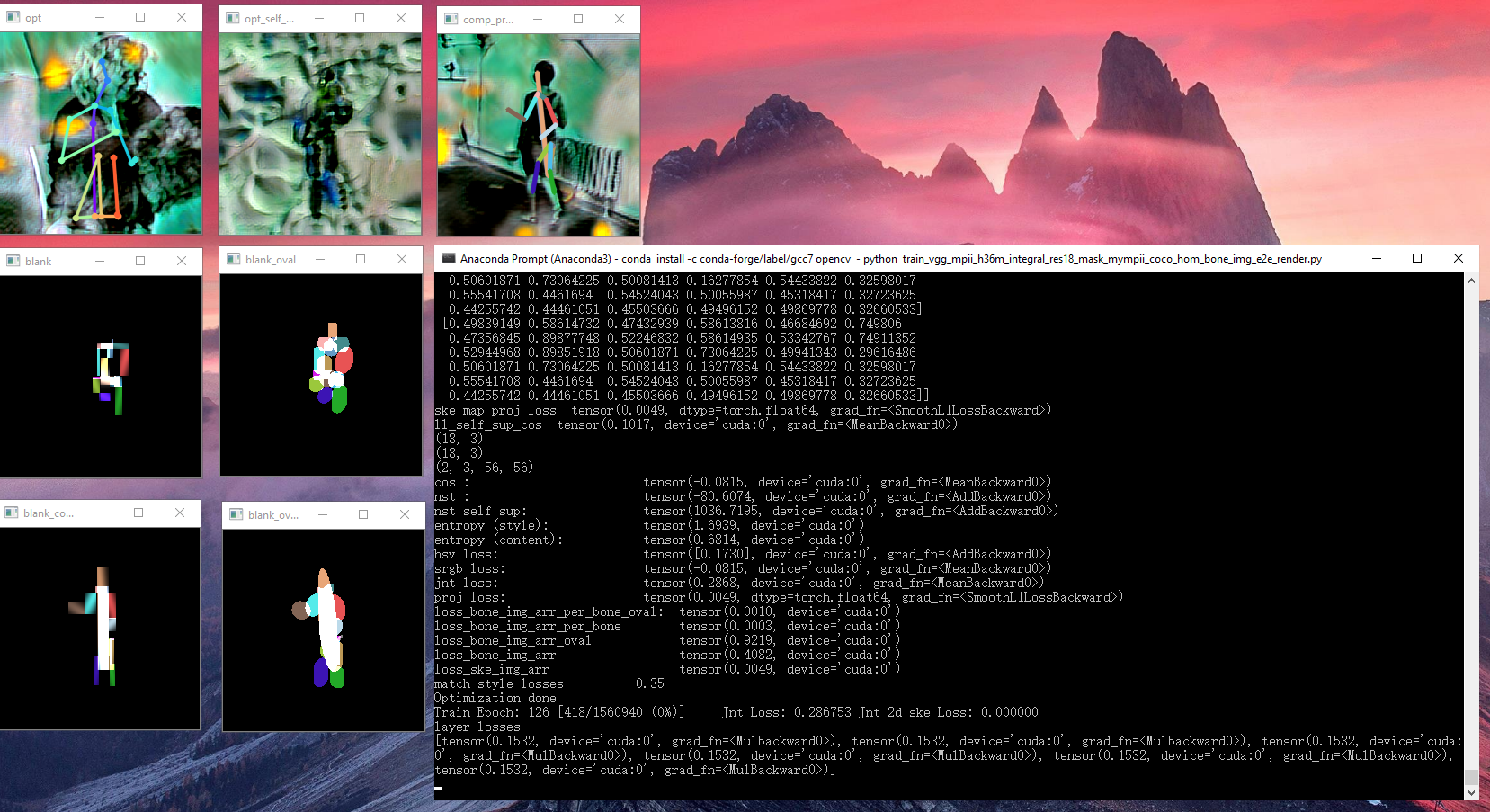

Follow the steps here and you will get something like this:

It's basically:

1. load pretrained model weights (optional if you want to train from scratch, but then do follow the stepwise training procedure and carefully tune the loss weights; gradually add more style targets if desired).

(medium rare)

2. download data and place into right directories.

(medium well)

3. start training. (set hyper-parameters in the first few lines)

(well done)

- Download MPII dataset into /mpii/images

- Train a style.

python style_transfer.py

- More options:

  - --content-weight: change weight of content loss
  - --style-weight: change weight of style loss
  - --style-path: path to folder where styles are saved.
  - --si: designate style image.
  - --cuda: set if running on GPU.

- Stylize a set of images with a trained style.

python stylize_image.py --w la_muse.jpg

- More options:

  - --i: select 1 image to stylize
  - --s: for s>0, sample s images from folder to stylize. Stylize entire folder if s==0
  - --output-folder: path to folder where output images will be saved
  - --cuda: set if running on GPU.
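To make the options of the two scripts concrete, here is a minimal `argparse` sketch of them, plus the sampling rule described for `--s`. The flag names come from the lists above and from the example command (`--w`); the types, the defaults, treating `--cuda` as a boolean switch, the reading of `--w` as the trained style to apply, and the helper `select_images` are all assumptions and may differ from the actual scripts.

```python
import argparse
import random


def build_train_parser():
    """Options listed for style_transfer.py (types and defaults are assumed)."""
    p = argparse.ArgumentParser(description="sketch of style_transfer.py options")
    p.add_argument("--content-weight", type=float, default=1.0)  # assumed default
    p.add_argument("--style-weight", type=float, default=1.0)    # assumed default
    p.add_argument("--style-path", default="styles")             # assumed default
    p.add_argument("--si", default=None, help="style image")
    p.add_argument("--cuda", action="store_true")                # assumed to be a switch
    return p


def build_stylize_parser():
    """Options listed for stylize_image.py (types and defaults are assumed)."""
    p = argparse.ArgumentParser(description="sketch of stylize_image.py options")
    p.add_argument("--w", default=None)   # from the example command; meaning assumed
    p.add_argument("--i", default=None, help="single image to stylize")
    p.add_argument("--s", type=int, default=0, help="number of images to sample")
    p.add_argument("--output-folder", default="output")          # assumed default
    p.add_argument("--cuda", action="store_true")                # assumed to be a switch
    return p


def select_images(filenames, s, seed=None):
    """s > 0: sample s images from the folder; s == 0: take the entire folder."""
    names = sorted(filenames)
    if s < 0:
        raise ValueError("s must be >= 0")
    if s == 0:
        return names
    rng = random.Random(seed)
    return rng.sample(names, min(s, len(names)))


train_args = build_train_parser().parse_args(["--si", "la_muse.jpg", "--style-weight", "5"])
stylize_args = build_stylize_parser().parse_args(["--w", "la_muse.jpg", "--s", "2"])
picked = select_images(["a.jpg", "b.jpg", "c.jpg"], stylize_args.s, seed=0)
```

Passing `seed` is only there to make the sampling reproducible in this sketch; whether the real script seeds its sampling is not stated.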

Data is on Google Drive.

The structure:

${DATA_ROOT}

|-- datasets

`-- |-- allstyles

| | | | ### All the artistic style images (277 in total)

`-- |-- per_style_training_styles

| | | | ### Style targets for the per-style training experiment

`-- |-- stylized_mpii ### Per-style training output (1 trained pose model for 1 style)

`-- |-- candy_jpg

`-- |-- composition_vii_jpg

`-- |-- feathers_jpg

`-- |-- la_muse_jpg

`-- |-- mosaic_jpg

`-- |-- starry_night_crop_jpg

`-- |-- the_scream_jpg

`-- |-- udnie_jpg

`-- |-- wave_crop_jpg

`-- |-- 160_png

`-- |-- aniene

`-- |-- memory

`-- |-- h36m

`-- |-- s_01_act_02_subact_01_ca_01

| | | | ### subject (s): 01, 05, 06, 07, 08, 09, 11

| | | | ### action (act): 01, 02, ..., 16

| | | | ### subaction (subact): 01, 02

| | | | ### camera (ca): 01, 02

| | | ### Please refer to "https://github.com/mks0601/Integral-Human-Pose-Regression-for-3D-Human-Pose-Estimation" for details

`-- |-- mpii

`-- |-- annotations

`-- |-- images

| | | ### For the above two folders please refer to "https://github.com/mks0601/Integral-Human-Pose-Regression-for-3D-Human-Pose-Estimation" for details

`-- |-- img

| | | | ### Cropped images for pseudo 3D ground truth annotation

`-- |-- gt_joint_3d_train_all.txt

| | | | ### Pseudo 3D ground truth of MPII

`-- |-- testset

| | | ### Our hand-crafted test set of 282 images.

`-- |-- pertrained_weights

| | | ### Pretrained PyTorch model weights.
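The h36m folder names in the tree above encode subject, action, subaction and camera. A small sketch of how such a name could be parsed and checked against the values listed in the tree follows; the helper names `parse_h36m_folder` and `is_listed` are hypothetical and not part of this repo.

```python
import re

# Pattern for names such as "s_01_act_02_subact_01_ca_01".
H36M_NAME = re.compile(r"^s_(\d{2})_act_(\d{2})_subact_(\d{2})_ca_(\d{2})$")

# Values listed in the directory tree.
SUBJECTS = {1, 5, 6, 7, 8, 9, 11}
ACTIONS = set(range(1, 17))
SUBACTIONS = {1, 2}
CAMERAS = {1, 2}


def parse_h36m_folder(name):
    """Split a folder name into its four integer fields."""
    m = H36M_NAME.match(name)
    if m is None:
        raise ValueError(f"unexpected folder name: {name!r}")
    subject, action, subaction, camera = (int(g) for g in m.groups())
    return {"subject": subject, "action": action,
            "subaction": subaction, "camera": camera}


def is_listed(info):
    """True if every field is one of the values listed in the tree."""
    return (info["subject"] in SUBJECTS and info["action"] in ACTIONS
            and info["subaction"] in SUBACTIONS and info["camera"] in CAMERAS)


info = parse_h36m_folder("s_01_act_02_subact_01_ca_01")
```

For the example name, `info` holds subject 1, action 2, subaction 1 and camera 1, and `is_listed(info)` is true.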

We also provide a fancier C++ OpenGL visualization tool under VisTool for viewing the 3D poses. You can freely rotate (R) and translate (T) the coordinate system.

The design logic is similar to the MPII annotator here.
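The rotate and translate controls of the visualization tool amount to applying a rigid transform to the 3D joints. The sketch below shows the idea in plain Python; it is not the VisTool C++ code, and the choice of the y axis and of rotating before translating are assumptions made for illustration.

```python
import math


def rotate_y(points, angle_deg):
    """Rotate 3D points about the y axis by angle_deg degrees."""
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def translate(points, t):
    """Shift 3D points by the offset t = (tx, ty, tz)."""
    tx, ty, tz = t
    return [(x + tx, y + ty, z + tz) for x, y, z in points]


# Two made-up joints: rotate by 90 degrees about y, then move 5 units along z.
joints = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
moved = translate(rotate_y(joints, 90.0), (0.0, 0.0, 5.0))
```

A point on the rotation axis, like the second joint here, is unaffected by the rotation and only shifted by the translation.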

@misc{wan2020napa,

title={NAPA: Neural Art Human Pose Amplifier},

author={Qingfu Wan and Oliver Lu},

year={2020},

eprint={2012.08501},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

- MATH-GA.2310-001 Topology I at Courant Institute of Mathematical Sciences for inspiration.
- NYC for inspiration.
- eBay for selling electronics.
- Bobst library for delivery.
- Museums for recreation.
- Adobe Premiere.
- Jianqi Ma for fruitful discussions and valuable feedback.