🔥 The paperlist & Data Resources website is also available on [ArXiv].

🌟 Any contributions via PRs, issues, emails or other methods are greatly appreciated.

🔮 An interactive paper list and benchmark website is also available at multilingual-llm.net

In recent years, large language models (LLMs) have made remarkable progress and achieved excellent performance on various natural language processing tasks. In addition, LLMs exhibit surprising emergent capabilities, including in-context learning, chain-of-thought reasoning, and even planning. Nevertheless, the majority of LLMs are English-centric: they mainly focus on English tasks and remain weaker in multilingual settings, especially in low-resource scenarios.

There are over 7,000 languages in the world. With the acceleration of globalization, large language models should serve diverse countries and languages. To this end, multilingual large language models (MLLMs) have an advantage in handling multiple languages and are gaining increasing attention.

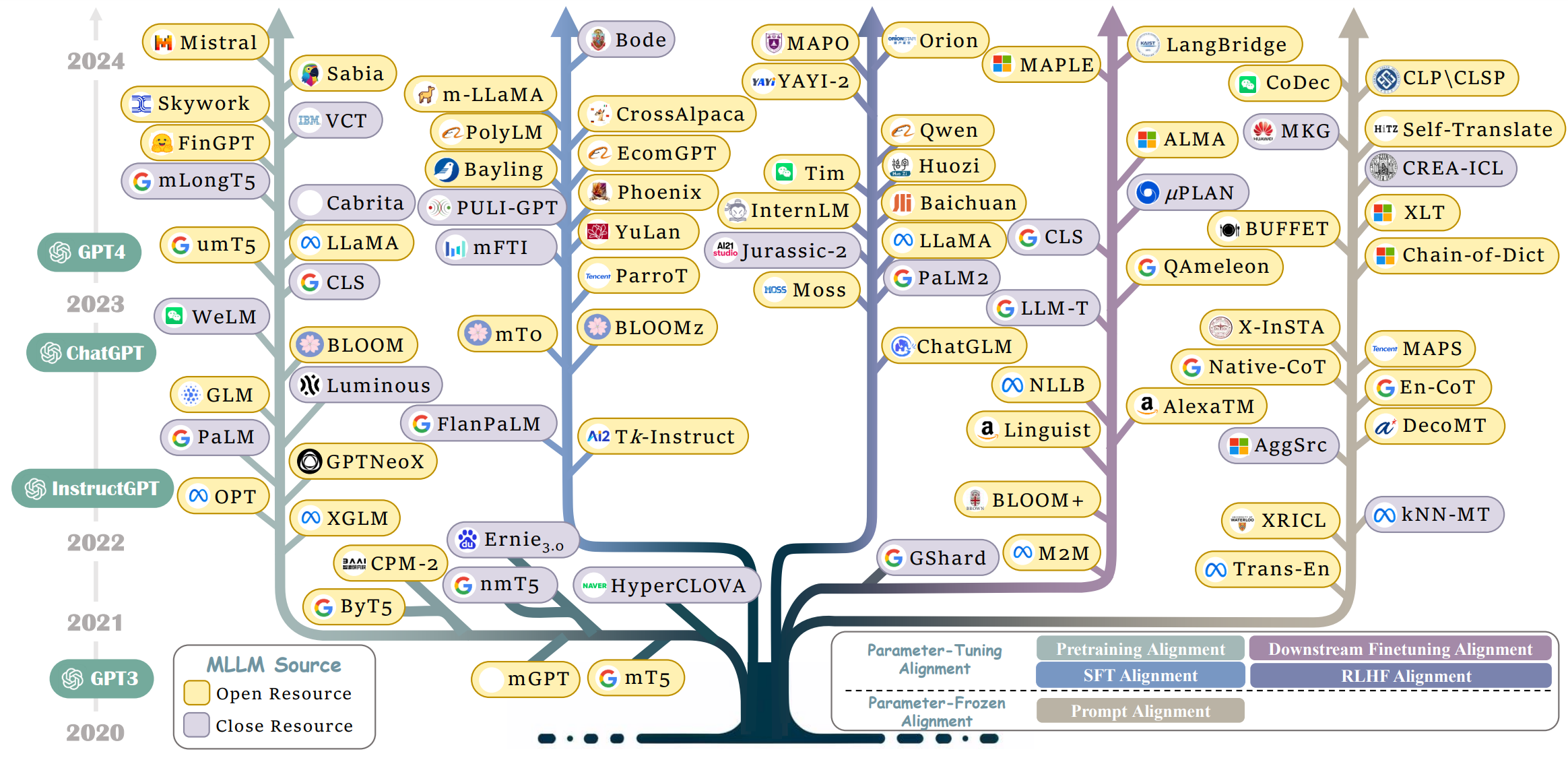

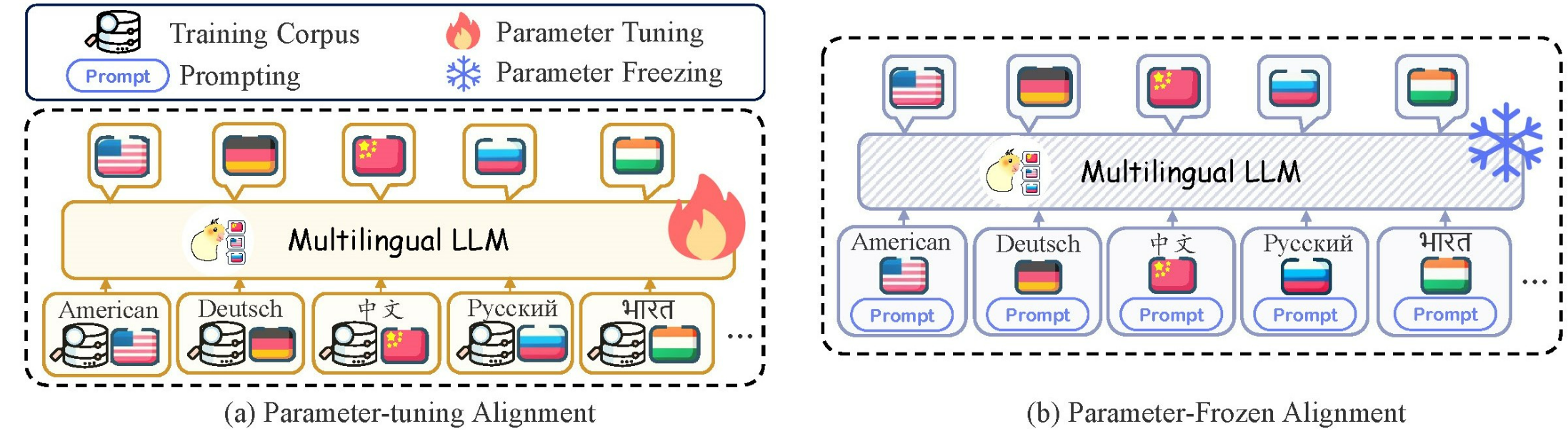

Existing MLLMs can be divided into three groups based on the stage they target. The first line of work leverages large amounts of multilingual data in the pre-training stage to boost overall multilingual performance. The second line of work incorporates multilingual data during the supervised fine-tuning (SFT) stage. The third line of work adopts advanced prompting strategies to unlock the deeper multilingual potential of MLLMs during parameter-frozen inference.

While MLLMs have shown promising performance, there is still no comprehensive review and analysis of recent efforts in the literature, which hinders the development of MLLMs. To bridge this gap, we make the first attempt to conduct a comprehensive and detailed analysis of MLLMs. Specifically, we first introduce the widely used data resources. Then, to provide a unified perspective for understanding MLLM efforts in the literature, we introduce a novel taxonomy focusing on alignment strategies, including Parameter-Tuning Alignment and Parameter-Frozen Alignment.

- [2024] Introducing Bode: A Fine-Tuned Large Language Model for Portuguese Prompt-Based Task. Garcia et al. Arxiv [paper]

- [2024] Question Translation Training for Better Multilingual Reasoning. Zhu et al. Arxiv [paper] [code]

- [2024] xCoT: Cross-lingual Instruction Tuning for Cross-lingual Chain-of-Thought Reasoning. Chai et al. Arxiv [paper]

- [2024] Towards Boosting Many-to-Many Multilingual Machine Translation with Large Language Models. Gao et al. Arxiv [paper] [code]

- [2023] ParroT: Translating during chat using large language models tuned with human translation and feedback. Jiao et al. Arxiv [paper] [code]

- [2023] Phoenix: Democratizing chatgpt across languages. Chen et al. Arxiv [paper] [code]

- [2023] Improving Translation Faithfulness of Large Language Models via Augmenting Instructions. Chen et al. Arxiv [paper] [code]

- [2023] Empowering Multi-step Reasoning across Languages via Tree-of-Thoughts. Ranaldi et al. Arxiv [paper]

- [2023] EcomGPT: Instruction-tuning Large Language Model with Chain-of-Task Tasks for E-commerce. Li et al. Arxiv [paper] [code]

- [2023] Improving Translation Faithfulness of Large Language Models via Augmenting Instructions. Chen et al. Arxiv [paper] [code]

- [2023] Camoscio: An italian instruction-tuned llama. Santilli et al. Arxiv [paper]

- [2023] Conversations in Galician: a Large Language Model for an Underrepresented Language. Bao et al. Arxiv [paper] [code]

- [2023] Building a Llama2-finetuned LLM for Odia Language Utilizing Domain Knowledge Instruction Set. Kohli et al. Arxiv [paper]

- [2023] Making Instruction Finetuning Accessible to Non-English Languages: A Case Study on Swedish Models. Holmstrom et al. NoDaLiDa [paper] [code]

- [2023] Extrapolating Large Language Models to Non-English by Aligning Languages. Zhu et al. Arxiv [paper] [code]

- [2023] Instruct-Align: Teaching Novel Languages with to LLMs through Alignment-based Cross-Lingual Instruction. Cahyawijaya et al. Arxiv [paper] [code]

- [2023] Eliciting the Translation Ability of Large Language Models via Multilingual Finetuning with Translation Instructions. Li et al. Arxiv [paper]

- [2023] Palm 2 technical report. Anil et al. Arxiv [paper]

- [2023] TaCo: Enhancing Cross-Lingual Transfer for Low-Resource Languages in LLMs through Translation-Assisted Chain-of-Thought Processes. Upadhayay et al. Arxiv [paper] [code]

- [2023] Polylm: An open source polyglot large language model. Wei et al. Arxiv [paper] [code]

- [2023] Mono- and multilingual GPT-3 models for Hungarian. Yang et al. LNAI [paper]

- [2023] BayLing: Bridging Cross-lingual Alignment and Instruction Following through Interactive Translation for Large Language Models. Zhang et al. Arxiv [paper] [code]

- [2023] Efficient and effective text encoding for chinese llama and alpaca. Cui et al. Arxiv [paper]

- [2023] Monolingual or Multilingual Instruction Tuning: Which Makes a Better Alpaca. Chen et al. Arxiv [paper] [code]

- [2023] YuLan-Chat: An Open-Source Bilingual Chatbot. YuLan-Team. [code]

- [2022] Crosslingual generalization through multitask finetuning. Muennighoff et al. Arxiv [paper]

- [2022] Super-naturalinstructions: Generalization via declarative instructions on 1600+ nlp tasks. Wang et al. ACL [paper]

- [2022] Scaling instruction-finetuned language models. Chung et al. Arxiv [paper]

- [2024] Mixtral of experts. Jiang et al. Arxiv [paper] [code]

- [2024] TURNA: A Turkish Encoder-Decoder Language Model for Enhanced Understanding and Generation. Uludogan et al. Arxiv [paper] [code]

- [2024] Breaking the Curse of Multilinguality with Cross-lingual Expert Language Models. Blevins et al. Arxiv [paper]

- [2024] Chinese-Mixtral-8x7B: An Open-Source Mixture-of-Experts LLM. HIT-SCIR. [code]

- [2023] FinGPT: Large Generative Models for a Small Language. Luukkonen et al. Arxiv [paper] [code]

- [2023] Llama 2: Open foundation and fine-tuned chat models. Touvron et al. Arxiv [paper] [code]

- [2023] Mistral 7B. Jiang et al. Arxiv [paper] [code]

- [2023] Bridging the Resource Gap: Exploring the Efficacy of English and Multilingual LLMs for Swedish. Holmstrom et al. ACL [paper]

- [2023] JASMINE: Arabic GPT Models for Few-Shot Learning. Abdul-Mageed et al. Arxiv [paper]

- [2023] Cross-Lingual Supervision improves Large Language Models Pre-training. Schioppa et al. Arxiv [paper]

- [2023] Searching for Needles in a Haystack: On the Role of Incidental Bilingualism in PaLM's Translation Capability. Briakou et al. Arxiv [paper]

- [2023] Cross-Lingual Transfer of Large Language Model by Visually-Derived Supervision Toward Low-Resource Languages. Muraoka et al. ACM MM [paper]

- [2023] Skywork: A more open bilingual foundation model. Wei et al. Arxiv [paper] [code]

- [2023] Multi-Lingual Sentence Alignment with GPT Models. Liang et al. AiDAS [paper]

- [2023] Llama: Open and efficient foundation language models. Touvron et al. Arxiv [paper] [code]

- [2023] LLaMAntino: LLaMA 2 Models for Effective Text Generation in Italian Language. Basile et al. Arxiv [paper]

- [2023] mLongT5: A Multilingual and Efficient Text-To-Text Transformer for Longer Sequences. Uthus et al. Arxiv [paper] [code]

- [2023] Cabrita: closing the gap for foreign languages. Larcher et al. Arxiv [paper]

- [2023] Align after Pre-train: Improving Multilingual Generative Models with Cross-lingual Alignment. Li et al. Arxiv [paper]

- [2023] Sabia: Portuguese Large Language Models. Pires et al. Arxiv [paper] [code]

- [2023] Efficient and effective text encoding for chinese llama and alpaca. Cui et al. Arxiv [paper] [code]

- [2022] Language contamination helps explain the cross-lingual capabilities of English pretrained models. Blevins et al. Arxiv [paper]

- [2022] Byt5: Towards a token-free future with pre-trained byte-to-byte models. Xue et al. TACL [paper] [code]

- [2022] Overcoming catastrophic forgetting in zero-shot cross-lingual generation. Vu et al. EMNLP [paper]

- [2022] UniMax: Fairer and More Effective Language Sampling for Large-Scale Multilingual Pretraining. Chung et al. Arxiv [paper]

- [2022] mgpt: Few-shot learners go multilingual. Shliazhko et al. Arxiv [paper] [code]

- [2022] Few-shot Learning with Multilingual Generative Language Models. Lin et al. EMNLP [paper] [code]

- [2022] Glm-130b: An open bilingual pre-trained model. Zeng et al. Arxiv [paper] [code]

- [2022] Palm: Scaling language modeling with pathways. Chowdhery et al. Arxiv [paper]

- [2022] GPT-NeoX-20B: An Open-Source Autoregressive Language Model. Black et al. ACL [paper] [code]

- [2022] Opt: Open pre-trained transformer language models. Zhang et al. Arxiv [paper]

- [2022] Bloom: A 176b-parameter open-access multilingual language model. Workshop et al. Arxiv [paper] [code]

- [2022] Welm: A well-read pre-trained language model for chinese. Su et al. Arxiv [paper]

- [2021] Cpm-2: Large-scale cost-effective pre-trained language models. Zhang et al. Arxiv [paper] [code]

- [2021] nmT5--Is parallel data still relevant for pre-training massively multilingual language models? Kale et al. ACL [paper]

- [2021] What Changes Can Large-scale Language Models Bring? Intensive Study on HyperCLOVA: Billions-scale Korean Generative Pretrained Transformers. Kim et al. EMNLP [paper]

- [2021] Ernie 3.0: Large-scale knowledge enhanced pre-training for language understanding and generation. Sun et al. Arxiv [paper]

- [2020] mT5: A massively multilingual pre-trained text-to-text transformer. Xue et al. ACL [paper] [code]

- [2020] Language models are few-shot learners. Brown et al. Arxiv [paper]

- [2024] Huozi: An Open-Source Universal LLM. Huozi Team. [code]

- [2024] MAPO: Advancing Multilingual Reasoning through Multilingual Alignment-as-Preference Optimization. She et al. Arxiv [paper]

- [2024] Orion-14B: Open-source Multilingual Large Language Models. Chen et al. Arxiv [paper] [code]

- [2023] TigerBot: An Open Multilingual Multitask LLM. Chen et al. Arxiv [paper] [code]

- [2023] Aligning Neural Machine Translation Models: Human Feedback in Training and Inference. Moura Ramos et al. Arxiv [paper]

- [2023] Salmon: Self-alignment with principle-following reward models. Sun et al. Arxiv [paper] [code]

- [2023] SteerLM: Attribute Conditioned SFT as an (User-Steerable) Alternative to RLHF. Dong et al. Arxiv [paper] [code]

- [2023] Direct Preference Optimization for Neural Machine Translation with Minimum Bayes Risk Decoding. Yang et al. Arxiv [paper]

- [2023] YAYI 2: Multilingual Open-Source Large Language Models. Luo et al. Arxiv [paper]

- [2023] Tim: Teaching large language models to translate with comparison. Zeng et al. Arxiv [paper] [code]

- [2023] Internlm: A multilingual language model with progressively enhanced capabilities. Team et al. [paper] [code]

- [2023] Baichuan 2: Open large-scale language models. Yang et al. Arxiv [paper] [code]

- [2023] MOSS: Training Conversational Language Models from Synthetic Data. Sun et al. [code]

- [2023] Llama 2: Open foundation and fine-tuned chat models. Touvron et al. Arxiv [paper] [code]

- [2023] Qwen-vl: A frontier large vision-language model with versatile abilities. Bai et al. Arxiv [paper] [code]

- [2023] Okapi: Instruction-tuned Large Language Models in Multiple Languages with Reinforcement Learning from Human Feedback. Lai et al. Arxiv [paper] [code]

- [2023] GPT-4 Technical Report. OpenAI. Arxiv [paper]

- [2022] Glm-130b: An open bilingual pre-trained model. Zeng et al. Arxiv [paper] [code]

- [2022] ChatGPT. OpenAI. Arxiv [paper]

- [2024] Towards Boosting Many-to-Many Multilingual Machine Translation with Large Language Models. Gao et al. Arxiv [paper] [code]

- [2024] MAPO: Advancing Multilingual Reasoning through Multilingual Alignment-as-Preference Optimization. She et al. Arxiv [paper]

- [2024] Introducing Bode: A Fine-Tuned Large Language Model for Portuguese Prompt-Based Task. Garcia et al. Arxiv [paper]

- [2024] Question Translation Training for Better Multilingual Reasoning. Zhu et al. Arxiv [paper] [code]

- [2024] xCoT: Cross-lingual Instruction Tuning for Cross-lingual Chain-of-Thought Reasoning. Chai et al. Arxiv [paper]

- [2024] Orion-14B: Open-source Multilingual Large Language Models. Chen et al. Arxiv [paper] [code]

- [2023] TaCo: Enhancing Cross-Lingual Transfer for Low-Resource Languages in LLMs through Translation-Assisted Chain-of-Thought Processes. Upadhayay et al. Arxiv [paper] [code]

- [2023] TigerBot: An Open Multilingual Multitask LLM. Chen et al. Arxiv [paper] [code]

- [2023] YAYI 2: Multilingual Open-Source Large Language Models. Luo et al. Arxiv [paper] [code]

- [2023] Tim: Teaching large language models to translate with comparison. Zeng et al. Arxiv [paper] [code]

- [2023] Direct Preference Optimization for Neural Machine Translation with Minimum Bayes Risk Decoding. Yang et al. Arxiv [paper]

- [2023] Camoscio: An italian instruction-tuned llama. Santilli et al. Arxiv [paper] [code]

- [2023] Improving Translation Faithfulness of Large Language Models via Augmenting Instructions. Chen et al. Arxiv [paper] [code]

- [2023] Qwen-vl: A frontier large vision-language model with versatile abilities. Bai et al. Arxiv [paper] [code]

- [2023] EcomGPT: Instruction-tuning Large Language Model with Chain-of-Task Tasks for E-commerce. Li et al. Arxiv [paper] [code]

- [2023] SteerLM: Attribute Conditioned SFT as an (User-Steerable) Alternative to RLHF. Dong et al. Arxiv [paper] [code]

- [2023] Empowering Multi-step Reasoning across Languages via Tree-of-Thoughts. Ranaldi et al. Arxiv [paper]

- [2023] Salmon: Self-alignment with principle-following reward models. Sun et al. Arxiv [paper] [code]

- [2023] Aligning Neural Machine Translation Models: Human Feedback in Training and Inference. Moura Ramos et al. Arxiv [paper]

- [2023] Improving Translation Faithfulness of Large Language Models via Augmenting Instructions. Chen et al. Arxiv [paper] [code]

- [2023] Internlm: A multilingual language model with progressively enhanced capabilities. Team et al. [paper] [code]

- [2023] Huozi: An Open-Source Universal LLM. Huozi Team. [code]

- [2023] ParroT: Translating during chat using large language models tuned with human translation and feedback. Jiao et al. Arxiv [paper] [code]

- [2023] Baichuan 2: Open large-scale language models. Yang et al. Arxiv [paper] [code]

- [2023] Conversations in Galician: a Large Language Model for an Underrepresented Language. Bao et al. Arxiv [paper] [code]

- [2023] MOSS: Training Conversational Language Models from Synthetic Data. Sun et al.

- [2023] Llama 2: Open foundation and fine-tuned chat models. Touvron et al. Arxiv [paper] [code]

- [2023] Okapi: Instruction-tuned Large Language Models in Multiple Languages with Reinforcement Learning from Human Feedback. Lai et al. Arxiv [paper] [code]

- [2023] Building a Llama2-finetuned LLM for Odia Language Utilizing Domain Knowledge Instruction Set. Kohli et al. Arxiv [paper]

- [2023] Making Instruction Finetuning Accessible to Non-English Languages: A Case Study on Swedish Models. Holmstrom et al. NoDaLiDa [paper]

- [2023] Extrapolating Large Language Models to Non-English by Aligning Languages. Zhu et al. Arxiv [paper] [code]

- [2023] Instruct-Align: Teaching Novel Languages with to LLMs through Alignment-based Cross-Lingual Instruction. Cahyawijaya et al. Arxiv [paper] [code]

- [2023] Eliciting the Translation Ability of Large Language Models via Multilingual Finetuning with Translation Instructions. Li et al. Arxiv [paper]

- [2023] Palm 2 technical report. Anil et al. Arxiv [paper]

- [2023] GPT-4 Technical Report. OpenAI. Arxiv [paper]

- [2023] BayLing: Bridging Cross-lingual Alignment and Instruction Following through Interactive Translation for Large Language Models. Zhang et al. Arxiv [paper] [code]

- [2023] Phoenix: Democratizing chatgpt across languages. Chen et al. Arxiv [paper] [code]

- [2023] Monolingual or Multilingual Instruction Tuning: Which Makes a Better Alpaca. Chen et al. Arxiv [paper] [code]

- [2023] YuLan-Chat: An Open-Source Bilingual Chatbot. YuLan-Team. [code]

- [2023] Efficient and effective text encoding for chinese llama and alpaca. Cui et al. Arxiv [paper] [code]

- [2023] Mono- and multilingual GPT-3 models for Hungarian. Yang et al. LNAI [paper]

- [2023] Polylm: An open source polyglot large language model. Wei et al. Arxiv [paper] [code]

- [2022] Crosslingual generalization through multitask finetuning. Muennighoff et al. Arxiv [paper] [code]

- [2022] Super-naturalinstructions: Generalization via declarative instructions on 1600+ nlp tasks. Wang et al. EMNLP [paper] [code]

- [2022] Scaling instruction-finetuned language models. Chung et al. Arxiv [paper] [code]

- [2022] ChatGPT. OpenAI. Arxiv [paper]

- [2022] Glm-130b: An open bilingual pre-trained model. Zeng et al. Arxiv [paper] [code]

- [2024] Machine Translation with Large Language Models: Prompt Engineering for Persian, English, and Russian Directions. Pourkamali et al. Arxiv [paper]

- [2023] Cross-lingual Cross-temporal Summarization: Dataset, Models, Evaluation. Zhang et al. Arxiv [paper] [code]

- [2023] Benchmarking Arabic AI with Large Language Models. Abdelali et al. Arxiv [paper] [code]

- [2023] Breaking Language Barriers with a LEAP: Learning Strategies for Polyglot LLMs. Nambi et al. Arxiv [paper]

- [2023] Large language models as annotators: Enhancing generalization of nlp models at minimal cost. Bansal et al. Arxiv [paper]

- [2023] Cross-lingual knowledge editing in large language models. Wang et al. Arxiv [paper] [code]

- [2023] Document-level machine translation with large language models. Wang et al. Arxiv [paper] [code]

- [2023] Zero-shot Bilingual App Reviews Mining with Large Language Models. Wei et al. Arxiv [paper]

- [2022] Language models are multilingual chain-of-thought reasoners. Shi et al. Arxiv [paper] [code]

- [2023] Cross-lingual Prompting: Improving Zero-shot Chain-of-Thought Reasoning across Languages. Qin et al. Arxiv [paper] [code]

- [2023] BUFFET: Benchmarking Large Language Models for Few-shot Cross-lingual Transfer. Asai et al. Arxiv [paper] [code]

- [2022] Cross-lingual Few-Shot Learning on Unseen Languages. Winata et al. ACL [paper]

- [2022] Language models are multilingual chain-of-thought reasoners. Shi et al. Arxiv [paper] [code]

- [2023] Multilingual Large Language Models Are Not (Yet) Code-Switchers. Zhang et al. Arxiv [paper]

- [2023] Prompting multilingual large language models to generate code-mixed texts: The case of south east asian languages. Yong et al. Arxiv [paper]

- [2023] Marathi-English Code-mixed Text Generation. Amin et al. Arxiv [paper]

- [2023] Document-Level Language Models for Machine Translation. Petrick et al. Arxiv [paper]

- [2023] On-the-Fly Fusion of Large Language Models and Machine Translation. Hoang et al. Arxiv [paper]

- [2023] Breaking Language Barriers with a LEAP: Learning Strategies for Polyglot LLMs. Nambi et al. Arxiv [paper]

- [2023] Exploring Prompt Engineering with GPT Language Models for Document-Level Machine Translation: Insights and Findings. Wu et al. WMT [paper] [code]

- [2023] Interactive-Chain-Prompting: Ambiguity Resolution for Crosslingual Conditional Generation with Interaction. Pilault et al. Arxiv [paper] [code]

- [2023] Evaluating task understanding through multilingual consistency: A ChatGPT case study. Ohmer et al. Arxiv [paper] [code]

- [2023] Empowering Multi-step Reasoning across Languages via Tree-of-Thoughts. Ranaldi et al. Arxiv [paper]

- [2023] Leveraging GPT-4 for Automatic Translation Post-Editing. Raunak et al. Arxiv [paper]

- [2023] SCALE: Synergized Collaboration of Asymmetric Language Translation Engines. Cheng et al. Arxiv [paper] [code]

- [2023] Adaptive machine translation with large language models. Moslem et al. Arxiv [paper] [code]

- [2023] Chain-of-Dictionary Prompting Elicits Translation in Large Language Models. Lu et al. Arxiv [paper]

- [2023] Prompting large language model for machine translation: A case study. Zhang et al. Arxiv [paper]

- [2023] Do Multilingual Language Models Think Better in English? Etxaniz et al. Arxiv [paper] [code]

- [2023] Not All Languages Are Created Equal in LLMs: Improving Multilingual Capability by Cross-Lingual-Thought Prompting. Huang et al. Arxiv [paper] [code]

- [2023] Cross-lingual Prompting: Improving Zero-shot Chain-of-Thought Reasoning across Languages. Qin et al. Arxiv [paper] [code]

- [2023] DecoMT: Decomposed Prompting for Machine Translation Between Related Languages using Large Language Models. Puduppully et al. EMNLP [paper] [code]

- [2023] Multilingual LLMs are Better Cross-lingual In-context Learners with Alignment. Tanwar et al. Arxiv [paper] [code]

- [2023] Improving Machine Translation with Large Language Models: A Preliminary Study with Cooperative Decoding. Zeng et al. Arxiv [paper] [code]

- [2023] BUFFET: Benchmarking Large Language Models for Few-shot Cross-lingual Transfer. Asai et al. Arxiv [paper] [code]

- [2023] On Bilingual Lexicon Induction with Large Language Models. Li et al. Arxiv [paper] [code]

- [2022] Bidirectional Language Models Are Also Few-shot Learners. Patel et al. Arxiv [paper]

- [2022] CLASP: Few-Shot Cross-Lingual Data Augmentation for Semantic Parsing. Rosenbaum et al. ACL [paper]

- [2022] Language models are multilingual chain-of-thought reasoners. Shi et al. Arxiv [paper]

- [2021] Few-shot learning with multilingual language models. Lin et al. Arxiv [paper] [code]

- [2024] Enhancing Multilingual Information Retrieval in Mixed Human Resources Environments: A RAG Model Implementation for Multicultural Enterprise. Ahmad et al. Arxiv [paper]

- [2023] Crosslingual Retrieval Augmented In-context Learning for Bangla. Li et al. Arxiv [paper]

- [2023] From Classification to Generation: Insights into Crosslingual Retrieval Augmented ICL. Li et al. Arxiv [paper]

- [2023] Boosting Cross-lingual Transferability in Multilingual Models via In-Context Learning. Kim et al. Arxiv [paper]

- [2023] NoMIRACL: Knowing When You Don't Know for Robust Multilingual Retrieval-Augmented Generation. Thakur et al. Arxiv [paper] [code]

- [2023] LMCap: Few-shot Multilingual Image Captioning by Retrieval Augmented Language Model Prompting. Ramos et al. Arxiv [paper] [code]

- [2023] Multilingual Few-Shot Learning via Language Model Retrieval. Winata et al. Arxiv [paper]

- [2023] The unreasonable effectiveness of few-shot learning for machine translation. Garcia et al. Arxiv [paper]

- [2023] Exploring Human-Like Translation Strategy with Large Language Models. He et al. Arxiv [paper] [code]

- [2023] Leveraging Multilingual Knowledge Graph to Boost Domain-specific Entity Translation of ChatGPT. Zhang et al. MTSummit [paper]

- [2023] Increasing Coverage and Precision of Textual Information in Multilingual Knowledge Graphs. Conia et al. EMNLP [paper]

- [2023] Language Representation Projection: Can We Transfer Factual Knowledge across Languages in Multilingual Language Models? Xu et al. EMNLP [paper]

- [2022] Xricl: Cross-lingual retrieval-augmented in-context learning for cross-lingual text-to-sql semantic parsing. Shi et al. ACL [paper] [code]

- [2022] In-context examples selection for machine translation. Agrawal et al. Arxiv [paper]

Manual Creation obtains high-quality pre-training corpora through manual creation and proofreading.

- Bible Corpus. It offers rich linguistic and cultural content, covering 833 different languages.

- MultiUN. It is composed of official records and other parliamentary documents of the United Nations that are in the public domain.

- IIT Bombay. The dataset comprises parallel content for English-Hindi, along with monolingual Hindi data gathered from diverse existing sources and corpora.

Web Crawling involves crawling extensive multilingual data from the internet.

- CC-100. This corpus comprises monolingual data for 100+ languages and also includes data for romanized languages.

- mC4. It is a multilingual colossal, cleaned version of Common Crawl's web crawl corpus.

- RedPajamav2. It is an open dataset with 30 trillion tokens for training large language models.

- OSCAR. It is a huge multilingual corpus obtained by language classification and filtering of the Common Crawl corpus using the ungoliant architecture.

- Oromo. The Oromo dataset is mostly from the BBC news website, and some languages also have data from Common Crawl.

- Wu Dao 2.0. It is a large dataset constructed for training Wu Dao 2.0. It contains 3 terabytes of text scraped from web data, 90 terabytes of graphical data (incorporating 630 million text/image pairs), and 181 gigabytes of Chinese dialogue (incorporating 1.4 billion dialogue rounds).

- Europarl. The Europarl parallel corpus is extracted from the proceedings of the European Parliament. It includes versions in 21 European languages.

- JW300. It is a parallel corpus of over 300 languages with around 100 thousand parallel sentences per language pair on average.

- Glot500. It is a large corpus covering more than 500 diverse languages.

- Wikimedia. This dataset includes Wikipedia, Wikivoyage, Wiktionary, Wikisource, and others.

- WikiMatrix. It is a freely available corpus with 135 million parallel sentences extracted from Wikipedia articles in 85 languages, facilitating multilingual natural language processing tasks.

- OPUS-100. It is an English-centric corpus covering 100 languages, including English, selected based on the volume of parallel data available in OPUS, with all training pairs including English on either the source or target side.

- AfricanNews. The corpus covers 16 languages, some of which are very low-resource.

- Taxi1500. This dataset is used for assessing multilingual pre-trained language models' cross-lingual generalization, comprising a sentence classification task across 1502 languages from 112 language families.

- CulturaX. It is a substantial multilingual dataset with 6.3 trillion tokens in 167 languages, tailored for LLM development.

Benchmark Adaptation means re-cleaning existing benchmarks to enhance data quality or integrating existing benchmarks to create more extensive pre-training datasets.

- ROOTS. It is a 1.6TB dataset spanning 59 languages (46 natural languages and 13 programming languages), aimed at training the multilingual model BLOOM.

- OPUS. It is a large collection of freely available parallel corpora. It covers over 90 languages and includes data from several domains.

- CCMT Dataset. The datasets associated with the Conference on China Machine Translation (CCMT) often emphasize Chinese language processing and translation tasks. These datasets typically include parallel corpora of Chinese texts with translations into various languages.

- WMT Dataset. The datasets released by the Workshop on Machine Translation (WMT) cover a wide range of languages and translation tasks. They include parallel corpora from various domains, such as news, literature, and technical documents.

- IWSLT Dataset. Datasets released by the International Workshop on Spoken Language Translation (IWSLT) are tailored for spoken language translation tasks, often comprising audio recordings with corresponding transcripts and translations.

Manual Creation acquires SFT corpora through manual creation and proofreading.

- Sup-NatInst. It is a benchmark of 1,616 diverse NLP tasks with expert-written instructions, covering 76 task types.

- OpenAssist. It is a conversation corpus comprising 161,443 messages across 66,497 conversation trees in 35 languages.

- EcoInstruct. It comprises 2.5 million E-commerce instruction data, scaling up the data size and task diversity by constructing atomic tasks with E-commerce basic data types.

- COIG-PC-lite. It is a curated dataset for Chinese NLP tasks, aimed at improving language models' performance in handling Chinese text across various applications.

Benchmark Adaptation involves transformation from existing benchmarks to instruction format.

- xP3. It is a collection of prompts and datasets spanning 46 languages and 16 NLP tasks, utilized for training BLOOMZ and mT0.

- BUFFET. It unifies 15 diverse NLP datasets in 54 typologically diverse languages.

- PolyglotPrompt. The dataset covers six tasks - topic classification, sentiment classification, named entity recognition, question answering, natural language inference, and summarization - across 49 languages.
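As a rough illustration of benchmark adaptation, the sketch below turns one labeled natural language inference example into an instruction-format record. The template wording, the record fields, and the `to_instruction` helper are assumptions made for this example; they are not taken from xP3, BUFFET, or PolyglotPrompt, which define their own templates.

```python
from dataclasses import dataclass

# Hypothetical prompt template, written only for this illustration.
NLI_TEMPLATE = (
    "Premise: {premise}\n"
    "Hypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis? "
    "Answer with entailment, neutral, or contradiction."
)

# Assumed label order; real benchmarks may encode labels differently.
LABEL_NAMES = ["entailment", "neutral", "contradiction"]


@dataclass
class InstructionRecord:
    instruction: str
    output: str
    language: str


def to_instruction(example: dict, language: str) -> InstructionRecord:
    """Convert one benchmark example (premise, hypothesis, integer label) to instruction format."""
    prompt = NLI_TEMPLATE.format(
        premise=example["premise"], hypothesis=example["hypothesis"]
    )
    return InstructionRecord(
        instruction=prompt,
        output=LABEL_NAMES[example["label"]],
        language=language,
    )


if __name__ == "__main__":
    sample = {
        "premise": "Un chat dort sur le canapé.",
        "hypothesis": "Un animal dort.",
        "label": 0,
    }
    record = to_instruction(sample, language="fr")
    print(record.instruction)
    print(record.output)
```

Keeping the template separate from the conversion function makes it straightforward to add further templates per task or per prompt language.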

Machine Translation translates the existing monolingual datasets into multilingual instruction datasets.

- xP3-MT. It is a mixture of 13 training tasks in 46 languages with prompts in 20 languages (machine-translated from English).

- MultilingualSIFT. It is a dataset translated using GPT-3.5 Turbo.

- Bactrian-X. It is a dataset with 3.4 million instruction-response pairs in 52 languages. English instructions from alpaca-52k and dolly-15k are translated into 51 languages using Google Translate API.

- CrossAlpaca. They are benchmarks used in CrossAlpaca.

- MGSM8KInstruct. It is an inaugural multilingual math reasoning instruction dataset.
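The machine translation route can be sketched as a loop that produces one translated copy of every instruction record per target language. Everything here is illustrative: `toy_translate` is a hypothetical stand-in that only tags the text with a language code, and a real pipeline would plug in an actual MT model or API in its place.

```python
from typing import Callable, Dict, List


def toy_translate(text: str, target_lang: str) -> str:
    """Hypothetical placeholder for a real MT system; it only prefixes a language tag."""
    return f"[{target_lang}] {text}"


def translate_dataset(
    records: List[Dict[str, str]],
    target_langs: List[str],
    translate: Callable[[str, str], str] = toy_translate,
) -> List[Dict[str, str]]:
    """Expand monolingual instruction records into one translated copy per target language."""
    multilingual = []
    for record in records:
        for lang in target_langs:
            multilingual.append(
                {
                    "lang": lang,
                    "instruction": translate(record["instruction"], lang),
                    "output": translate(record["output"], lang),
                }
            )
    return multilingual


if __name__ == "__main__":
    english = [{"instruction": "Name a primary color.", "output": "Red."}]
    for row in translate_dataset(english, ["de", "sw"]):
        print(row)
```

Passing the translator as a callable keeps the expansion logic independent of whichever translation backend is used.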

MLLM Aided Generation means that the data are automatically synthesized with the help of MLLM.

- MultiAlpaca. It is a multilingual instruction dataset with 132,701 samples.

- Guanaco. It is the dataset used in Guanaco models.

- Alpaca-4. It is a dataset generated by GPT-4, consisting of a 52K instruction-following dataset in both English and Chinese, along with GPT-4-generated feedback data rating the outputs of three instruction-tuned models.

- OverMiss. It is a dataset used to improve model faithfulness by comparing over-translation and mistranslation results with the correct translation.

- ShareGPT. ShareGPT was originally an open-source Chrome extension for sharing users' ChatGPT conversations.

- TIM. The dataset uses constructed samples to provide comparison signals for model learning, supplementing regular translation data with dictionary information or translation errors.

- Okapi. It offers resources for instruction tuning with RLHF across 26 languages, encompassing ChatGPT prompts, multilingual instruction datasets, and multilingual response ranking data.

Please update the paper information with the following format:

title: [Title]

paper: [Conference/Journal/arXiv]

author: [Authors]

code: (optional)

key-point: (optional)
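For contributors who prefer to script their submissions, a minimal sketch of rendering an entry in the format above might look like the following. The `format_submission` helper and the placeholder values are hypothetical and are not part of the repository.

```python
from typing import Optional


def format_submission(
    title: str,
    paper: str,
    author: str,
    code: Optional[str] = None,
    key_point: Optional[str] = None,
) -> str:
    """Render a paper submission using the field names listed above."""
    lines = [f"title: {title}", f"paper: {paper}", f"author: {author}"]
    # The last two fields are optional, so they are emitted only when provided.
    if code:
        lines.append(f"code: {code}")
    if key_point:
        lines.append(f"key-point: {key_point}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder values for illustration only.
    print(format_submission("Example Title", "arXiv", "A. Author et al."))
```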

For any interesting news about multilingual LLMs, you can also mention @Qiguang_Chen on Twitter or email me at charleschen2333@gmail.com, and we will follow up and update our Awesome-Multilingual-LLM GitHub repo.

We hope everyone enjoys the multilingual LLM future :)