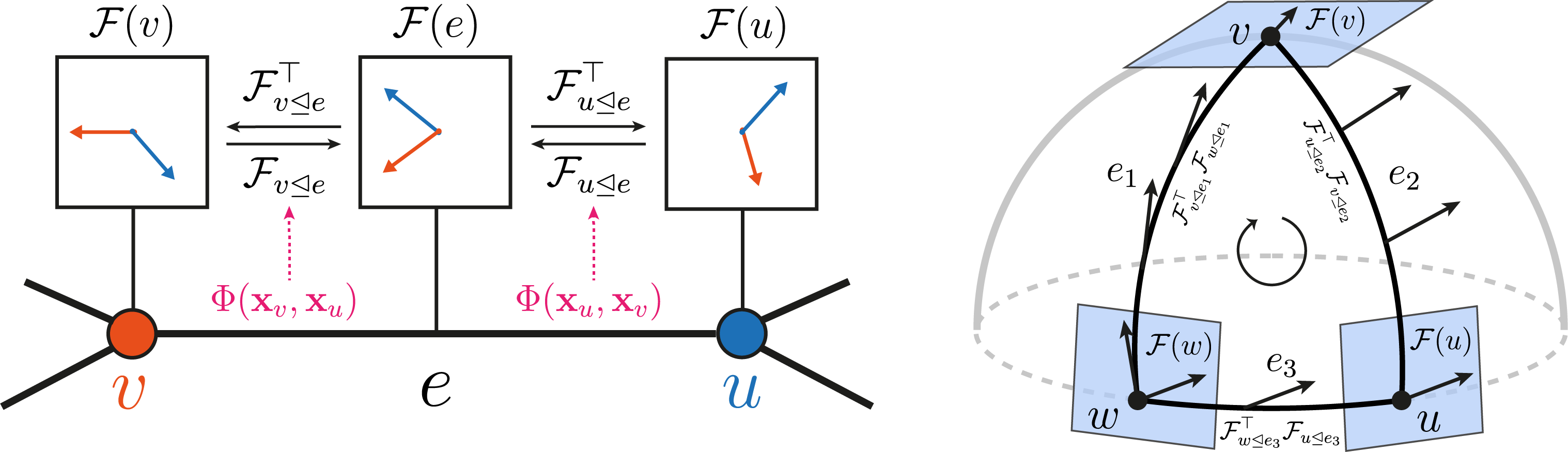

This repository contains the official code for the paper Neural Sheaf Diffusion: A Topological Perspective on Heterophily and Oversmoothing in GNNs (NeurIPS 2022).

We used CUDA 10.2 for this project. To set up the environment, run the following commands:

conda env create --file=environment_gpu.yml

conda activate nsd

To use a different CUDA version, modify the version specified inside environment_gpu.yml. To run the code in a CPU-only environment, use environment_cpu instead.

To make sure that everything is set up correctly, you can run all the tests using:

pytest -v .

To run the experiments without a Weights & Biases (wandb) account, first disable wandb:

wandb disabled

Then, for instance, to run the training procedure on texas, simply run the example script provided:

sh ./exp/scripts/run_texas.sh
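As an alternative to changing the wandb setting globally, the wandb client also reads the `WANDB_MODE` environment variable, so logging can be switched off for a single command. The sketch below wraps that in a small function. The name `run_without_wandb` is a hypothetical helper that is not part of this repository, and the sketch assumes the training script does not override the wandb mode itself.

```shell
# Hypothetical helper (not part of this repository): run any command with
# wandb logging disabled for that command only.
# Usage: run_without_wandb <command> [args...]
run_without_wandb() {
  # WANDB_MODE=disabled turns wandb calls into no-ops for this one process;
  # the surrounding shell environment is left unchanged.
  WANDB_MODE=disabled "$@"
}
```

For example, `run_without_wandb sh ./exp/scripts/run_texas.sh` would launch the texas example without touching the global wandb configuration.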

To run the experiments using wandb, first create a Weights & Biases account. Then run the following commands to log in, and follow the displayed instructions:

wandb online

wandb login

Then, you can run the example training procedure on texas via:

export ENTITY=<WANDB_ACCOUNT_ID>

sh ./exp/scripts/run_texas.sh

Scripts for the other heterophilic datasets are also provided in exp/scripts.

To run a hyperparameter sweep, you will need a wandb account. Once you have an account, you can run an example sweep as follows:

export ENTITY=<WANDB_ACCOUNT_ID>

wandb sweep --project sheaf config/orth_webkb_sweep.yml

This sets up the sweep for a discrete bundle model on the WebKB datasets, as described in the YAML config at config/orth_webkb_sweep.yml.

To run the sweep on a single GPU, simply run the command displayed on screen after running the sweep command above.
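For orientation, the command that `wandb sweep` prints normally has the form `wandb agent <entity>/<project>/<sweep_id>`. The sketch below assembles such a command and pins it to one GPU through `CUDA_VISIBLE_DEVICES`. It assumes the sweep was created under the account stored in `ENTITY` and in the `sheaf` project; the helper name `single_gpu_agent_cmd` and its GPU index argument are illustrative and not part of this repository. When in doubt, prefer the exact command shown on screen.

```shell
# Hypothetical helper (not part of this repository): print the agent command
# for one sweep, restricted to a single GPU.
# Usage: single_gpu_agent_cmd <SWEEP_ID> [GPU_INDEX]
single_gpu_agent_cmd() {
  sweep_id="$1"
  gpu_index="${2:-0}"   # assumption: default to the first GPU

  # Abort with a message if ENTITY has not been set beforehand.
  : "${ENTITY:?export ENTITY=<WANDB_ACCOUNT_ID> first}"

  # Only prints the command; copy it or pass it to eval to actually run it.
  printf 'CUDA_VISIBLE_DEVICES=%s wandb agent %s/sheaf/%s\n' \
    "$gpu_index" "$ENTITY" "$sweep_id"
}
```

Printing the command rather than executing it keeps the sketch safe to try without a GPU or a wandb login.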

To run the sweep on multiple GPUs, run the following command, substituting the SWEEP_ID received above:

sh run_sweeps.sh <SWEEP_ID>

The WebKB (texas, wisconsin, cornell) and film datasets are downloaded on the fly. The WikipediaNetwork datasets with the Geom-GCN pre-processing can be downloaded from the Geom-GCN repo.

The files for the Planetoid datasets can also be found in the Geom-GCN repo.

The downloaded files must be placed into datasets/<DATASET_NAME>/raw/.
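The placement step can be sketched as a small shell function, run from the repository root. The function name `place_raw_files` is a hypothetical helper and not part of this repository; it only creates `datasets/<DATASET_NAME>/raw/` and copies the given files into it, without checking which files a particular dataset expects.

```shell
# Hypothetical helper (not part of this repository): copy downloaded files
# into datasets/<DATASET_NAME>/raw/ relative to the current directory.
# Usage: place_raw_files <DATASET_NAME> <file> [file...]
place_raw_files() {
  if [ "$#" -lt 2 ]; then
    echo "usage: place_raw_files <DATASET_NAME> <file> [file...]" >&2
    return 1
  fi
  dataset_name="$1"
  shift                          # remaining arguments are the files to copy
  raw_dir="datasets/${dataset_name}/raw"
  mkdir -p "$raw_dir"            # create the target directory if missing
  cp -- "$@" "$raw_dir/"
}
```

A call such as `place_raw_files <DATASET_NAME> /path/to/downloaded/*` would then copy everything from a download folder; the source path here is a placeholder.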

For attribution in academic contexts, please use the bibtex entry below:

@inproceedings{bodnar2022neural,
  title={Neural Sheaf Diffusion: A Topological Perspective on Heterophily and Oversmoothing in {GNN}s},
  author={Cristian Bodnar and Francesco Di Giovanni and Benjamin Paul Chamberlain and Pietro Li{\`o} and Michael M. Bronstein},
  booktitle={Advances in Neural Information Processing Systems},
  editor={Alice H. Oh and Alekh Agarwal and Danielle Belgrave and Kyunghyun Cho},
  year={2022},
  url={https://openreview.net/forum?id=vbPsD-BhOZ}
}