Student Helper Unity 3D Chatbot

Hack-a-Roo Spring 2021 Submission

Team Ethnic Gems

- Srichakradhar Reddy

- Rohit Reddy

Flow

- User interacts in augmented reality and gives voice commands such as "Walk Forward".

- The phone microphone picks up the voice command and the running application sends it to Watson Speech-to-Text.

- Watson Speech-to-Text converts the audio to text and returns it to the running application on the phone.

- The application sends the text to Watson Assistant. Watson Assistant returns the recognized intent "Forward". The intent triggers an animation state change.

- The application sends the response from Watson Assistant to Watson Text-to-Speech.

- Watson Text-to-Speech converts the text to audio and returns it to the running application on the phone.

- The application plays the audio response and waits for the next voice command.
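
The flow above can be sketched as a single pass through three services. This is a minimal sketch, not the project's Unity code: the three services are passed in as plain functions, the `fake_*` stand-ins and the `INTENT_TO_ANIMATION` table are hypothetical, and no real Watson API is called.

```python
from typing import Callable

# Hypothetical mapping from Assistant intents to animation states.
INTENT_TO_ANIMATION = {"Forward": "WalkForward", "Stop": "Idle"}


def handle_voice_command(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    assistant: Callable[[str], dict],
    text_to_speech: Callable[[str], bytes],
) -> dict:
    """Run one pass of the flow: audio in, animation state and audio out."""
    text = speech_to_text(audio)            # audio -> text
    reply = assistant(text)                 # text -> {"intent": ..., "text": ...}
    # An unmapped intent yields None, meaning no animation change.
    animation = INTENT_TO_ANIMATION.get(reply["intent"])
    speech = text_to_speech(reply["text"])  # response text -> audio
    return {"animation": animation, "speech": speech}


# Stand-in services so the sketch runs without IBM Cloud credentials.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_assistant(text: str) -> dict:
    intent = "Forward" if "forward" in text.lower() else "Unknown"
    return {"intent": intent, "text": f"Okay: {text}"}


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


result = handle_voice_command(b"Walk Forward", fake_stt, fake_assistant, fake_tts)
```

Passing the services in as functions keeps the loop testable on a desktop before wiring it to the phone's microphone and speaker.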

Description

It is the year 2020 and students are experiencing a new way of life when it comes to getting an education. Students are realizing they need to adopt a proactive, self-service mindset to fulfill their academic needs. An intelligent chatbot that helps students find and access learning content supports this new self-service model. This pattern shows users how to build a self-service chatbot not only for education, but also for any other industry where users need to find information quickly and easily.

Using Watson Assistant, this pattern defines a dialog that a student and a course provider might experience as a student searches for learning content. Students can enter grade-level and academic-topic questions, and the chatbot responds with course recommendations and learning content links. The conversation responses are further enhanced by using Watson Discovery and the Watson Assistant search skill. Natural Language Understanding (NLU) is introduced in this pattern to complement Watson Discovery's accuracy by extracting custom fields for entities, concepts, and categories.

1. Pre-requisites

- IBM Cloud Account

- Unity

- Node.js Versions >= 6: An asynchronous event driven JavaScript runtime, designed to build scalable applications.

- Python V3.5+: Download the latest version of Python

- Pandas: pandas is a fast, powerful, flexible, and easy-to-use open source data analysis and manipulation tool built on top of the Python programming language.

2. IBM Cloud services

- IBM Watson Speech-To-Text service instance.

- IBM Watson Text-to-Speech service instance.

- IBM Watson Assistant service instance.

- IBM Watson Natural Language Understanding (NLU) service

- IBM Watson Discovery

NOTE: Use the Plus offering of Watson Assistant. You have access to a 30-day trial.

What is an Assistant Search Skill?

An Assistant search skill is a mechanism that allows you to directly query a Watson Discovery collection from your Assistant dialog. A search skill is triggered when the dialog reaches a node that has a search skill enabled. The user query is then passed to the Watson Discovery collection via the search skill, and the results are returned to the dialog for display to the user. Customizing how your documents are indexed into Discovery improves the answers returned from queries and what your users experience.
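
As an illustration of that routing, the sketch below models a dialog node as a dictionary and hands the user query to a search function only when the node has search enabled. Everything here is hypothetical (the `search_enabled` flag, the `COLLECTION` contents, and the `fake_discovery_search` matcher); it shows the control flow, not the Watson Assistant API.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a Discovery collection.
COLLECTION = {
    "algebra-1": "Algebra I: linear equations and inequalities",
    "bio-101": "Biology 101: cells and genetics",
}


def fake_discovery_search(query: str) -> List[str]:
    """Return documents that contain any query term (naive substring match)."""
    terms = query.lower().split()
    return [
        text for text in COLLECTION.values()
        if any(term in text.lower() for term in terms)
    ]


def respond(node: Dict, user_query: str,
            search: Callable[[str], List[str]]) -> List[str]:
    """Answer from the node itself, or from search if the node enables it."""
    if node.get("search_enabled"):
        return search(user_query)
    return [node["response"]]


greeting_node = {"response": "Hi! What would you like to learn?"}
course_node = {"search_enabled": True}

greeting = respond(greeting_node, "hello", fake_discovery_search)
courses = respond(course_node, "algebra courses", fake_discovery_search)
```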

Click here for more information about the Watson Assistant search skill.

Why Natural Language Understanding (NLU)?

NLU performs text analysis to extract metadata such as concepts, entities, keywords, and other categories of words. Data sets are then enriched with NLU-detected entities, keywords, and concepts (for example, course names). Although Discovery provides great results, sometimes a developer finds that the results are not as relevant as they might be and that there is room for improvement. Discovery is built for "long-tail" use cases where the use case has many varied questions and results that you can't easily anticipate or optimize. Additionally, if the corpus of documents is relatively small (fewer than 1,000 documents), Discovery doesn't have enough information to distinguish important terms from unimportant terms. Discovery can work with a corpus this small, but it is less effective because it has less information about the relative frequency of terms in the domain.
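
To make the small-corpus point concrete, here is a toy calculation using inverse document frequency (IDF), one common term-weighting statistic. This is an assumption for illustration only: the source does not say which statistics Discovery uses internally, and the three-document corpus is made up.

```python
import math
from collections import Counter


def inverse_document_frequency(docs):
    """Return {term: log(N / df)}, where df is the number of docs containing the term."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc.lower().split()))
    return {term: math.log(n / count) for term, count in df.items()}


# Hypothetical three-document corpus.
tiny_corpus = [
    "intro algebra course",
    "geometry course",
    "biology course",
]

idf = inverse_document_frequency(tiny_corpus)
```

In this corpus the generic word "intro" and the topic word "algebra" each appear in exactly one document, so they receive the same weight. With so few documents there is no frequency signal to separate them, which is the gap that NLU-extracted metadata helps fill.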

Flow

- Execute the Python program to run the data set through Natural Language Understanding to extract the metadata (e.g. course name, description, etc.) and enrich the .csv file.

- Run the Node program to convert the .csv file to .json files (required for the Discovery collection).

- Programmatically upload the .json files into the Discovery collection.

- The user interacts with the chatbot via a Watson Assistant dialog skill.

- When the student asks about course information, a search query is issued to the Watson Discovery service through a Watson Assistant search skill. Discovery returns the responses to the dialog.
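
The conversion step in this flow (one .json document per .csv row) can be sketched as follows. The repository does this with a Node program; the Python version below is only an illustrative equivalent, and the `doc-<index>.json` naming scheme is an assumption.

```python
import csv
import json
from pathlib import Path


def csv_to_json_docs(csv_path, out_dir):
    """Write one JSON file per CSV row and return the list of written paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    with open(csv_path, newline="", encoding="utf-8") as handle:
        # DictReader uses the header row as keys, so each row becomes a JSON object.
        for index, row in enumerate(csv.DictReader(handle)):
            target = out / f"doc-{index}.json"  # hypothetical naming scheme
            target.write_text(json.dumps(row, indent=2), encoding="utf-8")
            written.append(target)
    return written
```

For example, `csv_to_json_docs("courses.csv", "manualdocs")` would fill a manualdocs directory from a (hypothetical) courses.csv file.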

3. Configure Watson NLU

NLU enriches Discovery by adding metadata tags to your data sets. In other words, it adds terms that overlap with words that users might actually provide in their queries.
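
A minimal sketch of what such enrichment looks like on tabular data: each row gains extra columns holding the extracted terms. The `fake_analyze` function and its `KNOWN_TOPICS` list are hypothetical stand-ins for the NLU service, and the standard csv module is used here instead of pandas to keep the sketch dependency-free.

```python
import csv
import io
from typing import Callable, Dict, List

# Hypothetical topic list used by the stand-in analyzer below.
KNOWN_TOPICS = {"algebra", "geometry", "biology"}


def fake_analyze(text: str) -> Dict[str, List[str]]:
    """Stand-in for an NLU call: tags words found in KNOWN_TOPICS as entities."""
    words = [word.strip(".,").lower() for word in text.split()]
    return {"entities": [w for w in words if w in KNOWN_TOPICS], "concepts": []}


def enrich_rows(rows: List[Dict[str, str]], text_field: str,
                analyze: Callable[[str], Dict[str, List[str]]]) -> List[Dict[str, str]]:
    """Return copies of the rows with one extra column per metadata type."""
    enriched = []
    for row in rows:
        new_row = dict(row)
        for key, values in analyze(row[text_field]).items():
            new_row[key] = "; ".join(values)
        enriched.append(new_row)
    return enriched


sample_csv = "course,description\nALG1,Covers algebra basics.\n"
rows = list(csv.DictReader(io.StringIO(sample_csv)))
enriched = enrich_rows(rows, "description", fake_analyze)
```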

- The following instructions run the .csv files through NLU and extract entities and concepts. Do this by running the Python program:

cd src

pip install watson-developer-cloud==1.5

pip install --upgrade ibm-watson

pip install pandas

sudo pip3 install -U python-dotenv

python NLUEntityExtraction.py

4. Configure Watson Discovery

Watson Discovery uses AI search technology to retrieve answers to questions. It contains language processing capabilities and can be trained on both structured and unstructured data. The data that Discovery is trained on is contained within what is called a Collection (aka Database). You can learn more about Watson Discovery here.

Create Discovery Collection

Configure Discovery:

- Create a set of .json files that Discovery will consume for its collection. The Node program converts the .csv file to a set of .json files in a directory named manualdocs.

- Install Node.js (Versions >= 6).

- In the root directory of your repository, install the dependencies.

npm install

- Run the command below.

node read-file.js

- Verify that the JSON files exist.

- Programmatically upload the .json files into the Discovery collection.

Ensure you have added your Discovery credentials to a .env file in the root directory of your repo.

npm install python-dotenv

npm install ibm-watson

node upload-file.js

5. Building and Running

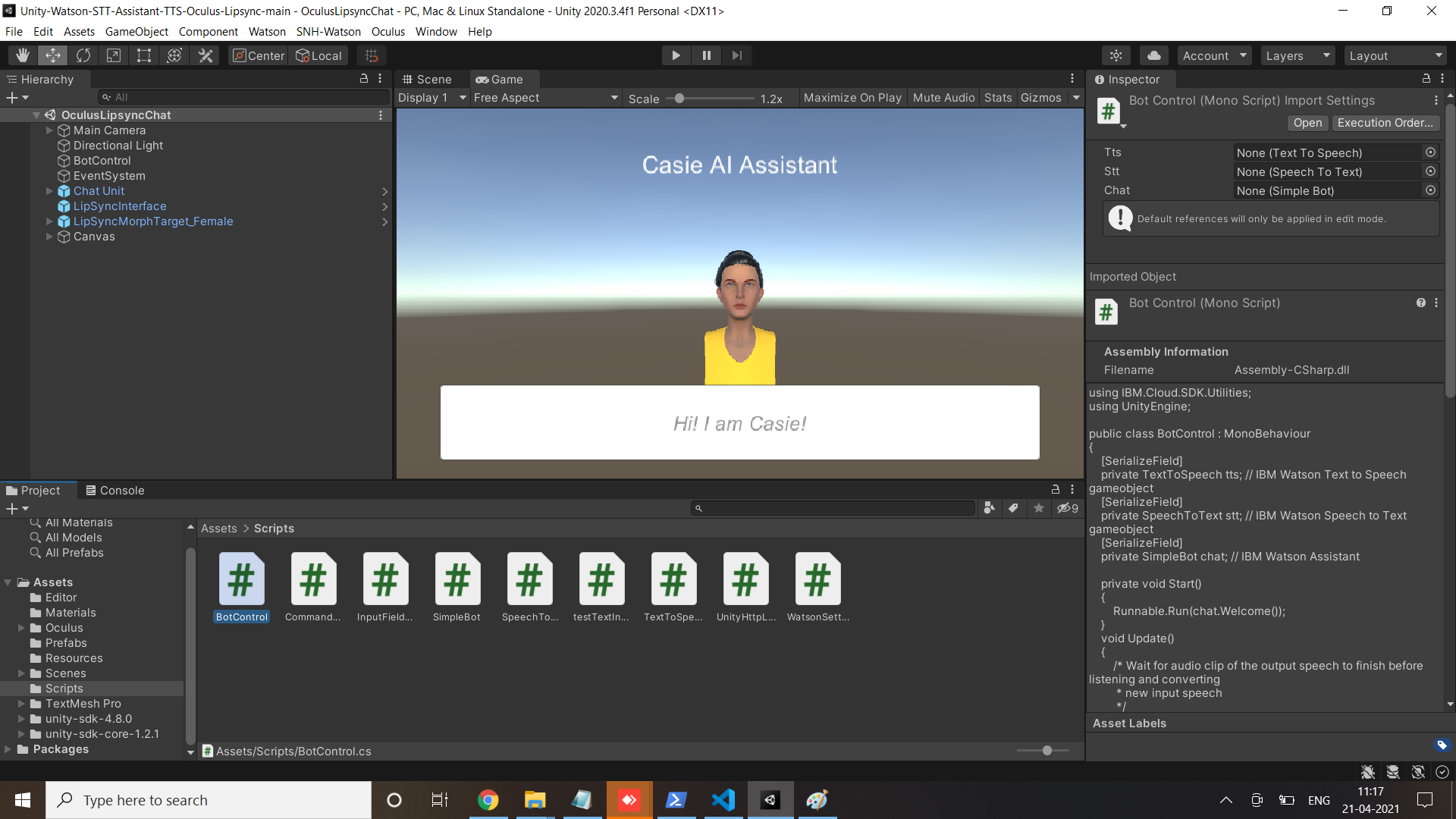

Note: This has been compiled and tested using Unity 2018.3.0f2 and Watson SDK for Unity 3.1.0 (2019-04-09) & Unity Core SDK 0.2.0 (2019-04-09).

The directories for unity-sdk and unity-sdk-core are blank within the Assets directory, placeholders for where the SDKs should be. Either delete these blank directories or move the contents of the SDKs into the directories after the following commands.

- Download the Watson SDK for Unity or perform the following:

git clone https://github.com/watson-developer-cloud/unity-sdk.git

Make sure you are on the 3.1.0 tagged branch.

- Download the Unity Core SDK or perform the following:

git clone https://github.com/IBM/unity-sdk-core.git

Make sure you are on the 0.2.0 tagged branch.

- Open Unity and inside the project launcher select the button.

- If prompted to upgrade the project to a newer Unity version, do so.

- Follow these instructions to add the Watson SDK for Unity downloaded in step 1 to the project.

- Follow these instructions to create your Speech to Text, Text to Speech, and Watson Assistant services and find your credentials using IBM Cloud.

Please note that the following instructions include scene changes: game objects have been added or replaced for AR Foundation.

- In the Unity Hierarchy view, click to expand under AR Default Plane, then click DefaultAvatar. If you are not in the Main scene, click Scenes and Main in your Project window, then find the game objects listed above.

- In the Inspector you will see variables for Speech To Text, Text to Speech, and Assistant. If you are using US-South or Dallas, you can leave the Assistant URL, Speech to Text URL, and Text To Speech URL blank, taking on the default value as shown in the WatsonLogic.cs file. If not, please provide the URL values listed on the Manage page for each service in IBM Cloud.

- Fill out the Assistant Id, Assistant IAM Apikey, Speech to Text Iam Apikey, and Text to Speech Iam Apikey. All Iam Apikey values are your API key or token, listed under the URL on the Manage page for each service.
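
The "leave the URL blank to use the default" behavior described above amounts to a simple fallback. The sketch below shows the idea in Python rather than the project's C#; the `DEFAULT_URLS` values are placeholders, not the real endpoints, which are defined in WatsonLogic.cs.

```python
from typing import Optional

# Placeholder defaults for illustration only; the real values live in WatsonLogic.cs.
DEFAULT_URLS = {
    "assistant": "https://example.invalid/assistant",
    "speech_to_text": "https://example.invalid/speech-to-text",
    "text_to_speech": "https://example.invalid/text-to-speech",
}


def resolve_service_url(service: str, configured: Optional[str]) -> str:
    """Use the configured URL if one was provided, otherwise the service default."""
    if configured and configured.strip():
        return configured.strip()
    return DEFAULT_URLS[service]


blank = resolve_service_url("assistant", "")
custom = resolve_service_url("assistant", " https://eu.example.invalid/assistant ")
```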

Building for iOS

Build steps for iOS have been tested with iOS 11+ and Xcode 10.2.1.

- To build for iOS and deploy to your phone, select File -> Build Settings (Ctrl + Shift + B) and click Build.

- When prompted you can name your build.

- When the build is completed, open the project in Xcode by clicking on Unity-iPhone.xcodeproj.

- Follow steps to sign your app. Note: you must have an Apple Developer Account.

- Connect your phone via USB and select it from the target device list at the top of Xcode. Click the play button to run it.

- Alternatively, connect the phone via USB and select File -> Build and Run (or Ctrl+B).

Building for Android

Build steps for Android have been tested with Pie on a Pixel 2 device with Android Studio 3.4.1.

- To build for Android and deploy to your phone, select File -> Build Settings (Ctrl + Shift + B) and click Switch Platform.

- The project will reload in Unity. When done, click Build.

- When prompted you can name your build.

- When the build is completed, install the APK on your emulator or device.

- Open the app to run.

Troubleshooting

AR features are only available on iOS 11+ and cannot run on an emulator/simulator. Be sure to check that your player settings target a minimum iOS version of 11, and that your Xcode deployment target (under deployment info) is also 11.

In order to run the app you will need to sign it. Follow steps here.

Mojave updates may adjust security settings and block microphone access in Unity. If Watson Speech to Text appears to be in a ready and listening state but not hearing audio, make sure to check your security settings for microphone permissions. For more information: https://support.apple.com/en-us/HT209175.

You may need the ARCore APK for your Android emulator. This pattern has been tested with ARCore SDK v1.9.0 on a Pixel 2 device running Pie.