
Repository for "A Comprehensive Evaluation of Large Language Models on Legal Judgment Prediction", published at EMNLP Findings 2023.

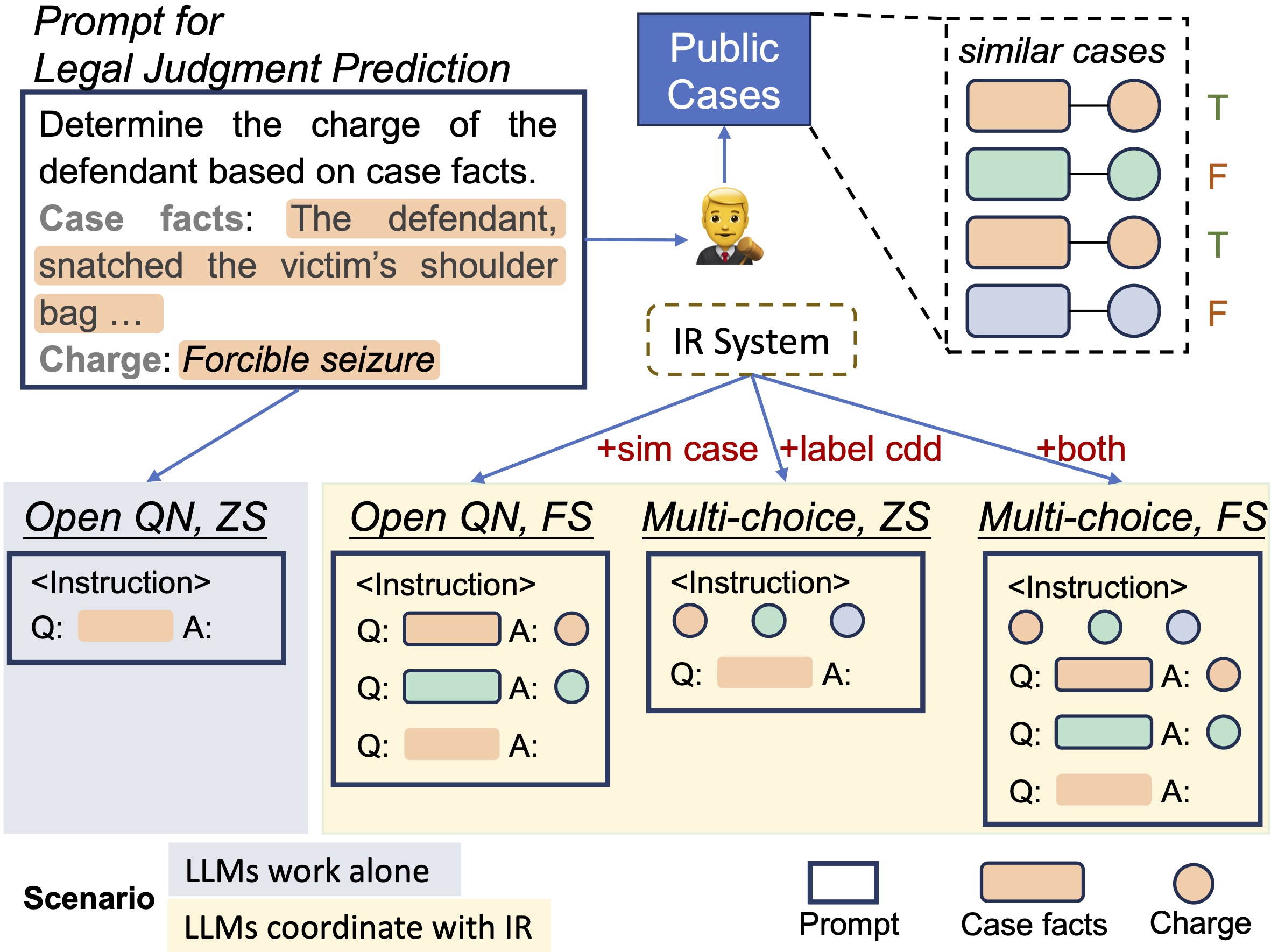

To comprehensively evaluate the legal capabilities of large language models (LLMs), we propose baseline solutions and evaluate them on the task of legal judgment prediction (LJP).

Motivation

Existing benchmarks, e.g., lm_eval_harness, mainly adopt a perplexity-based approach for classification tasks: the option to which the model assigns the highest likelihood is taken as the prediction. However, LMs typically interact with humans through open-ended generation, so it is critical to directly evaluate the content they generate with greedy decoding or sampling.

Evaluation on LM Generated Contents

We propose an automatic evaluation pipeline that directly evaluates generated content on classification tasks:

- First, the LM is prompted with a task instruction to generate class labels. The generated text may not exactly match the standard label names.

- Then, a parser maps the generated text to labels based on text similarity scores.
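The parsing step can be illustrated with a minimal sketch. It is not the repo's parser: it assumes that difflib's `SequenceMatcher.ratio()` is an acceptable stand-in for the similarity score and that models separate multiple labels with newlines, commas, or semicolons. `similarity`, `parse_labels`, and the 0.6 threshold are hypothetical.

```python
import re
from difflib import SequenceMatcher
from typing import List


def similarity(a: str, b: str) -> float:
    """Case-insensitive character-level similarity in [0, 1] (difflib's ratio)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def parse_labels(generated: str, label_names: List[str], threshold: float = 0.6) -> List[str]:
    """Map free-form generated text to standard label names.

    The generation is split into pieces on newlines, commas and semicolons
    (ASCII or Chinese); each piece is mapped to its most similar label and kept
    only if the similarity reaches `threshold`. Duplicates are dropped.
    """
    predictions: List[str] = []
    for piece in re.split(r"[\n,;，；、]+", generated):
        piece = piece.strip(" .。")
        if not piece:
            continue
        best = max(label_names, key=lambda label: similarity(piece, label))
        if similarity(piece, best) >= threshold and best not in predictions:
            predictions.append(best)
    return predictions


labels = ["theft", "robbery", "fraud"]
print(parse_labels("Theft\nrobery", labels))  # ['theft', 'robbery']
```

Matching each piece to its nearest label lets a misspelled or differently cased answer ("robery") still count as a valid prediction, while the threshold keeps unrelated text from being forced onto some label.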

LM + Retrieval System

To assess how LMs perform in the legal domain when given retrieved information, we add two kinds of additional information to the prompts: label candidates and similar cases as demonstrations. Combining these two yields four prompt sub-settings:

- (free, zero-shot): Task instruction only, with no additional information.

- (free, few-shot): Task instruction + demonstrations.

- (multi, zero-shot): Task instruction + label candidates (options).

- (multi, few-shot): Task instruction + label candidates + demonstrations.
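The four sub-settings differ only in which optional parts are added to the prompt. The sketch below assembles a prompt from these parts; `build_prompt` and the template strings ("Candidate labels:", "Case:", "Answer:") are hypothetical placeholders, not the prompts used in the paper.

```python
from typing import List, Optional, Tuple


def build_prompt(
    instruction: str,
    case_fact: str,
    candidates: Optional[List[str]] = None,
    demos: Optional[List[Tuple[str, str]]] = None,
) -> str:
    """Assemble a prompt for one of the four sub-settings.

    free-0shot : no candidates, no demos
    free-kshot : k demos (similar cases with their labels)
    multi-0shot: label candidates only
    multi-kshot: label candidates + k demos
    """
    parts = [instruction]
    if candidates:  # "multi" settings: show the label options
        parts.append("Candidate labels: " + ", ".join(candidates))
    for demo_fact, demo_label in demos or []:  # few-shot settings: retrieved similar cases
        parts.append(f"Case: {demo_fact}\nAnswer: {demo_label}")
    parts.append(f"Case: {case_fact}\nAnswer:")  # the case to be predicted
    return "\n\n".join(parts)


free_0shot = build_prompt("Predict the charge.", "A took B's bicycle.")
multi_1shot = build_prompt(
    "Predict the charge.",
    "A took B's bicycle.",
    candidates=["theft", "fraud"],
    demos=[("C took D's phone.", "theft")],
)
print(multi_1shot)
```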

| rank | model | score | free-0shot | free-1shot | free-2shot | free-3shot | free-4shot | multi-0shot | multi-1shot | multi-2shot | multi-3shot | multi-4shot |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | gpt4 | 63.05 | 50.52 | 62.72 | 67.54 | 68.61 | 71.02 | 62.31 | 70.42 | 71.81 | 73.24 | 74.00 |
| 2 | chatgpt | 58.13 | 43.14 | 58.42 | 61.86 | 64.40 | 66.16 | 60.67 | 63.51 | 66.85 | 69.59 | 66.62 |
| 3 | chatglm_6b | 47.74 | 41.89 | 50.30 | 47.76 | 48.59 | 48.67 | 53.74 | 49.26 | 47.56 | 47.61 | 45.32 |
| 4 | bloomz_7b | 44.14 | 46.90 | 53.28 | 51.06 | 50.90 | 49.26 | 50.68 | 29.25 | 27.92 | 25.27 | 23.37 |
| 5 | vicuna_13b | 39.83 | 25.50 | 48.85 | 47.64 | 49.49 | 39.82 | 44.70 | 41.73 | 41.48 | 35.03 | 21.61 |

Note:

- Metric: Macro-F1

- Score: $\text{score} = \frac{1}{4}\left(\text{free-0shot} + \text{free-2shot} + \text{multi-0shot} + \text{multi-2shot}\right)$

- OpenAI model names: gpt-3.5-turbo-0301 (chatgpt), gpt-4-0314 (gpt4)
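As a sanity check on the score formula, the sketch below recomputes the score column from the four sub-task columns of the leaderboard table. `ROWS` and `overall_score` are illustrative names; the numbers are copied from the table.

```python
# Sub-tasks averaged into the leaderboard score.
SCORE_SUB_TASKS = ("free-0shot", "free-2shot", "multi-0shot", "multi-2shot")

# Macro-F1 per sub-task copied from the table, in SCORE_SUB_TASKS order,
# followed by the reported score.
ROWS = {
    "gpt4": (50.52, 67.54, 62.31, 71.81, 63.05),
    "chatgpt": (43.14, 61.86, 60.67, 66.85, 58.13),
    "chatglm_6b": (41.89, 47.76, 53.74, 47.56, 47.74),
    "bloomz_7b": (46.90, 51.06, 50.68, 27.92, 44.14),
    "vicuna_13b": (25.50, 47.64, 44.70, 41.48, 39.83),
}


def overall_score(sub_task_f1: dict) -> float:
    """Unweighted mean of the four selected sub-task scores."""
    return sum(sub_task_f1[t] for t in SCORE_SUB_TASKS) / len(SCORE_SUB_TASKS)


for model, (*f1s, reported) in ROWS.items():
    score = overall_score(dict(zip(SCORE_SUB_TASKS, f1s)))
    print(f"{model}: computed {score:.4f}, reported {reported}")
```

Each computed value agrees with the reported score to two decimals; for example, chatglm_6b gives (41.89 + 47.76 + 53.74 + 47.56) / 4 = 47.7375, reported as 47.74.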

Quick Start

git clone https://github.com/srhthu/LM-CompEval-Legal.git

# Enter the repo

cd LM-CompEval-Legal

pip install -r requirements.txt

bash download_data.sh

# Download the evaluation dataset to data_hub/ljp

# Download model-generated results to runs/paper_version

The data is available on Google Drive.

There are 10 sub-tasks in total: {free,multi}-{0..4}shot, e.g., free-0shot or multi-2shot.
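The sub-task names follow the pattern `<setting>-<k>shot`. This sketch enumerates all ten names and builds a comma-separated value in the format of the `--sub_tasks` example in this README; `sub_task_names`, `SETTINGS`, and `SHOTS` are hypothetical helpers.

```python
from typing import Iterable, List

SETTINGS = ("free", "multi")  # without / with label candidates
SHOTS = range(5)  # 0..4 demonstrations


def sub_task_names(settings: Iterable[str] = SETTINGS, shots: Iterable[int] = SHOTS) -> List[str]:
    """Names of the form '<setting>-<k>shot'."""
    shot_list = list(shots)  # materialize so it can be reused for every setting
    return [f"{setting}-{k}shot" for setting in settings for k in shot_list]


ALL_SUB_TASKS = sub_task_names()
print(len(ALL_SUB_TASKS))  # 10

# Value for --sub_tasks covering the four sub-tasks used in the leaderboard score
print(",".join(sub_task_names(shots=(0, 2))))
# free-0shot,free-2shot,multi-0shot,multi-2shot
```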

Evaluate a Hugging Face model on all sub-tasks:

CUDA_VISIBLE_DEVICES=0 python main.py \

--config ./config/default_hf.json \

--output_dir ./runs/test/<model_name> \

--model_type hf \

--model <path of model>

Evaluate an OpenAI model on all sub-tasks:

CUDA_VISIBLE_DEVICES=0 python main.py \

--config ./config/default_openai.json \

--output_dir ./runs/test/<model_name> \

--model_type openai \

--model <path of model>

To evaluate only a subset of the sub-tasks, add the --sub_tasks argument, e.g.,

--sub_tasks 'free-0shot,free-2shot,multi-0shot,multi-2shot'

The Hugging Face paths of the models evaluated in the paper are:

- ChatGLM: THUDM/chatglm-6b

- BLOOMZ: bigscience/bloomz-7b1-mt

- Vicuna: lmsys/vicuna-13b-delta-v1.1

Features:

- If the evaluation process is interrupted, simply rerun it with the same arguments. Model outputs are saved as soon as they are generated, and previously finished samples are skipped on resume.

- Samples that trigger a GPU out-of-memory (OOM) error are skipped. You can adjust the configuration and run the process again (see the suggested GPU configurations below).
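The resume-and-skip behavior can be pictured with the following sketch. It is an assumption about the mechanism, not the repo's code: outputs are appended to a hypothetical JSON Lines file keyed by a sample `idx`, finished samples are skipped on restart, and samples that raise one of `skip_errors` are not recorded, so a later run retries them. `MemoryError` stands in for a GPU OOM; with PyTorch one would pass `torch.cuda.OutOfMemoryError` instead (available in recent PyTorch versions). `run_resumable` is a hypothetical name.

```python
import json
import os
import tempfile
from typing import Callable, Dict, Iterable, Tuple, Type


def run_resumable(
    samples: Iterable[Dict],
    generate: Callable[[str], str],
    out_path: str,
    skip_errors: Tuple[Type[BaseException], ...] = (MemoryError,),
) -> int:
    """Generate outputs for unfinished samples, appending one JSON line each.

    Returns the number of samples finished in this run.
    """
    done = set()
    if os.path.exists(out_path):  # resume: collect ids finished by earlier runs
        with open(out_path, encoding="utf-8") as f:
            done = {json.loads(line)["idx"] for line in f if line.strip()}
    n_new = 0
    with open(out_path, "a", encoding="utf-8") as f:
        for sample in samples:
            if sample["idx"] in done:
                continue  # already finished
            try:
                output = generate(sample["prompt"])
            except skip_errors:
                continue  # e.g. OOM: not recorded, so a later run retries it
            record = {"idx": sample["idx"], "output": output}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            f.flush()  # write each result out right away
            n_new += 1
    return n_new


if __name__ == "__main__":
    data = [{"idx": i, "prompt": f"case {i}"} for i in range(3)]

    def flaky_generate(prompt: str) -> str:
        if prompt == "case 1":
            raise MemoryError  # stand-in for a GPU OOM on a long sample
        return prompt.upper()

    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "outputs.jsonl")
        print(run_resumable(data, flaky_generate, path))  # 2 (sample 1 skipped)
        print(run_resumable(data, str.upper, path))  # 1 (only sample 1 retried)
        print(run_resumable(data, str.upper, path))  # 0 (nothing left)
```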

Suggested GPU configurations

- 7B model

  - 1 GPU with about 24 GB of memory (e.g., RTX 3090, A5000)

  - If total GPU memory is at least 32 GB, e.g., 2 × RTX 3090 or 1 × V100 (32 GB), add the --speed argument for faster inference.

- 13B model

  - 2 GPUs with at least 24 GB of memory each (e.g., 2 × V100)

  - If total GPU memory is at least 64 GB, e.g., 3 × RTX 3090 or 2 × V100, add the --speed argument for faster inference.
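A back-of-the-envelope estimate helps explain these suggestions. Assuming the weights are loaded in fp16 or bf16 (2 bytes per parameter), the sketch below computes the memory needed for the weights alone; activations and the KV cache, which grow with context length, come on top. `weight_memory_gib` is a hypothetical helper.

```python
def weight_memory_gib(n_params_billion: float, bytes_per_param: float = 2.0) -> float:
    """GiB needed to hold the weights alone (2 bytes/param for fp16 or bf16)."""
    return n_params_billion * 1e9 * bytes_per_param / 2**30


for size in (7, 13):
    print(f"{size}B model: ~{weight_memory_gib(size):.1f} GiB of fp16 weights")
# 7B model: ~13.0 GiB of fp16 weights
# 13B model: ~24.2 GiB of fp16 weights
```

Under this assumption, a 7B model's weights fit on one 24 GB GPU with some headroom for long prompts, while a 13B model's weights alone already exceed a single 24 GB card, which is consistent with the two-GPU suggestion.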

When the context is long, e.g., in the multi-4shot setting, a single 24 GB GPU may be insufficient for a 7B model. In that case, either increase the number of GPUs or reduce the demonstration length (default: 500) by modifying the demo_max_len parameter in config/default_hf.json.
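If you prefer not to edit config/default_hf.json in place, a small script can write a modified copy. This sketch assumes that `demo_max_len` is a top-level key of the JSON file (it raises `KeyError` otherwise); `write_config_with_demo_len` and the file name short_demo_hf.json are hypothetical.

```python
import json
import os
import tempfile


def write_config_with_demo_len(src: str, dst: str, demo_max_len: int) -> None:
    """Copy a JSON config to `dst`, overriding demo_max_len.

    Assumes demo_max_len is a top-level key; raises KeyError otherwise.
    """
    with open(src, encoding="utf-8") as f:
        config = json.load(f)
    if "demo_max_len" not in config:
        raise KeyError(f"demo_max_len is not a top-level key in {src}")
    config["demo_max_len"] = demo_max_len
    with open(dst, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=4, ensure_ascii=False)


if __name__ == "__main__":
    # Toy demo on a temporary file; in the repo, src would be config/default_hf.json.
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "default_hf.json")
        dst = os.path.join(tmp, "short_demo_hf.json")
        with open(src, "w", encoding="utf-8") as f:
            json.dump({"demo_max_len": 500}, f)
        write_config_with_demo_len(src, dst, 300)
        with open(dst, encoding="utf-8") as f:
            print(json.load(f)["demo_max_len"])  # 300
```

The copy could then be passed to main.py with --config, e.g., --config ./config/short_demo_hf.json, leaving the default configuration untouched.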

After evaluating models locally, you can generate the leaderboard in CSV format:

python scripts/get_result_table.py \

--exp_dir runs/paper_version \

--metric f1 \

--save_path resources/paper_version_f1.csv

Citation

@misc{shui2023comprehensive,

title={A Comprehensive Evaluation of Large Language Models on Legal Judgment Prediction},

author={Ruihao Shui and Yixin Cao and Xiang Wang and Tat-Seng Chua},

year={2023},

eprint={2310.11761},

archivePrefix={arXiv},

primaryClass={cs.CL}

}