
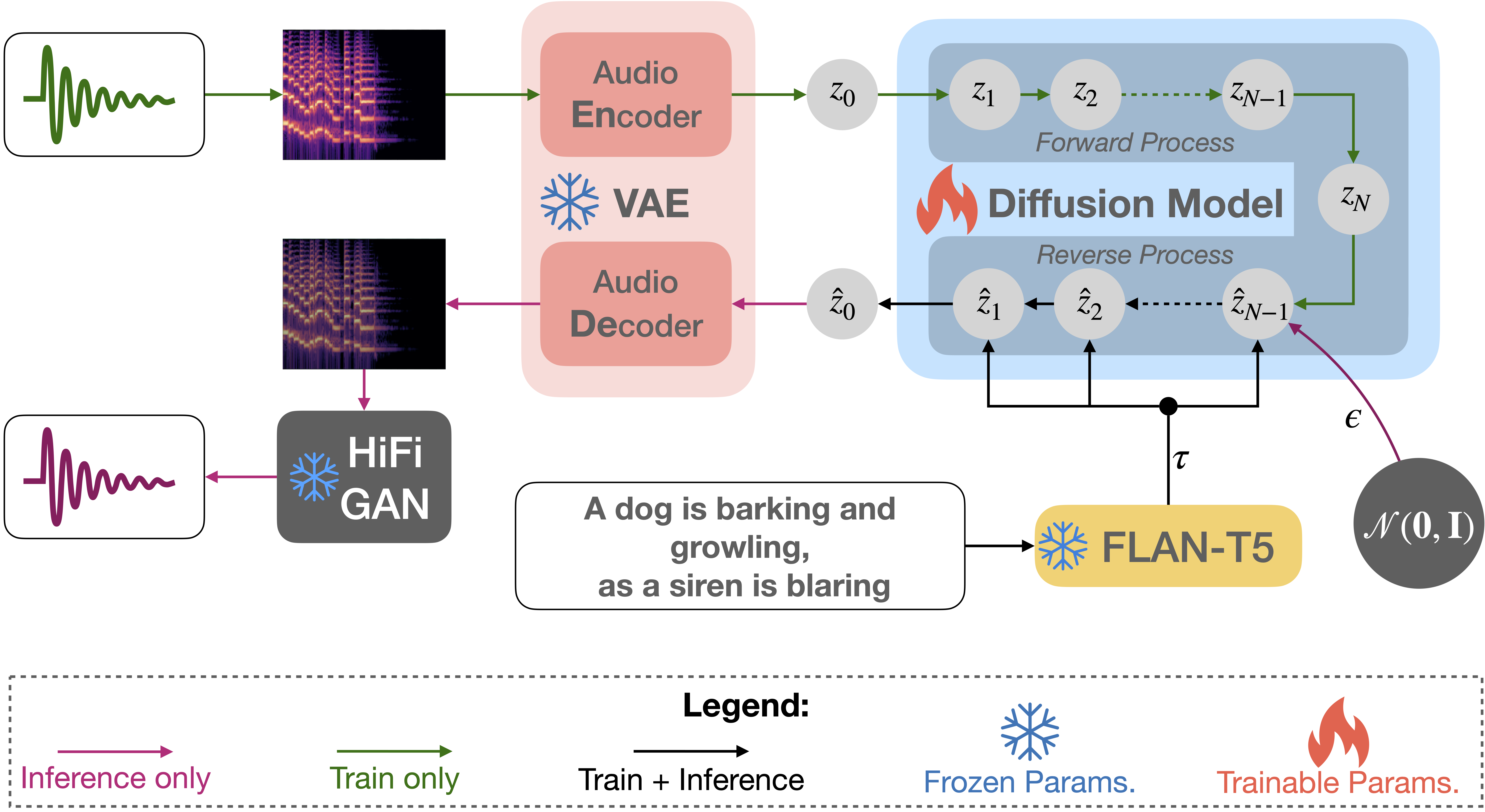

TANGO is a latent diffusion model for text-to-audio generation. Given a textual prompt, TANGO can generate realistic audio, including human sounds, animal sounds, natural and artificial sounds, and sound effects. We use the frozen instruction-tuned LLM Flan-T5 as the text encoder and train a UNet-based diffusion model for audio generation. TANGO outperforms current state-of-the-art models for audio generation on both objective and subjective metrics. We release our model, training and inference code, and pre-trained checkpoints for the research community.

Download the TANGO model and generate audio from a text prompt:

import IPython

import soundfile as sf

from tango import Tango

tango = Tango("declare-lab/tango")

prompt = "An audience cheering and clapping"

audio = tango.generate(prompt)

sf.write(f"{prompt}.wav", audio, samplerate=16000)

IPython.display.Audio(data=audio, rate=16000)

The model will be automatically downloaded and saved in cache. Subsequent runs will load the model directly from cache.
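The example above uses the prompt text itself as the file name, which can fail for prompts that contain characters such as `/` or `:`. A minimal sketch of a file-name helper follows; `prompt_to_filename` is a hypothetical name introduced here for illustration and is not part of the TANGO code.

```python
import re


def prompt_to_filename(prompt: str, ext: str = ".wav", max_len: int = 64) -> str:
    """Turn a free-form prompt into a conservative file name (hypothetical helper)."""
    # Lowercase, then collapse every run of characters outside a-z / 0-9 into "_"
    slug = re.sub(r"[^a-z0-9]+", "_", prompt.lower()).strip("_")
    # Truncate long prompts and fall back to a fixed name if nothing usable is left
    slug = slug[:max_len].rstrip("_") or "audio"
    return slug + ext
```

It could then be used as `sf.write(prompt_to_filename(prompt), audio, samplerate=16000)`.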

The generate function uses 100 steps by default to sample from the latent diffusion model. We recommend using 200 steps for better-quality audio, at the cost of increased runtime.

prompt = "Rolling thunder with lightning strikes"

audio = tango.generate(prompt, steps=200)

IPython.display.Audio(data=audio, rate=16000)
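To see how much extra time the additional steps cost on your hardware, a small timing wrapper can help. This is a generic sketch; `timed` is a hypothetical helper, not a TANGO function.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start
```

For example, `audio, seconds = timed(tango.generate, prompt, steps=200)` returns the generated audio together with the wall-clock time of that call.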

Use the generate_for_batch function to generate multiple audio samples for a batch of text prompts:

prompts = [

"A car engine revving",

"A dog barks and rustles with some clicking",

"Water flowing and trickling"

]

audios = tango.generate_for_batch(prompts, samples=2)

This will generate two samples for each of the three text prompts.
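To write the batch output to disk, each sample needs to be matched back to its prompt. The sketch below assumes that `audios[i]` holds the samples for `prompts[i]`; that layout is an assumption rather than something stated here, so check the return value of generate_for_batch before relying on it. `iter_named_samples` is a hypothetical helper.

```python
def iter_named_samples(prompts, audios):
    """Yield (prompt, sample_index, audio) triples.

    ASSUMPTION: audios[i] is the sequence of samples generated for prompts[i].
    """
    if len(prompts) != len(audios):
        raise ValueError("expected one group of samples per prompt")
    for prompt, group in zip(prompts, audios):
        for index, audio in enumerate(group):
            yield prompt, index, audio
```

Each triple can then be saved with, for example, `sf.write(f"{prompt}_{index}.wav", audio, samplerate=16000)`.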

More generated samples are shown here.

Install the dependencies listed in requirements.txt. You will also need to install the diffusers package from the directory provided in this repo:

pip install -r requirements.txt

cd diffusers

pip install -e .

Follow the instructions given in the AudioCaps repository for downloading the data. The audio locations and corresponding captions are provided in our data directory. The *.json files are used for training and evaluation.

We use the accelerate package from Hugging Face for multi-GPU training. Run accelerate config from the terminal and set up your run configuration by answering the questions asked.

You can now train TANGO on the AudioCaps dataset using:

accelerate launch train.py \

--text_encoder_name="google/flan-t5-large" \

--scheduler_name="stabilityai/stable-diffusion-2-1" \

--unet_model_config="configs/diffusion_model_config.json" \

--freeze_text_encoder --augment --snr_gamma 5

The argument --augment uses augmented data for training, as reported in our paper. We recommend training for at least 40 epochs, which is the default in train.py.

To start training from our released checkpoint, use the --hf_model argument:

accelerate launch train.py \

--hf_model "declare-lab/tango" \

--unet_model_config="configs/diffusion_model_config.json" \

--freeze_text_encoder --augment --snr_gamma 5

Check train.py and train.sh for the full list of arguments and how to use them.

Checkpoints from training are saved in the saved/*/ directory.

To perform audio generation and objective evaluation on the AudioCaps test set from a checkpoint:

CUDA_VISIBLE_DEVICES=0 python inference.py \

--original_args="saved/*/summary.jsonl" \

--model="saved/*/best/pytorch_model_2.bin"

Check inference.py and inference.sh for the full list of arguments and how to use them.
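The `saved/*/` patterns in the command above become ambiguous once more than one training run exists. A minimal sketch for picking the most recent run follows; it assumes each run directory contains a summary.jsonl file, as the command suggests, and `latest_run` is a hypothetical helper rather than part of this repository.

```python
from pathlib import Path


def latest_run(saved_dir="saved"):
    """Return the most recently modified run directory that has a summary.jsonl, or None."""
    # ASSUMPTION: runs are laid out as <saved_dir>/<run_name>/summary.jsonl
    runs = [p.parent for p in Path(saved_dir).glob("*/summary.jsonl")]
    return max(runs, key=lambda p: p.stat().st_mtime, default=None)
```

If a run is found, `run / "summary.jsonl"` and `run / "best" / "pytorch_model_2.bin"` give concrete paths to pass to --original_args and --model.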

We use wandb to log training and inference results.

Please consider citing the following article if you found our work useful:

@article{ghosal2023tango,

title={Text-to-Audio Generation using Instruction Tuned LLM and Latent Diffusion Model},

author={Deepanway Ghosal and Navonil Majumder and Ambuj Mehrish and Soujanya Poria},

journal={arXiv preprint arXiv:2304.},

year={2023}

}

We borrow the code in audioldm and audioldm_eval from the AudioLDM repositories. We thank the AudioLDM team for open-sourcing their code.