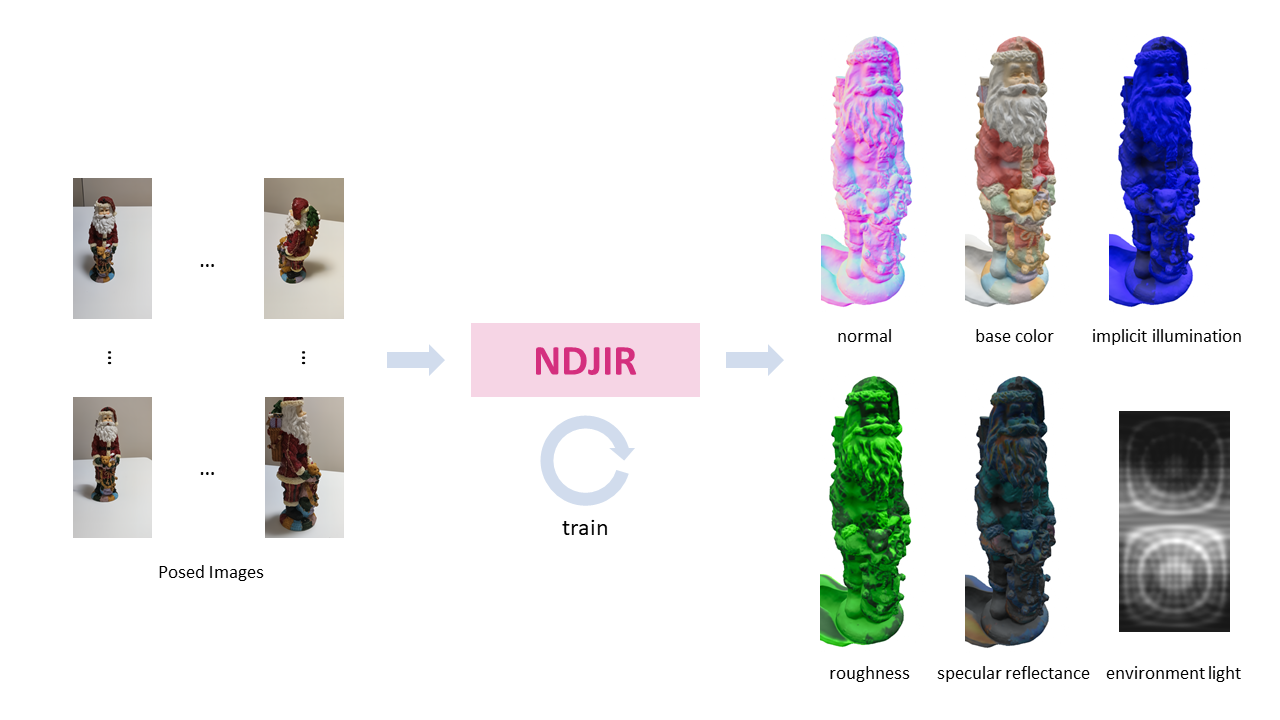

Neural direct and joint inverse rendering (NDJIR) jointly decomposes an object in a scene into geometry (mesh), lights (environment light and implicit illumination), and materials (base color, roughness, and specular reflectance) given multi-view (posed) images. NDJIR directly uses the physically-based rendering equation, so we can smoothly import the decomposed geometry with materials into existing DCC tools.
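For reference, the rendering equation in its general form is shown below. This is the standard textbook statement, not a transcription of NDJIR's exact formulation. How the decomposed quantities map onto its terms (materials into the BRDF $f_r$, lights into the incoming radiance $L_i$) is an assumption made here for orientation only.

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\mathbf{n} \cdot \omega_i)\, \mathrm{d}\omega_i
$$

Here $L_o$ is the outgoing radiance at surface point $\mathbf{x}$ in direction $\omega_o$, $L_e$ is emitted radiance, $\mathbf{n}$ is the surface normal, and $\Omega$ is the hemisphere around $\mathbf{n}$.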

| PBR | Base color (light-baked) | PBR (light-baked) |
|---|---|---|
| bears_pbr_mesh.mp4 | bears_mesh_ilbaked.mp4 | bears_pbr_mesh_ilbaked.mp4 |
| santa_pbr_mesh.mp4 | santa_mesh_ilbaked.mp4 | santa_pbr_mesh_ilbaked.mp4 |
| camel_pbr_mesh.mp4 | camel_mesh_ilbaked.mp4 | camel_pbr_mesh_ilbaked.mp4 |

Training assumes the DTU MVS dataset, but NDJIR is not limited to it. To use a custom dataset, go to Custom Dataset.

```bash
export NNABLA_VER=1.29.0 \
    && docker build \
        --build-arg http_proxy=${http_proxy} \
        --build-arg NNABLA_VER=${NNABLA_VER} \
        -t ndjir/train \
        -f docker/Dockerfile .
```

Run the following commands to download the DTU MVS dataset:

```bash
wget https://www.dropbox.com/s/ujmakiaiekdl6sh/DTU.zip
unzip DTU.zip
```

For mesh evaluation, we rely on this Python script (see the GitHub repository here).
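As background on what such an evaluation measures, here is a minimal sketch of a symmetric Chamfer distance between two point sets. The evaluation script used later in this README is named `evaluate_chamfer_dtumvs.py`, which suggests a Chamfer-style metric, but this sketch is not that script: the function names are hypothetical, it uses brute-force nearest-neighbor search, and it omits the masking and subsampling that a DTU evaluation typically applies.

```python
import math


def nearest_distance(point, cloud):
    """Distance from `point` to its nearest neighbor in `cloud` (brute force)."""
    return min(math.dist(point, other) for other in cloud)


def chamfer_distance(predicted, reference):
    """Return (accuracy, completeness, overall) for two 3D point sets.

    accuracy:     mean distance from predicted points to the reference set
    completeness: mean distance from reference points to the predicted set
    overall:      average of the two
    """
    accuracy = sum(nearest_distance(p, reference) for p in predicted) / len(predicted)
    completeness = sum(nearest_distance(q, predicted) for q in reference) / len(reference)
    return accuracy, completeness, 0.5 * (accuracy + completeness)


# Toy example: the prediction misses one of the two reference points.
predicted = [(0.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(chamfer_distance(predicted, reference))
```

Brute force is quadratic in the number of points, so a real evaluation over dense scans would need a spatial index such as a k-d tree.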

Download the reference datasets (`SampleSet.zip` and `Points.zip`) from this page, then run the following commands:

```bash
mkdir DTUMVS_ref
unzip -r SampleSet.zip .
unzip -r Points.zip .
mv SampleSet Points DTUMVS_ref
```

To train on a DTU scan, run:

```bash
python python/train.py --config-name default \
    device_id=0 \
    monitor_base_path=<monitor_base_path> \
    data_path=<path_to_DTU_scanNN>
```

Mesh extraction and its evaluation are included in the training.
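To train several scans in sequence, the command above can be generated from a small helper. The sketch below only builds and prints the command lines. The function name, the scan IDs, the `DTU/scan<id>` directory layout, and the `results` output path are all assumptions for illustration, so adjust them to your setup.

```python
import shlex


def build_train_command(scan_dir, monitor_base_path, device_id=0, config_name="default"):
    """Build the argument list for python/train.py with the overrides shown above."""
    return [
        "python", "python/train.py",
        "--config-name", config_name,
        f"device_id={device_id}",
        f"monitor_base_path={monitor_base_path}",
        f"data_path={scan_dir}",
    ]


# Hypothetical scan IDs and paths; print one shell-ready command per scan.
for scan_id in (24, 37, 40):
    command = build_train_command(f"DTU/scan{scan_id}", "results")
    print(shlex.join(command))
```

Each printed line can be pasted into a shell, or the argument list can be passed to `subprocess.run(command, check=True)` to launch training directly.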

At the end of training, the mesh is extracted using Marching Cubes with a grid size of 512 by default. To extract the mesh explicitly, set the config and trained model paths properly, then run:

```bash
python python/extract_by_mc.py \
    --config-path <results_path> \
    --config-name config \
    device_id=0 \
    model_load_path=<results_path>/model_01499.h5 \
    extraction.grid_size=512
```

The OBJ file is written to `<results_path>`.
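To give a feel for what the grid size controls, here is a small sketch of the first stage of Marching Cubes: sampling a scalar field on a regular grid and finding the cells whose corner values straddle the zero level, which are the only cells where triangles are emitted. It assumes the field is a signed distance function and uses an analytic sphere as a stand-in for the trained model. All names are hypothetical, and this is not the code in `extract_by_mc.py`.

```python
import math


def sphere_sdf(x, y, z, radius=0.5):
    """Signed distance to a sphere centered at the origin (negative inside)."""
    return math.sqrt(x * x + y * y + z * z) - radius


def grid_vertex_count(grid_size):
    """Number of field evaluations needed for a cubic grid."""
    return grid_size ** 3


def count_surface_cells(sdf, grid_size, bound=1.0):
    """Count grid cells whose eight corners straddle the zero level set."""
    step = 2.0 * bound / (grid_size - 1)
    coords = [-bound + i * step for i in range(grid_size)]
    # Evaluate the field once per grid vertex.
    values = [[[sdf(x, y, z) for z in coords] for y in coords] for x in coords]
    count = 0
    for i in range(grid_size - 1):
        for j in range(grid_size - 1):
            for k in range(grid_size - 1):
                corners = [
                    values[i + di][j + dj][k + dk]
                    for di in (0, 1) for dj in (0, 1) for dk in (0, 1)
                ]
                # A sign change among the corners means the surface crosses the cell.
                if min(corners) < 0.0 < max(corners):
                    count += 1
    return count


for size in (8, 16, 32):
    print(size, grid_vertex_count(size), count_surface_cells(sphere_sdf, size))
```

The number of field evaluations grows cubically with the grid size (a 512 grid needs 134,217,728 of them), while the number of surface cells, and hence mesh detail, grows roughly quadratically. Lowering `extraction.grid_size` therefore trades mesh resolution for extraction time and memory.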

To evaluate the extracted mesh, run:

```bash
python python/evaluate_chamfer_dtumvs.py \
    --config-path <results_path> \
    --config-name config \
    valid.dtumvs.mesh_path=<path to extracted mesh> \
    valid.dtumvs.scan=scan<id> \
    valid.dtumvs.ref_dir=DTUMVS_ref \
    valid.dtumvs.vis_out_dir=<training_result_path> \
    data_path=<data_path>
```

Set the config and trained model properly, then run neural rendering:

```bash
python python/render_image.py \
    device_id=0 \
    valid.n_rays=4000 \
    valid.n_down_samples=0 \
    model_load_path=<results_path>/model_01499.h5 \
    data_path=<data_path>
```

Now that we have the neural-rendered images, evaluate them with:

```bash
python scripts/evaluate_rendered_images.py \
    -d_rd <neural_rendered_image_dir> \
    -d_gt <gt_image_dir> \
    -d_ma <gt_mask_dir>
```

To render PBR images, follow these steps:

- Smooth mesh (optional)

- Rebake light distribution (optional)

- Create texture map

- Create open3d camera parameters (optional)

- View PBR-mesh

```bash
docker build --build-arg http_proxy=${http_proxy} \
    -t ndjir/post \
    -f docker/Dockerfile.post .
```

Run the script for smoothing the mesh, rebaking light, and texturing:

```bash
bash run_all_postprocesses.sh <result_path> <model_iters> <trimmed or raw> <filter_iters>
```

When using Open3D as a viewer, we can create Open3D camera parameters from `cameras.npy`:

```bash
python scripts/create_o3d_camera_parameters.py -f <path to cameras.npy>
```

To view the PBR mesh, run:

```bash
python scripts/viewer_pbr.py \
    -fc <base_color_mesh> \
    -fuv <triangle_uvs> \
    -fr <roughness_texture> \
    -fs <specular_reflectance_texture>
```

To save images only, add the path to a camera parameter file or to a directory containing such files:

```bash
python scripts/viewer_pbr.py \
    -fc <base_color_mesh> \
    -fuv <triangle_uvs> \
    -fr <roughness_texture> \
    -fs <specular_reflectance_texture> \
    -c <o3d_camera_parameter>
```

The pipeline for using a custom dataset is as follows:

1. Prepare images
2. Deblur images
3. Mask creation (i.e., background matting)
4. Estimate camera parameters
5. Normalize camera poses
6. Train with custom dataset for decomposition
7. Physically-based render

Instead of taking photos, we can also use a video of an object. Use the batch script to execute steps 1-5 above.
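As an illustration of the pose-normalization step, the sketch below translates and scales a set of camera centers so that they fit inside a sphere of a chosen radius. This README does not describe the normalization the batch script actually performs. Fitting the scene to a unit-scale region is a common convention in neural surface reconstruction, but the function name, the use of camera centers (rather than the object itself) as the reference, and the `target_radius` parameter are all assumptions here.

```python
import math


def normalize_camera_centers(centers, target_radius=1.0):
    """Center camera positions on their centroid and scale them into a sphere.

    Returns the normalized centers together with the centroid and scale, so the
    same transform can be applied to other scene quantities.
    """
    n = len(centers)
    centroid = tuple(sum(c[i] for c in centers) / n for i in range(3))
    shifted = [tuple(c[i] - centroid[i] for i in range(3)) for c in centers]
    max_dist = max(math.sqrt(sum(v * v for v in p)) for p in shifted)
    if max_dist == 0.0:
        raise ValueError("all camera centers coincide; cannot determine a scale")
    scale = target_radius / max_dist
    normalized = [tuple(v * scale for v in p) for p in shifted]
    return normalized, centroid, scale


# Toy example with two cameras on the x-axis.
normalized, centroid, scale = normalize_camera_centers([(0, 0, 0), (4, 0, 0)])
print(normalized, centroid, scale)
```

Returning the centroid and scale alongside the normalized positions keeps the transform invertible, which matters if results need to be mapped back to the original coordinate frame.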

```bash
docker build --build-arg http_proxy=${http_proxy} \
    -t ndjir/pre \
    -f docker/Dockerfile.pre .
```

Create the scene directory:

```bash
mkdir -p <data_path>
```

Take videos and put them under `<data_path>`.

Tips:

- Take a video covering a 360° view around the object
- Record at two elevation angles: 0° and 45° relative to the ground
- Make sure all parts of the object are included in each image or video
- Move slowly when taking video and/or use shake correction
- Avoid changing the focal length much

If taking photos of the object(s), put all images in `<data_path>/image`, then run the script:

```bash
bash scripts/rename_images.sh <data_path>/image
```

Run the script for extracting/deblurring images, creating masks, and estimating/normalizing camera parameters:

```bash
bash scripts/run_all_preprocesses.sh <data_path> <n_images:100> <single_camera:1> <colmap_use_mask:0>
```

To train with the custom dataset, run:

```bash
python python/train.py --config-name custom \
    device_id=0 \
    monitor_base_path=<monitor_base_path> \
    data_path=<data_path>
```

With the default settings, training takes about 3.5 hours using 100 images on an A100 GPU.

Now that we have geometry and spatially-varying materials, go to Physically-based Rendering.

```bibtex
@misc{https://doi.org/10.48550/arxiv.2302.00675,
  url = {https://arxiv.org/abs/2302.00675},
  author = {Yoshiyama, Kazuki and Narihira, Takuya},
  title = {NDJIR: Neural Direct and Joint Inverse Rendering for Geometry, Lights, and Materials of Real Object},
  year = {2023}
}
```