Chong Mou, Xintao Wang, Jiechong Song, Ying Shan, Jian Zhang

- [2023/7/6] Paper is available here.

In this paper, we aim to develop a fine-grained image editing scheme based on the strong correspondence of intermediate features in diffusion models. To this end, we design a classifier-guidance-based method that transforms editing signals into gradients via a feature correspondence loss and uses these gradients to modify the intermediate representation of the diffusion model. The feature correspondence loss is computed at multiple scales to account for both semantic and geometric alignment. In addition, a cross-branch self-attention mechanism maintains consistency between the original image and the editing result.
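As a rough illustration of the multi-scale feature correspondence idea described above, the sketch below scores how well features at edited (target) positions match features at the original (source) positions. It is not the implementation from the paper: the function names (`cosine`, `correspondence_loss`, `multiscale_loss`), the dictionary-based feature maps, the `1 - cosine` form of the loss, and the per-scale weights are all assumptions made for illustration. In a real pipeline the features would be intermediate activations of the diffusion model, and the gradient of such a loss with respect to the latent would be obtained by automatic differentiation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def correspondence_loss(ref_feats, gen_feats, pairs):
    """Mean (1 - cosine similarity) over matched positions at one scale.

    ref_feats / gen_feats: dicts mapping a (row, col) position to a feature
    vector (hypothetical stand-ins for intermediate feature maps).
    pairs: list of (source_position, target_position) tuples, i.e. where
    content sits in the original image and where the edit should place it.
    """
    sims = [cosine(ref_feats[src], gen_feats[dst]) for src, dst in pairs]
    return 1.0 - sum(sims) / len(sims)


def multiscale_loss(ref_pyramid, gen_pyramid, pairs_per_scale, weights):
    """Weighted sum of the per-scale losses (weights are an assumption)."""
    return sum(
        w * correspondence_loss(ref, gen, pairs)
        for ref, gen, pairs, w in zip(
            ref_pyramid, gen_pyramid, pairs_per_scale, weights
        )
    )


# Toy usage: content at (0, 0) and (0, 1) should move one row down and one
# column to the right.
ref = {(0, 0): [1.0, 0.0], (0, 1): [0.0, 1.0]}
gen = {(1, 1): [2.0, 0.0], (1, 2): [0.0, 3.0]}
pairs = [((0, 0), (1, 1)), ((0, 1), (1, 2))]
loss = correspondence_loss(ref, gen, pairs)  # close to 0: features align
```

A lower value means the generated features at the target positions point in the same direction as the reference features at the source positions; combining several feature resolutions is one way to reflect both coarse semantic and finer geometric agreement.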

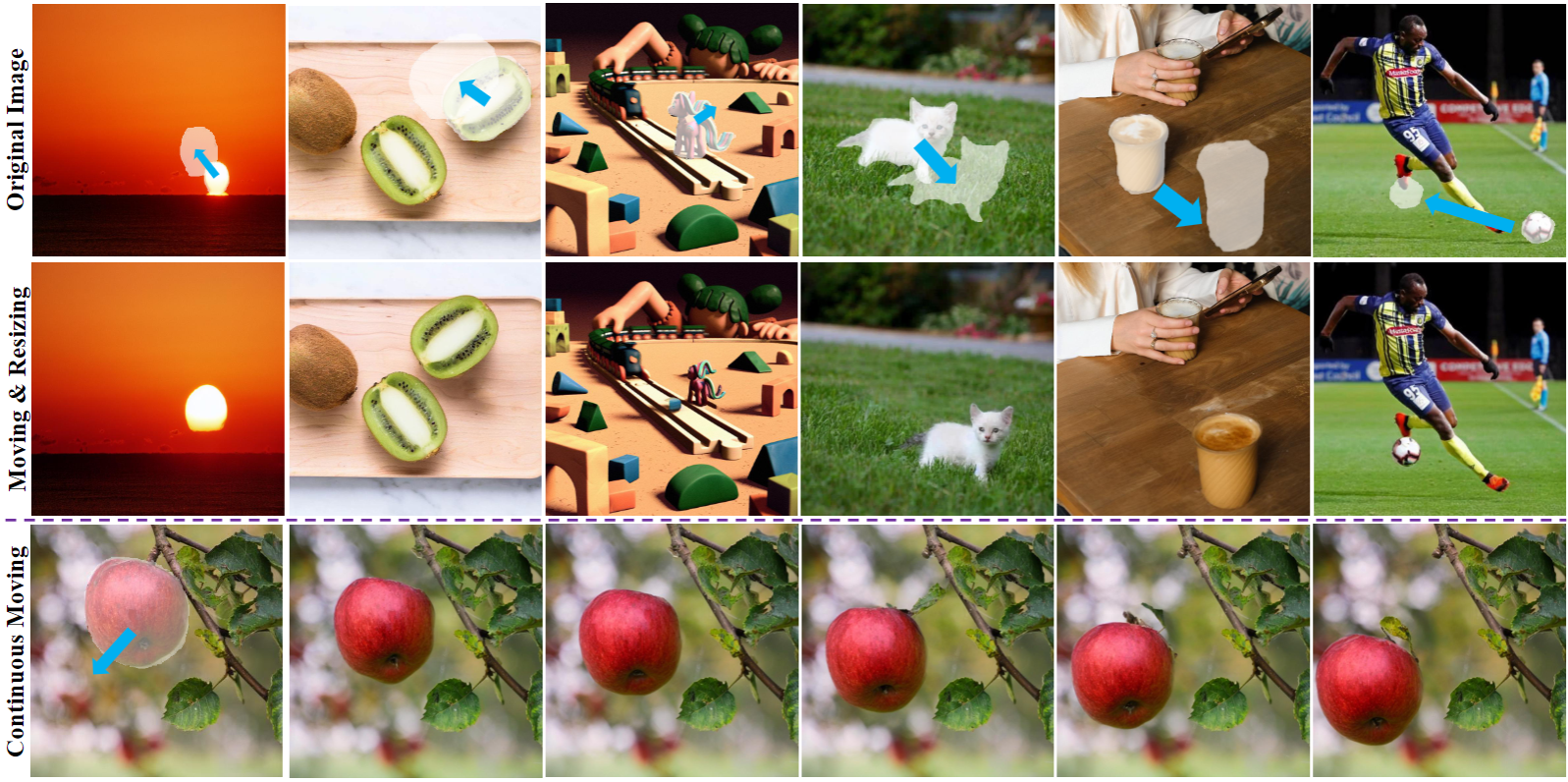

- Object Moving & Resizing

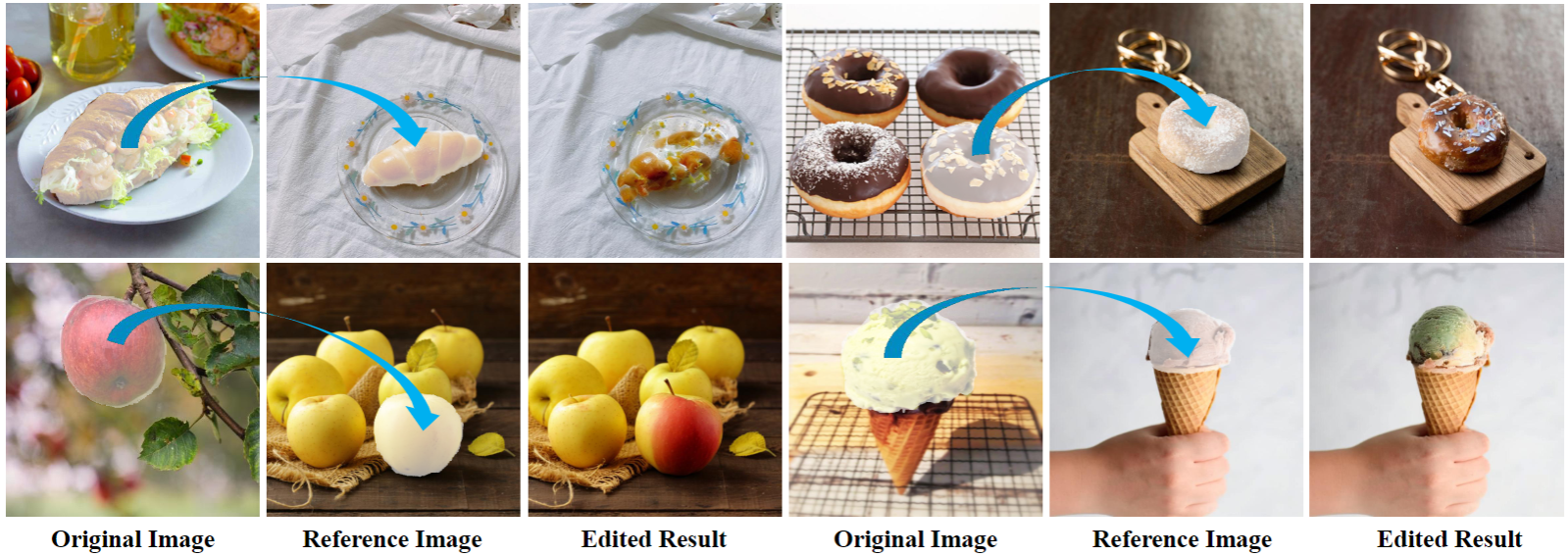

- Object Appearance Replacement

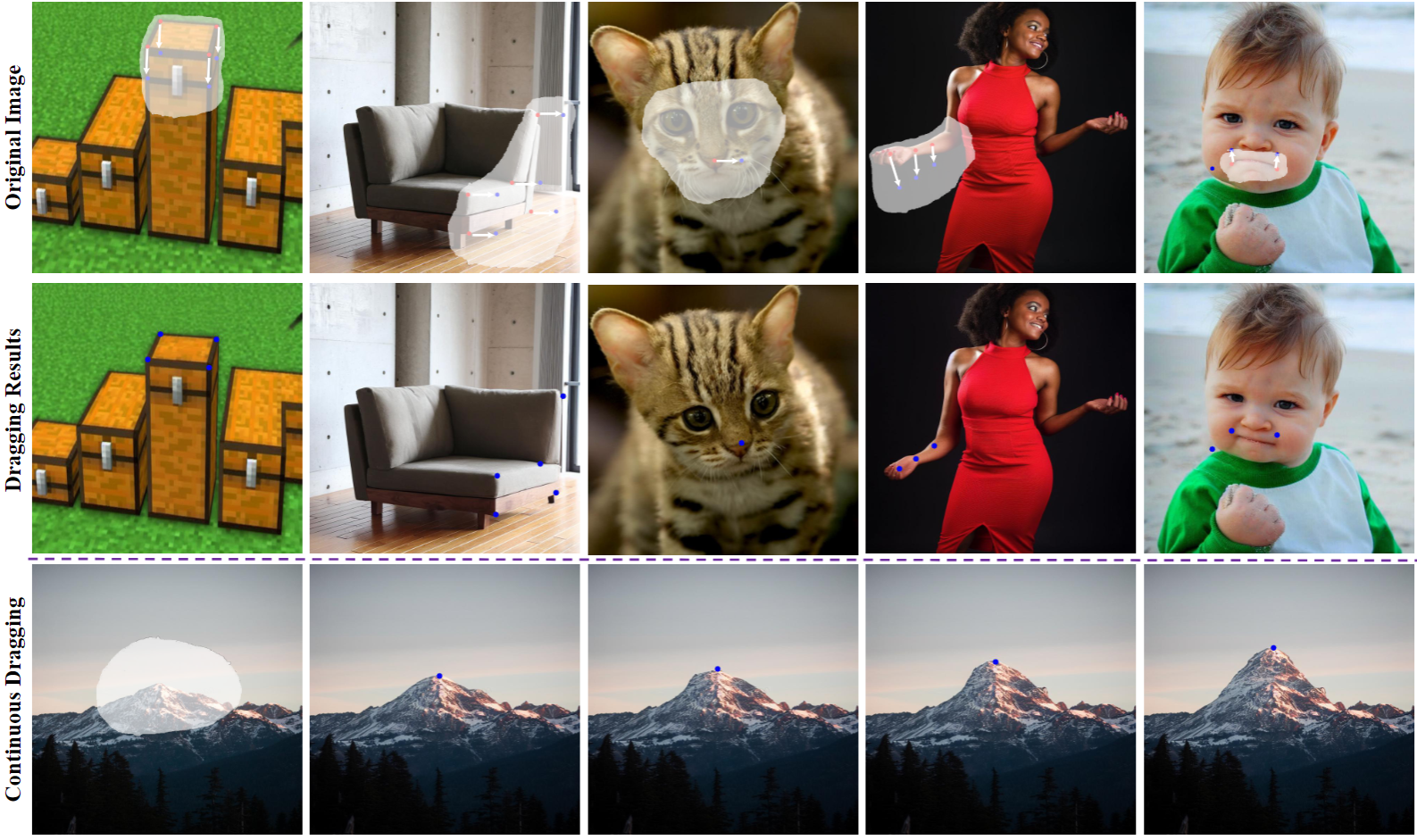

- Content Dragging

[1] Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold

[2] DragDiffusion: Harnessing Diffusion Models for Interactive Point-based Image Editing (the first attempt at, and presentation of, point dragging with diffusion models)

[3] Diffusion Self-Guidance for Controllable Image Generation