i-SRT: Aligning Large Multimodal Models for Videos by Iterative Self-Retrospective Judgment

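Daechul Ahn<sup>\*1,3</sup>, Yura Choi<sup>\*1,3</sup>, San Kim<sup>1,3</sup>, Youngjae Yu<sup>1</sup>, Dongyeop Kang<sup>2</sup>, Jonghyun Choi<sup>3,†</sup> (\*Equal Contribution)

<sup>1</sup>Yonsei University, <sup>2</sup>University of Minnesota, <sup>3</sup>Seoul National University

<sup>†</sup>Corresponding Author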

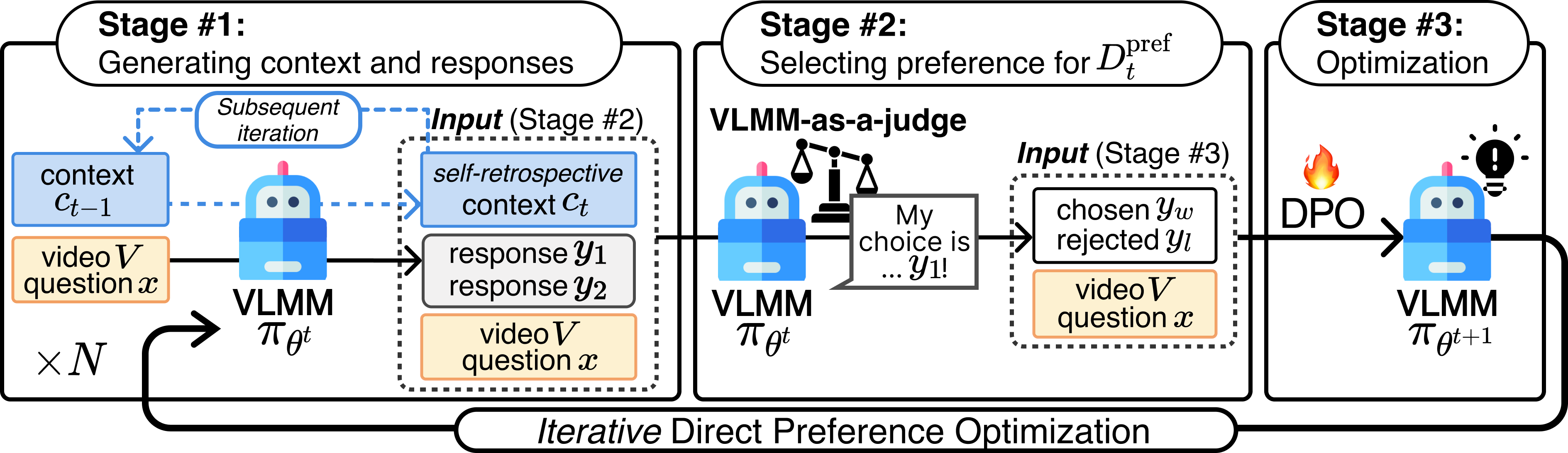

Abstract: Aligning Video Large Multimodal Models (VLMMs) faces challenges such as modality misalignment and verbose responses. Although iterative approaches such as self-rewarding or iterative direct preference optimization (DPO) have recently shown significant improvements in language model alignment, particularly on reasoning tasks, applying such self-alignment to large video-language models often yields lengthy and irrelevant responses. To address these challenges, we propose a novel method, iterative self-retrospective judgment (i-SRT), which employs self-retrospection to enhance both response generation and preference modeling. By revisiting and evaluating already generated content and preferences in a loop, i-SRT improves the alignment between the textual and visual modalities, reduces verbosity, and enhances content relevance. Our empirical evaluations across diverse video question answering benchmarks demonstrate that i-SRT significantly outperforms prior methods. We are committed to open-sourcing our code, models, and datasets to encourage further investigation.
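For readers new to DPO, the generic objective that iterative DPO re-applies in each round is shown below. Here $x$ is the input (for a video model, the video together with the question), $y_w$ and $y_l$ are the preferred and dispreferred responses, $\pi_\theta$ is the model being trained, $\pi_{\text{ref}}$ is a frozen reference model, $\beta$ controls how far $\pi_\theta$ may drift from $\pi_{\text{ref}}$, and $\sigma$ is the logistic function. This is the standard formulation only, included as background; i-SRT's exact training objective may differ from it.

```math
\mathcal{L}_{\text{DPO}}(\theta) = -\,\mathbb{E}_{(x,\,y_w,\,y_l)\sim\mathcal{D}}\left[\log \sigma\!\left(\beta \log\frac{\pi_\theta(y_w \mid x)}{\pi_{\text{ref}}(y_w \mid x)} \;-\; \beta \log\frac{\pi_\theta(y_l \mid x)}{\pi_{\text{ref}}(y_l \mid x)}\right)\right]
```

In iterative variants, round $t$ typically sets $\pi_{\text{ref}} = \pi_{\theta_{t-1}}$ and rebuilds $\mathcal{D}$ from the previous model's own responses and judgments, which is the loop that self-rewarding and iterative DPO rely on.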

- [07/02] Uploaded model checkpoint and evaluation code

- [06/17] Created repository and updated README

- Using the script from LLaVA-Hound-DPO (replace `YOUR_PATH` with your test video directory):

```bash
TEST_VIDEO_DIR=YOUR_PATH bash setup/setup_test_data.sh
```

- Or download the test data manually from this link.
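The setup command passes the path with the `VAR=value command` prefix form, which sets `TEST_VIDEO_DIR` for that single command only. The sketch below illustrates this scoping, assuming (not confirmed here) that `setup/setup_test_data.sh` reads `TEST_VIDEO_DIR` from its environment. A throwaway `bash -c` child stands in for the script, and `/tmp/isrt_test_videos` is a hypothetical placeholder path.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for a script that reads TEST_VIDEO_DIR from its environment.
child='echo "child sees: ${TEST_VIDEO_DIR:-<unset>}"'

unset TEST_VIDEO_DIR

# 1) Prefix form: the variable is visible to this one command only.
TEST_VIDEO_DIR=/tmp/isrt_test_videos bash -c "$child"
# prints: child sees: /tmp/isrt_test_videos
echo "parent sees: ${TEST_VIDEO_DIR:-<unset>}"
# prints: parent sees: <unset>

# 2) A plain assignment on its own line is NOT passed to child processes.
TEST_VIDEO_DIR=/tmp/isrt_test_videos
bash -c "$child"
# prints: child sees: <unset>

# 3) export makes the value visible to every later command in this shell.
export TEST_VIDEO_DIR
bash -c "$child"
# prints: child sees: /tmp/isrt_test_videos
```

In practice, either keep the one-line prefix form shown in the setup step or `export TEST_VIDEO_DIR` once if later commands should see the same path; a bare assignment on a separate line would silently leave the child script without it.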

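```bash
# out-domain video question answering
# arg 1: name for the results output ("results")
# arg 2: model checkpoint to evaluate (Hugging Face model ID)
bash Evaluation/pipeline/outdomain_test_pipeline.sh \
    results \
    SNUMPR/isrt_video_llava_7b_9th
```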

- Coming soon

- Coming soon

This project is licensed under the GNU General Public License.

- LLaVA-Hound-DPO: our code is built upon the LLaVA-Hound-DPO codebase.