A PyTorch implementation of "Learning Energy-Based Models by Diffusion Recovery Likelihood"

Paper: https://arxiv.org/pdf/2012.08125, official TF2 code: https://github.com/ruiqigao/recovery_likelihood

If you find any problems or bugs in my implementation, please open an issue. Thank you!

The authors use 8 TPUs to train on CIFAR10 for 40+ hours and evaluate FID using 10,000 generated samples.

Due to computational constraints, I use num_res_blocks=2 and n_batch_train=64.

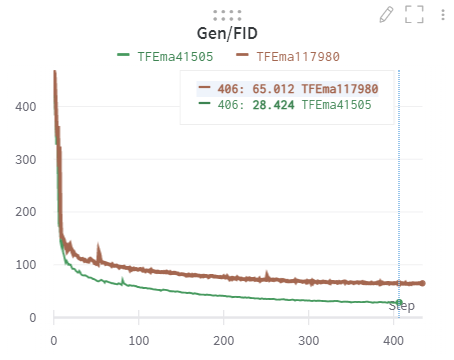

My results (the two rows differ mainly in the number of generated samples used for evaluation):

| Generated samples for evaluation | FID | Time |
|---|---|---|
| 1000 | 65 | 5 days 1.8 hours |
| 10000 | 28.42 | 6 days 11.5 hours |
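FID is the Fréchet distance between Gaussians fitted to Inception features of real and generated images: FID = ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). Because the means and covariances are estimated from a finite number of samples, the estimate is biased upward when fewer samples are used, which plausibly accounts for part of the gap between the two rows above. The sketch below computes this distance from precomputed feature arrays with NumPy. `frechet_distance` is a hypothetical helper (not the IGEBM or torch_fidelity code), and random Gaussian features stand in for real Inception features purely for illustration.

```python
import numpy as np


def frechet_distance(feats_real: np.ndarray, feats_fake: np.ndarray) -> float:
    """Fréchet distance between Gaussians fitted to two (N, D) feature arrays."""
    mu1, mu2 = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    sigma1 = np.cov(feats_real, rowvar=False)
    sigma2 = np.cov(feats_fake, rowvar=False)
    # Tr(sqrtm(S1 @ S2)) equals the sum of square roots of the eigenvalues of S1 @ S2,
    # which are real and non-negative for PSD S1, S2 (clip away numerical noise).
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


# Toy illustration of the sample-size bias (stand-in features, NOT Inception features).
rng = np.random.default_rng(0)
dim = 16  # stand-in for the 2048-d Inception pool features
real = rng.normal(size=(10_000, dim))
fid_by_n = {}
for n in (1_000, 10_000):
    fake = rng.normal(size=(n, dim))  # drawn from the same distribution as `real`
    fid_by_n[n] = frechet_distance(real, fake)
print(fid_by_n)  # the 1,000-sample estimate should come out larger
```

Both "fake" sets come from exactly the same distribution as the "real" set, so any nonzero FID here is pure estimation error, and it shrinks as the number of samples grows.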

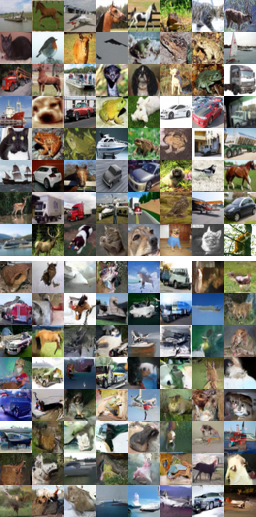

Generated samples (Top 8 rows are real data, bottom 8 rows are generated images):

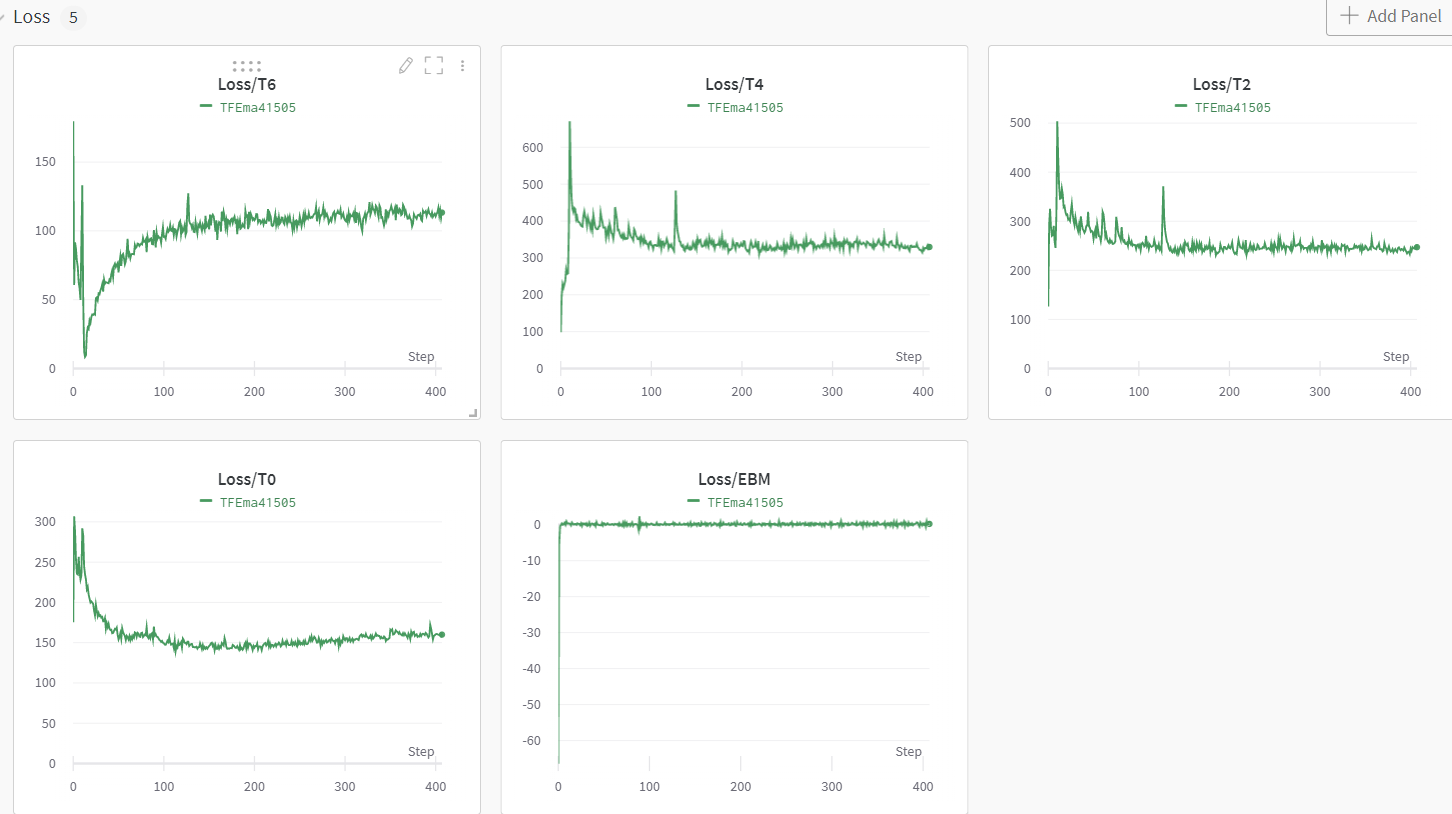

Curves of some training variables:

You can also try the notebook pytorch_difrec.ipynb.

I have implemented most of the main parts of the algorithm.

- For simplicity, I use the Wide-ResNet 28-10 from JEM instead of implementing the ResNet model described in the paper.

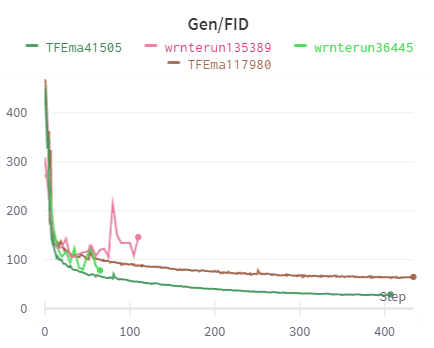

- I didn't implement EMA (Exponential Moving Average, used in the official code but not mentioned in the paper). It may 1) improve performance by a large margin, or 2) merely produce a fluctuating, non-smooth FID curve (verified with the TF code).

- I'm not sure whether my Spectral Norm (SN) implementation is correct; I could not get the SN layer to work in my code.

- I use the code from IGEBM to compute FID/Inception Score. It is almost the same as the paper's evaluation but may still differ slightly. Computing FID/IS with TF is awful; I suggest using torch_fidelity instead. See my example at https://github.com/sndnyang/Diffusion_ViT/blob/master/utils/eval_quality.py#L56, where the function eval_is_fid expects images with values in the range [0, 255].
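The EMA mentioned above is commonly implemented as a frozen copy of the model whose parameters are updated after every optimizer step as shadow = decay * shadow + (1 - decay) * param. The sketch below is a minimal PyTorch version under that assumption. The `EMA` class is hypothetical (it is not part of this repo or the official TF code), and the default decay of 0.9999 is an assumed value, not the official setting.

```python
import copy

import torch
import torch.nn as nn


class EMA:
    """Hypothetical helper: exponential moving average of a model's parameters."""

    def __init__(self, model: nn.Module, decay: float = 0.9999):  # decay value is an assumption
        self.decay = decay
        # Frozen copy holding the averaged weights; meant for sampling/evaluation only.
        self.shadow = copy.deepcopy(model).eval()
        for p in self.shadow.parameters():
            p.requires_grad_(False)

    @torch.no_grad()
    def update(self, model: nn.Module) -> None:
        # shadow <- decay * shadow + (1 - decay) * current parameters
        for s, p in zip(self.shadow.parameters(), model.parameters()):
            s.mul_(self.decay).add_(p.detach(), alpha=1.0 - self.decay)
        # Buffers (e.g. BatchNorm running statistics) are copied, not averaged.
        for s, b in zip(self.shadow.buffers(), model.buffers()):
            s.copy_(b)


# Usage: call ema.update(model) once after every optimizer step.
torch.manual_seed(0)
model = nn.Linear(4, 1)
ema = EMA(model, decay=0.99)
opt = torch.optim.SGD(model.parameters(), lr=0.1)
for _ in range(10):
    loss = model(torch.randn(8, 4)).pow(2).mean()
    opt.zero_grad()
    loss.backward()
    opt.step()
    ema.update(model)
```

With this setup, samples and FID would be computed with `ema.shadow` instead of `model`, while gradients keep flowing only through `model`.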
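As a possible cross-check for the Spectral Norm bullet, PyTorch ships a built-in wrapper, `torch.nn.utils.spectral_norm`, which divides a layer's weight by a power-iteration estimate of its largest singular value on every forward pass in training mode. The sketch below only wraps existing layers with it and checks the resulting spectral norm. Whether this matches the exact SN variant used in the paper is an assumption, and `add_spectral_norm` is a hypothetical helper, not code from this repo.

```python
import torch
import torch.nn as nn
from torch.nn.utils import spectral_norm


def add_spectral_norm(module: nn.Module) -> nn.Module:
    """Recursively wrap every Conv2d/Linear layer with PyTorch's built-in spectral norm."""
    for name, child in list(module.named_children()):
        if isinstance(child, (nn.Conv2d, nn.Linear)):
            setattr(module, name, spectral_norm(child))
        else:
            add_spectral_norm(child)
    return module


torch.manual_seed(0)
net = add_spectral_norm(nn.Sequential(nn.Linear(8, 8), nn.ReLU(), nn.Linear(8, 1)))
x = torch.randn(4, 8)
for _ in range(200):  # each training-mode forward runs one power-iteration step
    net(x)
sigma = torch.linalg.svdvals(net[0].weight.detach())[0]
print(float(sigma))  # should be close to 1.0 once the power iteration has converged
```

The unnormalized weight is stored as `weight_orig`, so an optimizer still updates the raw parameters while the forward pass sees the normalized ones.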

pip install -r requirements.txt

I believe any version of torch >= 1.3.1 should work.

To compute Inception Score/FID, you need to install tensorflow-gpu; the CPU version is too slow.


For Inception Score evaluation, I suggest using miniconda or anaconda and installing tensorflow in a conda environment.

CIFAR10

python DRLTrainer.py --gpu-id=?

Since Wide-ResNet is used, you can change the widen factor and the depth. You can also try other architectures.
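In the standard Wide-ResNet (the one used in JEM), the depth must equal 6n + 4, where n is the number of residual blocks in each of the three stages, and the stage widths are 16k, 32k and 64k after a 16-channel stem, with k the widen factor. The helper below is a hypothetical illustration of that rule, not code from this repo; the actual argument names in DRLTrainer.py may differ.

```python
from typing import List, Tuple


def wrn_config(depth: int, widen_factor: int) -> Tuple[int, List[int]]:
    """Return (blocks per stage, channel widths) for a Wide-ResNet-depth-widen_factor."""
    if depth < 10 or (depth - 4) % 6 != 0:
        raise ValueError(f"Wide-ResNet depth must be 6n + 4 with n >= 1, got {depth}")
    n = (depth - 4) // 6  # residual blocks in each of the 3 stages
    k = widen_factor
    widths = [16, 16 * k, 32 * k, 64 * k]  # stem, then stages 1-3
    return n, widths


print(wrn_config(28, 10))  # WRN-28-10: (4, [16, 160, 320, 640])
print(wrn_config(16, 4))   # a smaller, cheaper variant: (2, [16, 64, 128, 256])
```

Lowering either factor reduces the number of blocks or channels, which is one way to trade FID for training time under limited compute.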

No pretrained models are provided.

If you find their work helpful to your research :), please cite:

@article{gao2020learning,
  title={Learning Energy-Based Models by Diffusion Recovery Likelihood},
  author={Gao, Ruiqi and Song, Yang and Poole, Ben and Wu, Ying Nian and Kingma, Diederik P},
  journal={International Conference on Learning Representations (ICLR)},
  year={2021}
}