Paper | Demo | Checkpoints | Datasets

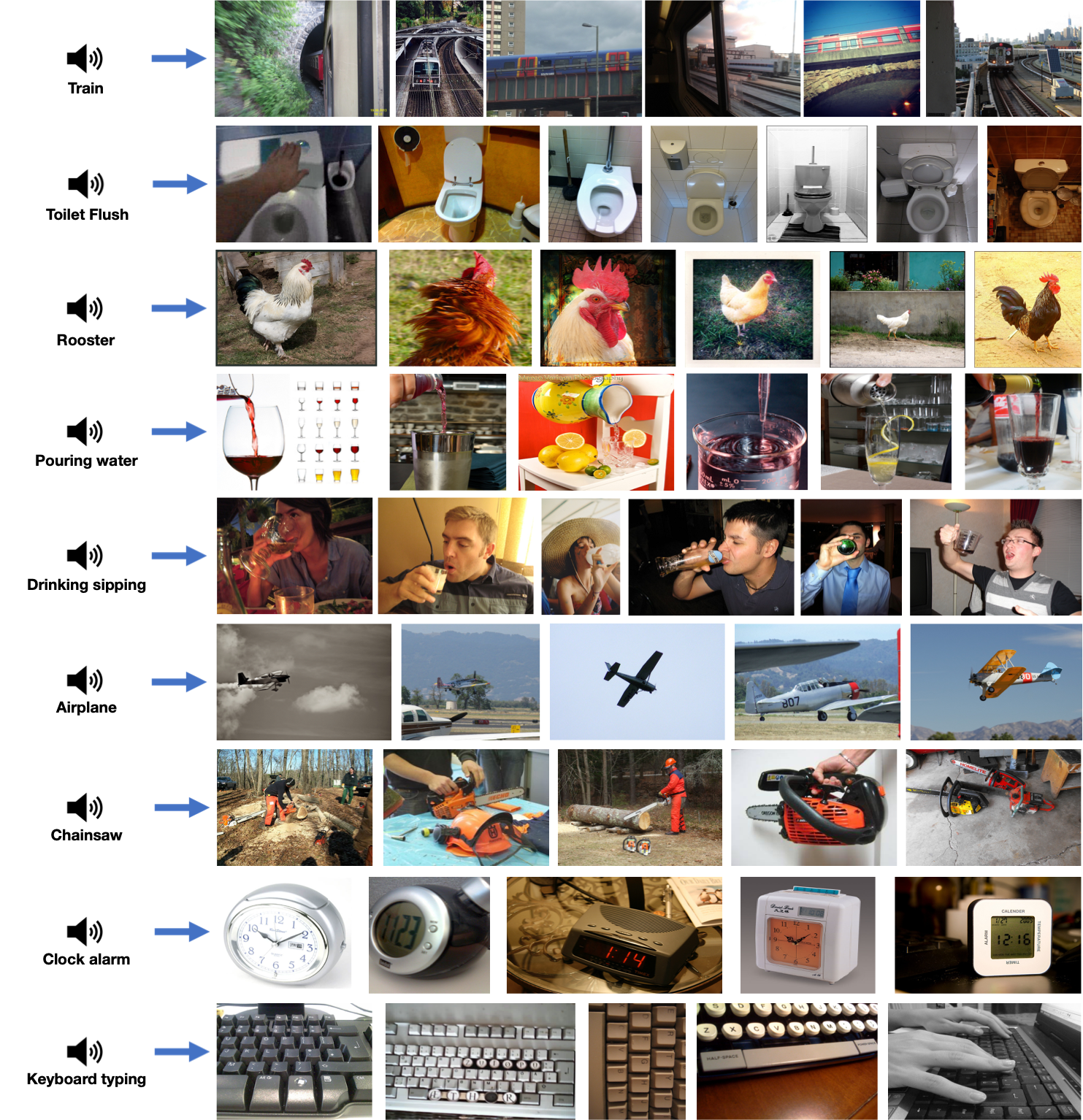

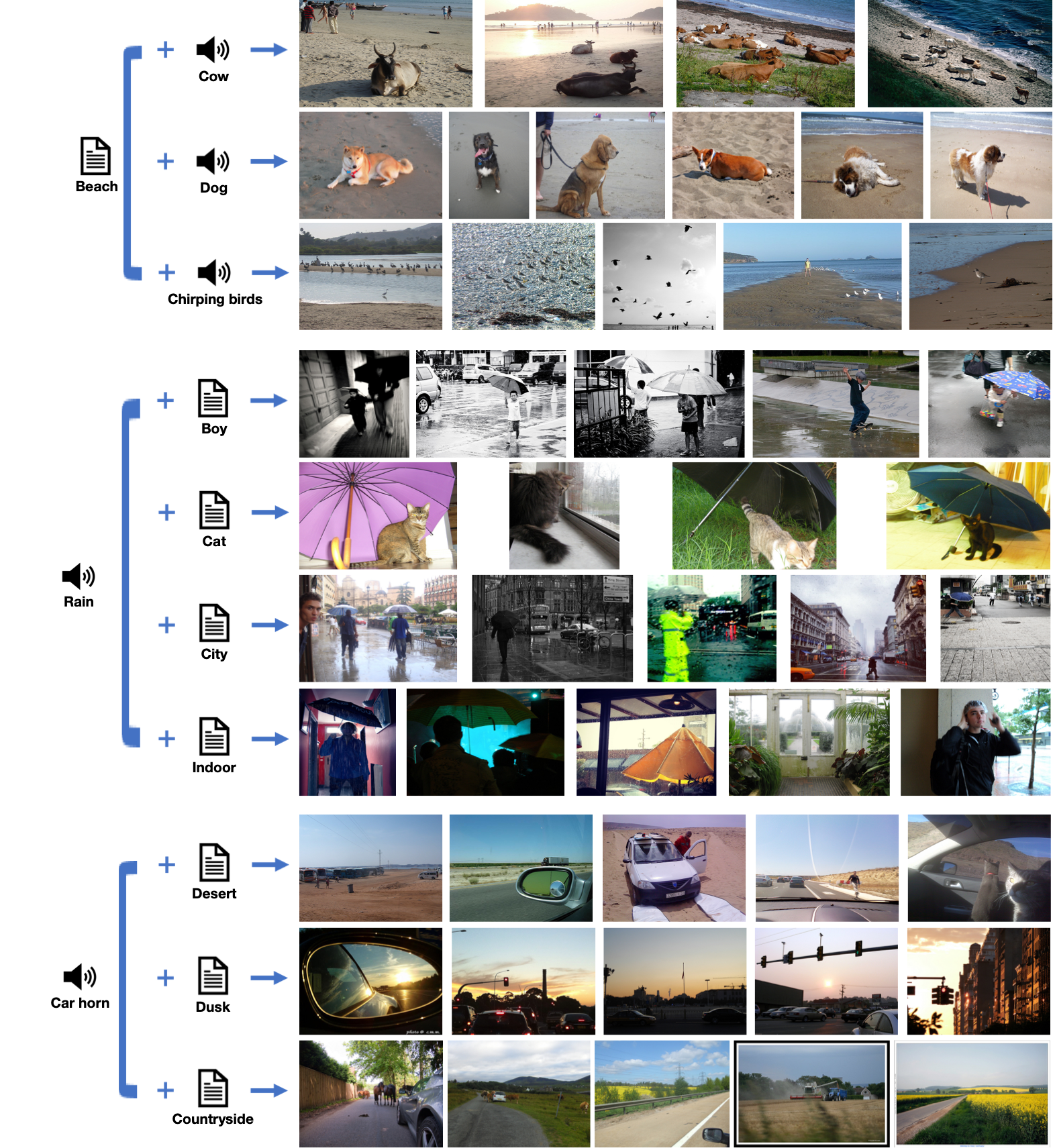

ONE-PEACE is a general representation model across vision, audio, and language modalities. Without using any pretrained vision or language model for initialization, ONE-PEACE achieves leading results on vision, audio, audio-language, and vision-language tasks. Furthermore, ONE-PEACE possesses a strong emergent zero-shot retrieval capability, enabling it to align modalities that are not paired in the training data.

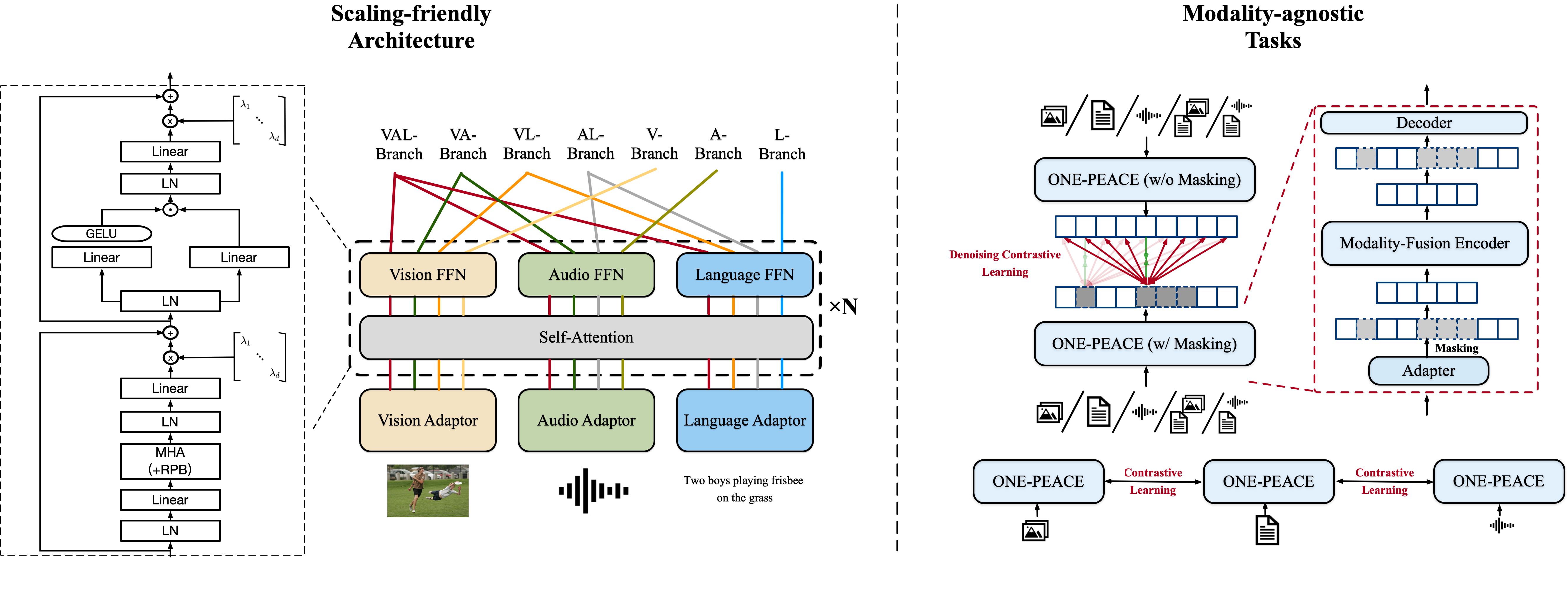

The architecture and pretraining tasks of ONE-PEACE are illustrated in the accompanying figure. Thanks to its scaling-friendly architecture and modality-agnostic pretraining tasks, ONE-PEACE has the potential to expand to unlimited modalities.

- 2023.5.25: Released an easy-to-use API that enables quick extraction of image, audio, and text representations.

- 2023.5.23: Released the pretrained checkpoint, as well as finetuning & inference scripts for vision-language tasks.

- 2023.5.19: Released the paper and code. Pretrained & finetuned checkpoints, training & inference scripts, as well as demos will be released as soon as possible.

We list the parameters and pretrained checkpoint of ONE-PEACE below.

| Model | Checkpoint | Params | Hidden size | Intermediate size | Attention heads | Layers |
|---|---|---|---|---|---|---|
| ONE-PEACE | Download | 4B | 1536 | 6144 | 24 | 40 |
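The table's hyperparameters imply a per-head dimension of 1536 / 24 = 64 and a feed-forward expansion ratio of 6144 / 1536 = 4. A small sketch capturing them in a config object; `EncoderConfig` and its field names are hypothetical and are not taken from the ONE-PEACE codebase:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderConfig:
    """Hypothetical container for the hyperparameters listed in the table.

    Class and field names are illustrative only, not ONE-PEACE's own.
    """
    hidden_size: int = 1536
    intermediate_size: int = 6144
    attention_heads: int = 24
    layers: int = 40

    @property
    def head_dim(self) -> int:
        # Each attention head works on an equal slice of the hidden state.
        if self.hidden_size % self.attention_heads != 0:
            raise ValueError("hidden_size must be divisible by attention_heads")
        return self.hidden_size // self.attention_heads

    @property
    def ffn_ratio(self) -> float:
        # Expansion factor of the feed-forward (intermediate) layer.
        return self.intermediate_size / self.hidden_size


cfg = EncoderConfig()
print(cfg.head_dim, cfg.ffn_ratio)
```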

| Task | Image Classification | Semantic Segmentation | Object Detection (w/o Object365) | Video Action Recognition |
|---|---|---|---|---|
| Dataset | ImageNet-1K | ADE20K | COCO | Kinetics-400 |
| Split | val | test | test | test |
| Metric | Acc. | mIoU<sup>ss</sup> / mIoU<sup>ms</sup> | AP<sup>box</sup> / AP<sup>mask</sup> | Top-1 Acc. / Top-5 Acc. |
| ONE-PEACE | 89.8 | 62.0 / 63.0 | 60.4 / 52.9 | 88.1 / 97.8 |
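For reference, mIoU averages the per-class intersection-over-union between predicted and ground-truth label maps (the ss and ms suffixes conventionally denote single-scale and multi-scale testing). A minimal pure-Python sketch over flattened per-pixel labels; the function name and the ignore index of 255 are illustrative assumptions, not the evaluation code behind the reported numbers:

```python
def mean_iou(pred, target, num_classes, ignore_index=255):
    """Mean IoU over classes that appear in pred or target.

    pred, target: flat sequences of per-pixel class ids (pred assumed valid).
    Passing all pixels of a dataset accumulates intersection and union
    globally before dividing, as dataset-level mIoU does.
    """
    intersection = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if t == ignore_index:
            continue  # unlabeled pixels are excluded (assumed ignore id)
        if p == t:
            intersection[t] += 1
            union[t] += 1
        else:
            union[p] += 1
            union[t] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
print(mean_iou(pred, target, num_classes=3))
```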

| Task | Audio-Text Retrieval | | | | Audio Classification | | | Audio Question Answering |
|---|---|---|---|---|---|---|---|---|
| Dataset | AudioCaps | | Clotho | | ESC-50 | FSD50K | VGGSound (Audio Only) | AVQA (Audio + Question) |
| Split | test | | evaluation | | full | eval | test | val |
| Metric | T2A R@1 | A2T R@1 | T2A R@1 | A2T R@1 | Zero-shot Acc. | MAP | Acc. | Acc. |
| ONE-PEACE | 42.5 | 51.0 | 22.4 | 27.1 | 91.8 | 69.7 | 59.6 | 86.2 |
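The retrieval columns report Recall@1 (R@1): the fraction of queries whose top-ranked candidate is the correct match, computed separately in each direction (T2A vs. A2T, or T2I vs. I2T). A minimal sketch, assuming a square similarity matrix in which query i is paired with candidate i; real benchmarks such as COCO pair each image with several captions, so their evaluation maps each caption back to its source item instead. The scores below are made up:

```python
def recall_at_1(similarity):
    """Fraction of queries (rows) whose highest-scoring candidate is the paired one.

    similarity[i][j] scores query i against candidate j; the ground-truth
    match for query i is assumed to be candidate i (one-to-one pairing).
    """
    hits = 0
    for i, row in enumerate(similarity):
        best = max(range(len(row)), key=row.__getitem__)
        hits += int(best == i)
    return hits / len(similarity)


def transpose(matrix):
    # Swapping rows and columns flips the retrieval direction.
    return [list(col) for col in zip(*matrix)]


# Toy scores: rows are audio clips, columns are captions.
a2t = [
    [0.9, 0.5, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.2, 0.7],
]
print("A2T R@1:", recall_at_1(a2t))
print("T2A R@1:", recall_at_1(transpose(a2t)))
```

Because the two directions rank different axes of the same matrix, their scores generally differ, which is why the table lists both.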

| Task | Image-Text Retrieval (w/o ranking) | Visual Grounding | VQA | Visual Reasoning | |||||

|---|---|---|---|---|---|---|---|---|---|

| Dataset | COCO | Flickr30K | RefCOCO | RefCOCO+ | RefCOCOg | VQAv2 | NLVR2 | ||

| Split | test | test | val / testA / testB | val / testA / testB | val-u / test-u | test-dev / test-std | dev / test-P | ||

| Metric | I2T R@1 | T2I R@1 | I2T R@1 | T2I R@1 | Acc@0.5 | Acc. | Acc. | ||

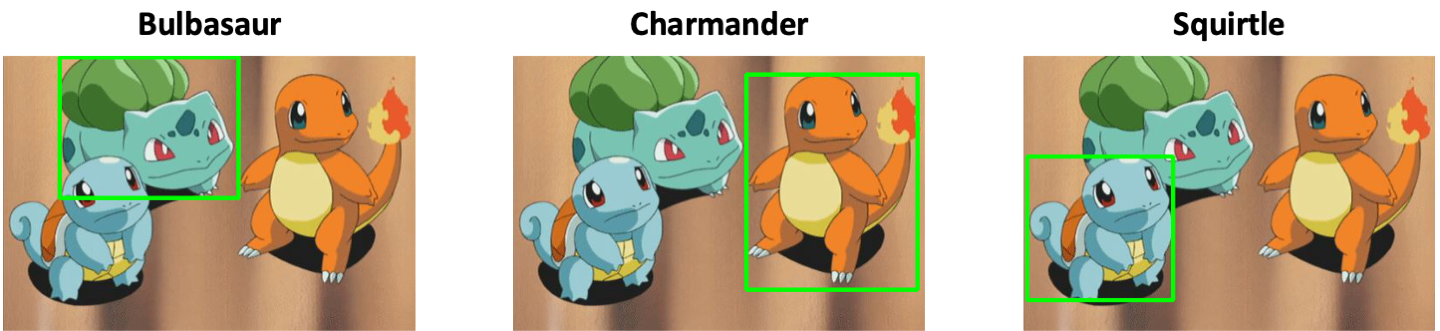

| ONE-PEACE | 84.1 | 65.4 | 97.6 | 89.6 | 92.58 / 94.18 / 89.26 | 88.77 / 92.21 / 83.23 | 89.22 / 89.27 | 82.6 / 82.5 | 87.8 / 88.3 |
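Visual grounding accuracy (Acc@0.5) counts a predicted box as correct when its intersection-over-union (IoU) with the ground-truth box meets the 0.5 threshold. A minimal sketch with boxes in (x1, y1, x2, y2) corner format; the box format is an assumption, and this sketch treats an IoU of exactly 0.5 as a hit, whereas some implementations use a strict inequality:

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero if boxes are disjoint
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def acc_at_iou(predictions, ground_truths, threshold=0.5):
    """Fraction of predicted boxes whose IoU with the paired ground truth meets the threshold."""
    hits = sum(box_iou(p, g) >= threshold for p, g in zip(predictions, ground_truths))
    return hits / len(ground_truths)


preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
gts = [(0, 0, 10, 10), (5, 5, 15, 15)]  # second pair overlaps only 25 / 175
print(acc_at_iou(preds, gts))
```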

- Python >= 3.7

- PyTorch >= 1.10.0 (1.13.1 recommended)

- CUDA >= 10.2 (11.6 recommended)

- Install the required packages:

```bash
git clone https://github.com/OFA-Sys/ONE-PEACE
cd ONE-PEACE
pip install -r requirements.txt
```

- For faster training, install the Apex library (recommended but not required):

```bash
git clone https://github.com/NVIDIA/apex
cd apex && pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" --global-option="--distributed_adam" --global-option="--deprecated_fused_adam"
```

- Install the xFormers library to use memory-efficient attention (recommended but not required):

```bash
conda install xformers -c xformers
```

- Install the FlashAttention library to use a faster LayerNorm implementation (recommended but not required):

```bash
git clone --recursive https://github.com/HazyResearch/flash-attention
cd flash-attention && pip install .
cd csrc/layer_norm && pip install .
```

For datasets and checkpoints, see datasets.md and checkpoints.md.
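To confirm that an environment satisfies the requirements listed earlier (Python >= 3.7, PyTorch >= 1.10.0, CUDA >= 10.2), a short check script can help. This is a sketch, not part of the repository: it uses only the standard library plus PyTorch when installed, and the import names it probes for the optional accelerators (`apex`, `xformers`, `flash_attn`) are assumptions based on each project's package name:

```python
import importlib.util
import re
import sys


def parse_version(version):
    """Turn a version string such as '1.13.1+cu116' into a tuple like (1, 13, 1)."""
    parts = []
    for piece in version.split("+")[0].split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    parts += [0] * (3 - len(parts))  # pad short versions such as '11.6'
    return tuple(parts)


def meets_minimum(version, minimum):
    return parse_version(version) >= minimum


def report():
    print("Python >= 3.7:", sys.version_info >= (3, 7))
    if importlib.util.find_spec("torch") is None:
        print("PyTorch: not installed")
        return
    import torch

    print("PyTorch >= 1.10.0:", meets_minimum(torch.__version__, (1, 10, 0)))
    cuda = torch.version.cuda  # None for CPU-only builds
    print("CUDA >= 10.2:", cuda is not None and meets_minimum(cuda, (10, 2, 0)))
    print("GPU available:", torch.cuda.is_available())
    # Optional accelerators; these import names are assumptions.
    for module in ("apex", "xformers", "flash_attn"):
        status = "found" if importlib.util.find_spec(module) else "missing"
        print(f"{module}: {status}")


if __name__ == "__main__":
    report()
```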

The following snippet shows how to use the ONE-PEACE API to compute embeddings for text, images, and audio, as well as the similarities between them:

```python
import torch
from one_peace.models import from_pretrained

device = "cuda" if torch.cuda.is_available() else "cpu"
# "ONE-PEACE" can also be replaced with a local checkpoint path
model = from_pretrained("ONE-PEACE", device=device, dtype="float32")

# process raw data
src_tokens = model.process_text(["cow", "dog", "elephant"])
src_images = model.process_image(["dog.JPEG", "elephant.JPEG"])
src_audios, audio_padding_masks = model.process_audio(["cow.flac", "dog.flac"])

with torch.no_grad():
    # extract normalized features
    text_features = model.extract_text_features(src_tokens)
    image_features = model.extract_image_features(src_images)
    audio_features = model.extract_audio_features(src_audios, audio_padding_masks)

    # compute similarity
    i2t_similarity = image_features @ text_features.T
    a2t_similarity = audio_features @ text_features.T

print("Image-to-text similarities:", i2t_similarity)
print("Audio-to-text similarities:", a2t_similarity)
```

In addition to the API, we also provide training and inference instructions in getting_started.
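Assuming the extracted features are L2-normalized (as the comment in the API snippet indicates), each entry of `i2t_similarity` or `a2t_similarity` is a cosine similarity, so zero-shot classification reduces to picking the highest-scoring text for each row. The sketch below works on plain Python lists (for example, `i2t_similarity.tolist()`); the similarity values are made up, and `zero_shot_predict` and its `temperature` parameter are hypothetical helpers used only to turn scores into probabilities:

```python
import math


def zero_shot_predict(similarity, labels, temperature=0.01):
    """Return (label, probability) for each query row.

    similarity: rows are queries (e.g. images), columns follow `labels`.
    temperature: hypothetical softmax temperature; it rescales the
    probabilities but never changes which label wins.
    """
    results = []
    for row in similarity:
        scaled = [s / temperature for s in row]
        peak = max(scaled)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scaled]
        total = sum(exps)
        probs = [e / total for e in exps]
        best = max(range(len(labels)), key=probs.__getitem__)
        results.append((labels[best], probs[best]))
    return results


labels = ["cow", "dog", "elephant"]
# Toy stand-in for i2t_similarity.tolist(): 2 images x 3 texts
i2t = [
    [0.12, 0.31, 0.08],
    [0.05, 0.10, 0.29],
]
for label, prob in zero_shot_predict(i2t, labels):
    print(label, round(prob, 3))
```

The same function applies to `a2t_similarity`. Given the emergent cross-modal alignment described in the introduction, a matrix such as `audio_features @ image_features.T` could in principle be ranked the same way.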

Feel free to submit GitHub issues or pull requests. Contributions to the project are welcome!

To contact us, don't hesitate to send an email to zheluo.wp@alibaba-inc.com or saimeng.wsj@alibaba-inc.com!

If you find our paper and code useful in your research, please consider giving a star ⭐ and citation 📝 :)

```bibtex
@article{ONEPEACE,
  title={ONE-PEACE: Exploring One General Representation Model Toward Unlimited Modalities},
  author={Wang, Peng and Wang, Shijie and Lin, Junyang and Bai, Shuai and Zhou, Xiaohuan and Zhou, Jingren and Wang, Xinggang and Zhou, Chang},
  journal={arXiv preprint arXiv:2305.11172},
  year={2023}
}
```