Important! The code must be compiled with Clang; on my machine, GCC builds run 1.5 to 2 times slower. Unless you are running a Ryzen 7700(X), don't expect peak performance without fine-tuning the hyperparameters, such as the number of threads and the kernel and block sizes. More on this in the tutorial.

- Simple and scalable C code (<150 LOC)

- Step-by-step tutorial

- Targets x86 processors with AVX and FMA instructions (i.e., all modern Intel Core and AMD Ryzen CPUs)

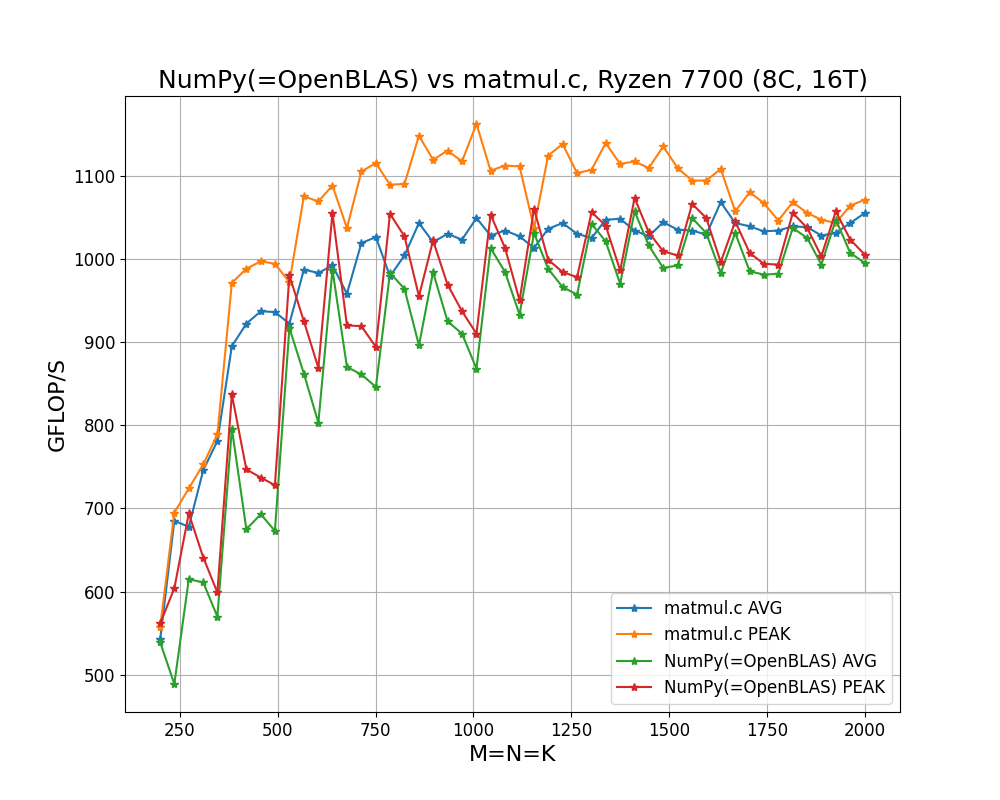

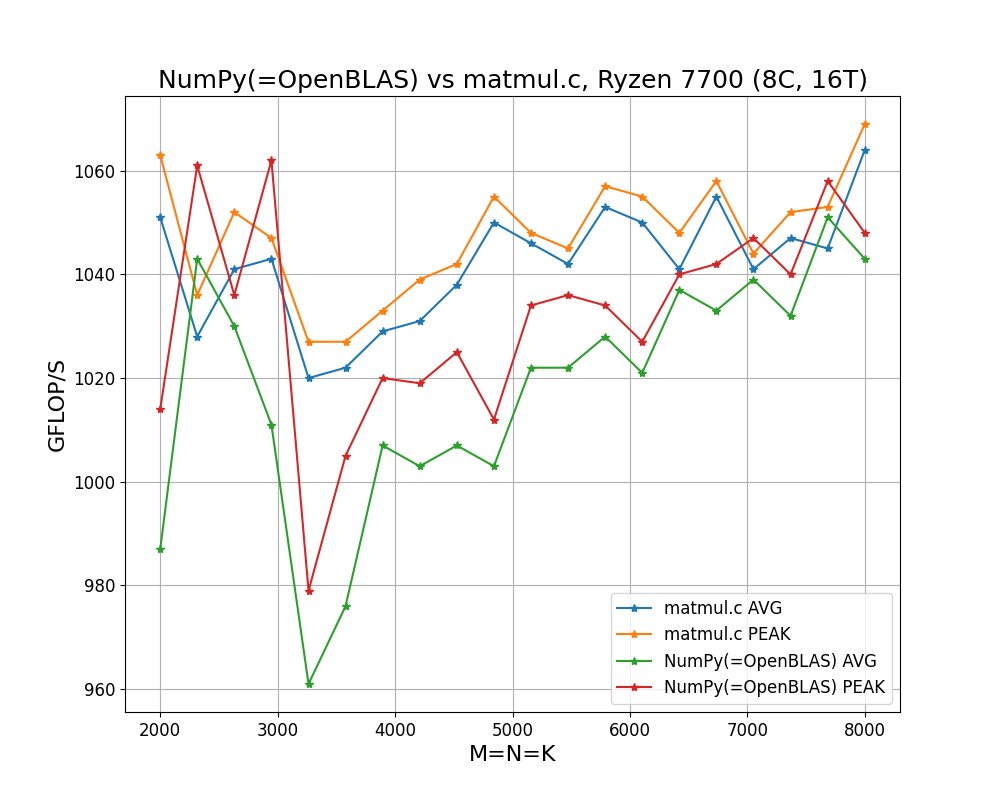

- Faster than OpenBLAS when fine-tuned for Ryzen 7700

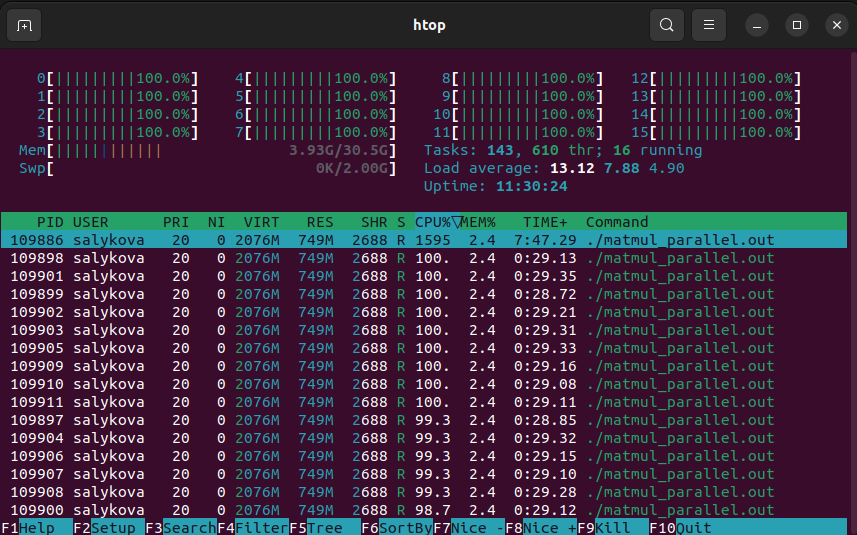

- Efficiently parallelized with just 3 lines of OpenMP directives

- Follows the BLIS design

- Works for arbitrary matrix sizes

- Intuitive API

`void matmul(float* A, float* B, float* C, const int M, const int N, const int K)`
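To give a feel for the BLIS-style loop nest behind this signature, here is a minimal plain-C sketch, not the repository's implementation. It assumes row-major storage (A is M×K, B is K×N, C is M×N) and that `C` is overwritten; the block sizes `MC`, `NC`, `KC` and the helpers `min_int`, `matmul_naive` and `matmul_max_error` are hypothetical. It omits the packing of A and B and the inner BLIS loops around the micro-kernel, and replaces the AVX/FMA micro-kernel with scalar loops. `min()` clamping at every block edge is what lets arbitrary matrix sizes work, and the single `#pragma omp` line only takes effect when compiled with `-fopenmp`.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical cache block sizes (not the repository's values);
   in practice they must be tuned per CPU. */
#define MC 64
#define NC 256
#define KC 128

static int min_int(int a, int b) { return a < b ? a : b; }

/* Sketch of C = A * B with row-major storage (an assumption):
   A is M x K, B is K x N, C is M x N, and C is overwritten. */
void matmul(float* A, float* B, float* C, const int M, const int N, const int K) {
    memset(C, 0, (size_t)M * N * sizeof(float));
    for (int jc = 0; jc < N; jc += NC) {          /* column panels of B and C */
        int nc = min_int(NC, N - jc);             /* last panel may be narrower */
        for (int pc = 0; pc < K; pc += KC) {      /* slices of the shared dimension */
            int kc = min_int(KC, K - pc);
            /* Row blocks of C are disjoint, so threads can split them. */
            #pragma omp parallel for
            for (int ic = 0; ic < M; ic += MC) {
                int mc = min_int(MC, M - ic);
                /* Scalar stand-in for the AVX/FMA micro-kernel. */
                for (int i = ic; i < ic + mc; i++)
                    for (int p = pc; p < pc + kc; p++) {
                        float a = A[(size_t)i * K + p];
                        for (int j = jc; j < jc + nc; j++)
                            C[(size_t)i * N + j] += a * B[(size_t)p * N + j];
                    }
            }
        }
    }
}

/* Straightforward triple loop used as a correctness reference. */
static void matmul_naive(const float* A, const float* B, float* C, int M, int N, int K) {
    for (int i = 0; i < M; i++)
        for (int j = 0; j < N; j++) {
            float sum = 0.0f;
            for (int p = 0; p < K; p++)
                sum += A[(size_t)i * K + p] * B[(size_t)p * N + j];
            C[(size_t)i * N + j] = sum;
        }
}

/* Fills random inputs, runs both versions and returns the largest
   absolute difference (a huge value if allocation fails). */
float matmul_max_error(int M, int N, int K) {
    float* A = malloc((size_t)M * K * sizeof(float));
    float* B = malloc((size_t)K * N * sizeof(float));
    float* C = malloc((size_t)M * N * sizeof(float));
    float* R = malloc((size_t)M * N * sizeof(float));
    float err = 1e30f;
    if (A && B && C && R) {
        for (size_t t = 0; t < (size_t)M * K; t++) A[t] = rand() / (float)RAND_MAX - 0.5f;
        for (size_t t = 0; t < (size_t)K * N; t++) B[t] = rand() / (float)RAND_MAX - 0.5f;
        matmul(A, B, C, M, N, K);
        matmul_naive(A, B, R, M, N, K);
        err = 0.0f;
        for (size_t t = 0; t < (size_t)M * N; t++) {
            float d = C[t] - R[t];
            if (d < 0.0f) d = -d;
            if (d > err) err = d;
        }
    }
    free(A); free(B); free(C); free(R);
    return err;
}
```

Calling `matmul_max_error(67, 301, 133)` exercises partial blocks in all three dimensions, because each size exceeds its block size; the result should be at most a rounding-level difference.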

Tested on:

- CPU: AMD Ryzen 7 7700 (8 cores, 16 threads)

- RAM: 32GB DDR5 6000 MHz CL36

- NumPy 1.26.4

- Compiler: clang-17

- Compiler flags: `-O2 -mno-avx512f -march=native`

- OS: Ubuntu 22.04.4 LTS

To reproduce the results, run:

`python benchmark_numpy.py`

`clang-17 -O2 -mno-avx512f -march=native -fopenmp benchmark.c -o benchmark.out && ./benchmark.out`

`python plot_benchmark.py`
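Matmul benchmarks conventionally report throughput in GFLOP/s, counting 2·M·N·K floating-point operations (M·N·K multiplies and as many adds). The sketch below shows that arithmetic together with a POSIX `clock_gettime` timer; the helper names `now_seconds`, `gflops`, `best_gflops` and the `matmul_fn` type are hypothetical, and `benchmark.c` may count or time differently.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L  /* expose clock_gettime under strict C modes */
#endif
#include <time.h>

/* Wall-clock time in seconds from a monotonic clock (POSIX). */
static double now_seconds(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* An M x N x K product performs M*N*K multiplies and M*N*K adds. */
static double gflops(int M, int N, int K, double seconds) {
    return 2.0 * M * N * K / seconds / 1e9;
}

/* Hypothetical function-pointer type matching the matmul signature. */
typedef void (*matmul_fn)(float*, float*, float*, const int, const int, const int);

/* Runs `fn` `reps` times and returns the best GFLOP/s observed. */
static double best_gflops(matmul_fn fn, float* A, float* B, float* C,
                          int M, int N, int K, int reps) {
    double best = 0.0;
    for (int r = 0; r < reps; r++) {
        double t0 = now_seconds();
        fn(A, B, C, M, N, K);
        double t = now_seconds() - t0;
        if (t > 0.0 && gflops(M, N, K, t) > best)
            best = gflops(M, N, K, t);
    }
    return best;
}
```

For example, a 1000 × 1000 × 1000 product that takes 1 second corresponds to 2 GFLOP/s. Keeping the best of several repetitions, as `best_gflops` does, filters out warm-up and scheduling noise; this is one common convention, not necessarily the one used by the repository's benchmark.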