You can find our results on our speaker-recognition-attacker project home page.

If you use this code or part of it, please cite us!

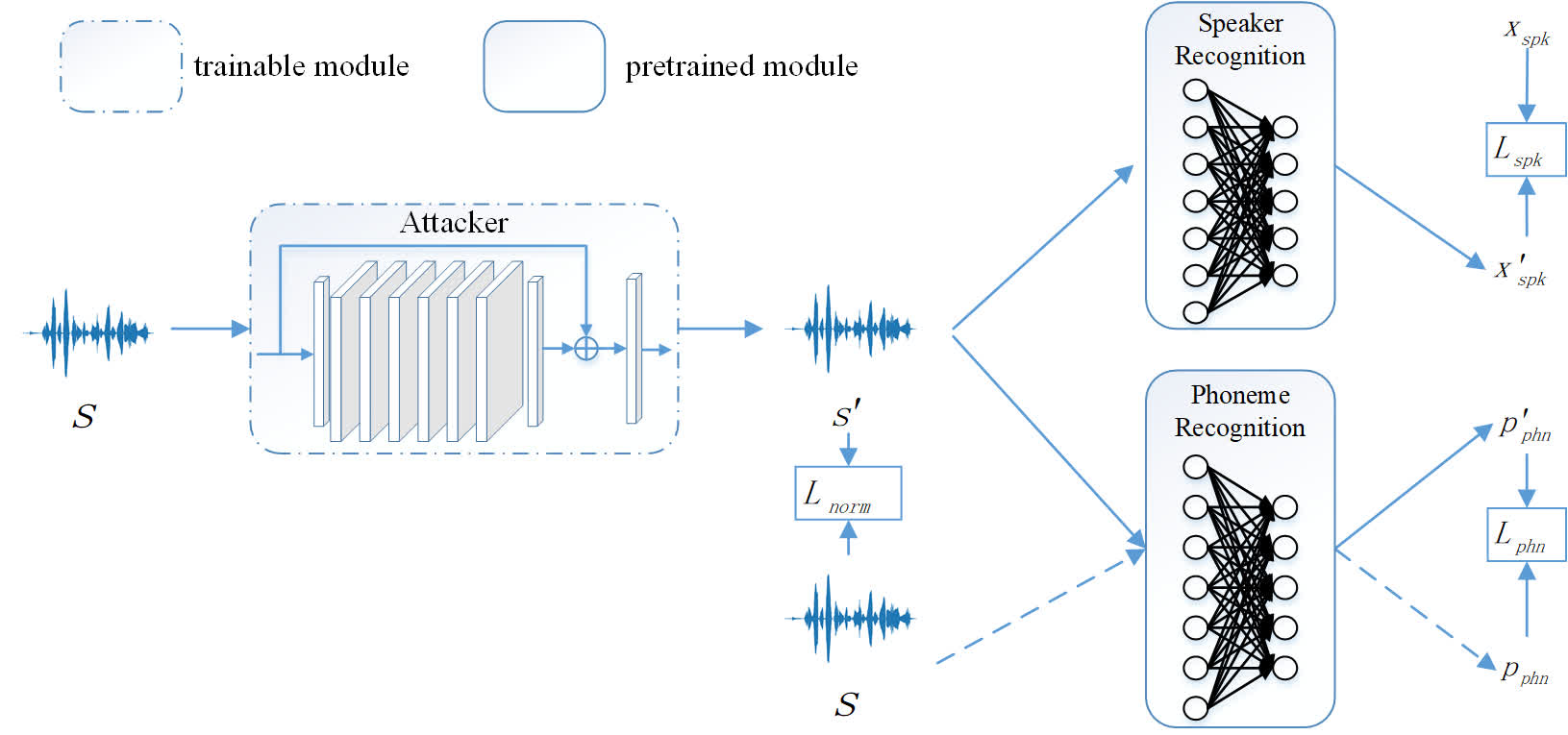

Jiguo Li, Xinfeng Zhang, Chuanming Jia, Jizheng Xu, Li Zhang, Yue Wang, Siwei Ma, Wen Gao, "Learning to Fool the Speaker Recognition," arXiv, IEEE Xplore.

- linux

- python 3.5 (not tested on other versions)

- pytorch 1.2+

- torchaudio 0.3

- librosa, pysoundfile

- json, tqdm, logging
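
As a quick sanity check before running anything, the sketch below reports which of the listed dependencies are importable in the current environment. The helper name `missing_modules` is our own and not part of this repository. Note that pysoundfile is imported as `soundfile`, and that `json` and `logging` ship with Python, so they need no separate installation.

```python
import importlib.util
import sys

# Import names for the packages listed above. "soundfile" is the import
# name of the pysoundfile package; json and logging are in the standard library.
REQUIRED_MODULES = ["torch", "torchaudio", "librosa", "soundfile",
                    "tqdm", "json", "logging"]


def missing_modules(names):
    """Return the entries of `names` that cannot be found for import.

    Hypothetical helper for illustration; it only checks that a module
    can be located, not which version is installed.
    """
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

This does not verify the version constraints (python 3.5, pytorch 1.2+, torchaudio 0.3); those still need to be checked by hand.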

The experiments are conducted on the TIMIT dataset.

- You can download the TIMIT dataset from the official site or here.

- Download the pretrained speaker recognition model from here. This model is released by the author of SincNet.

- Download our pretrained phoneme recognition model from here. You can refer to Pytorch-kaldi if you want to train your own phoneme recognition model.

- Run

python ./prepare_dataset.py --data_root PATH_FOR_TIMIT

Two CSV files and a pickle file will be saved in PATH_FOR_TIMIT/processed.
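
To confirm that the preparation step produced the expected files, a minimal sketch like the one below groups the contents of the `processed` folder by file extension. The function name `summarize_processed` is hypothetical, and the sketch makes no assumption about the actual file names, which are not stated here.

```python
import os
from collections import defaultdict


def summarize_processed(data_root):
    """Group the files in <data_root>/processed by lower-cased extension.

    Hypothetical helper for illustration; it only lists files and does
    not open or validate them.
    """
    processed_dir = os.path.join(data_root, "processed")
    by_suffix = defaultdict(list)
    for name in sorted(os.listdir(processed_dir)):
        if os.path.isfile(os.path.join(processed_dir, name)):
            suffix = os.path.splitext(name)[1].lower()
            by_suffix[suffix].append(name)
    return dict(by_suffix)
```

Calling `summarize_processed("PATH_FOR_TIMIT")` after the preparation script has finished should show two entries under `.csv` plus one pickle file under whatever extension the script uses.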

In the project folder "learning-to-fool-the-speaker-recignition", run

python train_transformer.py --output_dir ./output/attacker_transformer --speaker_factor 1 --speech_factor 5 --norm_factor 1000 --speech_kld_factor 1 --norm_clip 0.01 --data_root PATH_FOR_TIMIT --speaker_model PATH_FOR_PRETRAINED_SPEAKER_MODEL --speech_model PATH_FOR_PRETRAINED_PHONEME_MODEL --speaker_cfg ./config/timit_speaker_transformer.cfg --no_dist

An example of the script:

python train_transformer.py --output_dir ./output/timit_non_targeted_trans --speaker_factor 1 --speech_factor 5 --norm_factor 1000 --speech_kld_factor 1 --data_root /media/ubuntu/Elements/dataset/TIMIT_lower --speaker_model ./pretrained/SincNet_TIMIT/model_raw.pkl --speech_model ./pretrained/timit_speech_no_softmax_fixed_augment/epoch_23.pth --speaker_cfg ./config/timit_speaker_transformer.cfg --norm_clip 0.01 --no_dist

Training on the TIMIT dataset takes about one day on a 1080 Ti GPU.
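
Because the training command is long, it can help to assemble it in a small script so the three paths are the only parts that change between machines. The sketch below is one way to do that; `build_train_command` and `to_shell` are hypothetical helpers, not part of this repository, and the flags and default values are copied from the command above.

```python
import shlex


def build_train_command(data_root, speaker_model, speech_model,
                        output_dir="./output/attacker_transformer"):
    """Assemble the training command as an argument list.

    Hypothetical helper; the flag names and values mirror the
    command shown in this README.
    """
    return [
        "python", "train_transformer.py",
        "--output_dir", output_dir,
        "--speaker_factor", "1",
        "--speech_factor", "5",
        "--norm_factor", "1000",
        "--speech_kld_factor", "1",
        "--norm_clip", "0.01",
        "--data_root", data_root,
        "--speaker_model", speaker_model,
        "--speech_model", speech_model,
        "--speaker_cfg", "./config/timit_speaker_transformer.cfg",
        "--no_dist",
    ]


def to_shell(args):
    """Join an argument list into one shell-safe command string."""
    return " ".join(shlex.quote(arg) for arg in args)
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the project folder, or printed with `to_shell(cmd)` and pasted into a terminal. Passing a list rather than a single string avoids quoting problems when a path contains spaces.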

Run

python train_transformer.py --pt_file PATH_FOR_MODEL --data_root PATH_FOR_TIMIT --speaker_model PATH_FOR_PRETRAINED_SPEAKER_MODEL --speaker_cfg ./config/timit_speaker_transformer.cfg --no_dist --test

Our results, data, and pretrained models can be found on our speaker-recognition-attacker project main page.

Thanks to Jiayi Fu, Jing Lin, and Junjie Shi for the valuable discussions. Thanks also to the open-source projects SincNet, Pytorch-Kaldi, and PESQ.

Please feel free to contact me (jiguo.li@vipl.ict.ac.cn, jgli@pku.edu.cn) if you have any questions about this project. Note that this work is for research purposes only. Please do not use it for illegal purposes.