HumanLiff learns layer-wise 3D human with a unified diffusion process.

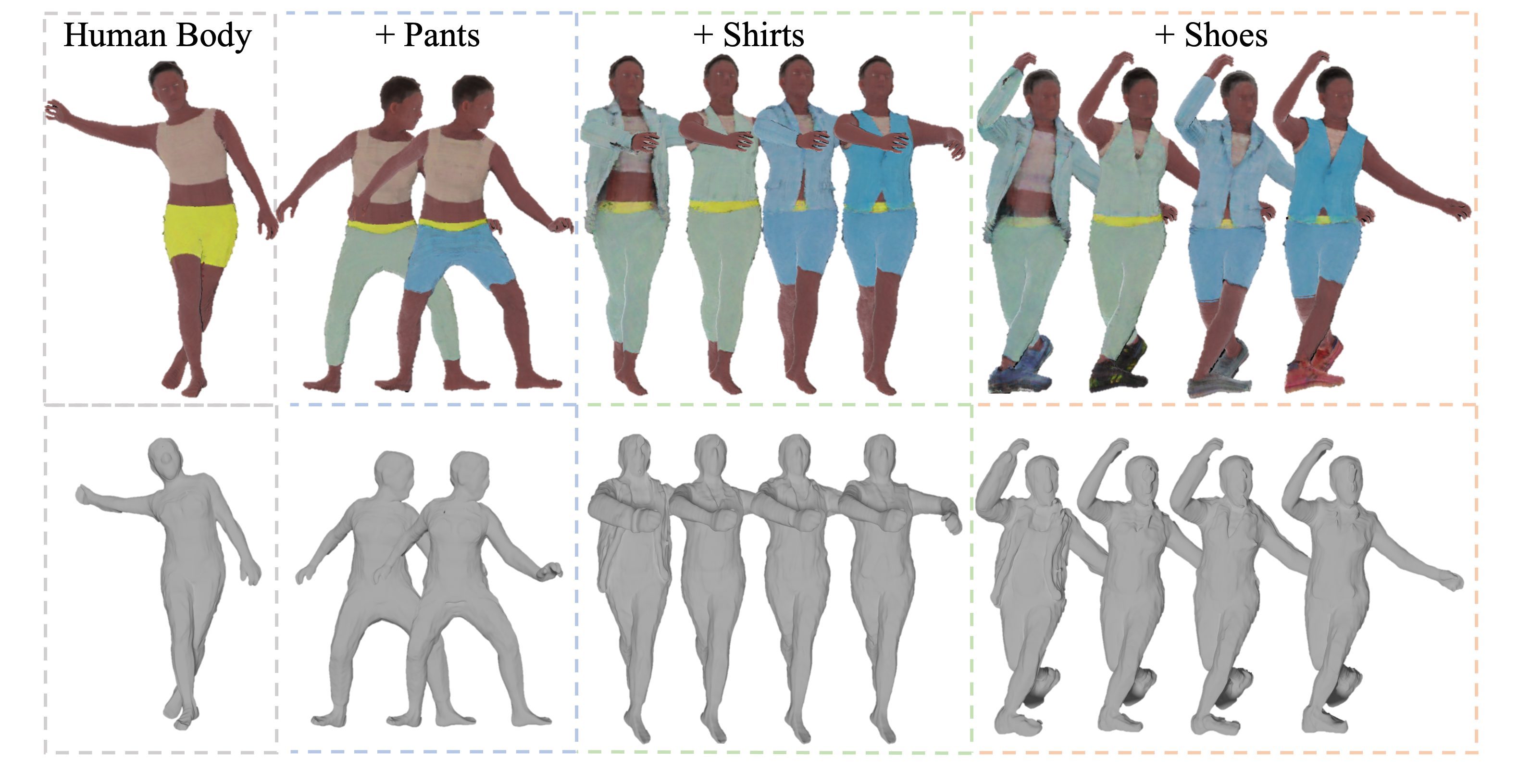

Figure 1. HumanLiff learns to generate layer-wise 3D human with a unified diffusion process. Starting from a random noise, HumanLiff first generates a human body and then progressively generates 3D humans conditioned on previous generation. We use the same background color to denote generation results from the same human layer.

Figure 1. HumanLiff learns to generate layer-wise 3D human with a unified diffusion process. Starting from a random noise, HumanLiff first generates a human body and then progressively generates 3D humans conditioned on previous generation. We use the same background color to denote generation results from the same human layer.

📖 For more visual results, go checkout our project page

This repository contains the official implementation of HumanLiff: Layer-wise 3D Human Generation with Diffusion Model.

NVIDIA GPUs are required for this project. We recommend using anaconda to manage python environments.

conda create --name humanliff python=3.8

conda install pytorch==1.11.0 torchvision==0.12.0 torchaudio==0.11.0 cudatoolkit=11.3 -c pytorch

conda install -c fvcore -c iopath -c conda-forge fvcore iopath

conda install pytorch3d -c pytorch3d (or pip install --no-index --no-cache-dir pytorch3d -f https://dl.fbaipublicfiles.com/pytorch3d/packaging/wheels/py38_cu113_pyt1110/download.html)

pip install -r requirements.txt

conda activate sherfPlease download our rendered multi-view images of layerwise SynBody dataset from OneDrive. The folder structure should look like

./

├── ...

└── recon_NeRF/data/

├── SynBody/

├── 20230423

Please download our rendered multi-view images of layerwise TightCap dataset from OneDrive. The folder structure should look like

./

├── ...

└── recon_NeRF/data/

├── TightCap/

Register and download SMPL and SMPLX (version 1.0) models here. Put the downloaded models in the folder smpl_models. Only the neutral one is needed. The folder structure should look like

./

├── ...

└── recon_NeRF/assets/

├── SMPL_NEUTRAL.pkl

├── models/

├── smplx/

├── SMPLX_NEUTRAL.npz

./

├── ...

└── human_diffusion/assets/

├── SMPL_NEUTRAL.pkl

├── models/

├── smplx/

├── SMPLX_NEUTRAL.npz

cd recon_NeRFpython -m torch.distributed.launch --nproc_per_node 4 --master_port 12373 run_nerf_batch.py --config configs/SynBody.txt --data_root data/SynBody/20230423_layered/seq_000000 --expname SynBody_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 100 --num_worker 3 --i_weights 50000 --i_testset 5000 --mlp_num 2 --batch_size 2 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --lrate 5e-3 --tri_plane_lrate 1e-1 --triplane_dim 256 --triplane_ch 27 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4python -m torch.distributed.launch --nproc_per_node 1 --master_port 12373 run_nerf_batch_ft.py --config configs/SynBody.txt --data_root data/SynBody/20230423_layered/seq_000000 --expname SynBody_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 1 --num_worker 3 --i_weights 2000 --i_testset 8000 --mlp_num 2 --batch_size 8 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --lrate 0 --tri_plane_lrate 1e-1 --ft_triplane_only --n_iteration 2000 --ft_path 200000.tar --multi_person 0 --start_idx 0 --end_idx 10 --triplane_dim 256 --triplane_ch 27 --start_dim 256 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4where start_idx and end_idx denote the start index and end index of subjects to be optimized.

python -m torch.distributed.launch --nproc_per_node 1 --master_port 12373 run_nerf_batch.py --config configs/SynBody.txt --data_root data/SynBody/20230423_layered/seq_000000 --expname SynBody_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 100 --num_worker 3 --i_weights 50000 --i_testset 5000 --mlp_num 2 --batch_size 1 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --lrate 5e-3 --tri_plane_lrate 1e-1 --triplane_dim 256 --triplane_ch 27 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4 --test --ft_path 200000.tar --test_layer_id 1where test_layer_id denotes the layer index.

python -m torch.distributed.launch --nproc_per_node 4 --master_port 12373 run_nerf_batch.py --config configs/TightCap.txt --data_root data/TightCap/f_c_10412256613 --expname TightCap_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 100 --num_worker 3 --i_weights 50000 --i_testset 5000 --mlp_num 2 --batch_size 2 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --use_canonical_space --lrate 5e-3 --tri_plane_lrate 1e-1 --triplane_dim 256 --triplane_ch 27 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4python -m torch.distributed.launch --nproc_per_node 1 --master_port 12373 run_nerf_batch_ft.py --config configs/TightCap.txt --data_root data/TightCap/f_c_10412256613 --expname TightCap_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 1 --num_worker 3 --i_weights 2000 --i_testset 5000 --mlp_num 2 --batch_size 8 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --use_canonical_space --lrate 0 --tri_plane_lrate 1e-1 --ft_triplane_only --n_iteration 2000 --ft_path 200000.tar --multi_person 0 --start_idx 0 --end_idx 10 --triplane_dim 256 --triplane_ch 27 --start_dim 256 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4where start_idx and end_idx denote the start index and end index of subjects to be optimized.

python -m torch.distributed.launch --nproc_per_node 1 --master_port 12373 run_nerf_batch.py --config configs/TightCap.txt --data_root data/TightCap/f_c_10412256613 --expname TightCap_185_view_100_subject_triplane_256x256x27_tv_loss_1e-2_l1_loss_5e-4 --num_instance 100 --num_worker 3 --i_weights 50000 --i_testset 5000 --mlp_num 2 --batch_size 1 --n_samples 128 --n_importance 128 --views_num 185 --use_clamp --ddp 1 --use_canonical_space --lrate 5e-3 --tri_plane_lrate 1e-1 --triplane_dim 256 --triplane_ch 27 --tv_loss --tv_loss_coef 1e-2 --l1_loss_coef 5e-4 --test --ft_path 200000.tar --test_layer_id 1where test_layer_id denotes the layer index.

cd ../human_diffusionbash triplane_scripts/SynBody_triplane_train_layered_cond_controlnet_scale_256x256x27_tv_loss_nineplane.sh 1000 4 27 192 27 8 1000 200000bash triplane_scripts/SynBody_triplane_sample_layered_cond_controlnet_scale_256x256x27_tv_loss_nineplane.sh 1000 27 192 27 0 12889bash triplane_scripts/TightCap_triplane_train_layered_cond_controlnet_scale_256x256x27_tv_loss_nineplane.sh 1000 4 27 192 27 8 107 200000bash triplane_scripts/TightCap_triplane_sample_layered_cond_controlnet_scale_256x256x27_tv_loss_nineplane.sh 1000 27 192 27 0 12889Distributed under the S-Lab License. See LICENSE for more information.