*Kai Ye, *Siyan Dong, †Qingnan Fan, He Wang, Li Yi, Fei Xia, Jue Wang, †Baoquan Chen

*Joint first authors | †Corresponding authors | Video | Poster

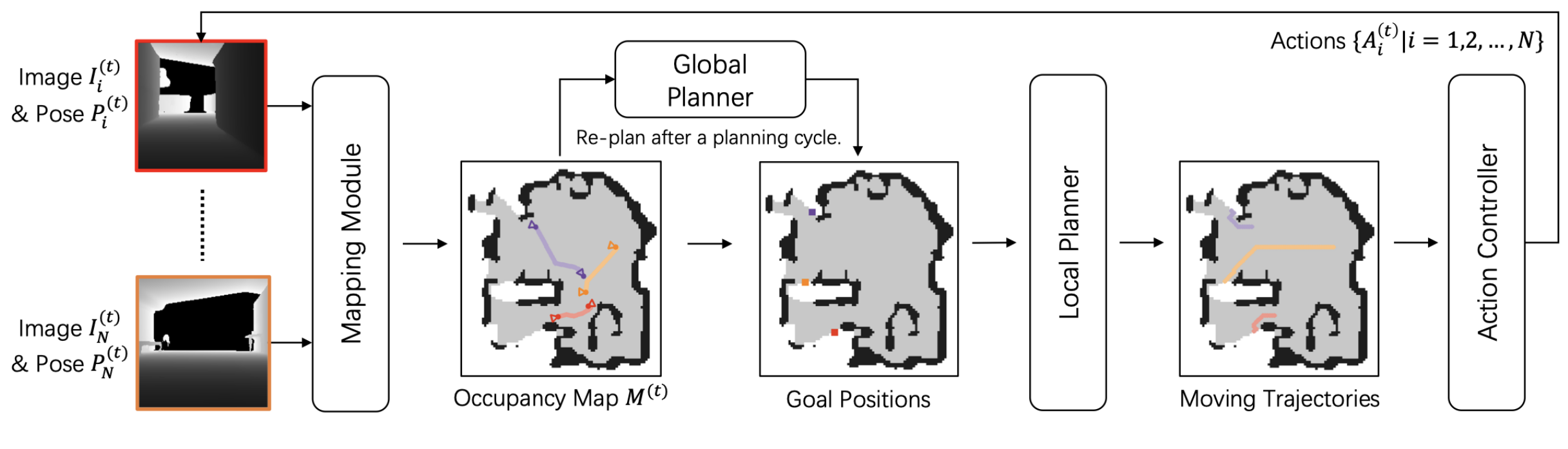

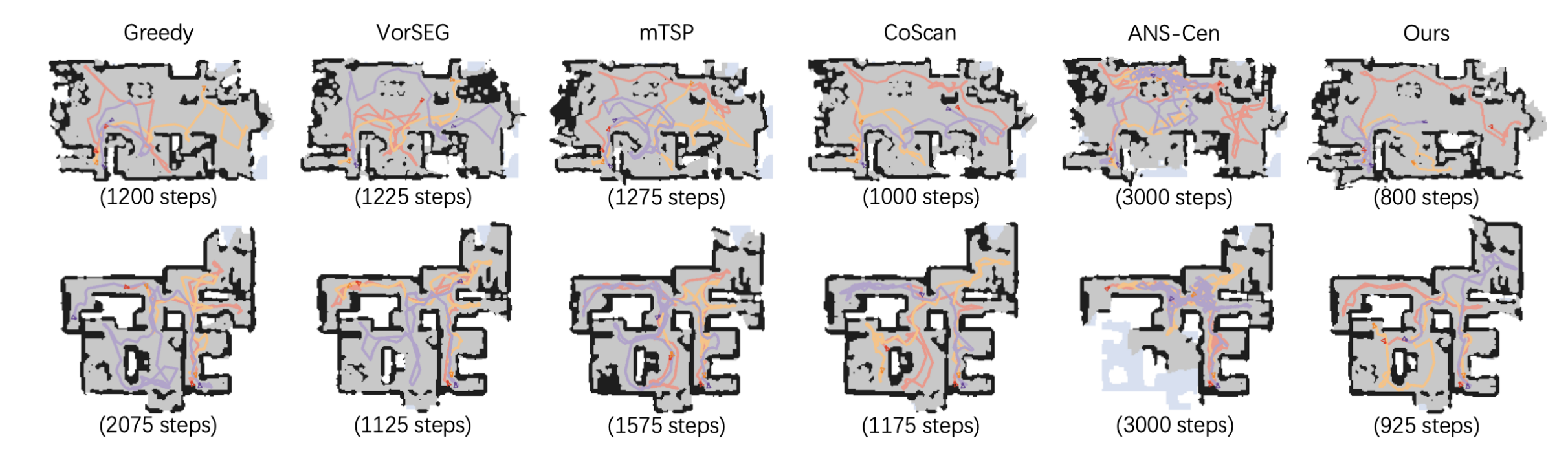

We study the problem of multi-robot active mapping, which aims to construct a complete scene map in as few time steps as possible. The key to this problem lies in estimating goal positions that enable more efficient robot movements. Previous approaches either choose a frontier as the goal position via a myopic solution that hinders time efficiency, or maximize long-term value via reinforcement learning to directly regress the goal position, which does not guarantee complete map construction. We propose a novel algorithm, namely NeuralCoMapping, which takes advantage of both approaches. This repository contains its implementation.
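
To make the terms in the paragraph above concrete, here is a toy sketch. It is not the NeuralCoMapping algorithm. It extracts frontiers (free cells that border unexplored space) from a small occupancy grid, then assigns robots to frontiers as a bipartite matching, the framing named in the paper title. The cell encoding, the function names, and the straight-line distance cost are assumptions made for illustration. The method's name suggests its matching scores are learned, and this sketch does not attempt that.

```python
from itertools import permutations
from math import hypot

# Hypothetical cell encoding for a toy 2D occupancy grid (an assumption, not the repo's format).
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontiers(grid):
    """Return (row, col) of every free cell that has a 4-connected unknown neighbour."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbour is enough
    return frontiers


def euclidean(a, b):
    """Straight-line distance between two grid cells (ignores obstacles)."""
    return hypot(a[0] - b[0], a[1] - b[1])


def match_robots_to_goals(robots, goals, cost=euclidean):
    """Return (assignment, total): assignment[i] is the goal index chosen for robot i.

    Exhaustive search over one-to-one assignments, so every robot gets a
    distinct goal and the summed cost is minimal. This is exponential in the
    number of robots; larger problems would need e.g. the Hungarian algorithm.
    """
    if len(goals) < len(robots):
        raise ValueError("need at least one goal per robot")
    best_assignment, best_total = None, float("inf")
    for chosen in permutations(range(len(goals)), len(robots)):
        total = sum(cost(robots[i], goals[j]) for i, j in enumerate(chosen))
        if total < best_total:
            best_assignment, best_total = list(chosen), total
    return best_assignment, best_total


if __name__ == "__main__":
    grid = [
        [FREE, FREE, FREE, UNKNOWN],
        [FREE, OCCUPIED, FREE, UNKNOWN],
        [FREE, FREE, FREE, FREE],
        [UNKNOWN, UNKNOWN, FREE, FREE],
    ]
    goals = find_frontiers(grid)
    robots = [(0, 0), (2, 2)]
    assignment, total = match_robots_to_goals(robots, goals)
    for i, j in enumerate(assignment):
        print(f"robot {robots[i]} -> frontier {goals[j]}")
    print(f"total cost {total:.2f}")
```

Running it prints `robot (0, 0) -> frontier (0, 2)`, `robot (2, 2) -> frontier (1, 2)` and `total cost 3.00`. Solving the assignment jointly, rather than letting each robot independently pick its nearest frontier, guarantees that no two robots are sent to the same goal. The straight-line cost is the weakest part of the sketch. A path length on the grid would respect obstacles.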

```
conda create -p ./venv python=3.6
source activate ./venv
sh ./build.sh && python -m gibson2.utils.assets_utils --download_assets
```

- Gibson

  - Get the dataset here.
  - Copy the URL of `gibson_v2_4+.tar.gz`.
  - Run the command:

    ```
    python -m gibson2.utils.assets_utils --download_dataset {URL}
    ```

- Matterport3D

  - Get the dataset according to the README.
  - Run the command:

    ```
    python2 download_mp.py --task_data igibson -o .
    ```

  - Move each folder of scenes to the Gibson Dataset path. You can check the Gibson Dataset path by running:

    ```
    python -m gibson2.utils.assets_utils --download_assets
    ```

- Train

  ```
  python main.py --global_lr 5e-4 --exp_name 'ma3_history' --critic_lr_coef 5e-2 --train_global 1 --dump_location train --scenes_file scenes/train.scenes
  ```

- Test (Example)

  ```
  python main.py --exp_name 'eval_coscan_mp3dhq0f' --scenes_file scenes/mp3dhq0-f.scenes --dump_location std --num_episodes 10 --load_global best.global
  ```

- Test via scripts

  ```
  python eval.py --load best.global --dataset mp3d --method rl -n 5
  python eval.py --dataset mp3d --method coscan -n 5
  ```

- Analyze performance (compared to CoScan)

  ```
  python analyze.py --dir std --dataset gibson -ne 5 --bins 35,70
  python analyze.py --dir std --dataset mp3d -ne 5 --bins 100
  ```

- Analyze performance (single method)

  ```
  python scripts/easy_analyze.py rl --dataset hq --subset abcdef --dir std
  ```

- Specify GPU Index

  ```
  export CUDA_VISIBLE_DEVICES=3
  export GIBSON_DEVICE_ID=4
  ```

- Visualization

  ```
  python main.py --exp_name 'eval_coscan_mp3dhq0f' --scenes_file scenes/mp3dhq0-f.scenes --dump_location /mnt/disk1/vis --num_episodes 5 --load_global best.global --vis_type 2
  # dump at ./video/
  python scripts/map2d.py --dir /mnt/disk1/vis vis_hrl -ne 5 -ns 4
  ```

- Pretrained model
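
The Test via scripts and Specify GPU Index items can be combined in a small Python driver. This is a hypothetical helper and is not part of the repository. It assumes it is launched from the repository root next to `eval.py` with the repository's environment active. It reuses only the `eval.py` flags and the two environment variables shown above. The function names and the dry-run switch are made up for illustration.

```python
import os
import subprocess
import sys


def eval_commands(dataset="mp3d", episodes=5, checkpoint="best.global"):
    """Build the two eval.py invocations from the 'Test via scripts' item."""
    rl = [sys.executable, "eval.py", "--load", checkpoint,
          "--dataset", dataset, "--method", "rl", "-n", str(episodes)]
    coscan = [sys.executable, "eval.py",
              "--dataset", dataset, "--method", "coscan", "-n", str(episodes)]
    return [rl, coscan]


def gpu_env(cuda_device, gibson_device, base=None):
    """Copy an environment and set the two GPU variables from 'Specify GPU Index'."""
    env = dict(os.environ if base is None else base)
    env["CUDA_VISIBLE_DEVICES"] = str(cuda_device)
    env["GIBSON_DEVICE_ID"] = str(gibson_device)
    return env


def run_all(commands, env, dry_run=True):
    """Print each command, and execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the batch at the first failing evaluation.
            subprocess.run(cmd, env=env, check=True)


if __name__ == "__main__":
    run_all(eval_commands(), gpu_env(3, 4), dry_run=True)
```

`sys.executable` is used instead of a bare `python` so the evaluations run under the same interpreter as the driver, for example the one in `./venv`. With `dry_run=True` the commands are only printed. Passing `dry_run=False` runs them one after another.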

If you find our work helpful in your research, please consider citing:

```
@InProceedings{Ye_2022_CVPR,
    author    = {Ye, Kai and Dong, Siyan and Fan, Qingnan and Wang, He and Yi, Li and Xia, Fei and Wang, Jue and Chen, Baoquan},
    title     = {Multi-Robot Active Mapping via Neural Bipartite Graph Matching},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2022},
    pages     = {14839-14848}
}

@article{dong2019multi,
    title     = {Multi-robot collaborative dense scene reconstruction},
    author    = {Dong, Siyan and Xu, Kai and Zhou, Qiang and Tagliasacchi, Andrea and Xin, Shiqing and Nie{\ss}ner, Matthias and Chen, Baoquan},
    journal   = {ACM Transactions on Graphics (TOG)},
    volume    = {38},
    number    = {4},
    pages     = {84},
    year      = {2019},
    publisher = {ACM}
}
```

In this repository, we use parts of the implementation from Active Neural SLAM, SuperGlue and iGibson. We thank the respective authors for open sourcing their code.