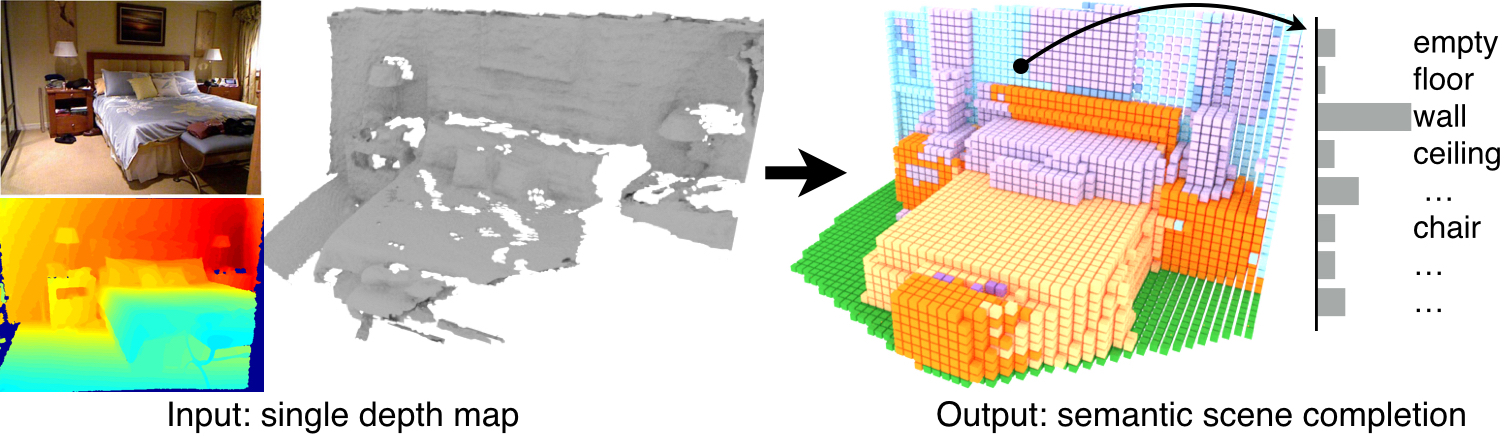

This repo contains training and testing code for our paper on semantic scene completion, the task of producing a complete 3D voxel representation of volumetric occupancy and semantic labels for a scene from a single-view depth map observation. More information about the project can be found in our paper and on the project website.

If you find SSCNet useful in your research, please cite:

    @article{song2016ssc,
        author  = {Song, Shuran and Yu, Fisher and Zeng, Andy and Chang, Angel X and Savva, Manolis and Funkhouser, Thomas},
        title   = {Semantic Scene Completion from a Single Depth Image},
        journal = {arXiv preprint arXiv:1611.08974},
        year    = {2016},
    }

The code and data are organized as follows:

    sscnet
    |-- matlab_code
    |-- caffe_code
    |   |-- caffe3d_suncg
    |   |-- script
    |       |-- train
    |       |-- test
    |-- data
    |   |-- depthbin
    |   |   |-- NYUtrain
    |   |   |   |-- xxxxx_0000.png
    |   |   |   |-- xxxxx_0000.bin
    |   |   |-- NYUtest
    |   |   |-- NYUCADtrain
    |   |   |-- NYUCADtest
    |   |   |-- SUNCGtest
    |   |   |-- SUNCGtrain01
    |   |   |-- SUNCGtrain02
    |   |   |-- ...
    |   |-- eval
    |       |-- NYUtest
    |       |-- NYUCADtest
    |       |-- SUNCGtest
    |-- models
    |-- results

- Download the data: download_data.sh (1.1 G), updated on Sep 27 2017

- Download the pretrained models: download_models.sh (9.9M)

- [optional] Download the training data: download_suncgTrain.sh (16 G)

- [optional] Download the results: download_results.sh (8.2G)
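Once the downloads finish, it can help to confirm that the folders match the layout listed above before running anything. The following is a minimal sketch and not part of the released code: the helper name `missing_dirs` is hypothetical, and the choice of which folders to check is an assumption taken from the directory listing.

```python
from pathlib import Path

# Sub-folders taken from the directory listing in this README.
# Which of them are strictly required is an assumption.
EXPECTED_DIRS = [
    "matlab_code",
    "caffe_code",
    "data/depthbin",
    "data/eval",
    "models",
]


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-folders that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).is_dir()]


if __name__ == "__main__":
    # "sscnet" is the repository root used in the listing above.
    for rel in missing_dirs("sscnet"):
        print("missing:", rel)
```

An empty result means every listed folder was found; anything printed points at a download or extraction step worth repeating.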

- Software Requirements:
  - Requirements for `Caffe` and `pycaffe` (see: Caffe installation instructions)
  - Matlab 2016a or above with vision toolbox
  - OpenCV

- Hardware Requirements: at least 12 GB of GPU memory.

- Install caffe and pycaffe.
  - Modify the config files based on your system. You can use Makefile.config.sscnet_example as a reference.
  - Compile:

        cd caffe_code/caffe3d_suncg
        # Now follow the Caffe installation instructions here:
        # http://caffe.berkeleyvision.org/installation.html
        make -j8 && make pycaffe

- Export path:

      export LD_LIBRARY_PATH=~/build_master_release/lib:/usr/local/cudnn/v5/lib64:~/anaconda2/lib:$LD_LIBRARY_PATH
      export PYTHONPATH=~/build_master_release/python:$PYTHONPATH
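After exporting the paths, one quick way to check that both `PYTHONPATH` and `LD_LIBRARY_PATH` took effect is to try importing pycaffe and look at the error, if any. This is a minimal sketch: the helper name `try_import` is hypothetical, and it only relies on pycaffe being exposed as a module named `caffe`.

```python
import importlib


def try_import(name):
    """Return (True, "") if the module imports, else (False, reason)."""
    try:
        importlib.import_module(name)
    except ImportError as exc:
        # Covers both a missing Python module and a shared library
        # that the dynamic loader could not find.
        return False, str(exc)
    return True, ""


if __name__ == "__main__":
    ok, reason = try_import("caffe")
    if ok:
        print("pycaffe imported successfully")
    else:
        print("pycaffe import failed: " + reason)
```

A message that names a missing `.so` file usually points at `LD_LIBRARY_PATH`, while "No module named caffe" points at `PYTHONPATH`.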

To run the demo:

    cd demo
    python demotest_model.py

This demo runs semantic scene completion on one NYU depth map using our pretrained model and outputs a '.ply' visualization of the result.

- Run the testing script:

      cd caffe_code/script/test
      python test_model.py

- The output results will be stored in the folder `results` in .hdf5 format.

- To test on other test sets (e.g. suncg, nyu, nyucad), you need to modify the paths in `test_model.py`.

- Finetuning on NYU:

      cd caffe_code/train/ftnyu
      ./train.sh

- Training from scratch:

      cd caffe_code/train/trainsuncg
      ./train.sh

- To get more training data from SUNCG, please refer to the SUNCG toolbox.

- After testing, the results should be stored in the folder `results/`.

- You can also download our precomputed results:

      ./download_results.sh

- Run the evaluation code in Matlab:

      matlab &
      cd matlab_code
      evaluation_script('../results/','nyucad')

- The visualization of the results will be stored in `results/nyucad` as ".ply" files.

- Data format
  - Depth map: 16-bit PNG with bit shifting. Please refer to `./matlab_code/utils/readDepth.m` for more information about the depth format.
  - 3D volume: the first three floats store the origin of the 3D volume in world coordinates, followed by 16 floats for the camera pose in world coordinates, followed by the 3D volume encoded with run-length encoding. Please refer to `./matlab_code/utils/readRLEfile.m` for more details.

- Example code to convert NYU ground truth data: `matlab_code/perpareNYUCADdata.m`. This function provides an example of how to convert the NYU ground truth from the 3D CAD model annotations provided by: Guo, Ruiqi, Chuhang Zou, and Derek Hoiem. "Predicting complete 3d models of indoor scenes." You need to download the original annotations by running `download_UIUCCAD.sh`.

- Example code to generate testing data without ground truth and room boundary: `matlab_code/perpareDataTest.m`. This function provides an example of how to generate your own testing data without ground truth labels. It will generate the .bin file with camera pose and an empty volume, without room boundary.
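The two formats described under "Data format" can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation, and it rests on assumptions that should be checked against `readDepth.m` and `readRLEfile.m`: that the depth "bit shifting" is a 3-bit rotation of each 16-bit value with the result in millimetres, that all values are little-endian, that the floats are 32-bit, and that the run-length data is a sequence of `uint32` (value, count) pairs. The function names `decode_depth` and `read_rle_bin` are hypothetical.

```python
import struct

HEADER_BYTES = (3 + 16) * 4  # origin + camera pose, assuming 32-bit floats


def decode_depth(raw, shift=3):
    """Undo the bit shifting of one 16-bit PNG depth value.

    Assumption: the stored value is rotated right by `shift` bits to
    recover a depth in millimetres, which is returned in metres.
    """
    rotated = ((raw >> shift) | (raw << (16 - shift))) & 0xFFFF
    return rotated / 1000.0


def read_rle_bin(data):
    """Parse the bytes of a .bin volume file.

    Returns (origin, cam_pose, labels): 3 floats, 16 floats, and the
    decoded volume as a flat list of voxel values.
    """
    origin = struct.unpack_from("<3f", data, 0)
    cam_pose = struct.unpack_from("<16f", data, 12)
    n_words = (len(data) - HEADER_BYTES) // 4
    words = struct.unpack_from("<%dI" % n_words, data, HEADER_BYTES)
    labels = []
    # Assumed layout: alternating (value, count) pairs.
    for value, count in zip(words[0::2], words[1::2]):
        labels.extend([value] * count)
    return list(origin), list(cam_pose), labels
```

To read a file, pass its contents as bytes, for example `read_rle_bin(open(path, "rb").read())`. The flat list still has to be reshaped to the voxel grid dimensions, which are not stated in this README.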

You can generate more training data from SUNCG with the following steps:

- Download SUNCG data and toolbox from: https://github.com/shurans/SUNCGtoolbox

- Compile the toolbox.

- Download the voxel data for objects (`download_objectvox.sh`) and move the folder under the SUNCG data directory.

- Run the script `genSUNCGdataScript()`. You may need to modify the following paths: `suncgDataPath`, `SUNCGtoolboxPath`, `outputdir`.

Code is released under the MIT License (refer to the LICENSE file for details).