
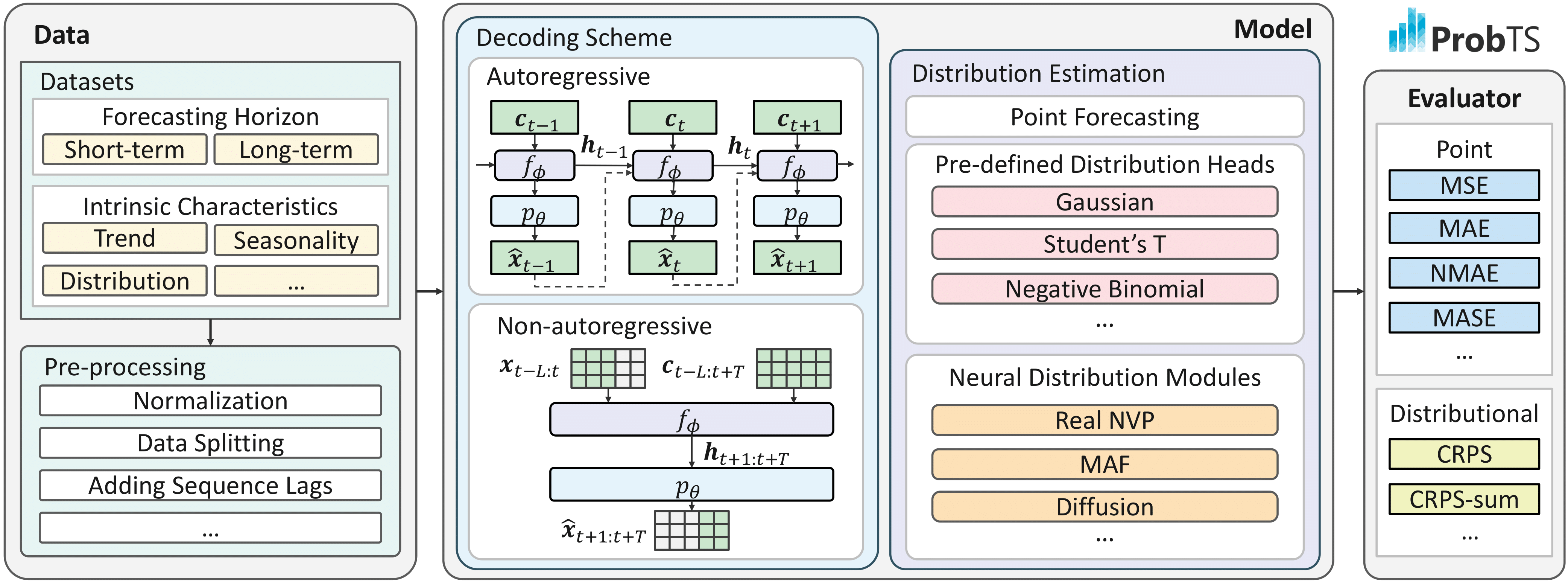

A wide range of industrial applications require precise point and distributional forecasts across diverse prediction horizons. ProbTS serves as a benchmarking tool for understanding how well advanced time-series models meet these forecasting needs. It also sheds light on their strengths and weaknesses in addressing different challenges and reveals possibilities for future research.

To achieve these objectives, ProbTS provides a unified pipeline that implements cutting-edge models from different research threads, including:

- Long-term point forecasting approaches, such as PatchTST and iTransformer.

- Short-term probabilistic forecasting methods, such as TimeGrad and CSDI.

- Recent time-series foundation models for universal forecasting, such as TimesFM and MOIRAI.

Specifically, ProbTS emphasizes the differences in their primary methodological designs, including:

- Supporting point or distributional forecasts

- Using autoregressive or non-autoregressive decoding schemes for multi-step outputs
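The two decoding schemes can be contrasted with a toy sketch. It is illustrative only and is not taken from ProbTS: the function names and the linear-extrapolation "models" below are hypothetical stand-ins for a trained network.

```python
from typing import Callable, List


def autoregressive_decode(
    step_fn: Callable[[List[float]], float],
    context: List[float],
    horizon: int,
) -> List[float]:
    """Predict one step at a time, feeding each prediction back as input."""
    window = list(context)
    outputs = []
    for _ in range(horizon):
        next_value = step_fn(window)
        outputs.append(next_value)
        # Slide the window forward so the next step sees the new prediction.
        window = window[1:] + [next_value]
    return outputs


def non_autoregressive_decode(
    multi_step_fn: Callable[[List[float], int], List[float]],
    context: List[float],
    horizon: int,
) -> List[float]:
    """Predict the whole horizon in a single call."""
    return multi_step_fn(list(context), horizon)


# Hypothetical toy "models": extrapolate the slope of the last two points.
def toy_step(window: List[float]) -> float:
    return window[-1] + (window[-1] - window[-2])


def toy_multi_step(window: List[float], horizon: int) -> List[float]:
    slope = window[-1] - window[-2]
    return [window[-1] + slope * (k + 1) for k in range(horizon)]


context = [1.0, 2.0, 3.0]
ar_forecast = autoregressive_decode(toy_step, context, horizon=3)
nar_forecast = non_autoregressive_decode(toy_multi_step, context, horizon=3)
```

With these toy models both schemes give the same forecast. With learned models they generally differ, because autoregressive decoding conditions each step on earlier predictions, so errors can accumulate over long horizons.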

ProbTS includes both classical time-series models, specializing in long-term point forecasting or short-term distributional forecasting, and recent time-series foundation models that offer zero-shot and arbitrary-horizon forecasting capabilities for new time series.
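To make the difference between point and distributional forecasts concrete, the sketch below scores a single target value both ways. It assumes the absolute error for a point forecast and the standard sample-based CRPS estimator for a set of forecast samples. These are common choices, but the function names are hypothetical and this is not ProbTS's evaluation code.

```python
from typing import Sequence


def absolute_error(point_forecast: float, target: float) -> float:
    """Error of a single point forecast."""
    return abs(point_forecast - target)


def sample_crps(samples: Sequence[float], target: float) -> float:
    """Empirical CRPS from forecast samples: E|X - y| - 0.5 * E|X - X'|."""
    n = len(samples)
    if n == 0:
        raise ValueError("at least one sample is required")
    # Average distance between the samples and the observed value.
    term_obs = sum(abs(x - target) for x in samples) / n
    # Average distance between pairs of samples (spread of the forecast).
    term_spread = sum(abs(a - b) for a in samples for b in samples) / (n * n)
    return term_obs - 0.5 * term_spread


point_score = absolute_error(2.5, target=2.0)
dist_score = sample_crps([1.0, 3.0], target=2.0)
```

When all samples are identical the spread term vanishes and the CRPS reduces to the absolute error, which is why the two kinds of forecast can be compared on the same scale.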

| Model | Original Eval. Horizon | Estimation | Decoding Scheme | Class Path |
|---|---|---|---|---|
| Linear | - | Point | Auto / Non-auto | probts.model.forecaster.point_forecaster.LinearForecaster |
| GRU | - | Point | Auto / Non-auto | probts.model.forecaster.point_forecaster.GRUForecaster |
| Transformer | - | Point | Auto / Non-auto | probts.model.forecaster.point_forecaster.TransformerForecaster |
| Autoformer | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.Autoformer |
| N-HiTS | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.NHiTS |
| NLinear | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.NLinear |
| DLinear | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.DLinear |
| TimesNet | Short- / Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.TimesNet |
| PatchTST | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.PatchTST |
| iTransformer | Long-term | Point | Non-auto | probts.model.forecaster.point_forecaster.iTransformer |
| GRU NVP | Short-term | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.GRU_NVP |
| GRU MAF | Short-term | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.GRU_MAF |
| Trans MAF | Short-term | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.Trans_MAF |
| TimeGrad | Short-term | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.TimeGrad |
| CSDI | Short-term | Probabilistic | Non-auto | probts.model.forecaster.prob_forecaster.CSDI |
| TSDiff | Short-term | Probabilistic | Non-auto | probts.model.forecaster.prob_forecaster.TSDiffCond |

| Model | Any Horizon | Estimation | Decoding Scheme | Class Path |
|---|---|---|---|---|
| Lag-Llama | ✔ | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.LagLlama |
| ForecastPFN | ✔ | Point | Non-auto | probts.model.forecaster.point_forecaster.ForecastPFN |
| TimesFM | ✔ | Point | Auto | probts.model.forecaster.point_forecaster.TimesFM |
| TTM | ✘ | Point | Non-auto | probts.model.forecaster.point_forecaster.TinyTimeMixer |
| Timer | ✔ | Point | Auto | probts.model.forecaster.point_forecaster.Timer |
| MOIRAI | ✔ | Probabilistic | Non-auto | probts.model.forecaster.prob_forecaster.Moirai |
| UniTS | ✔ | Point | Non-auto | probts.model.forecaster.point_forecaster.UniTS |
| Chronos | ✔ | Probabilistic | Auto | probts.model.forecaster.prob_forecaster.Chronos |
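The Class Path column holds dotted import paths. As a rough illustration of how such a string maps to a Python class, the sketch below resolves a dotted path with `importlib`. This is not ProbTS's own loading code; `resolve_class_path` is a hypothetical helper, and a standard-library class is used so that the example runs without ProbTS installed.

```python
import importlib


def resolve_class_path(class_path: str):
    """Split 'package.module.ClassName' and return the class object."""
    module_name, _, class_name = class_path.rpartition(".")
    if not module_name:
        raise ValueError(f"expected a dotted path, got {class_path!r}")
    module = importlib.import_module(module_name)
    return getattr(module, class_name)


# Stand-in for a forecaster class path such as
# "probts.model.forecaster.point_forecaster.PatchTST".
ordered_dict_cls = resolve_class_path("collections.OrderedDict")
instance = ordered_dict_cls(a=1)
```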

Stay tuned for more models to be added in the future.

ProbTS is developed with Python 3.10 and relies on PyTorch Lightning. To set up the environment:

    # Create a new conda environment
    conda create -n probts python=3.10
    conda activate probts

    # Install required packages
    pip install .
    pip uninstall -y probts # recommended to uninstall the root package (optional)

[Optional] For time-series foundation models, you need to install basic packages and additional dependencies:

    # Create a new conda environment
    conda create -n probts_fm python=3.10
    conda activate probts_fm

    # Install required packages
    pip install .

    # Git submodule
    git submodule update --init --recursive

    # Install additional packages for foundation models
    pip install ".[tsfm]"
    pip uninstall -y probts # recommended to uninstall the root package (optional)

    # For MOIRAI, we fix the version of the package for better performance
    cd submodules/uni2ts
    git reset --hard fce6a6f57bc3bc1a57c7feb3abc6c7eb2f264301
    cd ../..  # return to the repository root

Optional, for TSFM reproducibility:

    # Each block below starts from the repository root.

    # For TimesFM, fix the version for reproducibility (optional)
    cd submodules/timesfm
    git reset --hard 5c7b905
    cd ../..  # return to the repository root

    # For Lag-Llama, fix the version for reproducibility (optional)
    cd submodules/lag_llama
    git reset --hard 4ad82d9
    cd ../..  # return to the repository root

    # For TinyTimeMixer, fix the version for reproducibility (optional)
    cd submodules/tsfm
    git reset --hard bb125c14a05e4231636d6b64f8951d5fe96da1dc
    cd ../..  # return to the repository root

- Short-Term Forecasting: We use datasets from GluonTS. Configure the datasets using `--data.data_manager.init_args.dataset {DATASET_NAME}`. You can choose from multivariate or univariate datasets as required.

      # Multivariate Datasets
      ['exchange_rate_nips', 'electricity_nips', 'traffic_nips', 'solar_nips', 'wiki2000_nips']

      # Univariate Datasets
      ['tourism_monthly', 'tourism_quarterly', 'tourism_yearly', 'm4_hourly', 'm4_daily', 'm4_weekly', 'm4_monthly', 'm4_quarterly', 'm4_yearly', 'm5']

- Long-Term Forecasting: To set up the long-term forecasting datasets, follow these steps:

  - Download long-term forecasting datasets from HERE and place them in `./dataset`.
  - [Opt.] Download CAISO and NordPool datasets from DEPTS and place them in `./dataset`.

  Configure the datasets using `--data.data_manager.init_args.dataset {DATASET_NAME}` with the following list of available datasets:

      # Long-term Forecasting
      ['etth1', 'etth2', 'ettm1', 'ettm2', 'traffic_ltsf', 'electricity_ltsf', 'exchange_ltsf', 'illness_ltsf', 'weather_ltsf', 'caiso', 'nordpool']

  Note: When utilizing long-term forecasting datasets, you must explicitly specify the `context_length` and `prediction_length` parameters. For example, to set a context length of 96 and a prediction length of 192, use the following command-line arguments:

      --data.data_manager.init_args.context_length 96 \
      --data.data_manager.init_args.prediction_length 192 \
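To illustrate what `context_length` and `prediction_length` control, the sketch below cuts a series into (context, target) pairs using the values from the note above. It is a minimal illustration under assumed behavior, not ProbTS's data pipeline: `make_windows` and its `stride` argument are hypothetical.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float],
    context_length: int,
    prediction_length: int,
    stride: int = 1,
) -> List[Tuple[Sequence[float], Sequence[float]]]:
    """Slide over the series and return (context, target) pairs."""
    total = context_length + prediction_length
    windows = []
    for start in range(0, len(series) - total + 1, stride):
        # The model observes `context` and is asked to forecast `target`.
        context = series[start:start + context_length]
        target = series[start + context_length:start + total]
        windows.append((context, target))
    return windows


series = list(range(300))  # placeholder data
windows = make_windows(series, context_length=96, prediction_length=192)
first_context, first_target = windows[0]
```

A series shorter than `context_length + prediction_length` yields no windows, which is one reason both values have to be chosen explicitly for the long-term datasets.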


For full reproducibility, we provide the checkpoints for some foundation models as of the paper completion date. Download the checkpoints from here and place them in the ./checkpoints folder.

You can also download the newest checkpoints from the following repositories:

- For `Timer`, download the checkpoints from its official repository (Google Drive or Tsinghua Cloud) and place them under the folder `/path/to/checkpoints/timer/`.
- For `ForecastPFN`, download the checkpoints from its official repository (Google Drive) and place them under the folder `/path/to/checkpoints/forecastpfn/`.
- For `UniTS`, download the checkpoint `units_x128_pretrain_checkpoint.pth` from its official repository and place it under the folder `/path/to/checkpoints/units/`.
- For `Lag-Llama`, download the checkpoint `lag-llama.ckpt` from its HuggingFace repository and place it under the folder `/path/to/checkpoints/lag_llama/`.
- Other models are downloaded automatically from HuggingFace during the first run.

| Model | HuggingFace |
|---|---|
| MOIRAI | Link |
| Chronos | Link |
| TinyTimeMixer | Link |
| TimesFM | Link |

Specify --config with a specific configuration file to reproduce results of point or probabilistic models on commonly used long- and short-term forecasting datasets. Configuration files are included in the config folder.

To run non-universal models:

    bash run.sh

To run foundation models:

    bash run_tsfm.sh

For short-term forecasting scenarios, datasets and the corresponding `context_length` and `prediction_length` are automatically obtained from GluonTS. Use the following command:

    python run.py --config config/path/to/model.yaml \
        --data.data_manager.init_args.path /path/to/datasets/ \
        --trainer.default_root_dir /path/to/log_dir/ \
        --data.data_manager.init_args.dataset {DATASET_NAME}

See the full `DATASET_NAME` list:

    from gluonts.dataset.repository import dataset_names
    print(dataset_names)

For long-term forecasting scenarios, `context_length` and `prediction_length` must be explicitly assigned:

    python run.py --config config/path/to/model.yaml \
        --data.data_manager.init_args.path /path/to/datasets/ \
        --trainer.default_root_dir /path/to/log_dir/ \
        --data.data_manager.init_args.dataset {DATASET_NAME} \
        --data.data_manager.init_args.context_length {CTX_LEN} \
        --data.data_manager.init_args.prediction_length {PRED_LEN}

`DATASET_NAME` options:

    ['etth1', 'etth2', 'ettm1', 'ettm2', 'traffic_ltsf', 'electricity_ltsf', 'exchange_ltsf', 'illness_ltsf', 'weather_ltsf', 'caiso', 'nordpool']

Using ProbTS, we conduct a systematic comparison between studies that focus on point forecasting and those aimed at distributional estimation, across various forecasting horizons and evaluation metrics.
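When sweeping over several horizons, the long-term `run.py` invocation can be assembled programmatically. The sketch below only builds and prints the command lines. The flags are the ones documented in this README, while `build_run_command`, the placeholder paths, and the chosen horizons are assumptions made for illustration.

```python
import shlex
from typing import List, Optional


def build_run_command(
    config: str,
    dataset: str,
    data_path: str,
    log_dir: str,
    context_length: Optional[int] = None,
    prediction_length: Optional[int] = None,
) -> List[str]:
    """Return the argument list for one `python run.py` invocation."""
    cmd = [
        "python", "run.py",
        "--config", config,
        "--data.data_manager.init_args.path", data_path,
        "--trainer.default_root_dir", log_dir,
        "--data.data_manager.init_args.dataset", dataset,
    ]
    # Long-term datasets need both lengths; short-term ones can omit them.
    if context_length is not None:
        cmd += ["--data.data_manager.init_args.context_length", str(context_length)]
    if prediction_length is not None:
        cmd += ["--data.data_manager.init_args.prediction_length", str(prediction_length)]
    return cmd


commands = [
    build_run_command(
        config="config/path/to/model.yaml",  # placeholder path from this README
        dataset="etth1",
        data_path="/path/to/datasets/",
        log_dir="/path/to/log_dir/",
        context_length=96,
        prediction_length=pred_len,
    )
    for pred_len in (96, 192)  # example horizons, chosen for illustration
]
for cmd in commands:
    print(shlex.join(cmd))
```

Each list can be passed to `subprocess.run(cmd, check=True)` from the repository root once the placeholder paths are replaced.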

For detailed information on configuration parameters and model customization, please refer to the documentation.

- Adjust model and data parameters in `run.sh`. Key parameters include:

| Config Name | Type | Description |
|---|---|---|
| `trainer.max_epochs` | integer | Maximum number of training epochs. |
| `model.forecaster.class_path` | string | Forecaster module path (e.g., `probts.model.forecaster.point_forecaster.PatchTST`). |
| `model.forecaster.init_args.{ARG}` | - | Model-specific hyperparameters. |
| `model.num_samples` | integer | Number of samples per distribution during evaluation. |
| `model.learning_rate` | float | Learning rate. |
| `data.data_manager.init_args.dataset` | string | Dataset for training and evaluation. |
| `data.data_manager.init_args.path` | string | Path to the dataset folder. |
| `data.data_manager.init_args.scaler` | string | Scaler type: `identity`, `standard` (z-score normalization), or `temporal` (scale based on average temporal absolute value). |
| `data.data_manager.init_args.context_length` | integer | Length of the observation window (required for long-term forecasting). |
| `data.data_manager.init_args.prediction_length` | integer | Forecasting horizon length (required for long-term forecasting). |
| `data.data_manager.init_args.var_specific_norm` | boolean | Whether to apply per-variate normalization. |
| `data.batch_size` | integer | Batch size. |
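The `scaler` and `var_specific_norm` options can be read as follows. This is a simplified sketch based only on the descriptions in the table; the function names are hypothetical and the exact formulas in ProbTS (for example, how small scales are guarded) may differ.

```python
from statistics import fmean, pstdev
from typing import Callable, List

EPS = 1e-8  # assumed guard against division by zero


def identity_scale(values: List[float]) -> List[float]:
    """`identity`: leave the values unchanged."""
    return list(values)


def standard_scale(values: List[float]) -> List[float]:
    """`standard`: z-score normalization."""
    mean = fmean(values)
    std = max(pstdev(values), EPS)
    return [(v - mean) / std for v in values]


def temporal_scale(values: List[float]) -> List[float]:
    """`temporal`: divide by the mean absolute value over time."""
    scale = max(fmean([abs(v) for v in values]), EPS)
    return [v / scale for v in values]


def scale_columns(
    columns: List[List[float]],
    scale_fn: Callable[[List[float]], List[float]],
    var_specific_norm: bool = True,
) -> List[List[float]]:
    """Scale each variate on its own, or all variates with shared statistics."""
    if var_specific_norm:
        return [scale_fn(col) for col in columns]
    # Shared statistics: scale the concatenation, then split it back.
    flat = scale_fn([v for col in columns for v in col])
    out, pos = [], 0
    for col in columns:
        out.append(flat[pos:pos + len(col)])
        pos += len(col)
    return out


per_variate = scale_columns([[1.0, -1.0, 4.0], [10.0, 30.0]], temporal_scale)
shared = scale_columns([[1.0, 3.0], [5.0, 7.0]], temporal_scale, var_specific_norm=False)
```

Per-variate scaling brings variates with very different magnitudes onto a comparable scale, whereas shared statistics preserve their relative sizes.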

- To print the full pipeline configuration to a file:

      python run.py --print_config > config/pipeline_config.yaml

Special thanks to the following repositories for their open-sourced code bases and datasets.

Classical Time-series Models

- Autoformer

- N-HiTS

- NLinear, DLinear

- TimesNet

- RevIN

- PatchTST

- iTransformer

- GRU NVP, GRU MAF, Trans MAF, TimeGrad

- CSDI

- TSDiff

Time-series Foundation Models

If you have used ProbTS for research or production, please cite it as follows.

    @article{zhang2023probts,
      title={{ProbTS}: Benchmarking Point and Distributional Forecasting across Diverse Prediction Horizons},
      author={Zhang, Jiawen and Wen, Xumeng and Zhang, Zhenwei and Zheng, Shun and Li, Jia and Bian, Jiang},
      journal={arXiv preprint arXiv:2310.07446},
      year={2023}
    }