This is the PyTorch implementation of QAFE-Net: Quality Assessment of Facial Expressions with Landmark Heatmaps (arXiv version).

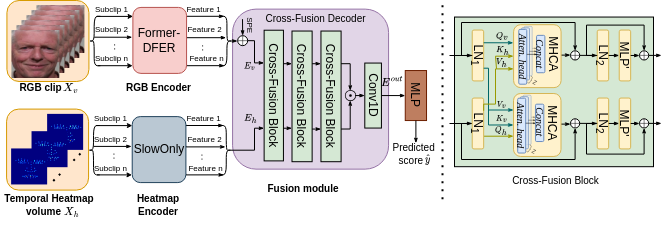

We propose a novel landmark-guided approach, QAFE-Net, that combines temporal landmark heatmaps with RGB data to capture small facial muscle movements that are encoded and mapped to severity scores.

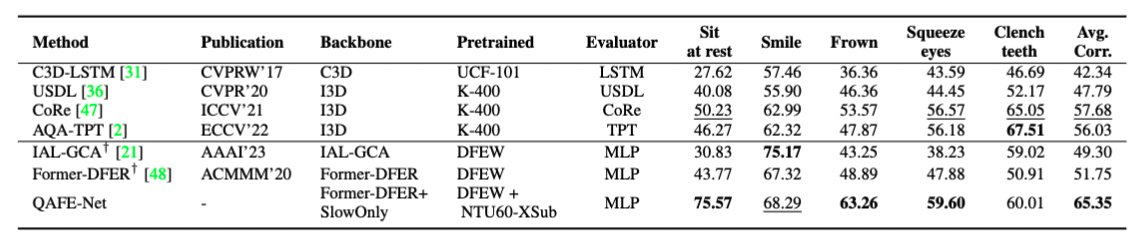

PFED5 is a Parkinson’s disease (PD) dataset for facial expression quality assessment. Videos were recorded in clinical settings with a single RGB camera and show 41 PD patients performing five different facial expressions: sit at rest, smile, frown, squeeze eyes tightly, and clench teeth. A trained rater assigned each expression a score between 0 and 4 according to its level of severity, following the MDS-UPDRS protocols.

pytorch >= 1.3.0, tensorboardX, cv2, scipy, einops, torch_videovision

1: Download PFED5 dataset. To access the PFED5 dataset, please complete and sign the PFED5 request form and forward it to shuchao.duan@bristol.ac.uk. By submitting your application, you acknowledge and confirm that you have read and understood the relevant notice. Upon receiving your request, we will promptly respond with the necessary link and guidelines. Please note that ONLY faculty members can request for their team to be granted access to the dataset.

2: Download UNBC-McMaster dataset.

3: We adopt SCRFD from InsightFace for face detection and landmark estimation, and the Albumentations library for normalising the landmark positions to the cropped face regions.

4: Generate landmark heatmaps for corresponding video clips.
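As an illustration of step 4, the sketch below renders one 2D Gaussian heatmap per landmark for every frame of a clip. It is a minimal example under stated assumptions, not the repository's own generation script: the function names, the heatmap resolution (56), the Gaussian width (`sigma=1.5`), and the 112x112 crop size are all hypothetical choices, and the landmarks are assumed to be already expressed in cropped-face pixel coordinates (step 3).

```python
import numpy as np


def landmarks_to_heatmaps(landmarks, crop_size, heatmap_size=56, sigma=1.5):
    """Render one Gaussian heatmap per landmark (hypothetical helper).

    landmarks: (K, 2) array of (x, y) pixel coordinates inside the cropped face.
    crop_size: (width, height) of the cropped face region in pixels.
    Returns a (K, heatmap_size, heatmap_size) array with values in (0, 1].
    """
    landmarks = np.asarray(landmarks, dtype=np.float32)
    crop_w, crop_h = crop_size
    # Rescale from crop pixel coordinates to heatmap grid coordinates.
    scale = np.array([heatmap_size / crop_w, heatmap_size / crop_h], dtype=np.float32)
    centres = landmarks * scale  # (K, 2)

    # ys varies along rows, xs along columns; both have shape (H, W).
    ys, xs = np.mgrid[0:heatmap_size, 0:heatmap_size].astype(np.float32)
    dx = xs[None] - centres[:, 0, None, None]
    dy = ys[None] - centres[:, 1, None, None]
    return np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma ** 2))


def clip_to_heatmaps(clip_landmarks, crop_size, **kwargs):
    """Stack per-frame heatmaps for a clip of shape (T, K, 2) into (T, K, H, W)."""
    return np.stack(
        [landmarks_to_heatmaps(frame, crop_size, **kwargs) for frame in clip_landmarks]
    )


# Toy clip: 4 identical frames with 2 landmarks each, in a 112x112 face crop.
clip = np.array([[[56.0, 56.0], [28.0, 84.0]]] * 4)
heatmaps = clip_to_heatmaps(clip, crop_size=(112, 112), heatmap_size=56)
print(heatmaps.shape)  # (4, 2, 56, 56)
```

Each heatmap peaks at the rescaled landmark position, so stacking the frames gives a temporal volume that a heatmap encoder can consume alongside the RGB clip.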

Run `python main_PFED5.py --gpu 0,1 --batch_size 4 --epoch 100`.
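For readers unfamiliar with this style of command line, here is a hypothetical sketch of how flags like `--gpu`, `--batch_size`, and `--epoch` are commonly parsed. The meanings are inferred from the flag names only (a comma-separated list of GPU ids, the batch size, and the number of training epochs); the actual argument handling in `main_PFED5.py` may differ.

```python
import argparse
import os


def parse_args(argv=None):
    # Hypothetical parser: flag names mirror the command above,
    # defaults and help texts are assumptions.
    parser = argparse.ArgumentParser(description="Hypothetical training flags")
    parser.add_argument("--gpu", type=str, default="0", help="comma-separated GPU ids")
    parser.add_argument("--batch_size", type=int, default=4, help="clips per batch")
    parser.add_argument("--epoch", type=int, default=100, help="number of training epochs")
    return parser.parse_args(argv)


args = parse_args(["--gpu", "0,1", "--batch_size", "4", "--epoch", "100"])

# Restrict the visible devices before any CUDA context is created.
os.environ["CUDA_VISIBLE_DEVICES"] = args.gpu
gpu_ids = [int(i) for i in args.gpu.split(",")]
print(gpu_ids, args.batch_size, args.epoch)
```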

Download the pretrained weights (RGB encoder and heatmap encoder) from Google Drive and put the entire `pretrain` folder under the `models` folder.

```
- models/pretrain/
    FormerDFER-DFEWset1-model_best.pth
    pose_only.pth
```
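Before starting training, it can help to confirm that both weight files are where the layout above expects them. The following check is a small convenience sketch; `missing_weights` and `EXPECTED_WEIGHTS` are hypothetical names that are not part of the repository.

```python
from pathlib import Path

# File names taken from the folder layout above.
EXPECTED_WEIGHTS = ("FormerDFER-DFEWset1-model_best.pth", "pose_only.pth")


def missing_weights(models_dir="models"):
    """Return the expected weight files that are absent from <models_dir>/pretrain."""
    pretrain_dir = Path(models_dir) / "pretrain"
    return [name for name in EXPECTED_WEIGHTS if not (pretrain_dir / name).is_file()]


missing = missing_weights("models")
if missing:
    print("Missing pretrained weights:", ", ".join(missing))
else:
    print("All pretrained weights found.")
```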

Comparative Spearman's Rank Correlation results of QAFE-Net with SOTA AQA methods on PFED5
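For reference, Spearman's rank correlation is the Pearson correlation of the rank-transformed predictions and ground-truth scores. The sketch below computes it in plain Python with average ranks for ties, which matters here because the severity labels only take the values 0 to 4. The function names and the toy scores are illustrative assumptions, not the evaluation code or data behind the reported results; `scipy.stats.spearmanr` offers an equivalent library routine.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(predicted, target):
    """Spearman's rho: Pearson correlation between the two rank vectors."""
    rp, rt = average_ranks(predicted), average_ranks(target)
    n = len(rp)
    mean_p, mean_t = sum(rp) / n, sum(rt) / n
    cov = sum((a - mean_p) * (b - mean_t) for a, b in zip(rp, rt))
    var_p = sum((a - mean_p) ** 2 for a in rp)
    var_t = sum((b - mean_t) ** 2 for b in rt)
    if var_p == 0.0 or var_t == 0.0:
        return float("nan")  # undefined when one input is constant
    return cov / math.sqrt(var_p * var_t)


# Toy example: predicted severity scores versus rater labels (made-up values).
predicted = [0.2, 1.1, 1.9, 3.2, 3.8]
labels = [0, 1, 1, 3, 4]
rho = spearman_rho(predicted, labels)
print(round(rho, 4))
```

Because only the ordering matters, a model can score well on this metric even if its raw outputs are offset from the 0 to 4 scale, as long as it ranks the clips by severity in the same order as the rater.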

If you find our work useful in your research, please consider giving it a star ⭐ and citing our paper in your work:

@misc{duan2023qafenet,
  title={QAFE-Net: Quality Assessment of Facial Expressions with Landmark Heatmaps},
  author={Shuchao Duan and Amirhossein Dadashzadeh and Alan Whone and Majid Mirmehdi},
  year={2023},
  eprint={2312.00856},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

We would like to gratefully acknowledge the contribution of the Parkinson’s study participants and extend special appreciation to Tom Whone for his additional labelling efforts. The clinical trial from which the video data of the people with Parkinson’s was sourced was funded by Parkinson’s UK (Grant J-1102), with support from Cure Parkinson’s.

Our implementation and experiments are built on top of Former-DFER. We thank the authors who made their code public, which tremendously accelerated our project progress.