Consulting Project

I consulted for a project using machine learning and neural networks to model and predict resource usage. The company offers startups and new developers a simple platform to manage their applications and cloud resources in one place. It also serves as a marketplace for additional applications and services, and gives users an easy way to work with multiple APIs in one location.

As a consultant, I first wanted to understand Company’s needs so I could help them reach their goals. Company wanted a clear picture of its current network resource usage and provisioning. It also wanted to move towards a machine learning approach to make real-time usage predictions and automate the scheduling of provisions. This was especially important because staff were spending valuable hours setting limits manually, and they kept those limits continuously high to minimize downtime (i.e., system crashes). Companies like this one have to balance two competing needs: minimizing the cost of paying for resources (CPU bandwidth, for example) and minimizing downtime, which usually means slightly over-provisioning. Because of an NDA, I used a publicly available dataset for my data analysis, and the same over-provisioning pattern was evident there. My role as a consultant was therefore to develop a model that provides a more intelligent prediction of resource usage.

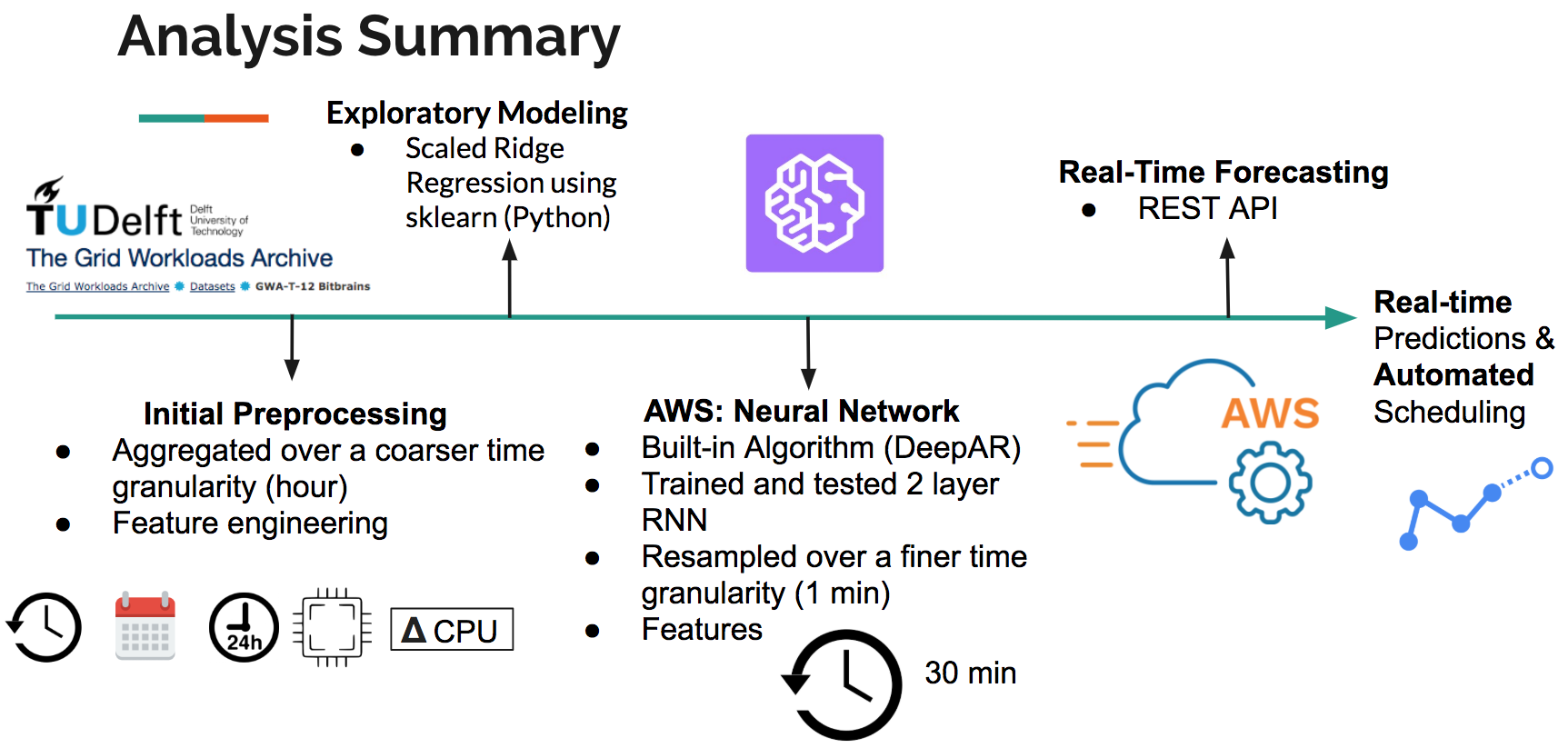

Here is how I translated Company’s business objectives into an actionable deliverable.

First, I used time-series analysis, advanced regression techniques, and time series cross-validation in Python (using sklearn) to characterize resource usage as well as to identify important predictive features.
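As a rough illustration of what time series cross-validation with a regularized regression looks like in sklearn, here is a minimal sketch. It is not the project code: the synthetic hourly CPU series, the 24-lag feature set, the helper names `make_lag_features` and `cross_validate`, and the `alpha` value are all assumptions chosen for the example.

```python
import numpy as np
from sklearn.linear_model import Lasso
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import TimeSeriesSplit


def make_lag_features(series, n_lags):
    """Build a design matrix whose columns are the previous n_lags values."""
    X = np.column_stack(
        [series[lag : len(series) - n_lags + lag] for lag in range(n_lags)]
    )
    y = series[n_lags:]  # value to predict for each row of X
    return X, y


def cross_validate(series, n_lags=24, n_splits=5, alpha=0.01):
    """Score a lasso model on expanding-window splits that never look ahead."""
    X, y = make_lag_features(series, n_lags)
    scores = []
    for train_idx, test_idx in TimeSeriesSplit(n_splits=n_splits).split(X):
        model = Lasso(alpha=alpha, max_iter=10_000)
        model.fit(X[train_idx], y[train_idx])
        predictions = model.predict(X[test_idx])
        scores.append(mean_absolute_error(y[test_idx], predictions))
    # Coefficients from the last (largest) training fold hint at useful lags.
    return scores, model.coef_


# Synthetic stand-in for one VM: 30 days of hourly CPU usage with a daily cycle.
rng = np.random.default_rng(0)
hours = np.arange(24 * 30)
cpu = 50 + 20 * np.sin(2 * np.pi * hours / 24) + rng.normal(0, 2, hours.size)

mae_per_fold, coefs = cross_validate(cpu)
print("MAE per fold:", np.round(mae_per_fold, 2))
print("Lags with non-zero weight:", np.flatnonzero(coefs))
```

`TimeSeriesSplit` always trains on earlier observations and tests on later ones, which is why it suits forecasting better than shuffled k-fold. The lasso penalty shrinks unhelpful lag coefficients to zero, which is one simple way to read off candidate predictive features.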

Next, I implemented DeepAR, a recently developed built-in algorithm from Amazon SageMaker (hosted on AWS), to help Company shift towards real-time analytics. Amazon SageMaker DeepAR is a supervised learning algorithm that forecasts time series using recurrent neural networks (RNNs).
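DeepAR reads its training data as JSON Lines, one JSON object per time series, each with a `start` timestamp and a `target` array of values. The sketch below shows one way to produce that format using only the standard library. The VM names, timestamps, values, and the helper names `to_deepar_record` and `to_json_lines` are made up for illustration, and the upload to S3 is left out.

```python
import json
from datetime import datetime


def to_deepar_record(start, values):
    """Return one JSON string with the 'start' and 'target' fields DeepAR reads."""
    record = {
        "start": start.strftime("%Y-%m-%d %H:%M:%S"),
        "target": [float(v) for v in values],
    }
    return json.dumps(record)


def to_json_lines(series_by_vm):
    """Join one record per time series into a JSON Lines payload."""
    return "\n".join(
        to_deepar_record(start, values) for start, values in series_by_vm.values()
    )


# Hypothetical example: two VMs, three hourly CPU readings each.
example = {
    "vm-001": (datetime(2013, 8, 1), [12.5, 14.0, 13.2]),
    "vm-002": (datetime(2013, 8, 1), [3.1, 2.9, 4.4]),
}
payload = to_json_lines(example)
print(payload)
```

The resulting string can be written to a file and placed in the S3 location that the training job is configured to read from.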

I had not used Amazon SageMaker before, but the agreement required me to use it.

- Manifold_TimeSeries_Models.py: Models 500 time series using sklearn. Techniques include feature engineering, rolling window averages (smoothing), linear regression, scaled regression, and lasso and ridge regression to perform feature selection and reduce overfitting.

- Manifold_AWS_DeepAR.py: AWS Jupyter notebook for SageMaker DeepAR. Includes code to download and read in data, format it into JSON strings, push it to an S3 bucket, create and train a recurrent neural network (RNN), and visualize model predictions.

- Manifold_Visualize_Initial_Explore.py.ipynb: Includes some initial visualizations of the data (aggregated and resampled hourly) using Python and matplotlib.

- Timeseries_FirstLook_1month.py.ipynb: Contains code for exploring time series from 1 month and 100 VMs. Includes initial models using ARIMA, SARIMAX, and Holt-Winters (smoothing), some visualizations, and stationarity tests.

- HyperparameterTuning_DeepAR_Example.ipynb: Example code for a hyperparameter tuning request in AWS SageMaker.

- Data are publicly available from The Grid Workloads Archive (Bitbrains; I used the Rnd traces).

- Helpful blog and source of some code here
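The hourly resampling and rolling-window smoothing mentioned in the file descriptions above can be sketched with pandas as follows. This is an illustrative example, not code from the notebooks: the 5-minute sampling interval, the random data, the 6-hour window, and the column names are all assumptions.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for one VM trace: 48 hours of readings every 5 minutes.
index = pd.date_range("2013-08-01", periods=12 * 48, freq="5min")
rng = np.random.default_rng(1)
cpu = pd.Series(rng.uniform(0, 100, index.size), index=index, name="cpu_pct")

# Aggregate to hourly means, then smooth with a 6-hour rolling average.
hourly = cpu.resample("60min").mean()
smoothed = hourly.rolling(window=6, min_periods=1).mean()

# Shift by one step so each feature row only uses values from the past.
features = pd.DataFrame(
    {
        "hourly": hourly,
        "rolling_6h_prev": smoothed.shift(1),
        "lag_1h": hourly.shift(1),
    }
).dropna()
print(features.head())
```

Shifting the rolling average and the lag by one step keeps the current hour's value out of its own predictors, which matters when these columns feed a regression model.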