In this project, we created a trainer with Anaconda, Python 3.5, Keras, TensorFlow, NVIDIA CUDA, a Titan X GPU, and Udacity's Self-Driving Car Simulator. The trainer uses a previously trained neural network to teach a new neural network to drive a simulated car on the same track used in Project 3. This time, however, the new network learns on its own from real-time image data sent by the simulator in autonomous mode, while the trained network reinforces corrective behavior. This project was inspired by DeepMind (https://deepmind.com/), in particular their human-level control through deep reinforcement learning (https://deepmind.com/research/dqn/) and their AlphaGo training method (https://deepmind.com/research/alphago/).

A detailed report of this project can be found in the project PDF: MLND-Capstone-Project.pdf

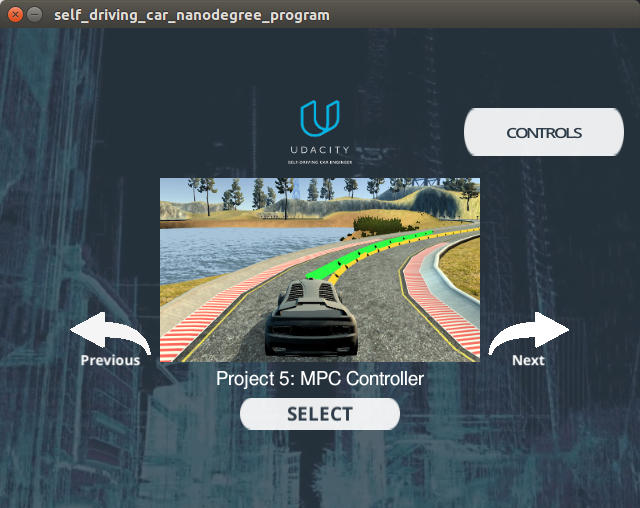

When in autonomous mode, the Udacity simulator for Project 3 sends the vehicle's front camera images, throttle/brake combination, and steering angle, but it does not provide the waypoints or the vehicle orientation needed to calculate the cross-track error (CTE). We found that the simulator for Term 2 Project 5, Model Predictive Control (MPC), does provide these features, but it lacks the front camera images we need for training the models. Since the Udacity simulator is open source, we forked a copy and made the modifications necessary to include the front camera image. The modification is detailed here: https://github.com/diyjac/self-driving-car-sim/commit/6d047cace632f4ae22681830e9f6163699f8a095, and the fork is available on GitHub: https://github.com/diyjac/self-driving-car-sim. I have included the binary executables at the following Google Drive shared links for your evaluation enjoyment:

- Windows: https://drive.google.com/file/d/0B8uuDpajc_uGVVRYczFyQnE3Rkk/view?usp=sharing

- MacOS: https://drive.google.com/file/d/0B8uuDpajc_uGTnJvV0RXbjRMcDA/view?usp=sharing

- Linux: https://drive.google.com/file/d/0B8uuDpajc_uGTld2LXl3VFYzZHc/view?usp=sharing
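With waypoints and vehicle orientation available from the modified simulator, the CTE can be computed for every frame. The sketch below is illustrative only and assumes waypoints arrive as (x, y) map coordinates, the car pose as (px, py), and the heading psi in radians measured counter-clockwise from the map x axis. The function names are hypothetical and are not taken from the project's code. It transforms the waypoints into the car's frame and linearly interpolates the path's lateral offset at the car's position; fitting a polynomial through the transformed waypoints is a common, smoother alternative.

```python
import math
from typing import Sequence, Tuple


def to_vehicle_frame(px: float, py: float, psi: float,
                     wx: float, wy: float) -> Tuple[float, float]:
    """Map a world waypoint into the car frame (x forward, y to the car's left)."""
    dx, dy = wx - px, wy - py
    return (dx * math.cos(psi) + dy * math.sin(psi),
            -dx * math.sin(psi) + dy * math.cos(psi))


def cross_track_error(px: float, py: float, psi: float,
                      waypoints: Sequence[Tuple[float, float]]) -> float:
    """Lateral offset of the reference path beside the car (positive = path is to the left)."""
    local = [to_vehicle_frame(px, py, psi, wx, wy) for wx, wy in waypoints]
    for (x0, y0), (x1, y1) in zip(local, local[1:]):
        # Find the waypoint segment that passes beside the car (local x == 0).
        if min(x0, x1) <= 0.0 <= max(x0, x1):
            if x1 == x0:
                return (y0 + y1) / 2.0
            t = -x0 / (x1 - x0)  # fraction along the segment where x == 0
            return y0 + t * (y1 - y0)
    raise ValueError("car is not alongside any waypoint segment")
```

For a car at the origin facing along +x with a straight path at y = 1, `cross_track_error(0.0, 0.0, 0.0, [(-5.0, 1.0), (5.0, 1.0)])` gives 1.0. The sign convention is an assumption and flips if the simulator uses a left-handed coordinate system.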

This project uses Python 3.6, installed using Anaconda on Ubuntu Linux, for our training system. Clone the GitHub repository, and use the Udacity CarND-Term1-Starter-Kit to get most of the dependencies. In addition, we use NVIDIA CUDA GPU acceleration for Keras/TensorFlow model training. While it is possible to use AWS with GPU and TensorFlow support, we have not tried it. This article https://alliseesolutions.wordpress.com/2016/09/08/install-gpu-tensorflow-from-sources-w-ubuntu-16-04-and-cuda-8-0/ provides a good walkthrough on how to install CUDA 8.0 and TensorFlow 1.2. We are using Keras 2.0.5 for creating our NVIDIA and custom steering models.

    git clone https://github.com/diyjac/MLND-Capstone-Project.git

autoTrainer is a Python-based CLI. You can list its options by using --help:

    $ python autoTrain.py --help
    Using TensorFlow backend.
    usage: python autoTrain.py [options] gen_n_model gen_n_p_1_model

    DIYJAC's Udacity MLND Capstone Project: Automated Behavioral Cloning

    positional arguments:
      inmodel               gen0 model or another pre-trained model
      outmodel              gen1 model or another target model

    optional arguments:
      -h, --help            show this help message and exit
      --maxrecall MAXRECALL
                            maximum samples to collect for training session,
                            defaults to 500
      --speed SPEED         cruz control speed, defaults to 30

NOTE: The outmodel is a directory containing the model.py class library that defines the target model. An example of this file can be found in test/model.py.

The test directory contains an NVIDIA 64x64 model that is described below in the CNN Model section.
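To make the two positional arguments concrete, here is a hedged sketch of how a trainer could parse this command line and import the model.py found in the outmodel directory. It is reconstructed from the --help text and the note above, not from the actual autoTrain.py source: `build_parser` and `load_target_model_module` are hypothetical names, and the numeric types for --maxrecall and --speed are assumptions because the help text only gives their defaults.

```python
import argparse
import importlib.util
import os
from types import ModuleType


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical reconstruction of the autoTrain.py CLI from its --help text."""
    parser = argparse.ArgumentParser(
        prog="autoTrain.py",
        description="DIYJAC's Udacity MLND Capstone Project: Automated Behavioral Cloning",
    )
    parser.add_argument("inmodel", help="gen0 model or another pre-trained model")
    parser.add_argument("outmodel", help="gen1 model or another target model")
    # Assumed types: the help text only states the default values.
    parser.add_argument("--maxrecall", type=int, default=500,
                        help="maximum samples to collect for training session, defaults to 500")
    parser.add_argument("--speed", type=float, default=30.0,
                        help="cruise control speed, defaults to 30")
    return parser


def load_target_model_module(outmodel_dir: str) -> ModuleType:
    """Import <outmodel_dir>/model.py so the trainer can build the target model from it."""
    path = os.path.join(outmodel_dir, "model.py")
    if not os.path.isfile(path):
        raise FileNotFoundError(f"no model.py found in {outmodel_dir!r}")
    spec = importlib.util.spec_from_file_location("target_model", path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)  # executes model.py, defining its classes and functions
    return module
```

For the sample command below, `build_parser().parse_args(["gen0/model.h5", "test"])` yields `inmodel="gen0/model.h5"` and `outmodel="test"`, and `load_target_model_module("test")` would import test/model.py. Loading by file path keeps each target model's definition in its own directory without requiring it to be an installed package.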

To train a test generation, use this sample:

    $ python autoTrain.py gen0/model.h5 test

To get the current running status of the training, use the provided Jupyter Notebook: ./visualization/training_visualization.ipynb

    $ cd visualization
    $ jupyter notebook training_visualization.ipynb

After training, you can verify that the new target model is working by using the testdrive.py script:

    $ python testdrive.py --help
    usage: testdrive.py [-h] [--speed SPEED] [--laps LAPS] model [image_folder]

    Remote Driving

    positional arguments:
      model          Path to model h5 file. Model should be on the same path.
      image_folder   Path to image folder. This is where the images from the run
                     will be saved.

    optional arguments:
      -h, --help     show this help message and exit
      --speed SPEED  Cruse Control Speed setting, default 30Mph
      --laps LAPS    Number of laps to drive around the track, default 1 lap

Example (assuming that test/model-session11.h5 was selected as the best ACTE):

    python testdrive.py test/model-session11.h5

For Project 3, Udacity created a car simulator based on the Unity engine that uses game physics to closely approximate real driving. Students use behavioral cloning to train the simulated car to drive around a test track. The students' tasks are:

- Collect left, center and right camera images and steering angle data by driving the simulated car

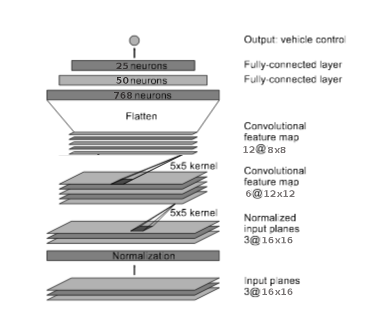

- Design and create a CNN regression model architecture for learning the steering angle based on the center camera image

- Train it to drive the simulated car

- Test the trained model in the simulator in Autonomous mode.

- Repeat 1-4 until the trained model can drive the simulated car repeatedly on the test track.

But training in batch mode is tough! Steps 1 to 3 can take hours before you can try the model in the simulator in autonomous mode, only to find out that:

- not enough data was collected
- the data collected was not the right kind
- key areas of the track were not learned correctly by the model
- the model was not designed or created correctly.

Clearly, the data collection, model design, training and testing cycle needs to be faster. The goal of this project is to design an automated system that iterates on model design, data gathering and training in a tighter automated loop, where successively improved models can be used to train even more advanced models. To accomplish this goal, the tasks involved are the following:

1. Evaluate the current Udacity Self-Driving Car Simulator for the task
2. Design and create the automated training system and its training method
3. Select a trained CNN model as the initial generation (g=0)
4. Select target CNN models for training as the next generation (g+1)
5. Train using the automated training system
6. Test the target CNN model's effectiveness
7. Discard the target CNN model and repeat steps 4-6 if it does not meet the metric criteria
8. Repeat steps 4-7 successively, using CNN model generation g to create generation g+1

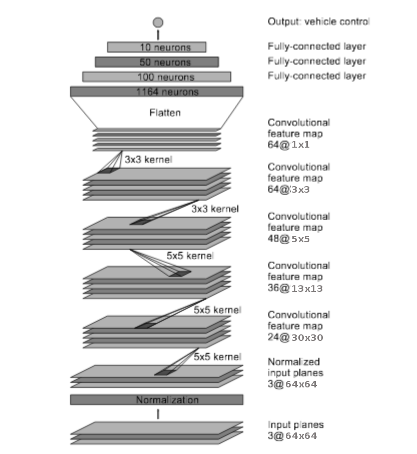
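Steps 3-8 form a simple generational loop. The sketch below is a minimal, hypothetical rendering of it: `make_target`, `train` and `test_drive` stand in for the real model construction, automated training session and simulator test drive, and it assumes ACTE means the mean absolute cross-track error over a test drive. The `max_acte` threshold and the `max_attempts` retry limit are illustrative parameters, not values from this project.

```python
from statistics import mean
from typing import Callable, List, Sequence, TypeVar

M = TypeVar("M")  # any model object (for example a Keras model); kept generic here


def average_cte(cte_samples: Sequence[float]) -> float:
    """Mean absolute cross-track error over one test drive (assumed ACTE definition)."""
    if not cte_samples:
        raise ValueError("need at least one CTE sample")
    return mean(abs(c) for c in cte_samples)


def train_generations(
    gen0: M,
    make_target: Callable[[int], M],
    train: Callable[[M, M], None],
    test_drive: Callable[[M], Sequence[float]],
    max_acte: float,
    generations: int,
    max_attempts: int = 3,
) -> List[M]:
    """Steps 3-8: each accepted generation g becomes the trainer for generation g+1."""
    lineage = [gen0]                   # step 3: generation 0
    trainer = gen0
    for g in range(1, generations + 1):
        for _ in range(max_attempts):
            target = make_target(g)    # step 4: fresh target model
            train(trainer, target)     # step 5: automated training session
            acte = average_cte(test_drive(target))  # step 6: test effectiveness
            if acte <= max_acte:
                break                  # meets the metric criteria; keep this target
            # step 7: otherwise discard this target and try again
        else:
            raise RuntimeError(f"generation {g} failed after {max_attempts} attempts")
        lineage.append(target)
        trainer = target               # step 8: generation g trains generation g+1
    return lineage
```

Returning the whole lineage makes it easy to keep every accepted generation around, for example to compare their ACTE values afterwards.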

We will start by using our trained NVIDIA model as generation 0 (g=0) and modified NVIDIA target models as generations 1-3 to see whether these successive models can drive the car well around the lake test track. Below are the models used in this evaluation and their generations:

| Model | Description | g |

|---|---|---|

| NVIDIA | Pre-Trained NVIDIA Model from SDC Project 3 | 0 |

| NVIDIA | 64x64 model - no weights | 1 |

| NVIDIA | 64x64 model - no weights | 2 |

| NVIDIA | 64x64 model - no weights | 3 |

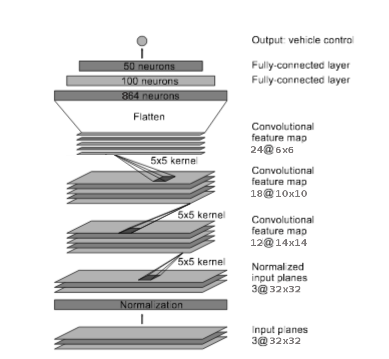

| Custom 1 | 32x32 model - no weights | 4 |

| Custom 2 | 16x16 model - no weights | 5 |

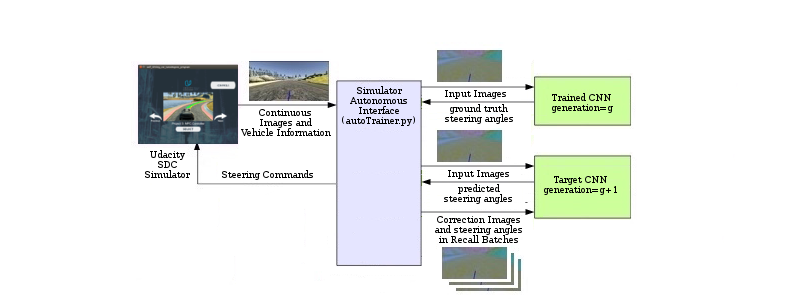

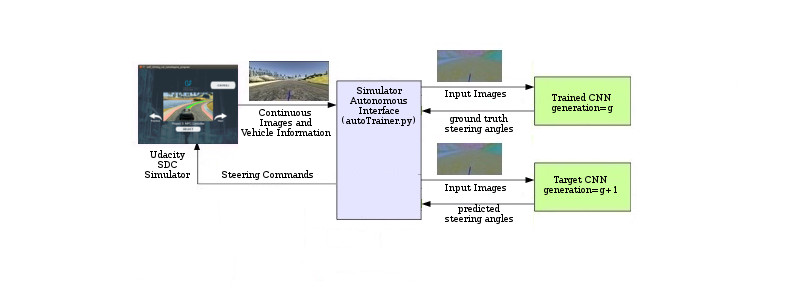

As stated before, the goal of this project is to design an automated system that trains target convolutional neural network (CNN) steering models using previously trained CNN models. Below is a diagram of the architecture of the automated system and how it trains the target CNN. It is similar to the one used for our Project 3 Agile Trainer (https://github.com/diyjac/AgileTrainer), where a continuous stream of images is stored in a randomized replay buffer for training batches; but now, instead of manual intervention by a human, we use a pre-trained model to handle corrective steering actions. Similar to reinforcement learning, there is an initial training period in which, most of the time, the target model just receives training at higher learning rates. As time elapses, we reduce the training and start letting the target model predict the steering; any mistakes are corrected by the trained model and added to the target model's training data for refinement.

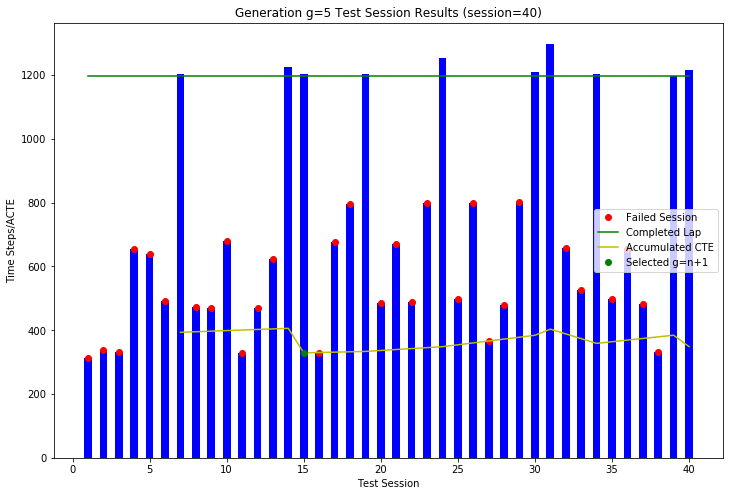

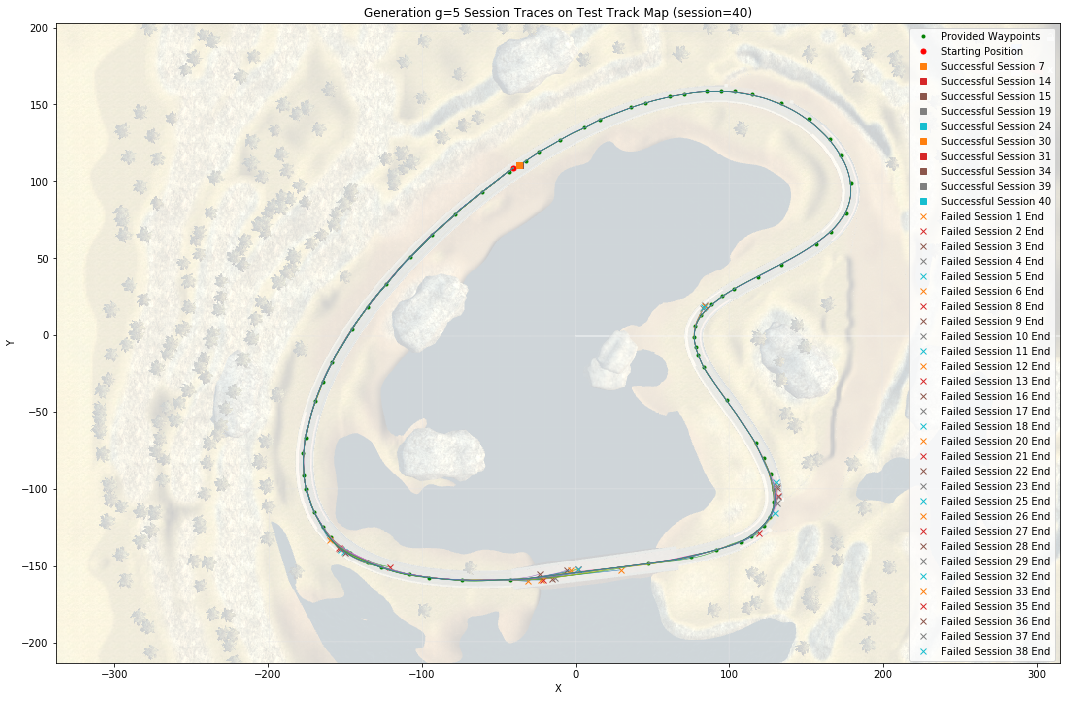
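The replay buffer and the gradual hand-over from trainer to target can be illustrated in a few lines. This is a hedged sketch of the idea described above, not the project's implementation: `ReplayBuffer`, `target_drive_probability` and `drive_step` are hypothetical names, and the linear ramp, the 0.05 steering tolerance, and the warm-up and annealing lengths are illustrative assumptions. The learning-rate schedule mentioned above is left out to keep the example short.

```python
import random
from collections import deque
from typing import Any, Callable, Deque, List, Tuple

Sample = Tuple[Any, float]  # (camera image, steering angle label)


class ReplayBuffer:
    """Bounded buffer: the oldest samples are evicted first; batches are drawn at random."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.samples: Deque[Sample] = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, image: Any, steering: float) -> None:
        self.samples.append((image, steering))

    def sample(self, batch_size: int) -> List[Sample]:
        # Random sampling without replacement breaks up the correlation between consecutive frames.
        return self.rng.sample(list(self.samples), min(batch_size, len(self.samples)))

    def __len__(self) -> int:
        return len(self.samples)


def target_drive_probability(step: int, warmup_steps: int, anneal_steps: int,
                             max_prob: float = 0.9) -> float:
    """0 during warm-up, then a linear ramp up to max_prob."""
    if step < warmup_steps:
        return 0.0
    return max_prob * min(1.0, (step - warmup_steps) / anneal_steps)


def drive_step(step: int, image: Any,
               trainer_predict: Callable[[Any], float],
               target_predict: Callable[[Any], float],
               buffer: ReplayBuffer, rng: random.Random,
               warmup_steps: int = 1000, anneal_steps: int = 5000,
               tolerance: float = 0.05) -> float:
    """Return the steering angle to send to the simulator for this frame."""
    trainer_angle = trainer_predict(image)
    if rng.random() >= target_drive_probability(step, warmup_steps, anneal_steps):
        buffer.add(image, trainer_angle)  # trainer drives; every frame becomes a training sample
        return trainer_angle
    target_angle = target_predict(image)
    if abs(target_angle - trainer_angle) > tolerance:
        buffer.add(image, trainer_angle)  # mistake: the trainer's correction is recorded
        return trainer_angle
    return target_angle                   # close enough: let the target model drive
```

Early on, every frame is labelled by the trainer. Later, only frames where the target disagrees with the trainer are added, so the buffer increasingly concentrates on the target model's mistakes.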

Below is an example of the output from the provided Jupyter Notebook: ./visualization/training_visualization.ipynb. You can view the complete process for generations 1 through 5, and the speed training, in the completed notebook: ./visualization/sample_data_visualization.ipynb

The following are video sessions of model generation training.

This video shows the training of a test generation.

This video shows a 40 mph model being trained from a 30 mph trainer model.