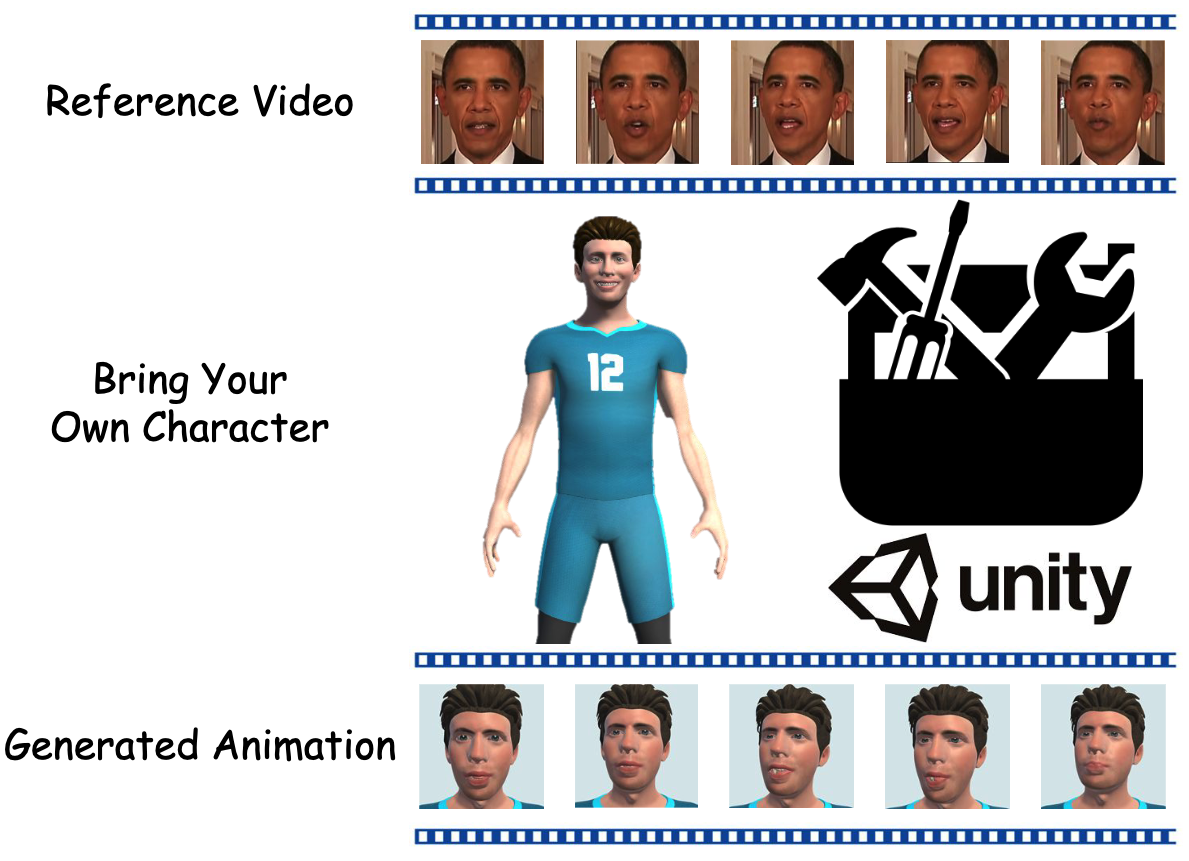

Bring Your Own Characters: A Holistic Solution for Automatic Facial Animation Generation of Customized Characters

This repository is the official implementation of Bring Your Own Characters (BYOC), published at IEEE-VR 2024.

- BYOC is a holistic solution for creating facial animations of virtual humans in VR applications. It makes two key contributions:

- (1) a deep learning model that transforms human facial images into the desired blendshape coefficients, which replicate the specified facial expression.

- (2) a Unity-based toolkit that encapsulates the deep learning model, allowing users to apply the trained model to create facial animations when developing their own VR applications.

- We also conducted a user study that collected feedback from a wide range of potential users. We hope its findings inspire future work in this direction.
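The model's output is a vector of blendshape coefficients; turning those coefficients into a deformed face is the job of the character rig. Assuming the common delta (linear) blendshape formulation, each vertex is its neutral position plus the weighted sum of per-blendshape offsets. The pure-Python sketch below illustrates that formula only; apply_blendshapes is a hypothetical helper, not code from this repository.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: Sequence[Vec3],
    deltas: Sequence[Sequence[Vec3]],
    weights: Sequence[float],
) -> List[Vec3]:
    """Return neutral[v] + sum_i weights[i] * deltas[i][v] for every vertex v.

    Hypothetical helper: deltas[i][v] is the offset of vertex v in
    blendshape i relative to the neutral face (delta formulation).
    """
    if len(deltas) != len(weights):
        raise ValueError("need exactly one weight per blendshape")
    result: List[Vec3] = []
    for v, (x, y, z) in enumerate(neutral):
        # Accumulate each blendshape's weighted offset for this vertex.
        for delta, w in zip(deltas, weights):
            dx, dy, dz = delta[v]
            x, y, z = x + w * dx, y + w * dy, z + w * dz
        result.append((x, y, z))
    return result
```

For example, a two-vertex mesh with weights [0.5, 0.25] moves the first vertex halfway along its first offset and the second vertex a quarter of the way along its second offset. Note that Unity's SkinnedMeshRenderer.SetBlendShapeWeight commonly works on a 0 to 100 scale, so a coefficient in [0, 1] would typically be multiplied by 100 there.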

Installation

git clone https://github.com/showlab/BYOC

cd BYOC

conda create -n byoc python=3.8

conda activate byoc

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

pip install -r requirements.txt
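After installing, one way to confirm that the pinned PyTorch packages landed in the byoc environment is to compare the installed distribution versions with the versions in the pip command. The sketch below uses the standard-library importlib.metadata (available in the Python 3.8 environment created above); check_versions is a hypothetical helper, not part of the repository. It compares only the public version (the part before "+"), so it does not distinguish the CUDA 11.3 build from a CPU build; torch.version.cuda reports that.

```python
from importlib import metadata
from typing import Callable, Dict

# Public versions pinned by the pip command above.
PINNED = {"torch": "1.12.1", "torchvision": "0.13.1", "torchaudio": "0.12.1"}


def check_versions(
    pinned: Dict[str, str] = PINNED,
    lookup: Callable[[str], str] = metadata.version,
) -> Dict[str, str]:
    """Return {package: problem} for every pinned package that is missing or mismatched."""
    problems: Dict[str, str] = {}
    for name, wanted in pinned.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        public = installed.split("+", 1)[0]  # "1.12.1+cu113" -> "1.12.1"
        if public != wanted:
            problems[name] = f"found {installed}, expected {wanted}"
    return problems


if __name__ == "__main__":
    issues = check_versions()
    print("environment OK" if not issues else issues)
```

The lookup parameter exists only so the check can be exercised without PyTorch installed; in normal use, call check_versions() with no arguments from inside the activated environment.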

Pre-trained Base Model

- Go to Google Drive to download the pre-trained model.

- Put the downloaded file at ./image2bs/landmark2exp/checkpoints/facerecon.

Dependent Data

- Go to Google Drive to download the essential files.

- Unzip them and put the BFM folder at ./image2bs/landmark2exp.
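Before moving on, it can help to confirm that both downloads ended up where the steps above expect them. The sketch below assumes only the two locations named above; since the file names inside them are not documented here, it merely checks that each directory exists and is non-empty. missing_or_empty is a hypothetical helper, not part of the repository.

```python
from pathlib import Path
from typing import Iterable, List

# Locations named in the setup steps above, relative to the repository root.
REQUIRED = [
    "image2bs/landmark2exp/checkpoints/facerecon",
    "image2bs/landmark2exp/BFM",
]


def missing_or_empty(repo_root: str, required: Iterable[str] = REQUIRED) -> List[str]:
    """Return the required directories that are absent or contain no files."""
    root = Path(repo_root)
    problems: List[str] = []
    for rel in required:
        path = root / rel
        # any() stops at the first entry, so large folders are not fully listed.
        if not path.is_dir() or not any(path.iterdir()):
            problems.append(rel)
    return problems
```

Running missing_or_empty(".") from the repository root should return an empty list once both downloads are in place.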

File Structure

- The training folder is a workspace for training the Adapter model on your customized dataset.

- The image2bs folder is an inference workspace for debugging the full inference pipeline (Base Model + Adapter Model) and testing the performance of your trained model.

- BlendshapeToolkit is a Unity project that implements the toolkit with a rich user interface.
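The Base Model + Adapter Model split can be pictured as two composed functions. Judging by the folder names, the base model (under landmark2exp) produces a facial-expression coefficient vector, and the adapter (under exp2bs) maps it to the blendshape coefficients of a particular character. The sketch below uses a toy linear adapter with clamping purely to illustrate that data flow; it is not the EXP2BSMIX regression model, and all names and sizes are hypothetical.

```python
from typing import Callable, List, Sequence

Vector = List[float]


def linear_adapter(
    weight: Sequence[Sequence[float]], bias: Sequence[float]
) -> Callable[[Sequence[float]], Vector]:
    """Build a toy adapter computing clamp(W @ exp + b, 0, 1), one row per blendshape."""
    def adapt(exp: Sequence[float]) -> Vector:
        out: Vector = []
        for row, b in zip(weight, bias):
            s = b + sum(w * e for w, e in zip(row, exp))
            out.append(min(max(s, 0.0), 1.0))  # keep each coefficient in [0, 1]
        return out
    return adapt


def pipeline(
    base: Callable[[object], Sequence[float]],
    adapter: Callable[[Sequence[float]], Vector],
    image: object,
) -> Vector:
    """Base model (image -> expression coefficients), then adapter (-> blendshape coefficients)."""
    return adapter(base(image))
```

The point of the split is that only the small adapter depends on a particular character's blendshape set, which is why the training workspace trains the Adapter model while the pre-trained Base Model is downloaded as-is.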

Training Dataset

Option 1: Use the training dataset we provide

- Go to Google Drive to download the training dataset.

- Put blendshape_gt.json at ./training/Deep3DFaceRecon_pytorch/datasets.

- Put test_images at ./training/Deep3DFaceRecon_pytorch/checkpoints/facerecon/results/.
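The two placement steps of Option 1 can be scripted. The sketch below assumes, hypothetically, that the unzipped download contains blendshape_gt.json and a test_images folder side by side; stage_provided_dataset is an illustrative helper, not part of the repository. The target paths are the ones listed above.

```python
import shutil
from pathlib import Path


def stage_provided_dataset(download_dir: str, repo_root: str) -> None:
    """Copy blendshape_gt.json and test_images/ from download_dir into the training workspace.

    Assumes (hypothetically) that download_dir holds blendshape_gt.json and a
    test_images/ folder next to each other.
    """
    src = Path(download_dir)
    train = Path(repo_root) / "training" / "Deep3DFaceRecon_pytorch"
    datasets = train / "datasets"
    results = train / "checkpoints" / "facerecon" / "results"
    datasets.mkdir(parents=True, exist_ok=True)
    results.mkdir(parents=True, exist_ok=True)
    shutil.copy2(src / "blendshape_gt.json", datasets / "blendshape_gt.json")
    # dirs_exist_ok needs Python 3.8+, which matches the conda env created above.
    shutil.copytree(src / "test_images", results / "test_images", dirs_exist_ok=True)
```

Copying rather than moving keeps the original download intact, so the staging can be repeated after cleaning the workspace.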

Option 2: Prepare the training dataset yourself

- Run the script ./training/construct_dataset/random_blendshape_weights.py to generate a customized dataset.

- Import the generated ./training/construct_dataset/blendshape_gt/xxxx.csv into MAYA Software to generate the training images.

- Put the generated training images into ./image2bs/test_images and run the script ./image2bs/process_training_dataset.py.

- Put ./training/construct_dataset/blendshape_gt.json into ./training/Deep3DFaceRecon_pytorch/datasets.

- Put ./image2bs/landmark2exp/checkpoints/facerecon/results/test_images into ./training/Deep3DFaceRecon_pytorch/checkpoints/facerecon/results/.
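The first step of Option 2 generates random blendshape weights that serve as ground truth for the rendered images. The exact format written by random_blendshape_weights.py is not documented here, so the sketch below only illustrates the idea under stated assumptions: one CSV row per sample, one column per blendshape, weights drawn uniformly from [0, 1]. The function names, the "frame" column, and the blendshape names are hypothetical.

```python
import csv
import io
import random
from typing import List, Sequence, TextIO


def random_blendshape_rows(names: Sequence[str], n_samples: int, seed: int = 0) -> List[List[float]]:
    """Draw n_samples weight vectors with each weight uniform in [0, 1]."""
    rng = random.Random(seed)  # seeded so the generated dataset is reproducible
    return [[round(rng.random(), 4) for _ in names] for _ in range(n_samples)]


def write_csv(names: Sequence[str], rows: List[List[float]], stream: TextIO) -> None:
    """Write a header of blendshape names, then one row per sample."""
    writer = csv.writer(stream)
    writer.writerow(["frame", *names])
    for i, row in enumerate(rows):
        writer.writerow([i, *row])


if __name__ == "__main__":
    names = ["jawOpen", "mouthSmile_L"]  # hypothetical blendshape names
    buf = io.StringIO()
    write_csv(names, random_blendshape_rows(names, 3), buf)
    print(buf.getvalue())
```

Uniform sampling covers the weight space evenly; a real generator might instead activate only a few blendshapes per sample so that the rendered expressions look plausible.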

Training

- Run bash train.sh to train the model.

- Run python -m visdom.server -port 8097 to visualize the training progress.

Inference

- Put the pretrained model latest_net_V.pth into ./image2bs/exp2bs/checkpoint/EXP2BSMIX/Exp2BlendshapeMixRegress.

- Put your video into ./image2bs/test_video, or put images into ./image2bs/test_images.

- Run the script inference.py to run inference only.
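The three inference steps can be wrapped with a pre-flight check, so that a misplaced checkpoint or an empty input folder is reported before inference.py starts. The sketch below assumes that inference.py is run from inside the image2bs folder with no arguments; run_inference is a hypothetical helper, and the checkpoint and input paths are the ones listed above.

```python
import subprocess
import sys
from pathlib import Path

# Checkpoint location from the first inference step, relative to image2bs.
CHECKPOINT = Path("exp2bs/checkpoint/EXP2BSMIX/Exp2BlendshapeMixRegress/latest_net_V.pth")


def run_inference(image2bs_dir: str) -> int:
    """Check the adapter checkpoint and inputs, then run inference.py inside image2bs_dir."""
    root = Path(image2bs_dir)
    if not (root / CHECKPOINT).is_file():
        raise FileNotFoundError(f"missing {CHECKPOINT}")
    # Gather anything placed in test_video/ or test_images/.
    inputs = [
        p
        for d in ("test_video", "test_images")
        if (root / d).is_dir()
        for p in (root / d).iterdir()
    ]
    if not inputs:
        raise FileNotFoundError("put a video in test_video/ or images in test_images/")
    # sys.executable is the interpreter running this sketch, e.g. the byoc env's Python.
    return subprocess.run([sys.executable, "inference.py"], cwd=root).returncode
```

Using sys.executable rather than a bare "python" avoids accidentally picking up a different interpreter from PATH.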

Unity Toolkit

- In image2bs/inference.bat: (1) change e: on the second line to the drive letter where image2bs is located, such as d:; (2) change the path following cd on the third line to the absolute path of the image2bs folder; (3) change PYTHON_PATH to the absolute path of the folder containing the Python interpreter used for inference; (4) change INF_PATH to the absolute path of the image2bs folder.

- Open BlendshapeToolkit in Unity and run the project.

- Click the Initialize button to call the image2bs project and generate the animation. For more details on using the toolkit, please refer to our paper.
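The four edits to inference.bat can also be applied by a script. The exact contents of inference.bat are not shown here, so the sketch below rests on a hypothetical layout: line 2 holds only the drive letter, line 3 is a cd command, and the two variables are assigned as set NAME=value. configure_inference_bat is an illustrative helper, not part of the repository; it uses ntpath so that Windows paths are parsed correctly even when the script runs elsewhere.

```python
import ntpath
import re
from typing import List


def configure_inference_bat(text: str, image2bs_dir: str, python_dir: str) -> str:
    """Apply edits (1)-(4) above to the text of inference.bat (hypothetical layout)."""
    drive, _ = ntpath.splitdrive(image2bs_dir)  # "D:\\work\\image2bs" -> "D:"
    lines: List[str] = text.splitlines()
    lines[1] = drive.lower()             # (1) drive letter on line 2, e.g. "d:"
    lines[2] = f"cd {image2bs_dir}"      # (2) cd into image2bs on line 3
    for i, line in enumerate(lines):
        if re.match(r"(?i)\s*set\s+PYTHON_PATH=", line):
            lines[i] = f"set PYTHON_PATH={python_dir}"   # (3) interpreter folder
        elif re.match(r"(?i)\s*set\s+INF_PATH=", line):
            lines[i] = f"set INF_PATH={image2bs_dir}"    # (4) image2bs folder
    # Batch files conventionally use CRLF line endings.
    return "\r\n".join(lines) + "\r\n"
```

On Windows, running os.path.dirname(sys.executable) inside the activated byoc environment gives a suitable value for python_dir. Check the result against the actual file before saving it, since the layout assumed here may differ from the shipped script.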

We are grateful for the following awesome projects: