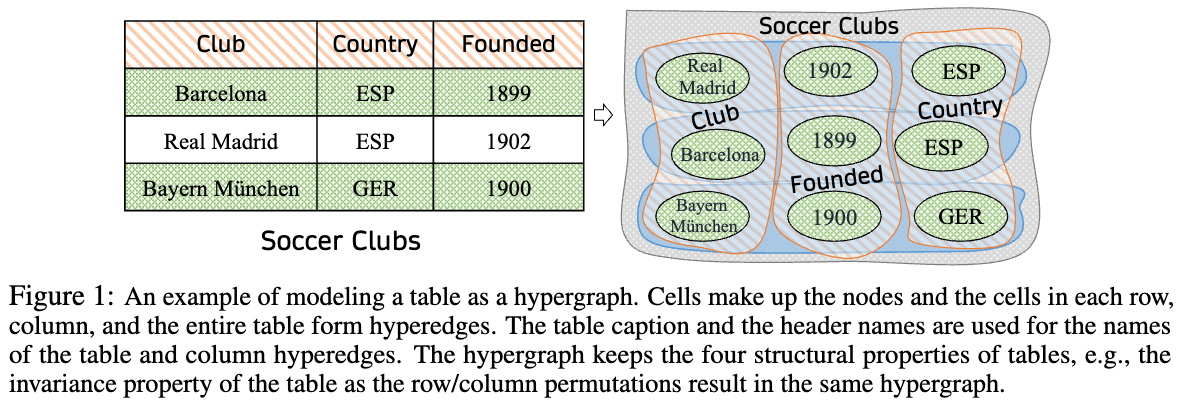

A hypergraph-based tabular language model.

This repository contains the official implementation of the paper "HyTrel: Hypergraph-enhanced Tabular Data Representation Learning", including code, data, and checkpoints.

Python 3.9 is recommended.

Here is an example of creating the environment using Anaconda.

- Create the virtual environment using `conda create -n hytrel python=3.9`

- Install the required packages with the corresponding versions from `requirements.txt`

Note: If you encounter difficulty installing `torch_geometric`, please refer to its installation instructions and install it according to your environment settings.
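After installation, a quick sanity check of the environment can save a failed run later. The snippet below is a minimal sketch, not part of this repository: the function name `check_environment` is hypothetical, and the default package list (`torch`, `torch_geometric`) is an assumption that should be adjusted to match `requirements.txt`.

```python
import importlib.util
import sys


def check_environment(required=("torch", "torch_geometric"), python=(3, 9)):
    """Return a list of problems found; an empty list means all checks passed.

    `required` holds top-level package names (an assumed list, adjust as needed).
    `python` is the recommended (major, minor) interpreter version.
    """
    problems = []
    found = tuple(sys.version_info[:2])
    if found != tuple(python):
        problems.append(
            f"Python {python[0]}.{python[1]} is recommended, found {found[0]}.{found[1]}"
        )
    for name in required:
        # find_spec returns None when a top-level package cannot be located.
        if importlib.util.find_spec(name) is None:
            problems.append(f"package not importable: {name}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print(problem)
```

Running the file inside the activated `hytrel` environment prints one line per problem and nothing when the checks pass.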

- Pre-process the raw data by slicing the big file into chunks, and put the `*.jsonl` files into the directory `/data/pretrain/chunks/`. Sample data is present here and the files can be used as reference.

  Note: The pretraining `*.jsonl` data are acquired and preprocessed using the scripts from TaBERT.

- Run `python parallel_clean.py` to clean and serialize the tables.

  Note: We serialize the tables as arrow in consideration of memory usage.

- Run `sh pretrain_electra.sh` to pretrain HyTrel with the ELECTRA objective.

- Run `sh pretrain_contrast.sh` to pretrain HyTrel with the Contrastive objective.
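The chunking step above can be sketched as follows. This is an illustrative helper, not the TaBERT preprocessing script: the function name `split_jsonl`, the chunk file naming (`chunk_00000.jsonl`, ...), and the default chunk size are all assumptions. It only assumes that the raw file holds one JSON object per line.

```python
from pathlib import Path


def split_jsonl(src, out_dir, lines_per_chunk=10000):
    """Split a JSON Lines file into numbered chunk files and return their paths.

    Blank lines are skipped. File names and the chunk size are illustrative.
    """
    if lines_per_chunk < 1:
        raise ValueError("lines_per_chunk must be at least 1")
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)

    written = []   # paths of the chunk files created so far
    chunk = None   # currently open output file
    count = 0      # number of non-blank records written so far
    try:
        with open(src, "r", encoding="utf-8") as reader:
            for line in reader:
                if not line.strip():
                    continue
                if count % lines_per_chunk == 0:
                    # Start a new chunk file every `lines_per_chunk` records.
                    if chunk is not None:
                        chunk.close()
                    path = out_dir / f"chunk_{len(written):05d}.jsonl"
                    chunk = open(path, "w", encoding="utf-8")
                    written.append(path)
                chunk.write(line if line.endswith("\n") else line + "\n")
                count += 1
    finally:
        if chunk is not None:
            chunk.close()
    return written
```

For example, `split_jsonl("raw_tables.jsonl", "data/pretrain/chunks")` would write the chunks where `parallel_clean.py` is described as reading them; the input file name here is hypothetical.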

First, put the ELECTRA-pretrained checkpoint in `/checkpoints/electra/` and the Contrast-pretrained checkpoint in `/checkpoints/contrast/`.

- Put the data `{train, dev, test}.table_col_type.json` and `type_vocab.txt` into the directory `/data/col_ann/`.

- Run `sh evaluate_cta_electra.sh` with the ELECTRA-pretrained checkpoint.

- Run `sh evaluate_cta_contrast.sh` with the Contrast-pretrained checkpoint.

- Put the data `{train, dev, test}.table_rel_extraction.json` and `relation_vocab.txt` into the directory `/data/col_rel/`.

- Run `sh evaluate_cpa_electra.sh` with the ELECTRA-pretrained checkpoint.

- Run `sh evaluate_cpa_contrast.sh` with the Contrast-pretrained checkpoint.

- Extract `ttd.tar.gz` into `train`, `dev`, and `test` data folders under the directory `/data/ttd/`.

- Run `sh evaluate_ttd_electra.sh` with the ELECTRA-pretrained checkpoint.

- Run `sh evaluate_ttd_contrast.sh` with the Contrast-pretrained checkpoint.
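Before launching the evaluation scripts, it may help to confirm that the checkpoints and data sit where the steps above expect them. The following is a minimal sketch under one assumption: the leading `/` in paths such as `/data/col_ann/` is read as relative to the repository root. The helper names `expected_paths` and `missing_paths` are hypothetical and not part of this repository.

```python
from pathlib import Path

SPLITS = ("train", "dev", "test")


def expected_paths(root="."):
    """List the files and folders named in the fine-tuning steps, under `root`."""
    root = Path(root)
    paths = [root / "checkpoints" / "electra", root / "checkpoints" / "contrast"]
    # Column type annotation data
    paths += [root / "data" / "col_ann" / f"{s}.table_col_type.json" for s in SPLITS]
    paths.append(root / "data" / "col_ann" / "type_vocab.txt")
    # Column property annotation data
    paths += [root / "data" / "col_rel" / f"{s}.table_rel_extraction.json" for s in SPLITS]
    paths.append(root / "data" / "col_rel" / "relation_vocab.txt")
    # Folders extracted from ttd.tar.gz
    paths += [root / "data" / "ttd" / s for s in SPLITS]
    return paths


def missing_paths(root="."):
    """Return the expected paths that do not exist under `root`."""
    return [p for p in expected_paths(root) if not p.exists()]


if __name__ == "__main__":
    for path in missing_paths("."):
        print(f"missing: {path}")
```

Run from the repository root, the script prints one line per missing file or folder and nothing when the layout is complete. It checks only that paths exist, not that their contents are valid.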

Please cite our paper.

@article{chen2023hytrel,
  archiveprefix = {arXiv},
  author = {Pei Chen and Soumajyoti Sarkar and Leonard Lausen and Balasubramaniam Srinivasan and Sheng Zha and Ruihong Huang and George Karypis},
  eprint = {2307.08623},
  primaryclass = {cs.LG},
  title = {HYTREL: Hypergraph-enhanced Tabular Data Representation Learning},
  year = {2023}
}

For questions on details regarding the implementation, please email: chen.pei518@163.com (Pei Chen)