Shiyi Zhang*, Sule Bai*, Guangyi Chen, Lei Chen, Jiwen Lu, Junle Wang, Yansong Tang†

This repository contains the PyTorch implementation for the paper "Narrative Action Evaluation with Prompt-Guided Multimodal Interaction" (CVPR 2024).

- Train/Evaluation Code

- Dataset preparation and installation guidance

- Pre-trained weights

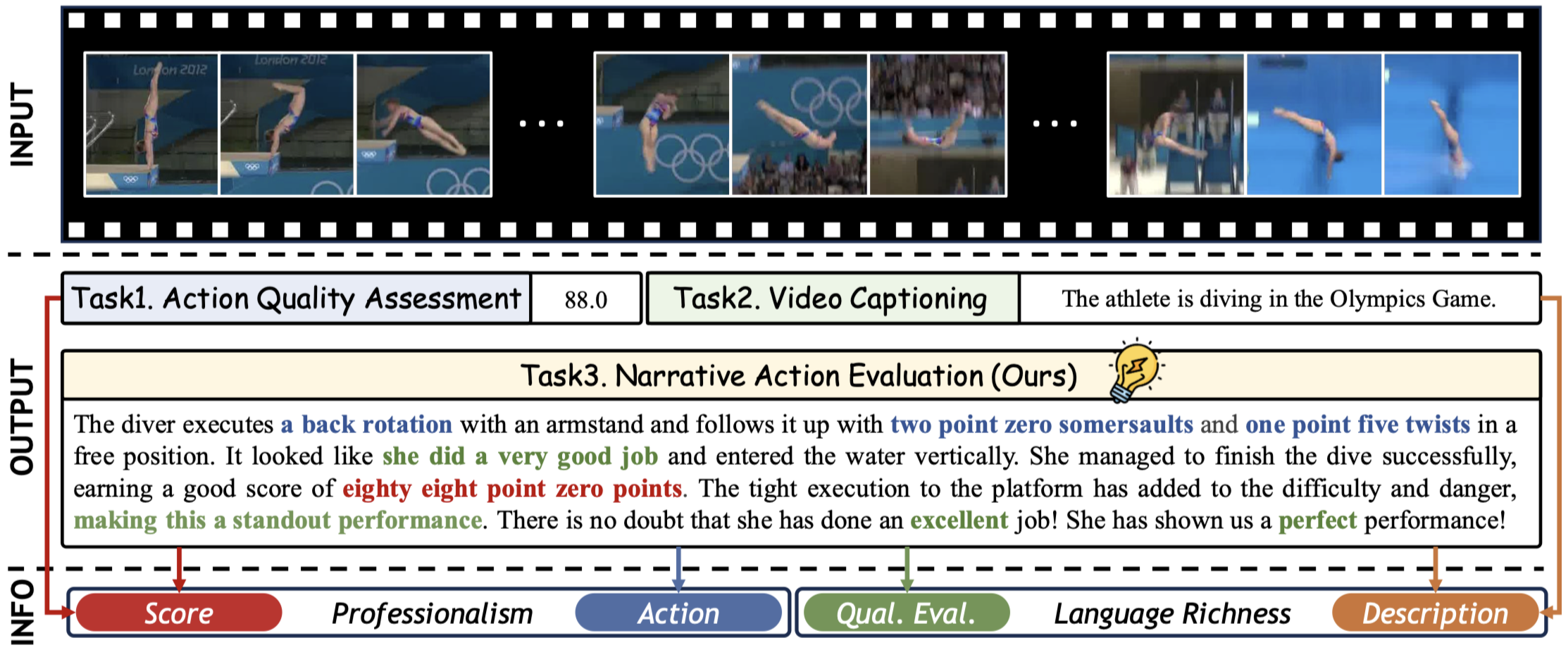

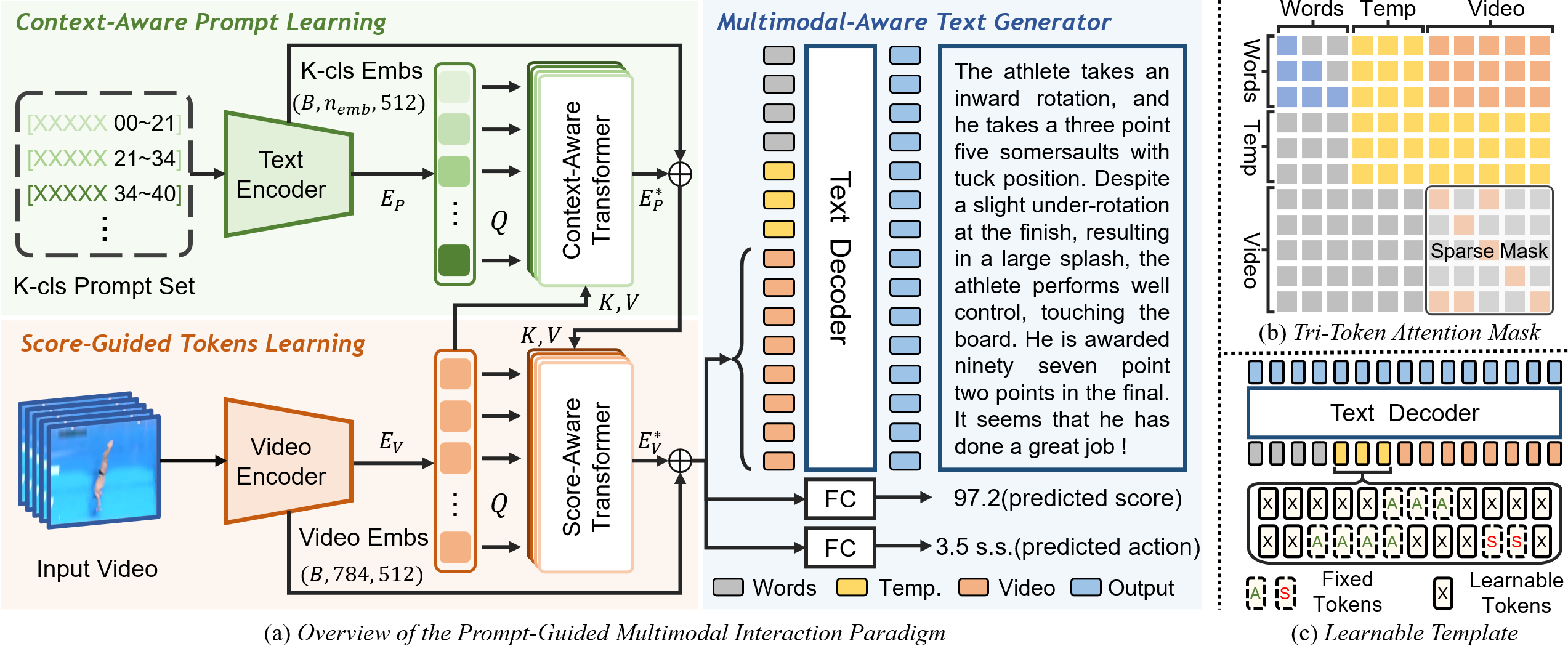

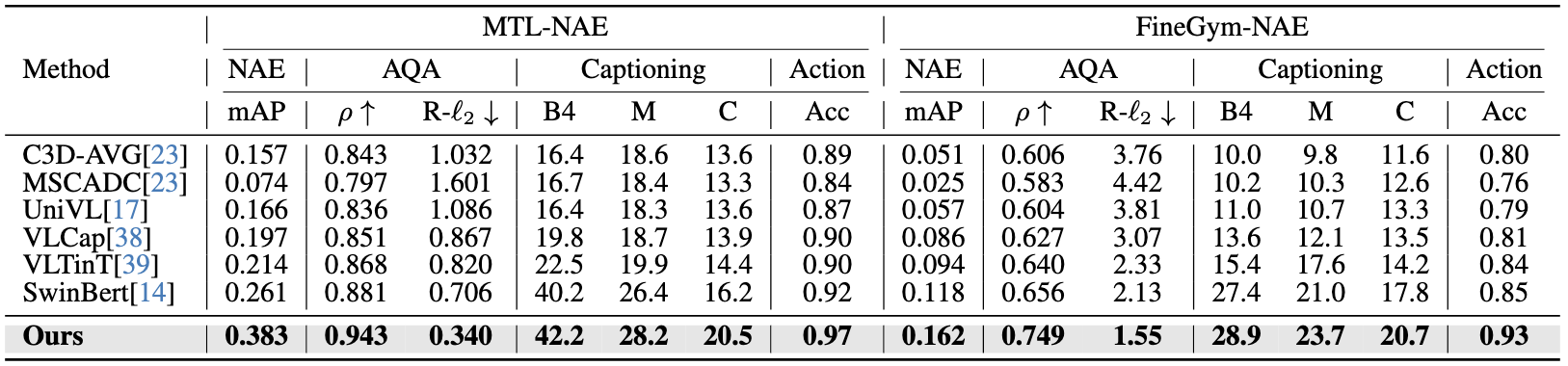

In this paper, we investigate a new problem called Narrative Action Evaluation (NAE). NAE aims to generate professional commentary that evaluates the execution of an action. Unlike traditional tasks such as score-based action quality assessment and video captioning, which involve superficial sentences, NAE focuses on creating detailed narratives in natural language. These narratives provide intricate descriptions of actions along with objective evaluations. NAE is a more challenging task because it requires both narrative flexibility and evaluation rigor. One possible existing solution is multi-task learning, where narrative language and evaluative information are predicted separately. However, this approach reduces performance on the individual tasks because of variations between tasks and differences in modality between language information and evaluation information. To address this, we propose a prompt-guided multimodal interaction framework. This framework uses a pair of transformers to facilitate the interaction between different modalities of information. It also uses prompts to transform the score regression task into a video-text matching task, thus enabling task interactivity. To support further research in this field, we re-annotate the MTL-AQA and FineGym datasets with high-quality and comprehensive action narration. Additionally, we establish benchmarks for NAE. Extensive experimental results show that our method outperforms separate learning methods and naive multi-task learning methods.
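The idea of recasting score regression as video-text matching can be illustrated with a minimal sketch. This is not the repository's implementation: the function names, the grade prompts, the temperature value, and the expected-value readout are all assumptions made for illustration, and the toy 2-D vectors stand in for real video and text encoder outputs.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def score_by_matching(video_emb, prompt_embs, grade_scores, temperature=0.07):
    """Hypothetical readout: match a video embedding against one text
    embedding per score-grade prompt, then take the expected score under
    the resulting matching distribution (an assumption, not the paper's
    confirmed formulation)."""
    sims = [cosine(video_emb, p) / temperature for p in prompt_embs]
    probs = softmax(sims)
    expected = sum(p * s for p, s in zip(probs, grade_scores))
    return expected, probs


# Toy stand-ins for encoder outputs (hypothetical values).
video_emb = [1.0, 0.0]
prompt_embs = [[1.0, 0.0], [0.0, 1.0]]  # e.g. "excellent" vs. "poor" prompts
grade_scores = [90.0, 30.0]             # representative score per grade

expected, probs = score_by_matching(video_emb, prompt_embs, grade_scores)
print(f"predicted score: {expected:.2f}")
```

Because the score is read off from similarities between video and text embeddings, the scoring branch and the narration branch can share the same text space, which is one plausible reading of the "task interactivity" described above.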

In comparison to Action Quality Assessment, NAE provides rich language

Overview of our Prompt-Guided Multimodal Interaction paradigm.

Comparison with previous video captioning methods on two benchmarks for NAE task.

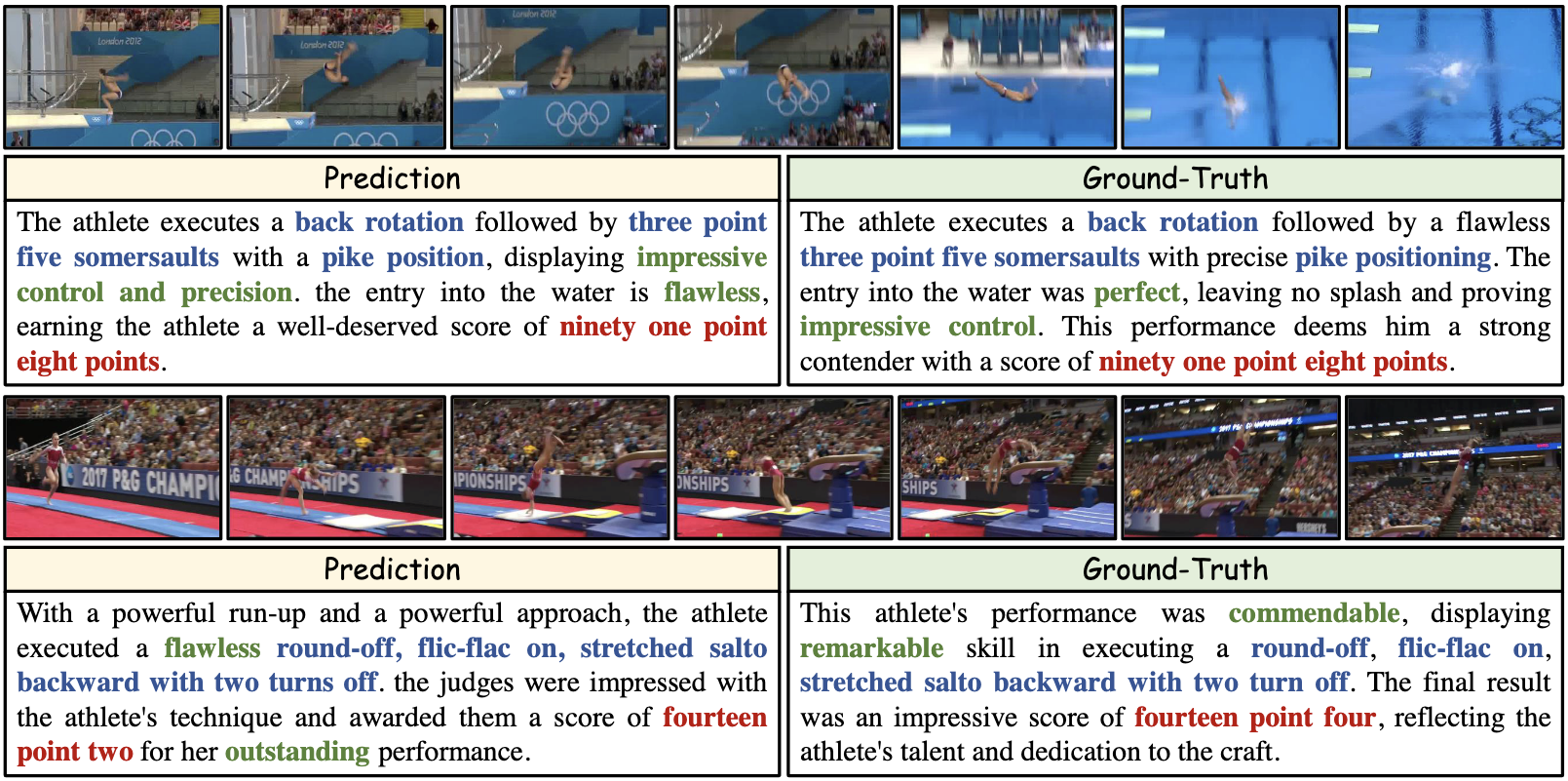

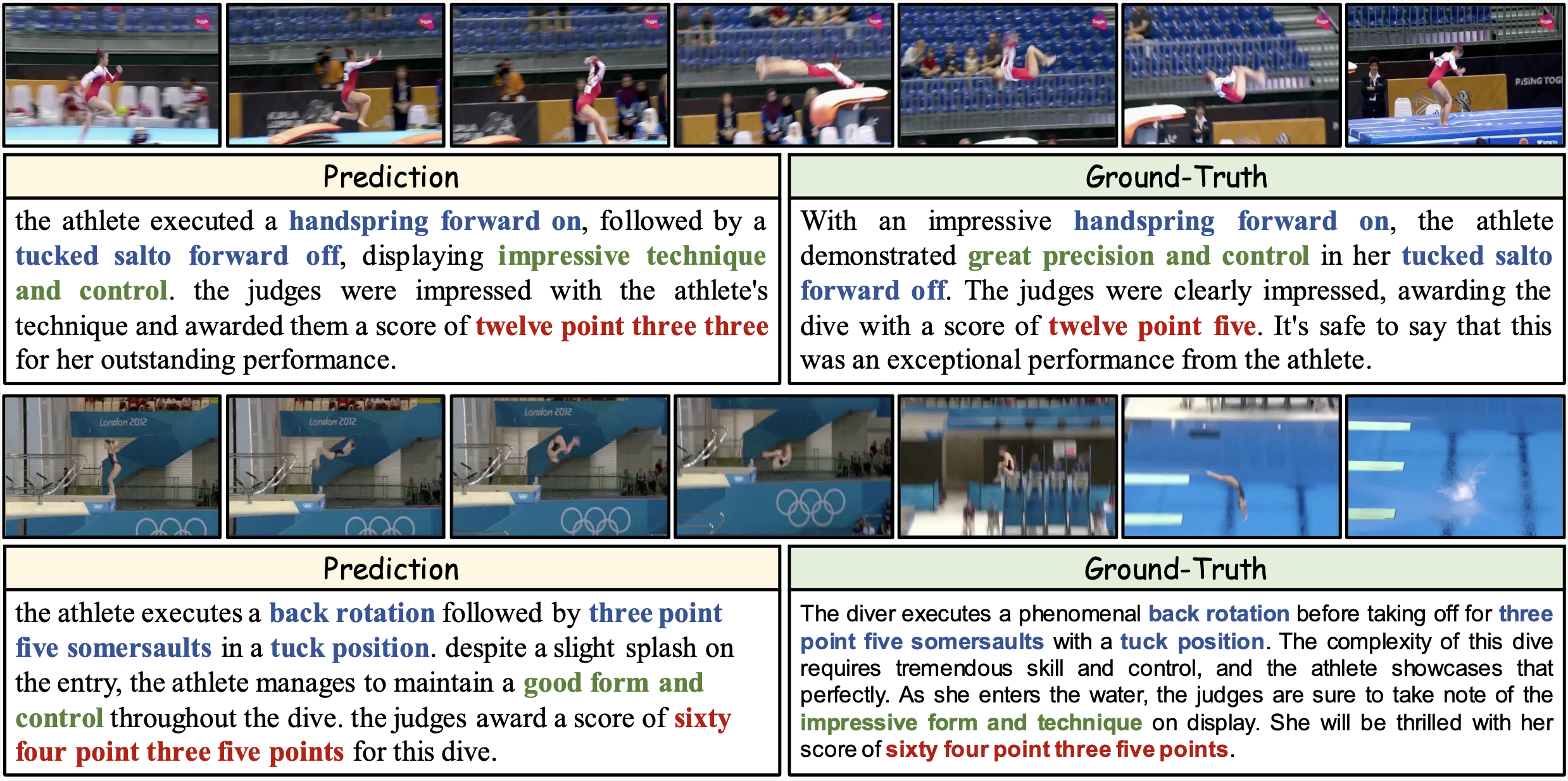

Qualitative results. Our model can generate detailed narrations including

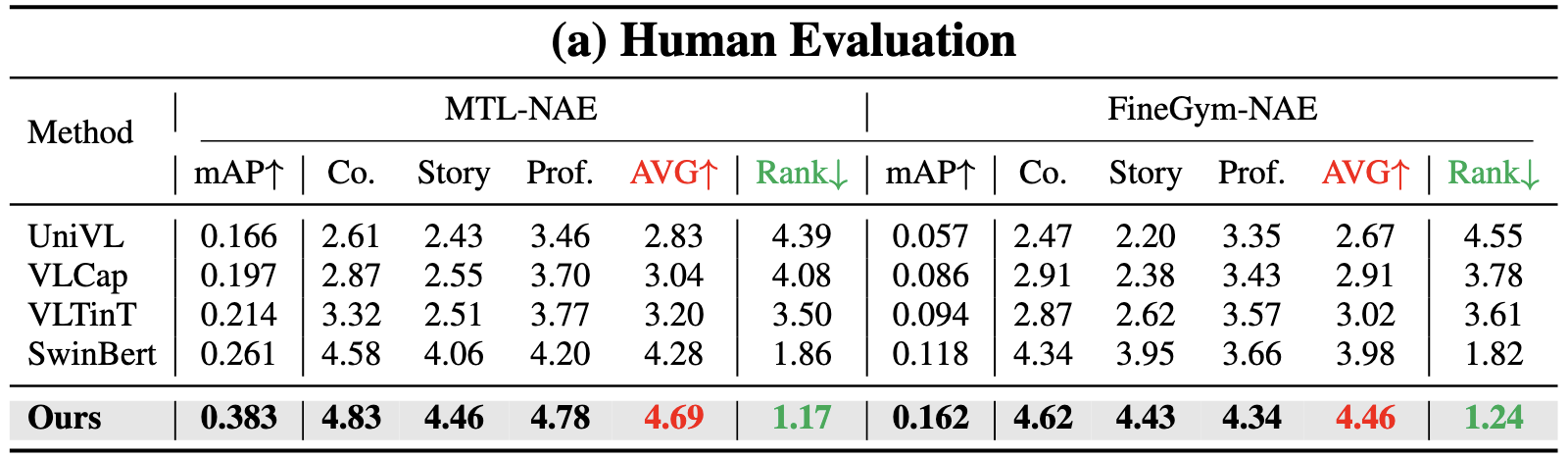

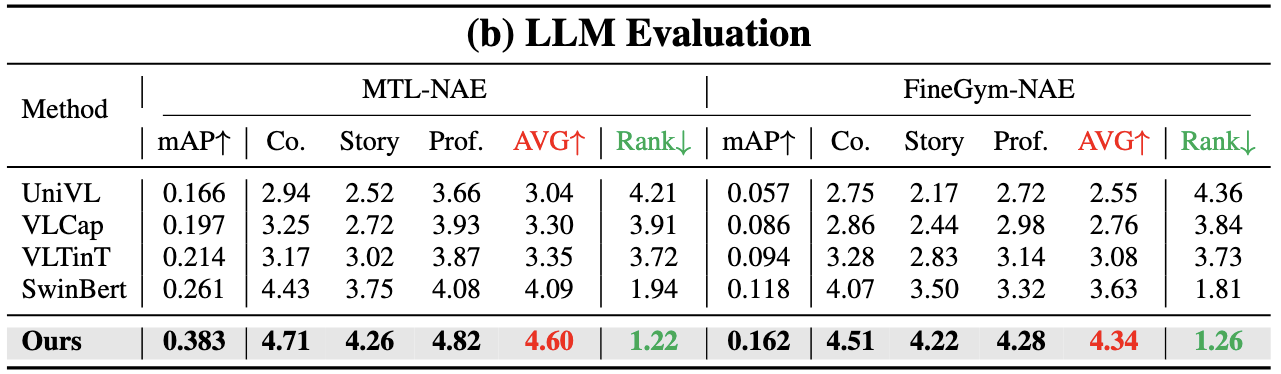

(a) Human Evaluation. (b) LLM Evaluation. We use both a 5-point Likert scale and a rank-based method. For the 5-point Likert scale, we consider three criteria for each text: Coherence (Co.), Storytelling (Story), and Professionalism (Pro.). Each criterion is rated from 1 to 5 by humans or an LLM, and the average score (AVG) is then calculated. For the rank-based method, for each video we present the texts generated by all methods to humans or an LLM and ask them to rank the quality of these texts (from 1 to 5). We then obtain each method's average ranking (Rank).
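The two aggregation schemes described above can be sketched as follows. The helper names and the toy ratings are hypothetical, only two methods are shown instead of five for brevity, and treating AVG as the unweighted mean of the three per-criterion means is an assumption.

```python
from statistics import mean

CRITERIA = ("Co.", "Story", "Pro.")


def likert_summary(ratings):
    """ratings maps each criterion to a list of 1-5 ratings for one method.
    Returns the per-criterion means plus their unweighted average (AVG)."""
    summary = {c: mean(ratings[c]) for c in CRITERIA}
    summary["AVG"] = mean(summary[c] for c in CRITERIA)
    return summary


def average_rank(per_video_ranks):
    """per_video_ranks is a list with one dict per video, mapping
    method name -> rank (1 is best). Returns the mean rank per method."""
    methods = per_video_ranks[0].keys()
    return {m: mean(r[m] for r in per_video_ranks) for m in methods}


# Toy, made-up ratings purely for illustration (not results from the paper).
toy_ratings = {"Co.": [4, 5], "Story": [3, 4], "Pro.": [4, 4]}
toy_ranks = [
    {"method_a": 1, "method_b": 2},
    {"method_a": 2, "method_b": 1},
]

print(likert_summary(toy_ratings))
print(average_rank(toy_ranks))
```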

We would like to thank the authors of the following repositories for their excellent work: SwinBERT, MUSDL, DenseCLIP, CLIP.

E-mail: sy-zhang23@mails.tsinghua.edu.cn / bsl23@mails.tsinghua.edu.cn

WeChat: ZSYi-408