Code and data are described in the paper:

Xinyi Chen, Raquel Fernández and Sandro Pezzelle. The BLA Benchmark: Investigating Basic Language Abilities of Pre-Trained Multimodal Models. In the Proceedings of EMNLP 2023 (Singapore, 6-10 December 2023).

We provide the benchmark dataset and code for reproducing our results.

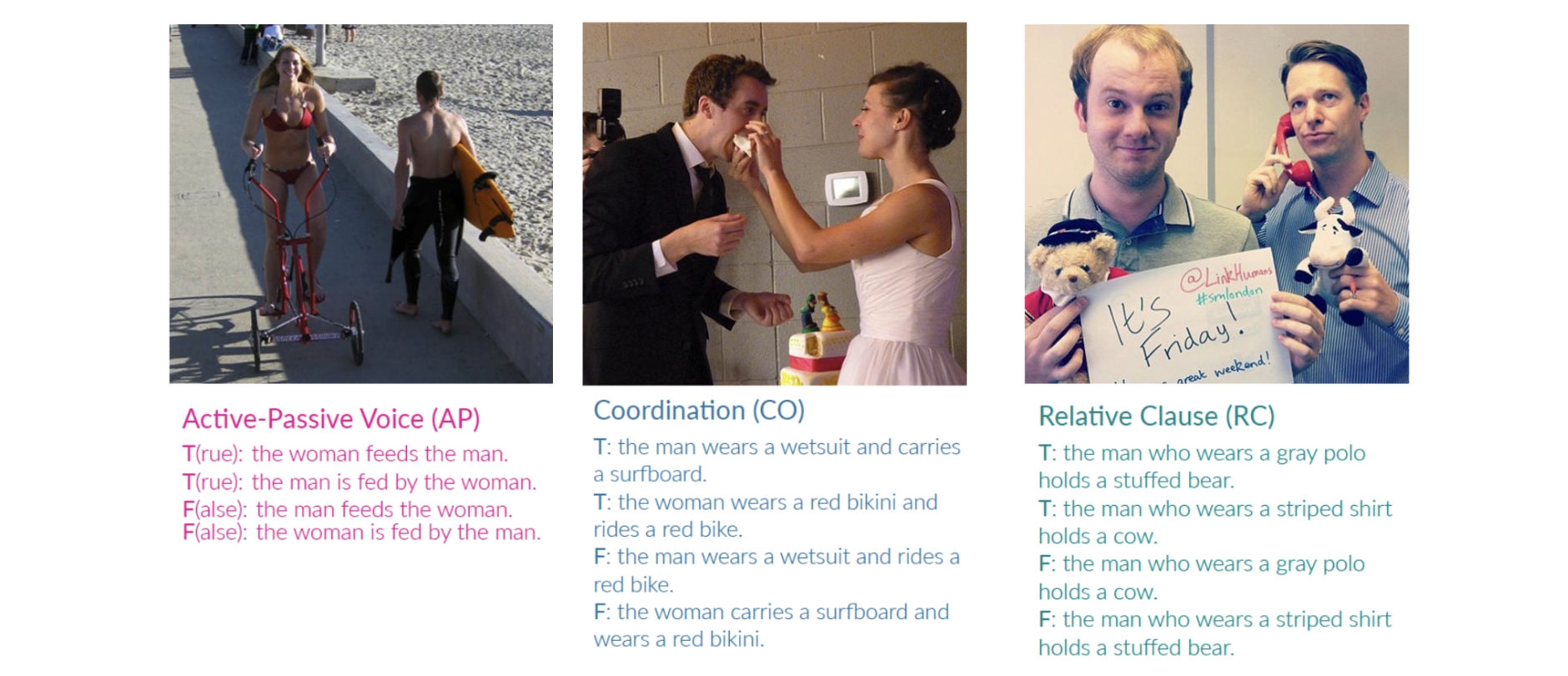

BLA is a novel, automatically constructed benchmark for evaluating multimodal models on Basic Language Abilities. In the BLA tasks, we explore to what extent the models handle basic linguistic constructions (active-passive voice, coordination, and relative clauses) that even preschool children can typically master. The benchmark dataset can be downloaded here. See dataset/demo for demo examples of the benchmark tasks.

We investigate the following pretrained multimodal models in our experiments and provide links for downloading their checkpoints: ViLBERT (CTRL), LXMERT (CTRL), CLIP (ViT-B-32), BLIP2 (FlanT5 XXL), OpenFlamingo (RedPajama-INCITE-Instruct-3B-v1), MAGMA, FROMAGe.

Note: Our preliminary findings suggest that MAGMA and FROMAGe may generate the expected outputs ("yes" or "no") in only about 80% of cases.
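
Because some generated answers may be neither "yes" nor "no", it can help to report the share of valid answers separately from the accuracy on those answers. The following is a minimal sketch of such a post-processing step, not the scoring used in the paper: the function names `parse_yes_no` and `score`, and the rule that an answer counts only if it starts with "yes" or "no", are assumptions made for illustration.

```python
import re
from typing import List, Optional, Tuple


def parse_yes_no(generated: str) -> Optional[str]:
    """Return 'yes' or 'no' if the text starts with that word, else None.

    Hypothetical rule: leading whitespace and letter case are ignored.
    """
    match = re.match(r"\s*(yes|no)\b", generated, flags=re.IGNORECASE)
    return match.group(1).lower() if match else None


def score(outputs: List[str], gold_labels: List[str]) -> Tuple[float, float]:
    """Return (share of valid answers, accuracy on the valid answers only)."""
    parsed = [parse_yes_no(text) for text in outputs]
    # Keep only the items whose output could be mapped to 'yes' or 'no'.
    valid = [(p, g) for p, g in zip(parsed, gold_labels) if p is not None]
    valid_rate = len(valid) / len(outputs) if outputs else 0.0
    accuracy = sum(p == g for p, g in valid) / len(valid) if valid else 0.0
    return valid_rate, accuracy
```

Reporting both numbers keeps a model that often produces off-format text from looking better or worse than it is on the items it actually answered.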

We provide scripts to perform zero-shot evaluation and task-specific learning (i.e., fine-tuning or in-context learning). See the volta-bla repository for how to evaluate and fine-tune ViLBERT and LXMERT.

To run a model on the BLA benchmark, please follow the steps described on the original model page to set up the environment. We suggest creating a separate Conda environment for each model.

Run CLIP on BLA Active-Passive task in a zero-shot setting

cd evaluation_models/clip

python test_clip_bla.py --annotation_dir dataset/BLA_Benchmark/annotations --img_dir dataset/BLA_Benchmark/images --phenomenon ap
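
To evaluate CLIP on all three tasks in one go, the command above can be wrapped in a small driver. This is a sketch under assumptions: it presumes that `test_clip_bla.py` accepts the same phenomenon codes that appear in the BLIP2 commands of this README (`ap`, `co`, `rc`), and the helper names `build_clip_command` and `run_all` are hypothetical. It defaults to a dry run that only prints the commands.

```python
import subprocess
import sys
from typing import List

# Codes taken from the commands in this README; assumed to be valid for CLIP too.
PHENOMENA = ["ap", "co", "rc"]


def build_clip_command(
    phenomenon: str,
    annotation_dir: str = "dataset/BLA_Benchmark/annotations",
    img_dir: str = "dataset/BLA_Benchmark/images",
) -> List[str]:
    """Build the argument list for one zero-shot CLIP run."""
    return [
        sys.executable, "test_clip_bla.py",
        "--annotation_dir", annotation_dir,
        "--img_dir", img_dir,
        "--phenomenon", phenomenon,
    ]


def run_all(dry_run: bool = True) -> List[List[str]]:
    """Print (dry run) or execute the CLIP command for every phenomenon."""
    commands = [build_clip_command(p) for p in PHENOMENA]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first failing run.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_all(dry_run=True)
```

Run it from evaluation_models/clip, and call `run_all(dry_run=False)` once the printed commands look right.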

Run BLIP2 on BLA Active-Passive task in a zero-shot setting

cd evaluation_models/blip2

python test_blip2_bla.py --annotation_dir dataset/BLA_Benchmark/annotations --img_dir dataset/BLA_Benchmark/images --phenomenon ap -dataset_type whole

Run BLIP2 on BLA Active-Passive task using in-context learning

cd evaluation_models/blip2

python test_blip2_bla.py --annotation_dir dataset/BLA_Benchmark/annotations --img_dir dataset/BLA_Benchmark/images --phenomenon ap -dataset_type test --in_context_learning

Run BLIP2 on BLA Coordination task using in-context learning with cross dataset examples from Relative Clause

cd evaluation_models/blip2

python test_blip2_bla.py --annotation_dir dataset/BLA_Benchmark/annotations --img_dir dataset/BLA_Benchmark/images --phenomenon co -dataset_type test --in_context_learning --cross_dataset_example --example_task rc

Run OpenFlamingo on BLA Active-Passive task in a zero-shot setting

cd evaluation_models/openflamingo

python test_oflamingo_bla.py --annotation_dir dataset/BLA_Benchmark/annotations --img_dir dataset/BLA_Benchmark/images --phenomenon ap -dataset_type whole

See test_fromage_bla.py and test_magma_bla.py for example code for running FROMAGe and MAGMA.

This work is licensed under the MIT license. See LICENSE for details.

Third-party software and data sets are subject to their respective licenses.

If you find our code/data/models or ideas useful in your research, please consider citing the paper:

@inproceedings{chen2023bla,
  title={The BLA Benchmark: Investigating Basic Language Abilities of Pre-Trained Multimodal Models},
  author={Chen, Xinyi and Fern{\'a}ndez, Raquel and Pezzelle, Sandro},
  booktitle={Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing},
  pages={5817--5830},
  year={2023}
}

For questions, comments, or concerns, reach out to: x dot chen2 at uva dot nl