
This is an addition to the official implementation of the paper:

Towards Robust and Adaptive Motion Forecasting: A Causal Representation Perspective

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

Yuejiang Liu, Riccardo Cadei, Jonas Schweizer, Sherwin Bahmani, Alexandre Alahi

École Polytechnique Fédérale de Lausanne (EPFL)

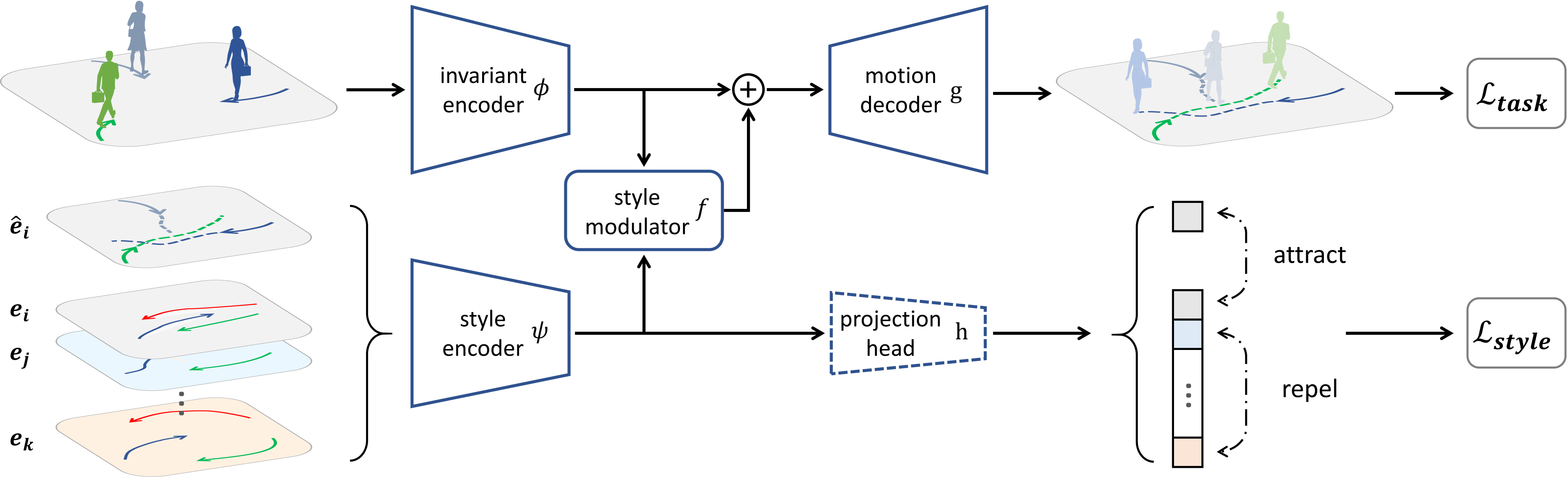

TL;DR: incorporate causal invariance and structure into the design and training of motion forecasting models to improve the robustness and reusability of the learned representations under common distribution shifts

- causal formalism of motion forecasting with three groups of latent variables

- causal (invariant) representations to suppress spurious features and promote robust generalization

- causal (modular) structure to approximate a sparse causal graph and facilitate efficient adaptation
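To make the invariance idea more concrete: one common way to discourage spurious features is to penalize how unevenly a model performs across training environments (for example, different scenes or agent types). The sketch below adds the variance of the per-environment losses to their mean, in the style of the V-REx penalty. It is a minimal illustration under that assumption, not necessarily the exact loss used in the paper or in this repository; `invariant_risk` and `lam` are hypothetical names.

```python
from statistics import fmean, pvariance
from typing import Sequence


def invariant_risk(env_losses: Sequence[float], lam: float = 1.0) -> float:
    """Mean risk plus a penalty on how much the risk varies across environments.

    Hypothetical helper (V-REx-style penalty, for illustration only):
    env_losses[i] is the mean forecasting loss on training environment i.
    Features that only help in some environments tend to produce uneven
    losses, which the variance term penalizes.
    """
    if not env_losses:
        raise ValueError("need at least one environment")
    mean_risk = fmean(env_losses)
    penalty = pvariance(env_losses)  # population variance across environments
    return mean_risk + lam * penalty


# Equal losses across environments incur no penalty ...
print(invariant_risk([0.5, 0.5], lam=10.0))  # 0.5
# ... while uneven losses are penalized.
print(invariant_risk([0.2, 0.8], lam=10.0))
```

In PyTorch the same quantity would typically be computed on a tensor of per-environment losses, e.g. `losses.mean() + lam * losses.var(unbiased=False)`, so that gradients flow through both terms.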

If you find this code useful for your research, please cite our paper:

@InProceedings{Liu2022CausalMotionRepresentations,
    title = {Towards Robust and Adaptive Motion Forecasting: A Causal Representation Perspective},
    author = {Liu, Yuejiang and Cadei, Riccardo and Schweizer, Jonas and Bahmani, Sherwin and Alahi, Alexandre},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month = {June},
    year = {2022},
    pages = {17081-17092}
}

Install PyTorch, for example using pip:

pip install --upgrade pip

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 torchaudio==0.9.0 -f https://download.pytorch.org/whl/torch_stable.html
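After installing, a quick sanity check can confirm that the CUDA 11.1 build of PyTorch 1.9.0 was picked up. The sketch below only parses a version string such as `torch.__version__`; the helper names `parse_torch_version` and `is_expected_build` are hypothetical.

```python
from typing import Optional, Tuple


def parse_torch_version(version: str) -> Tuple[str, Optional[str]]:
    """Split a PyTorch version string into (release, local build tag).

    "1.9.0+cu111" -> ("1.9.0", "cu111"); "1.9.0" -> ("1.9.0", None).
    """
    release, sep, local = version.partition("+")
    return release, (local if sep else None)


def is_expected_build(version: str, release: str = "1.9.0", cuda_tag: str = "cu111") -> bool:
    """True if `version` matches the release and CUDA tag from the pip command above."""
    return parse_torch_version(version) == (release, cuda_tag)


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed")
    else:
        print(torch.__version__, "expected build:", is_expected_build(torch.__version__))
        print("CUDA available:", torch.cuda.is_available())
```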

Install dependencies

pip install -r requirements.txt

Build the ddf (Decoupled Dynamic Filter) dependency:

cd ddf

python setup.py install

mv build/lib*/* .
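The last command moves the compiled outputs out of the platform-specific `build/lib*` directory (for example `build/lib.linux-x86_64-3.8`) into `ddf/`, presumably so the extension can be imported in place. Where the shell glob is inconvenient (for example on Windows), the following standard-library sketch does the same; `move_build_outputs` is a hypothetical helper, not part of the repository.

```python
import glob
import os
import shutil
from typing import List


def move_build_outputs(pkg_dir: str = ".") -> List[str]:
    """Move the contents of <pkg_dir>/build/lib*/ into <pkg_dir>, like `mv build/lib*/* .`.

    Returns the names that were moved. Unlike `mv`, an existing destination
    is never overwritten: a FileExistsError is raised instead.
    """
    moved = []
    for lib_dir in sorted(glob.glob(os.path.join(pkg_dir, "build", "lib*"))):
        if not os.path.isdir(lib_dir):
            continue
        for name in sorted(os.listdir(lib_dir)):
            if name.startswith("."):
                continue  # the shell glob `*` skips hidden entries too
            dest = os.path.join(pkg_dir, name)
            if os.path.exists(dest):
                raise FileExistsError(dest)
            shutil.move(os.path.join(lib_dir, name), dest)
            moved.append(name)
    return moved
```

Usage: from inside `ddf/`, after `python setup.py install`, call `move_build_outputs(".")`.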

Download the raw SDD (Stanford Drone Dataset) data and segmentation masks from the original Y-net authors, together with our filtered custom datasets:

pip install gdown && gdown https://drive.google.com/uc?id=14Jn8HsI-MjNIwepksgW4b5QoRcQe97Lg

unzip sdd_ynet.zip
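If the `unzip` utility is not available, Python's standard `zipfile` module can perform the same extraction. A minimal sketch; `extract_archive` is a hypothetical helper name.

```python
import zipfile
from pathlib import Path
from typing import List


def extract_archive(zip_path: str = "sdd_ynet.zip", dest: str = ".") -> List[str]:
    """Extract `zip_path` into `dest` (like `unzip sdd_ynet.zip`) and return the top-level entries."""
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)  # creates `dest` and any intermediate folders as needed
        top = {Path(name).parts[0] for name in zf.namelist()}
    return sorted(top)
```

gdown also exposes a Python function, `gdown.download(url, output)`, if you prefer to script the download step as well.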

After unzipping, the directory should have the following structure:

sdd_ynet
├── dataset_raw
├── dataset_filter
│   ├── dataset_ped
│   ├── dataset_biker
│   │   ├── gap
│   │   └── no_gap
│   └── ...
└── ynet_additional_files
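Before training, it can help to verify that the archive was extracted where the scripts expect it. The sketch below checks only the folders listed in the tree above (entries elided with `...` are not checked); the function name `missing_sdd_dirs` and the default root path `sdd_ynet` are assumptions.

```python
from pathlib import Path
from typing import List

# Folders shown in the expected layout of sdd_ynet; entries elided with "..." are not checked.
EXPECTED_DIRS = [
    "dataset_raw",
    "dataset_filter/dataset_ped",
    "dataset_filter/dataset_biker/gap",
    "dataset_filter/dataset_biker/no_gap",
    "ynet_additional_files",
]


def missing_sdd_dirs(root: str = "sdd_ynet") -> List[str]:
    """Return the expected sub-directories that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (base / rel).is_dir()]


if __name__ == "__main__":
    missing = missing_sdd_dirs()
    print("layout OK" if not missing else f"missing: {missing}")
```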

In addition to the custom datasets provided in sdd_ynet/dataset_filter, you can create your own:

bash create_custom_dataset.sh

- Train Baseline

bash run_train.sh

Our pretrained models can be downloaded from Google Drive:

cd ckpts

gdown https://drive.google.com/uc?id=180sMpRiGhZOyCaGMMakZPXsTS7Affhuf

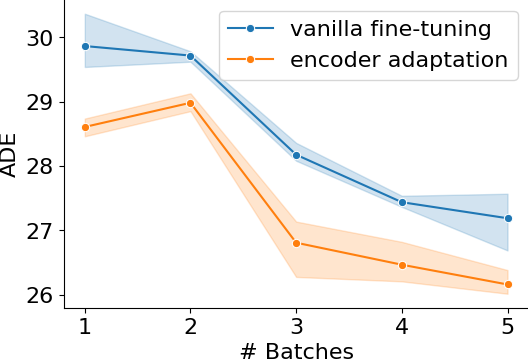

- Low-shot Adaptation

bash run_vanilla.sh

bash run_encoder.sh

python utils/visualize.py

Results of different methods for low-shot transfer across agent types and speed limits.
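As a generic illustration of the modular idea from the TL;DR, low-shot adaptation often freezes most of a pretrained network and updates only a small subset of modules. Judging only by their names, `run_vanilla.sh` and `run_encoder.sh` may compare such strategies, but that reading is an assumption. The sketch below selects the trainable parameters by name prefix; the parameter names and the `encoder.` prefix are made up for the example, and `select_trainable` is a hypothetical helper.

```python
from typing import Iterable, List, Tuple


def select_trainable(param_names: Iterable[str], prefixes: Tuple[str, ...]) -> List[str]:
    """Return the parameter names to update during low-shot adaptation.

    A parameter is trainable if its name starts with one of `prefixes`;
    everything else stays frozen at its pretrained value.
    """
    return [name for name in param_names if name.startswith(prefixes)]


# Hypothetical parameter names, for illustration only.
names = ["encoder.conv1.weight", "encoder.conv1.bias", "decoder.conv1.weight", "goal_head.weight"]
print(select_trainable(names, ("encoder.",)))
# ['encoder.conv1.weight', 'encoder.conv1.bias']
```

With a PyTorch model, the same rule would be applied by setting `p.requires_grad = name.startswith(prefixes)` in a loop over `model.named_parameters()` and passing only the trainable parameters to the optimizer.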

Our code is built upon the public code of Y-net and Decoupled Dynamic Filter.