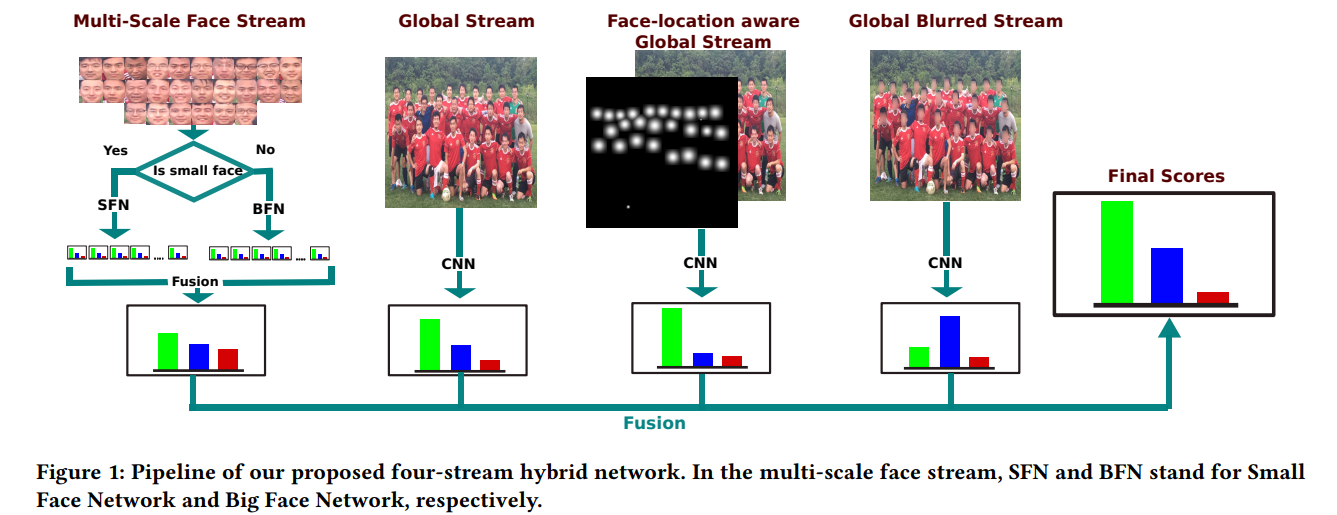

Group-Level Emotion Recognition using Deep Models with A Four-stream Hybrid Network

This repository contains the code for our ICMI 2018 paper, submitted as part of the EmotiW 2018 challenge. Our proposed method secured 3rd position in the sub-challenge. For a detailed description of the method, please read our paper. If you find this repository or the paper helpful, please consider citing it.

@inproceedings{khan2018group,

title={Group-Level Emotion Recognition using Deep Models with A Four-stream Hybrid Network},

author={Khan, Ahmed Shehab and Li, Zhiyuan and Cai, Jie and Meng, Zibo and O'Reilly, James and Tong, Yan},

booktitle={Proceedings of the 2018 on International Conference on Multimodal Interaction},

pages={623--629},

year={2018},

organization={ACM}

}


Before you start

Clone Repository: Clone this repository with the following command. The --recurse-submodules flag is required because the MTCNN code is included as a git submodule.

git clone --recurse-submodules https://github.com/shehabk/icmi18_group_level.git

EmotiW18 Dataset: Collect the dataset from the organizers and put it inside the data folder. The subfolder should be named 'OneDrive-2018-03-20' and should contain 'Test_Images', 'Train', and 'Validation'.

Pretrained Models: The Multi-Scale faces stream of our proposed method is pretrained on the RAF-DB and FER2013 datasets. I have shared the pretrained models here. Download the pretrained models and keep them in the models directory.

Folder Structure: Make sure to put the downloaded files in the following folder structure.

project_root/
  |--data/
     |--OneDrive-2018-03-20
        |--Train
           |--
        |--Validation
           |--
        |--Test_Images
           |--
     |--****
  |--models/
     |--pretrained
        |--raf_fer2013
     |--****
  |--scripts/
  |--src/
  |--images/
  |README.md
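Before running anything, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the repository: check_layout is a hypothetical helper, and it assumes that 'raf_fer2013' is a directory under models/pretrained, as the structure above suggests. It prints every expected directory that is missing and returns a non-zero status if any are absent.

```shell
# Sketch: report which expected directories are missing under a project root.
# check_layout is a hypothetical helper name; the directory list mirrors the
# folder structure described in this README (an assumption, adjust as needed).

check_layout() {
    local root="${1:-.}"   # project root, defaults to the current directory
    local missing=0
    local dir
    for dir in \
        "data/OneDrive-2018-03-20/Train" \
        "data/OneDrive-2018-03-20/Validation" \
        "data/OneDrive-2018-03-20/Test_Images" \
        "models/pretrained/raf_fer2013" \
        "scripts" \
        "src"; do
        if [ ! -d "$root/$dir" ]; then
            echo "missing: $dir"
            missing=1
        fi
    done
    # 0 when every directory exists, 1 otherwise
    return "$missing"
}
```

After sourcing the function, calling it as check_layout . from the project root prints nothing and succeeds when all the directories are present.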

Running code

Preprocessing

Make sure the data and pretrained models are placed in the appropriate locations. Then cd into the project root and run the preprocessing script.

bash scripts/preprocessing/preprocess.sh

It performs all the preprocessing steps: getting landmarks, cropping and aligning face images, and creating images for the heatmap and blurred streams. It also generates the image lists needed for training.

Training

Make sure the preprocessing step has completed successfully. Run the following scripts from the project root.

# training on the training set.

# choose best model from validation set.

bash scripts/training/train_on_train.sh

# training on the training+validation set

# choose last model for testing.

bash scripts/training/train_on_train_val.sh

Evaluation

Make sure the training step is completed. This step depends on the previous two steps and requires all the trained models. Run the following scripts from the project root.

# Use models trained on training set

# Evaluate on the validation set.

bash scripts/evaluation/evaluate_train.sh

# Use the models trained on training+validation sets

# Evaluate on the test set.

bash scripts/evaluation/evaluate_train_val.sh
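Since preprocessing, training, and evaluation each depend on the previous stage, one option is to chain them so that a failure stops the run early. The sketch below is only an illustration: run_pipeline and the DRY_RUN variable are hypothetical and not part of the repository, while the script paths are the ones listed in this README. The submission script is left out on purpose because it needs its weights edited by hand first.

```shell
# Sketch: run the pipeline stages in order and stop at the first failure.
# run_pipeline is a hypothetical helper; call it from the project root.
# Set DRY_RUN=1 to print the commands without executing them.

run_pipeline() {
    local script
    for script in \
        scripts/preprocessing/preprocess.sh \
        scripts/training/train_on_train.sh \
        scripts/training/train_on_train_val.sh \
        scripts/evaluation/evaluate_train.sh \
        scripts/evaluation/evaluate_train_val.sh; do
        echo "==> bash $script"
        if [ "${DRY_RUN:-0}" = "1" ]; then
            continue
        fi
        # Abort on the first stage that exits with a non-zero status.
        bash "$script" || { echo "failed: $script" >&2; return 1; }
    done
}
```

Running it once with DRY_RUN=1 is a cheap way to confirm the order of the stages before starting a long training run.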

Prepare Output

This script prepares the test set predictions in the format required by the organizers. Before running it, you need to set the weights in this file. The file is kept commented out, so uncomment it and adjust the weights first.

bash scripts/evaluation/prepare_submission.sh